Zhi Ye

Towards Accurate Knowledge Transfer via Target-awareness Representation Disentanglement

Oct 16, 2020

Abstract: Fine-tuning deep neural networks pre-trained on large-scale datasets is one of the most practical transfer learning paradigms when only a limited quantity of training samples is available. To obtain better generalization, using the starting point as a reference, through either weights or features, has been successfully applied to transfer learning as a regularizer. However, due to the domain discrepancy between the source and target tasks, there is an obvious risk of negative transfer. In this paper, we propose a novel transfer learning algorithm that introduces the idea of Target-awareness REpresentation Disentanglement (TRED), where the knowledge relevant to the target task is disentangled from the original source model and used as a regularizer while fine-tuning the target model. Experiments on various real-world datasets show that our method consistently improves on standard fine-tuning by more than 2% on average. TRED also outperforms other state-of-the-art transfer learning regularizers such as L2-SP, AT, DELTA and BSS.
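To make the idea of "using the starting point as a reference through weights" concrete, here is a minimal sketch of a weight-based starting-point penalty in the spirit of the L2-SP baseline named above. It is not TRED itself, and it is simplified: it only penalizes parameters shared with the pre-trained model and omits any separate penalty on newly added layers. The function names and the `alpha` default are illustrative assumptions, not taken from the paper.

```python
def l2_sp_penalty(weights, start_weights, alpha=0.01):
    """Return (alpha / 2) * sum((w - w0)^2) over the shared parameters.

    weights:       current fine-tuned parameters (flat list of floats)
    start_weights: pre-trained parameters used as the reference point
    alpha:         regularization strength (hypothetical default)
    """
    if len(weights) != len(start_weights):
        raise ValueError("weights and start_weights must have the same length")
    return 0.5 * alpha * sum((w - w0) ** 2 for w, w0 in zip(weights, start_weights))


def l2_sp_gradient(weights, start_weights, alpha=0.01):
    """Gradient of the penalty: it pulls each weight back toward its starting value."""
    return [alpha * (w - w0) for w, w0 in zip(weights, start_weights)]


# Usage: add the penalty to the task loss during fine-tuning.
penalty = l2_sp_penalty([1.0, 2.0], [1.0, 0.0], alpha=0.5)
gradient = l2_sp_gradient([1.0, 2.0], [1.0, 0.0], alpha=0.5)
```

Unlike plain weight decay, which pulls weights toward zero, this penalty pulls them toward the pre-trained values, which is the "starting point as reference" behaviour the abstract refers to.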

Semi-Supervised Recognition under a Noisy and Fine-grained Dataset

Jun 18, 2020

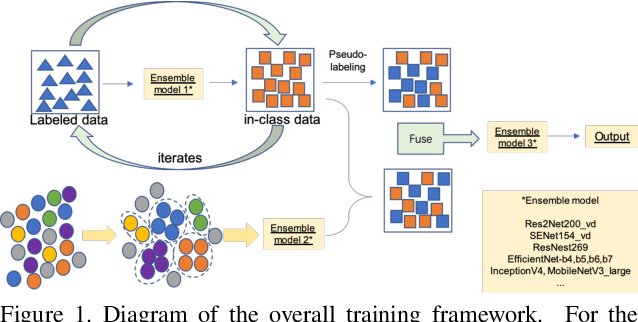

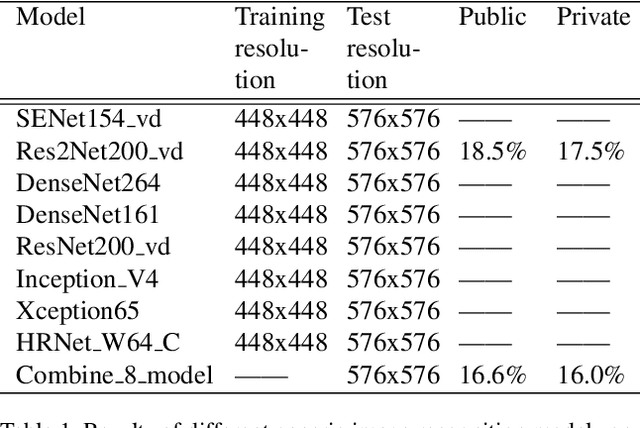

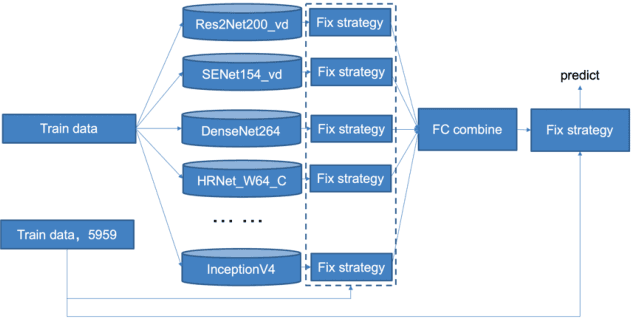

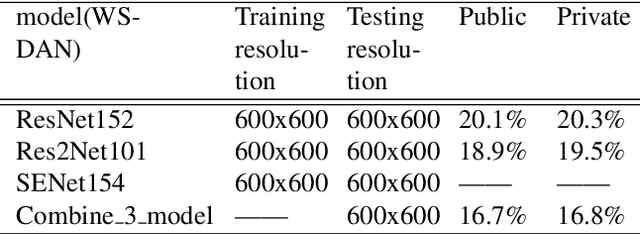

Abstract: The Semi-Supervised Recognition Challenge-FGVC7 is a challenging fine-grained recognition competition. One difficulty of this competition is how to use the unlabeled data; we adopted pseudo-tag data mining to increase the amount of training data. The other is how to distinguish similar birds with very small differences, especially those whose main body occupies a relatively tiny part of the image; we combined generic image recognition and fine-grained image recognition methods to solve this problem. All generic image recognition models were trained using PaddleClas. Using the combination of these two kinds of deep recognition models, we won third place in the competition.
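The abstract does not say how the pseudo-tags were mined. One common approach, shown here purely as an assumed illustration, is to keep an unlabeled sample only when the model's top predicted class probability clears a confidence threshold. The function name and the `threshold` value are hypothetical.

```python
def select_pseudo_labels(probabilities, threshold=0.9):
    """Pick confident predictions on unlabeled data as pseudo-labels.

    probabilities: list of per-sample class-probability lists
    threshold:     minimum top-class probability to accept (assumed value)
    Returns a list of (sample_index, predicted_label) pairs.
    """
    selected = []
    for index, probs in enumerate(probabilities):
        # Index of the most probable class for this sample.
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((index, label))
    return selected


# Usage: the second sample is too uncertain and is left unlabeled.
predictions = [[0.95, 0.05], [0.6, 0.4], [0.1, 0.9]]
pseudo_labeled = select_pseudo_labels(predictions, threshold=0.9)
```

The selected pairs would then be merged into the labeled training set for another round of training.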
