Zhenyu Han

AsyncFlow: An Asynchronous Streaming RL Framework for Efficient LLM Post-Training

Jul 02, 2025

Abstract: Reinforcement learning (RL) has become a pivotal technology in the post-training phase of large language models (LLMs). Traditional task-colocated RL frameworks suffer from significant scalability bottlenecks, while task-separated RL frameworks face challenges in complex dataflows and the corresponding resource idling and workload imbalance. Moreover, most existing frameworks are tightly coupled with LLM training or inference engines, making it difficult to support custom-designed engines. To address these challenges, we propose AsyncFlow, an asynchronous streaming RL framework for efficient post-training. Specifically, we introduce a distributed data storage and transfer module that provides unified data management and fine-grained scheduling in a fully streamed manner. This architecture inherently facilitates automated pipeline overlapping among RL tasks and dynamic load balancing. Moreover, we propose a producer-consumer-based asynchronous workflow engineered to minimize computational idleness by strategically deferring the parameter update process within staleness thresholds. Finally, the core capability of AsyncFlow is architecturally decoupled from the underlying training and inference engines and encapsulated by service-oriented user interfaces, offering a modular and customizable user experience. Extensive experiments demonstrate an average 1.59x throughput improvement compared with the state-of-the-art baseline. The architecture presented in this work provides actionable insights for next-generation RL training system designs.
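The idea of deferring parameter updates within a staleness threshold can be illustrated with a toy, single-threaded simulation. This is only a sketch under stated assumptions, not AsyncFlow's implementation: the names `Sample`, `simulate`, and `max_staleness` are hypothetical, the rollout worker plays the producer, the trainer plays the consumer, and staleness is measured as the number of trainer versions a sample lags behind.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    data: int
    policy_version: int  # version of the weights that generated this sample


def simulate(num_steps: int, max_staleness: int) -> List[int]:
    """Return the staleness observed by the trainer for each consumed sample."""
    queue = deque()
    trainer_version = 0  # latest weights held by the trainer (consumer)
    rollout_version = 0  # weights currently loaded by the rollout worker (producer)
    observed = []
    for step in range(num_steps):
        # Producer: defer the weight sync until the lag would exceed the threshold.
        if trainer_version - rollout_version > max_staleness:
            rollout_version = trainer_version
        queue.append(Sample(step, rollout_version))

        # Consumer: train on the oldest sample, then publish a new version.
        sample = queue.popleft()
        observed.append(trainer_version - sample.policy_version)
        trainer_version += 1
    return observed


if __name__ == "__main__":
    print(simulate(num_steps=8, max_staleness=2))
```

In this toy setting the producer never blocks on every trainer step; it resynchronizes only when the lag would cross the threshold, so the observed staleness stays bounded by `max_staleness`.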

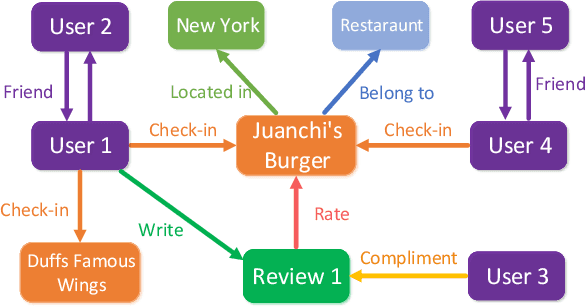

Large Language Model-driven Meta-structure Discovery in Heterogeneous Information Network

Feb 18, 2024

Abstract: Heterogeneous information networks (HINs) have gained increasing popularity for their ability to capture complex relations between nodes of diverse types. Meta-structures were proposed to identify important patterns of relations on HINs, and have proven effective for extracting rich semantic information and helping graph neural networks learn expressive representations. However, hand-crafted meta-structures are hard to scale, which has drawn wide research attention to automatic meta-structure search algorithms. Previous efforts concentrate on searching for meta-structures with good empirical prediction performance while overlooking explainability. As a result, they often produce meta-structures that are prone to overfitting and incomprehensible to humans. To address this, we draw inspiration from the emergent reasoning abilities of large language models (LLMs). We propose a novel REasoning meta-STRUCTure search (ReStruct) framework that integrates LLM reasoning into the evolutionary procedure. ReStruct uses a grammar translator to encode meta-structures into natural language sentences, and leverages the reasoning power of LLMs to evaluate semantically feasible meta-structures. ReStruct also employs performance-oriented evolutionary operations. These two competing forces jointly optimize for semantic explainability and empirical performance of meta-structures. We also design a differential LLM explainer that can produce natural language explanations for the discovered meta-structures, and refine the explanation by reasoning through the search history. Experiments on five datasets demonstrate that ReStruct achieves state-of-the-art (SOTA) performance in node classification and link recommendation tasks. Additionally, a survey study involving 73 graduate students shows that the meta-structures and natural language explanations generated by ReStruct are substantially more comprehensible.

Devil in the Landscapes: Inferring Epidemic Exposure Risks from Street View Imagery

Nov 09, 2023

Abstract: The built environment supports all daily activities and shapes our health. Leveraging informative street view imagery, previous research has established a profound correlation between the built environment and chronic, non-communicable diseases; however, predicting the exposure risk of infectious diseases remains largely unexplored. Person-to-person contacts and interactions contribute to the complexity of infectious diseases, which are inherently different from non-communicable diseases. In addition, the complex relationships between street view imagery and epidemic exposure hinder accurate predictions. To address these problems, we construct a regional mobility graph informed by the gravity model, based on which we propose a transmission-aware graph convolutional network (GCN) to capture disease transmission patterns arising from human mobility. Experiments show that the proposed model significantly outperforms baseline models by 8.54% in weighted F1, shedding light on a low-cost, scalable approach to assessing epidemic exposure risks from street view imagery.
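As a rough illustration of a gravity-model mobility graph, the sketch below computes edge weights in the classic form, where the flow between two regions is proportional to the product of their populations divided by a power of their distance. The abstract does not give the paper's exact formulation, so the function name `gravity_weights`, the use of population as the "mass", Euclidean distance, and the exponent `beta` are all assumptions made for this example.

```python
import math
from typing import List, Sequence, Tuple


def gravity_weights(
    populations: Sequence[float],
    coords: Sequence[Tuple[float, float]],
    beta: float = 2.0,
) -> List[List[float]]:
    """Build a dense weighted adjacency matrix from the classic gravity model.

    w[i][j] = populations[i] * populations[j] / distance(i, j) ** beta
    Self-loops are left at 0. Regions are assumed to have distinct coordinates.
    """
    n = len(populations)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            distance = math.dist(coords[i], coords[j])
            weights[i][j] = populations[i] * populations[j] / distance ** beta
    return weights


if __name__ == "__main__":
    # Three hypothetical regions with made-up populations and centroids.
    w = gravity_weights([100, 200, 50], [(0, 0), (3, 4), (0, 10)])
    for row in w:
        print(row)
```

A matrix like this could serve as the weighted adjacency input to a graph convolutional network, with each region's street view features as node attributes.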

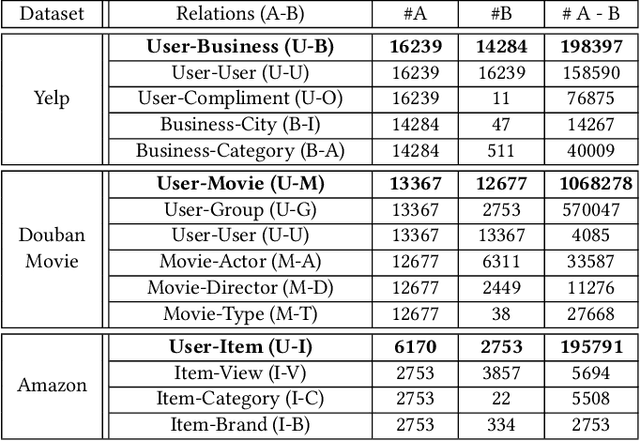

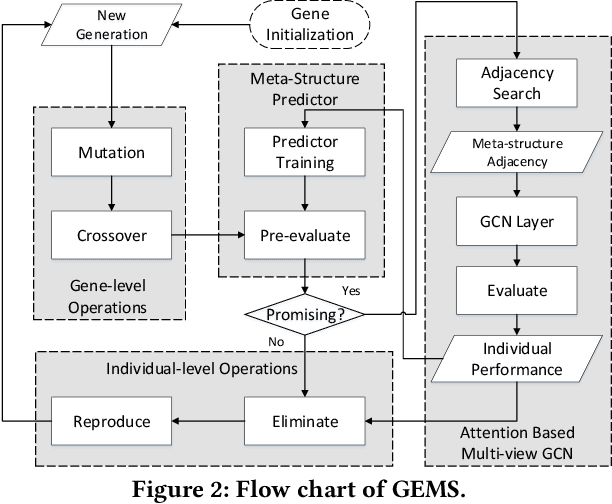

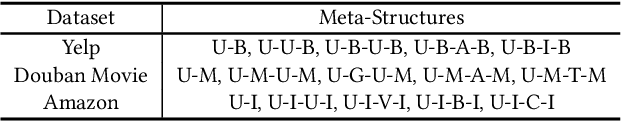

Genetic Meta-Structure Search for Recommendation on Heterogeneous Information Network

Feb 21, 2021

Abstract: In the past decade, the heterogeneous information network (HIN) has become an important methodology for modern recommender systems. To fully leverage its power, manually designed network templates, i.e., meta-structures, are introduced to filter out semantic-aware information. Hand-crafted meta-structures rely on intensive expert knowledge, and designing them is both laborious and data-dependent. Moreover, the number of meta-structures grows exponentially with their size and the number of node types, which prohibits brute-force search. To address these challenges, we propose Genetic Meta-Structure Search (GEMS) to automatically optimize meta-structure designs for recommendation on HINs. Specifically, GEMS adopts a parallel genetic algorithm to search for meaningful meta-structures for recommendation, and designs dedicated rules and a meta-structure predictor to efficiently explore the search space. Finally, we propose an attention-based multi-view graph convolutional network module to dynamically fuse information from different meta-structures. Extensive experiments on three real-world datasets suggest the effectiveness of GEMS, which consistently outperforms all baseline methods in HIN recommendation. Compared with a simplified GEMS that utilizes hand-crafted meta-paths, GEMS achieves over $6\%$ performance gain on most evaluation metrics. More importantly, we conduct an in-depth analysis of the identified meta-structures, which sheds light on HIN-based recommender system design.
