Menglong Chen

AsyncFlow: An Asynchronous Streaming RL Framework for Efficient LLM Post-Training

Jul 02, 2025

Abstract: Reinforcement learning (RL) has become a pivotal technology in the post-training phase of large language models (LLMs). Traditional task-colocated RL frameworks suffer from significant scalability bottlenecks, while task-separated RL frameworks struggle with complex dataflows and the resulting resource idling and workload imbalance. Moreover, most existing frameworks are tightly coupled with LLM training or inference engines, making it difficult to support custom-designed engines. To address these challenges, we propose AsyncFlow, an asynchronous streaming RL framework for efficient post-training. Specifically, we introduce a distributed data storage and transfer module that provides unified data management and fine-grained scheduling in a fully streamed manner. This architecture inherently facilitates automated pipeline overlapping among RL tasks and dynamic load balancing. We also propose a producer-consumer-based asynchronous workflow engineered to minimize computational idleness by strategically deferring the parameter update process within staleness thresholds. Finally, the core capability of AsyncFlow is architecturally decoupled from the underlying training and inference engines and encapsulated by service-oriented user interfaces, offering a modular and customizable user experience. Extensive experiments demonstrate an average 1.59x throughput improvement over the state-of-the-art baseline. The architecture presented in this work provides actionable insights for next-generation RL training system designs.
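The producer-consumer workflow with a staleness threshold can be illustrated with a toy sketch. This is not AsyncFlow's implementation or API: the function `run_async_pipeline`, its parameters, and the rule "one trainer step per batch" are all assumptions made for illustration. A rollout producer keeps generating with its current weights and only syncs to the trainer's weights when the next sample would otherwise exceed `max_staleness` versions of lag.

```python
import queue
import threading


def run_async_pipeline(num_batches=4, batch_size=2, max_staleness=1):
    """Toy producer-consumer loop (hypothetical, not the AsyncFlow API).

    Returns the staleness (trainer version minus rollout version) of every
    sample at the moment the trainer consumes it.
    """
    samples = queue.Queue()
    cond = threading.Condition()
    state = {"trainer_version": 0}
    observed_staleness = []

    def producer():
        rollout_version = 0  # version of the weights used for generation
        for i in range(num_batches * batch_size):
            # Assumption: batch b is consumed when the trainer is at version b.
            batch_index = i // batch_size
            if batch_index - rollout_version > max_staleness:
                # Deferred weight sync: only now wait for fresh enough weights.
                with cond:
                    cond.wait_for(
                        lambda: state["trainer_version"] >= batch_index - max_staleness
                    )
                    rollout_version = state["trainer_version"]
            samples.put((i, rollout_version))

    def consumer():
        for _ in range(num_batches):
            batch = [samples.get() for _ in range(batch_size)]
            with cond:
                version = state["trainer_version"]
                observed_staleness.extend(version - v for _, v in batch)
                state["trainer_version"] = version + 1  # one "update" per batch
                cond.notify_all()

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return observed_staleness


if __name__ == "__main__":
    print(run_async_pipeline(num_batches=4, batch_size=2, max_staleness=1))
```

With `max_staleness=0` the sketch degenerates to a synchronous loop in which the producer waits for every update; larger thresholds let generation run ahead of training.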

Cluster-aware Contrastive Learning for Unsupervised Out-of-distribution Detection

Feb 06, 2023

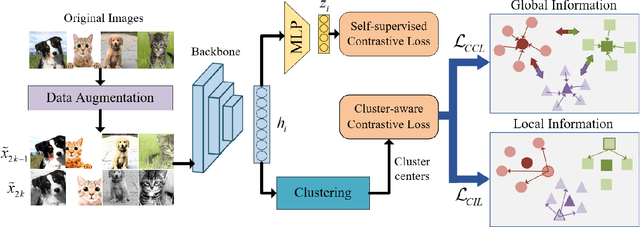

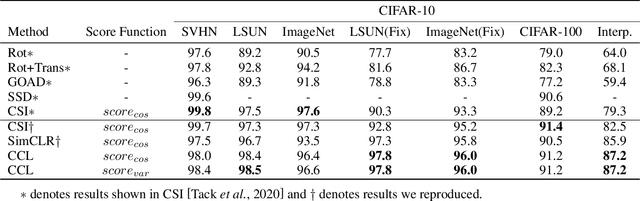

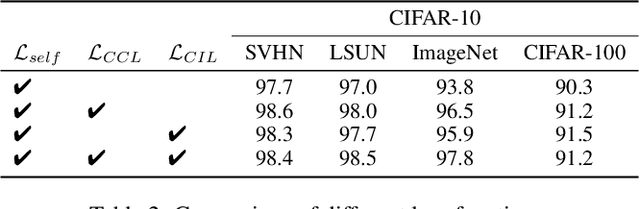

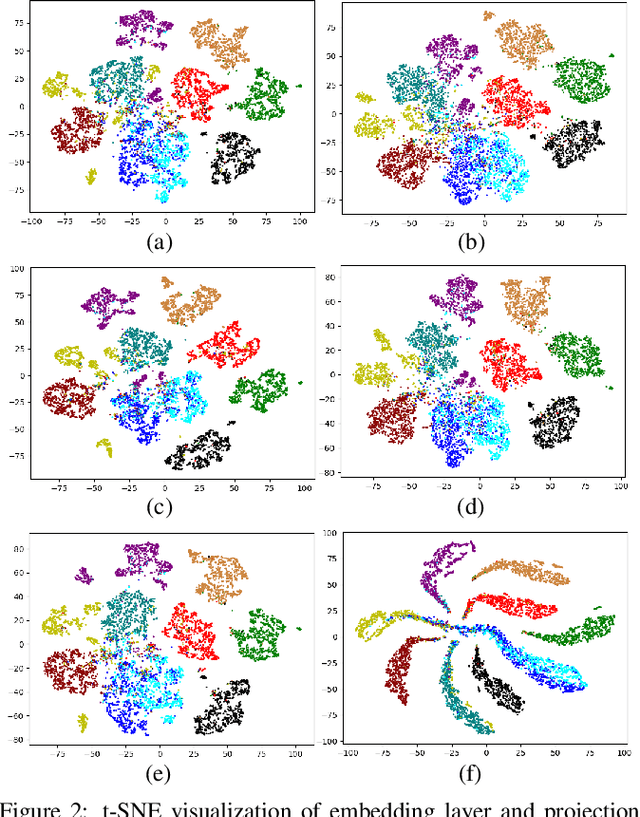

Abstract: Unsupervised out-of-distribution (OOD) detection aims to separate samples that fall outside the distribution of the training data without using label information. Among its numerous branches, contrastive learning has shown an excellent capability for learning discriminative representations in OOD detection. However, because it focuses only on the instance-level relationship between augmented samples, it pays little attention to the relationship between samples with the same semantics. Building on classic contrastive learning, we propose the Cluster-aware Contrastive Learning (CCL) framework for unsupervised OOD detection, which considers both instance-level and semantic-level information. Specifically, we study a cooperation strategy between clustering and contrastive learning to effectively extract the latent semantics, and we design a cluster-aware contrastive loss function to enhance OOD discriminative ability. The loss function attends to global and local relationships simultaneously by treating both the cluster centers and the samples belonging to the same cluster as positive samples. We conducted extensive experiments to verify the effectiveness of our framework, and the model achieves significant improvement on various image benchmarks.
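A loss that treats both same-cluster samples and the anchor's cluster center as positives can be sketched as follows. This is an illustrative assumption, not the paper's exact formulation: it uses a supervised-contrastive-style average over positives with cosine similarity, omits augmented views, and the function name `cluster_aware_contrastive_loss` and the `temperature` default are hypothetical.

```python
import math


def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cluster_aware_contrastive_loss(embeddings, cluster_ids, centers, temperature=0.5):
    """Illustrative loss (assumed form, not taken from the paper).

    For each anchor, the positives are the other samples in its cluster and
    its own cluster center; all other samples and centers act as negatives.
    """
    z = [_normalize(v) for v in embeddings]
    mu = [_normalize(c) for c in centers]
    total = 0.0
    for i, anchor in enumerate(z):
        # Each candidate is (scaled cosine similarity, is_positive).
        candidates = []
        for j, other in enumerate(z):
            if j != i:
                candidates.append(
                    (_dot(anchor, other) / temperature, cluster_ids[j] == cluster_ids[i])
                )
        for k, center in enumerate(mu):
            candidates.append((_dot(anchor, center) / temperature, k == cluster_ids[i]))
        # Numerically stable log-sum-exp over all candidates.
        max_logit = max(logit for logit, _ in candidates)
        log_denominator = max_logit + math.log(
            sum(math.exp(logit - max_logit) for logit, _ in candidates)
        )
        # The anchor's own center is always a positive, so this list is non-empty.
        positives = [logit for logit, is_pos in candidates if is_pos]
        total += -sum(logit - log_denominator for logit in positives) / len(positives)
    return total / len(z)


if __name__ == "__main__":
    points = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    centers = [[1.0, 0.0], [0.0, 1.0]]
    print(cluster_aware_contrastive_loss(points, [0, 0, 1, 1], centers))
```

In this toy setup, cluster assignments that agree with the geometry of the embeddings yield a lower loss than mismatched assignments, which is the behavior a cluster-aware objective is meant to reward.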
