Zhenting Zhao

Reinforcement Learning for Resilient Power Grids

Dec 08, 2022

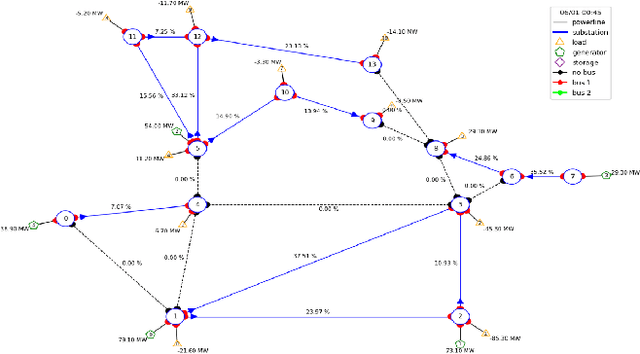

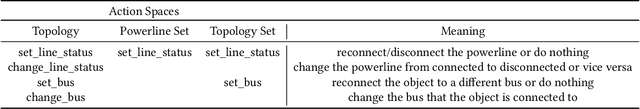

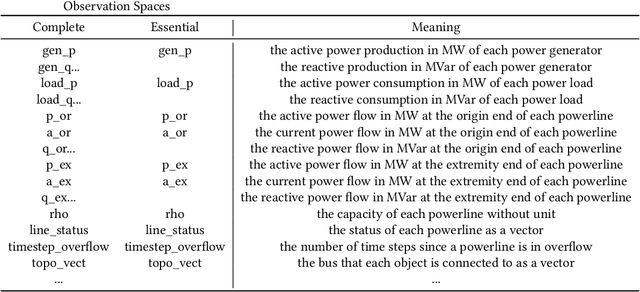

Abstract: Traditional power grid systems have become obsolete under more frequent and extreme natural disasters. Reinforcement learning (RL) has been a promising solution for resilience given its successful history in power grid control. However, most power grid simulators and RL interfaces do not support simulation of power grids under large-scale blackouts or when the network is divided into sub-networks. In this study, we proposed an updated power grid simulator built on Grid2Op, an existing simulator and RL interface, and experimented with limiting the action and observation spaces of Grid2Op. By testing with the DDQN and SliceRDQN algorithms, we found that reduced action spaces significantly improve training performance and efficiency. In addition, we investigated a low-rank neural network regularization method for deep Q-learning, one of the most widely used RL algorithms, in this power grid control scenario. The experiments demonstrated that, in the power grid simulation environment, adopting this method significantly increases the performance of RL agents.
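To illustrate what restricting the action space means for a DDQN-style update, here is a minimal sketch of a Double DQN target computed over an allowed subset of actions. It is not the paper's implementation and does not use the Grid2Op API; the function name `double_dqn_target`, the `allowed_actions` parameter, and the plain lists of Q-values are assumptions made for illustration.

```python
from typing import Sequence


def double_dqn_target(
    reward: float,
    done: bool,
    gamma: float,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
    allowed_actions: Sequence[int],
) -> float:
    """Double DQN target restricted to a reduced action set (illustrative).

    The online network's Q-values pick the next action, but only among
    `allowed_actions`; the target network's Q-values evaluate that action.
    """
    if done:
        # Terminal transition: no bootstrapping from the next state.
        return reward
    # Action selection with the online estimates, limited to the allowed subset.
    best_action = max(allowed_actions, key=lambda a: q_online_next[a])
    # Action evaluation with the target estimates.
    return reward + gamma * q_target_next[best_action]


# Hypothetical Q-values for three actions in the next state.
q_online = [0.1, 0.9, 0.5]
q_target = [2.0, 4.0, 6.0]
full = double_dqn_target(1.0, False, 0.5, q_online, q_target, [0, 1, 2])
reduced = double_dqn_target(1.0, False, 0.5, q_online, q_target, [0, 2])
```

With these made-up numbers, the full action set selects action 1 and the reduced set selects action 2, so the two targets differ. A smaller allowed set also means fewer Q-values to compare at every step.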

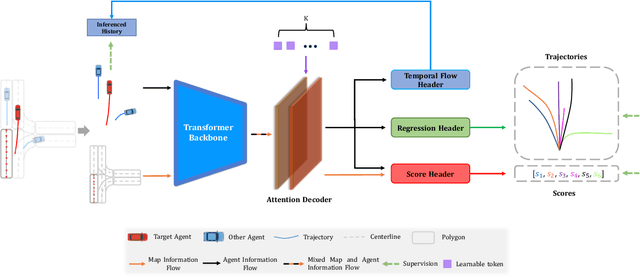

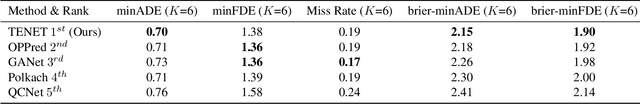

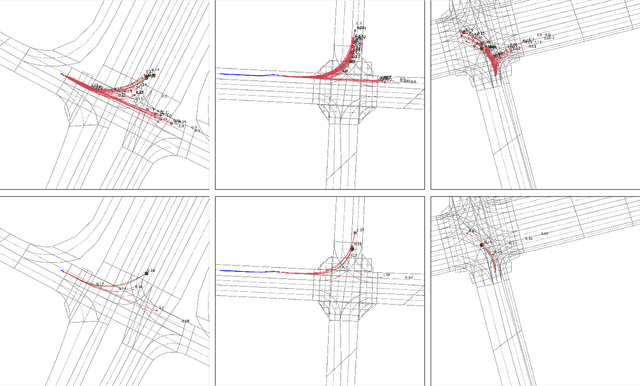

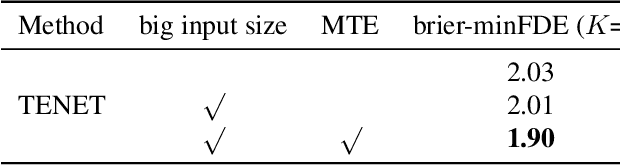

TENET: Transformer Encoding Network for Effective Temporal Flow on Motion Prediction

Jun 30, 2022

Abstract: This technical report presents an effective method for motion prediction in autonomous driving. We develop a Transformer-based method for input encoding and trajectory prediction. In addition, we propose the Temporal Flow Header to enhance the trajectory encoding. Finally, an efficient K-means ensemble method is used. Using our Transformer network and ensemble method, we won first place in the Argoverse 2 Motion Forecasting Challenge with a state-of-the-art brier-minFDE score of 1.90.
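For readers unfamiliar with the reported metric, the sketch below computes brier-minFDE for a single agent, assuming the common definition: the endpoint error of the closest predicted trajectory plus a Brier-style penalty `(1 - p)^2`, where `p` is the probability assigned to that trajectory. The function name and input layout are illustrative assumptions, not the challenge's official evaluation code.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def brier_min_fde(
    endpoints: Sequence[Point],
    probs: Sequence[float],
    gt_endpoint: Point,
) -> float:
    """brier-minFDE for one agent (illustrative, assumed definition).

    endpoints:   final (x, y) position of each predicted trajectory
    probs:       probability assigned to each predicted trajectory
    gt_endpoint: ground-truth final (x, y) position
    """
    # Final displacement error of every predicted mode.
    errors = [math.dist(e, gt_endpoint) for e in endpoints]
    # Index of the mode whose endpoint is closest to the ground truth.
    best = min(range(len(errors)), key=errors.__getitem__)
    # Penalize low confidence in the best mode.
    return errors[best] + (1.0 - probs[best]) ** 2


# Two hypothetical modes with equal probability.
score = brier_min_fde([(3.0, 4.0), (0.0, 1.0)], [0.5, 0.5], (0.0, 0.0))
```

Lower is better: the score improves when the closest mode is both near the ground truth and given high probability.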

Collective Conditioned Reflex: A Bio-Inspired Fast Emergency Reaction Mechanism for Designing Safe Multi-Robot Systems

Feb 24, 2022

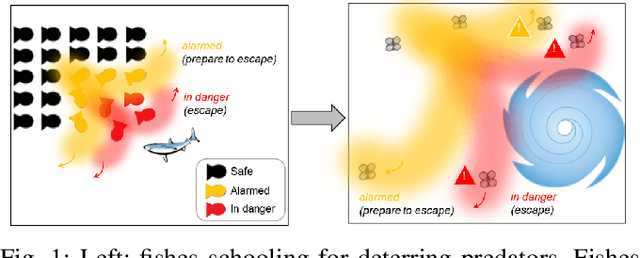

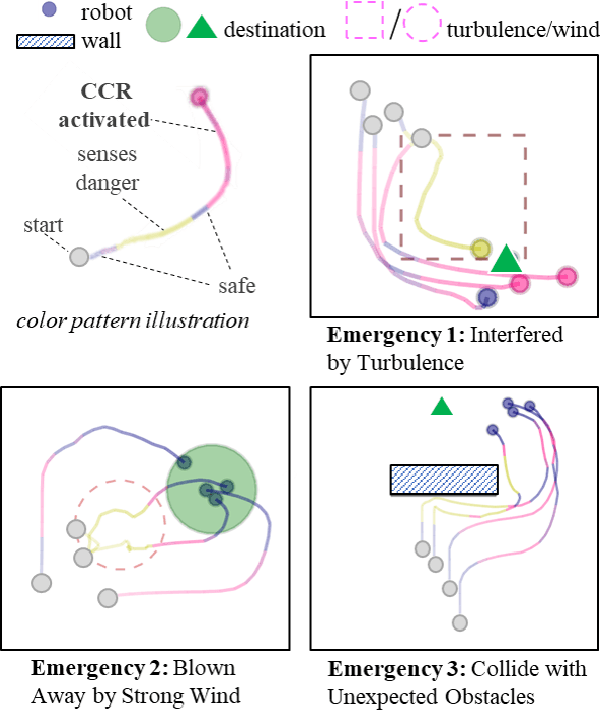

Abstract: A multi-robot system (MRS) is a group of coordinated robots designed to cooperate with each other and accomplish set tasks. Due to the uncertainties in operating environments, the system may encounter unexpected emergencies, such as unobserved obstacles, unexpectedly moving vehicles, explosions, and fires. Animal groups such as bee colonies initiate collective emergency reaction behaviors, such as bypassing trees ahead and evading predators behind. This is similar to the muscle conditioned reflex, which organizes local muscles to avoid hazards as a first response, without the delay of passing through the central brain. Inspired by this, we develop a similar collective conditioned reflex mechanism for multi-robot systems to respond to emergencies. In this study, Collective Conditioned Reflex (CCR), a bio-inspired emergency reaction mechanism, is developed based on animal collective behavior analysis and multi-agent reinforcement learning. The algorithm uses a physical model to determine whether the robots are experiencing an emergency; then, rewards for robots involved in the emergency are augmented with corresponding heuristic rewards, which evaluate emergency magnitudes and consequences and decide local robots' participation. CCR is validated on three typical emergency scenarios: unexpected turbulence, strong wind, and uncertain obstacles. Experimental results demonstrate that CCR improves robot teams' emergency reaction capability, with faster reaction speed and safer trajectory adjustment compared with traditional methods.
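The reward-augmentation idea described in the abstract can be sketched roughly as follows. This is not the CCR algorithm itself: the distance-based emergency test, the linear magnitude, and the names `RobotState`, `emergency_magnitude`, and `augmented_reward` are all hypothetical stand-ins for the paper's physical model and heuristic rewards.

```python
from dataclasses import dataclass


@dataclass
class RobotState:
    distance_to_hazard: float  # hypothetical observation, in meters
    task_reward: float         # reward from the nominal task


def emergency_magnitude(state: RobotState, radius: float = 2.0) -> float:
    """Stand-in 'physical model': 0 outside the radius, rising to 1 at the hazard."""
    if state.distance_to_hazard >= radius:
        return 0.0
    return 1.0 - state.distance_to_hazard / radius


def augmented_reward(state: RobotState, weight: float = 1.0, radius: float = 2.0) -> float:
    """Task reward plus a heuristic penalty, applied only to robots in the emergency."""
    magnitude = emergency_magnitude(state, radius)
    # Robots outside the emergency region have magnitude 0 and keep their task reward.
    return state.task_reward - weight * magnitude


far_robot = augmented_reward(RobotState(distance_to_hazard=3.0, task_reward=1.0))
near_robot = augmented_reward(RobotState(distance_to_hazard=1.0, task_reward=1.0))
```

In this toy setup only the robot within the assumed 2 m radius has its reward changed, which mirrors the abstract's point that the heuristic term decides which local robots participate in the reaction.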
