Zhengxin Yu

SpreadFGL: Edge-Client Collaborative Federated Graph Learning with Adaptive Neighbor Generation

Jul 14, 2024

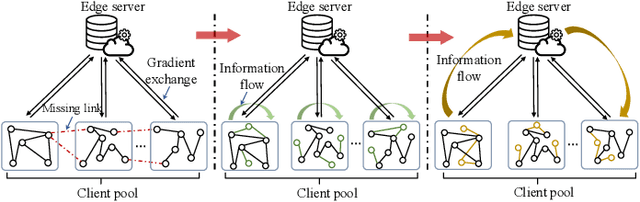

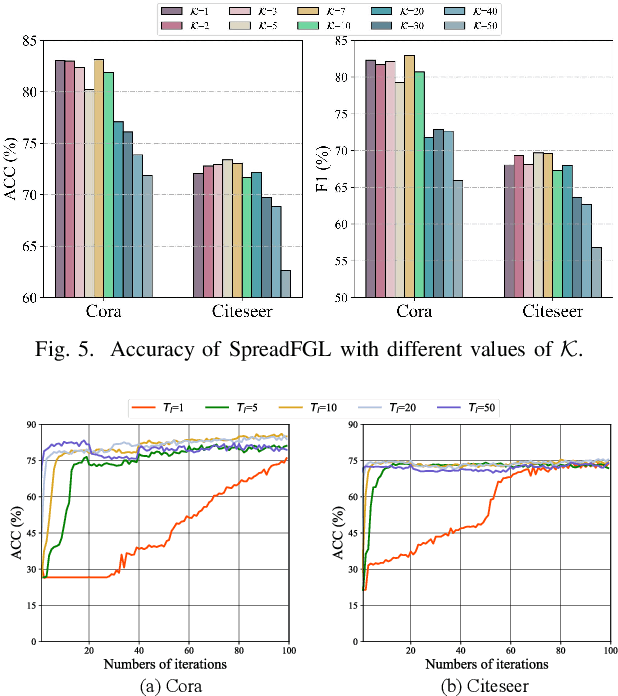

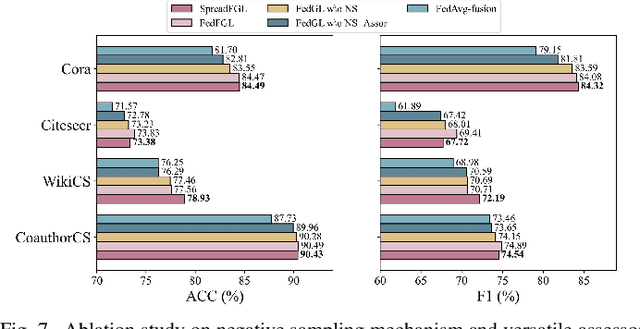

Abstract: Federated Graph Learning (FGL) has garnered widespread attention by enabling collaborative training on multiple clients for semi-supervised classification tasks. However, most existing FGL studies do not adequately consider the missing inter-client topology information in real-world scenarios, causing insufficient feature aggregation of multi-hop neighbor clients during model training. Moreover, classic FGL commonly adopts FedAvg but neglects the high training costs as the number of clients grows, resulting in the overload of a single edge server. To address these challenges, we propose a novel FGL framework, named SpreadFGL, to promote the information flow in edge-client collaboration and extract more generalized potential relationships between clients. In SpreadFGL, an adaptive graph imputation generator combined with a versatile assessor is first designed to exploit the potential links between subgraphs without sharing raw data. Next, a new negative sampling mechanism is developed to make SpreadFGL concentrate on more refined information in downstream tasks. To facilitate load balancing at the edge layer, SpreadFGL follows a distributed training manner that enables fast model convergence. Using a real-world testbed and benchmark graph datasets, extensive experiments demonstrate the effectiveness of the proposed SpreadFGL. The results show that SpreadFGL achieves higher accuracy and faster convergence than state-of-the-art algorithms.

Federated Adversarial Learning for Robust Autonomous Landing Runway Detection

Jun 22, 2024

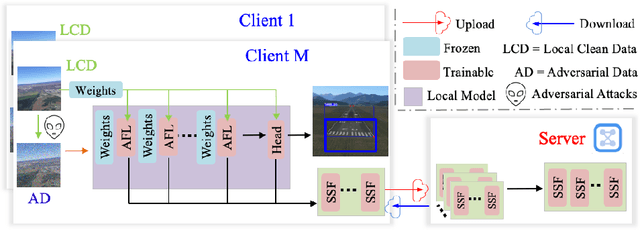

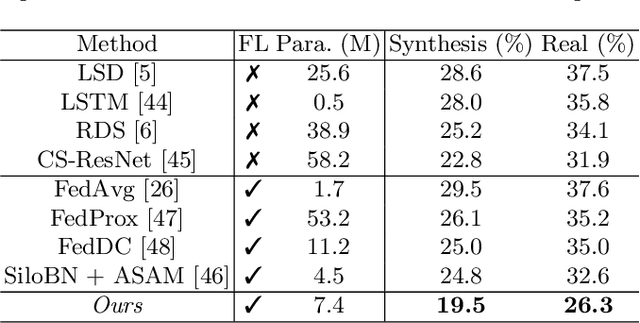

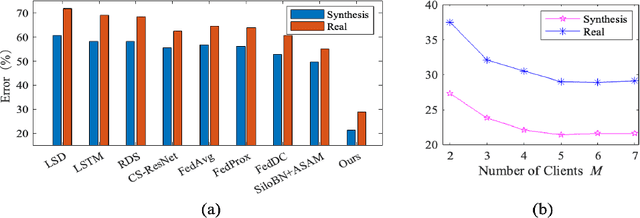

Abstract: As the development of deep learning techniques in autonomous landing systems continues to grow, one of the major challenges is trust and security in the face of possible adversarial attacks. In this paper, we propose a federated adversarial learning-based framework to detect landing runways using paired data comprising clean local data and its adversarial version. Firstly, the local model is pre-trained on a large-scale lane detection dataset. Then, instead of exploiting large instance-adaptive models, we resort to a parameter-efficient fine-tuning method known as scale and shift deep features (SSF), applied to the pre-trained model. Secondly, in each SSF layer, the distributions of clean local data and its adversarial version are disentangled for accurate statistics estimation. To the best of our knowledge, this marks the first instance of federated learning work that addresses the adversarial sample problem in landing runway detection. Our experimental evaluations over both synthetic and real images of the Landing Approach Runway Detection (LARD) dataset consistently demonstrate the good performance of the proposed federated adversarial learning and its robustness to adversarial attacks.

* ICANN2024
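The abstract above relies on scale and shift deep features (SSF) for parameter-efficient fine-tuning. As a rough illustration only, and not the authors' implementation, the sketch below shows the basic SSF idea as it is commonly described: the pre-trained weights stay frozen, a learnable per-feature scale `gamma` and shift `beta` are applied to a layer's output, and after training the two can be folded back into the preceding linear layer. All function names and the toy numbers are hypothetical, and the separate handling of clean and adversarial statistics mentioned in the abstract is not modelled here.

```python
# Minimal pure-Python sketch of scale-and-shift (SSF) on one linear layer.
# Assumption: SSF computes y = gamma * h + beta element-wise, with h = W x + b
# coming from a frozen pre-trained layer. Names below are illustrative only.

def linear(W, b, x):
    """Frozen pre-trained layer: h = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def ssf(gamma, beta, h):
    """Learnable per-feature scale and shift applied to the layer output."""
    return [g * hi + bt for g, hi, bt in zip(gamma, h, beta)]

def merge_ssf(W, b, gamma, beta):
    """Fold (gamma, beta) into the linear layer so inference needs no extra op."""
    W_merged = [[g * w for w in row] for g, row in zip(gamma, W)]
    b_merged = [g * bi + bt for g, bi, bt in zip(gamma, b, beta)]
    return W_merged, b_merged

# Toy values: 2 output features, 3 input features.
W = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]
b = [0.1, -0.2]
gamma = [1.2, 0.8]   # would be learned during fine-tuning
beta = [0.05, -0.1]  # would be learned during fine-tuning
x = [1.0, 2.0, 3.0]

y_two_step = ssf(gamma, beta, linear(W, b, x))
W_m, b_m = merge_ssf(W, b, gamma, beta)
y_merged = linear(W_m, b_m, x)
```

In a federated setting, one plausible benefit of this design is that only the small `gamma` and `beta` vectors would need to be trained and exchanged, though the abstract does not state what is communicated.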

Privacy-preserving Decentralized Federated Learning over Time-varying Communication Graph

Oct 01, 2022

Abstract: Establishing how a set of learners can provide privacy-preserving federated learning in a fully decentralized (peer-to-peer, no coordinator) manner is an open problem. We propose the first privacy-preserving consensus-based algorithm for the distributed learners to achieve decentralized global model aggregation in an environment of high mobility, where the communication graph between the learners may vary between successive rounds of model aggregation. In particular, in each round of global model aggregation, the Metropolis-Hastings method is applied to update the weighted adjacency matrix based on the current communication topology. In addition, Shamir's secret sharing scheme is integrated to facilitate privacy in reaching consensus on the global model. The paper establishes the correctness and privacy properties of the proposed algorithm. The computational efficiency is evaluated by a simulation built on a federated learning framework with a real-world dataset.
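To make the aggregation step in the abstract above more concrete, here is a minimal sketch of consensus averaging with Metropolis-Hastings weights over a communication graph that changes between rounds. It uses the standard textbook construction (edge weight `1 / (1 + max(d_i, d_j))`, self-weight equal to the remainder), which may differ from the paper's exact update rule. Each learner's model is reduced to a single scalar for brevity, the function names and topologies are hypothetical, and the Shamir secret sharing layer is deliberately left out.

```python
# Sketch: decentralized averaging with Metropolis-Hastings weights.
# Assumptions: undirected graph, one scalar "model" per learner, no privacy layer.

def metropolis_weights(n, edges):
    """Build a symmetric, doubly stochastic weight matrix for the current graph."""
    neighbors = [set() for _ in range(n)]
    for i, j in edges:
        if i != j:
            neighbors[i].add(j)
            neighbors[j].add(i)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in neighbors[i]:
            # The edge weight depends only on the two endpoint degrees.
            W[i][j] = 1.0 / (1 + max(len(neighbors[i]), len(neighbors[j])))
        # Self-weight takes the remainder so that each row sums to 1.
        W[i][i] = 1.0 - sum(W[i])
    return W

def consensus_round(W, values):
    """Each learner replaces its value with a weighted mix of its neighbors' values."""
    n = len(values)
    return [sum(W[i][j] * values[j] for j in range(n)) for i in range(n)]

# Two alternating topologies stand in for a time-varying communication graph.
topologies = [
    [(0, 1), (1, 2), (2, 3)],          # path
    [(0, 1), (1, 2), (2, 3), (3, 0)],  # ring
]
initial = [1.0, 2.0, 3.0, 6.0]
values = list(initial)
for t in range(200):
    W = metropolis_weights(4, topologies[t % 2])  # recomputed every round
    values = consensus_round(W, values)
```

Because every weight matrix built this way is doubly stochastic, the network-wide average is preserved in each round, and when the graphs stay connected the learners' values approach that average without any coordinator.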

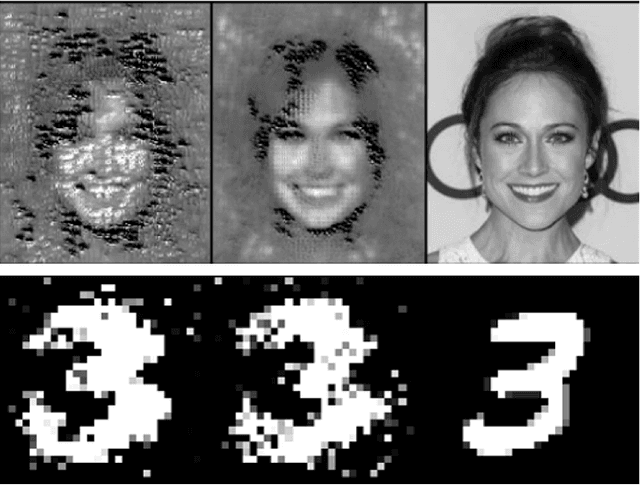

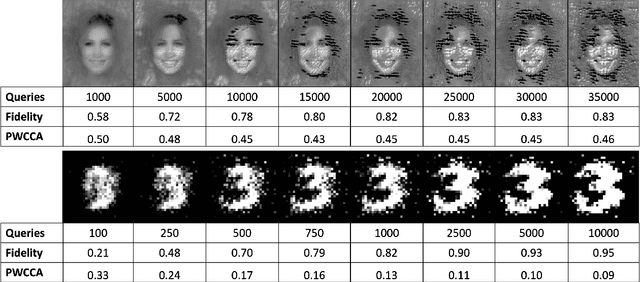

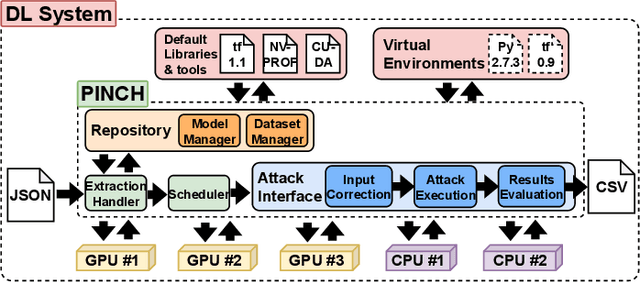

PINCH: An Adversarial Extraction Attack Framework for Deep Learning Models

Sep 13, 2022

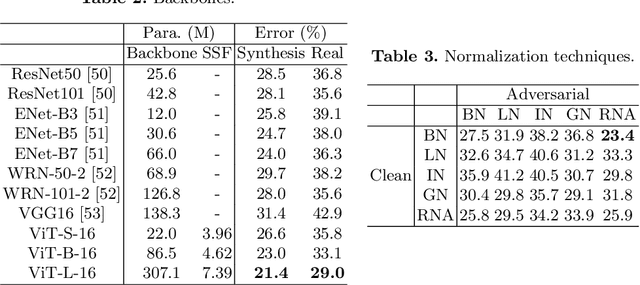

Abstract: Deep Learning (DL) models increasingly power a diversity of applications. Unfortunately, this pervasiveness also makes them attractive targets for extraction attacks which can steal the architecture, parameters, and hyper-parameters of a targeted DL model. Existing extraction attack studies have observed varying levels of attack success for different DL models and datasets, yet the underlying cause(s) behind their susceptibility often remain unclear. Ascertaining such root-cause weaknesses would help facilitate secure DL systems, though this requires studying extraction attacks in a wide variety of scenarios to identify commonalities across attack success and DL characteristics. The overwhelmingly high technical effort and time required to understand, implement, and evaluate even a single attack make it infeasible to explore the large number of unique extraction attack scenarios in existence, with current frameworks typically designed to operate only for specific attack types, datasets and hardware platforms. In this paper we present PINCH: an efficient and automated extraction attack framework capable of deploying and evaluating multiple DL models and attacks across heterogeneous hardware platforms. We demonstrate the effectiveness of PINCH by empirically evaluating a large number of previously unexplored extraction attack scenarios, as well as secondary attack staging. Our key findings show that 1) multiple characteristics affect extraction attack success, spanning DL model architecture, dataset complexity, hardware, and attack type, and 2) partially successful extraction attacks significantly enhance the success of further adversarial attack staging.
