Zhaoyu Fan

Towards Unified Multimodal Editing with Enhanced Knowledge Collaboration

Sep 30, 2024

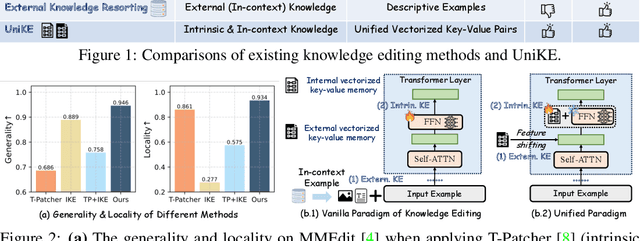

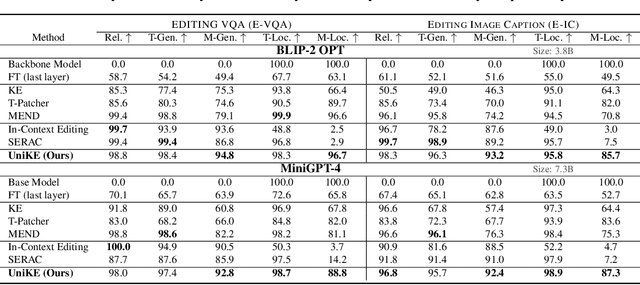

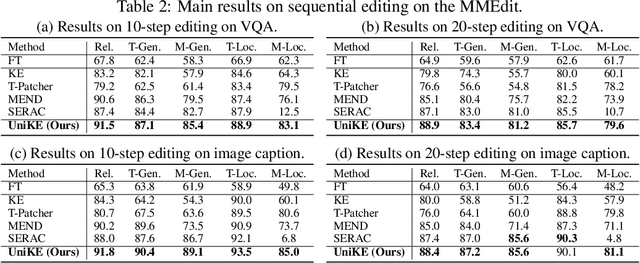

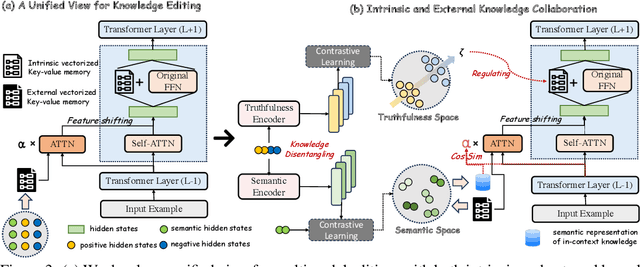

Abstract:The swift advancement in Multimodal LLMs (MLLMs) also presents significant challenges for effective knowledge editing. Current methods, including intrinsic knowledge editing and external knowledge resorting, each possess strengths and weaknesses, struggling to balance the desired properties of reliability, generality, and locality when applied to MLLMs. In this paper, we propose UniKE, a novel multimodal editing method that establishes a unified perspective and paradigm for intrinsic knowledge editing and external knowledge resorting. Both types of knowledge are conceptualized as vectorized key-value memories, with the corresponding editing processes resembling the assimilation and accommodation phases of human cognition, conducted at the same semantic levels. Within such a unified framework, we further promote knowledge collaboration by disentangling the knowledge representations into the semantic and truthfulness spaces. Extensive experiments validate the effectiveness of our method, which ensures that the post-edit MLLM simultaneously maintains excellent reliability, generality, and locality. The code for UniKE will be available at \url{https://github.com/beepkh/UniKE}.

Coordinated Spectral Efficiency Prediction for Real-World 5G CoMP Systems

Aug 14, 2024Abstract:Coordinated multipoint (CoMP) systems incur substantial resource consumption due to the management of backhaul links and the coordination among various base stations (BSs). Accurate prediction of coordinated spectral efficiency (CSE) can guide the optimization of network parameters, resulting in enhanced resource utilization efficiency. However, characterizing the CSE is intractable due to the inherent complexity of the CoMP channel model and the diversity of the 5G dynamic network environment, which poses a great challenge for CSE prediction in real-world 5G CoMP systems. To address this challenge, in this letter, we propose a data-driven model-assisted approach. Initially, we leverage domain knowledge to preprocess the collected raw data, thereby creating a well-informed dataset. Within this dataset, we explicitly define the target variable and the input feature space relevant to channel statistics for CSE prediction. Subsequently, a residual-based network model is built to capture the high-dimensional non-linear mapping function from the channel statistics to the CSE. The effectiveness of the proposed approach is validated by experimental results on real-world data.

Auto-Encoding Morph-Tokens for Multimodal LLM

May 03, 2024Abstract:For multimodal LLMs, the synergy of visual comprehension (textual output) and generation (visual output) presents an ongoing challenge. This is due to a conflicting objective: for comprehension, an MLLM needs to abstract the visuals; for generation, it needs to preserve the visuals as much as possible. Thus, the objective is a dilemma for visual-tokens. To resolve the conflict, we propose encoding images into morph-tokens to serve a dual purpose: for comprehension, they act as visual prompts instructing MLLM to generate texts; for generation, they take on a different, non-conflicting role as complete visual-tokens for image reconstruction, where the missing visual cues are recovered by the MLLM. Extensive experiments show that morph-tokens can achieve a new SOTA for multimodal comprehension and generation simultaneously. Our project is available at https://github.com/DCDmllm/MorphTokens.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge