Zeynep G. Saribatur

The Dual Role of Abstracting over the Irrelevant in Symbolic Explanations: Cognitive Effort vs. Understanding

Feb 03, 2026

Abstract:Explanations are central to human cognition, yet AI systems often produce outputs that are difficult to understand. While symbolic AI offers a transparent foundation for interpretability, raw logical traces often impose a high extraneous cognitive load. We investigate how formal abstractions, specifically removal and clustering, impact human reasoning performance and cognitive effort. Utilizing Answer Set Programming (ASP) as a formal framework, we define a notion of irrelevant details to be abstracted over to obtain simplified explanations. Our cognitive experiments, in which participants classified stimuli across domains with explanations derived from an answer set program, show that clustering details significantly improves participants' understanding, while removal of details significantly reduces cognitive effort, supporting the hypothesis that abstraction enhances human-centered symbolic explanations.

An XAI View on Explainable ASP: Methods, Systems, and Perspectives

Jan 21, 2026

Abstract:Answer Set Programming (ASP) is a popular declarative reasoning and problem solving approach in symbolic AI. Its rule-based formalism makes it inherently attractive for explainable and interpretive reasoning, which is gaining importance with the surge of Explainable AI (XAI). A number of explanation approaches and tools for ASP have been developed, which often tackle specific explanatory settings and may not cover all scenarios that ASP users encounter. In this survey, we provide, guided by an XAI perspective, an overview of types of ASP explanations in connection with user questions for explanation, and describe their coverage by current theory and tools. Furthermore, we pinpoint gaps in existing ASP explanation approaches and identify research directions for future work.

Aligning Generalisation Between Humans and Machines

Nov 23, 2024

Abstract:Recent advances in AI -- including generative approaches -- have resulted in technology that can support humans in scientific discovery and decision support but may also disrupt democracies and target individuals. The responsible use of AI increasingly shows the need for human-AI teaming, necessitating effective interaction between humans and machines. A crucial yet often overlooked aspect of these interactions is the different ways in which humans and machines generalise. In cognitive science, human generalisation commonly involves abstraction and concept learning. In contrast, AI generalisation encompasses out-of-domain generalisation in machine learning, rule-based reasoning in symbolic AI, and abstraction in neuro-symbolic AI. In this perspective paper, we combine insights from AI and cognitive science to identify key commonalities and differences across three dimensions: notions of generalisation, methods for generalisation, and evaluation of generalisation. We map the different conceptualisations of generalisation in AI and cognitive science along these three dimensions and consider their role in human-AI teaming. This results in interdisciplinary challenges across AI and cognitive science that must be tackled to provide a foundation for effective and cognitively supported alignment in human-AI teaming scenarios.

A Unified View on Forgetting and Strong Equivalence Notions in Answer Set Programming

Dec 13, 2023

Abstract:Answer Set Programming (ASP) is a prominent rule-based language for knowledge representation and reasoning with roots in logic programming and non-monotonic reasoning. The aim to capture the essence of removing (ir)relevant details in ASP programs led to the investigation of different notions, from strong persistence (SP) forgetting, to faithful abstractions, and, recently, strong simplifications, where the latter two can be seen as relaxed and strengthened notions of forgetting, respectively. Although it was observed that these notions are related, especially given that they have characterizations through the semantics for strong equivalence, it remained unclear whether they can be brought together. In this work, we bridge this gap by introducing a novel relativized equivalence notion, which is a relaxation of the recent simplification notion, that is able to capture all related notions from the literature. We provide necessary and sufficient conditions for relativized simplifiability, which show that the challenging case arises when the context programs do not contain all the atoms to remove. We then introduce an operator that combines projection and a relaxation of (SP)-forgetting to obtain the relativized simplifications. We furthermore present complexity results that complete the overall picture.

Abstraction for Zooming-In to Unsolvability Reasons of Grid-Cell Problems

Sep 11, 2019

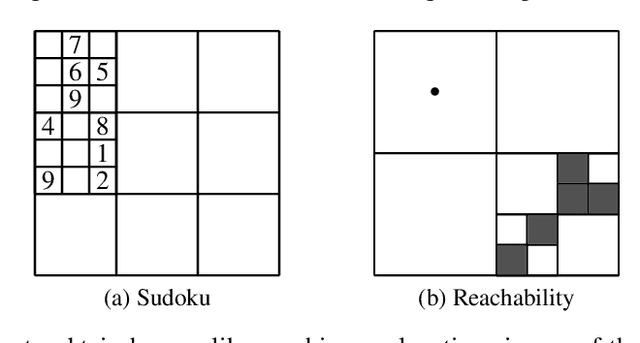

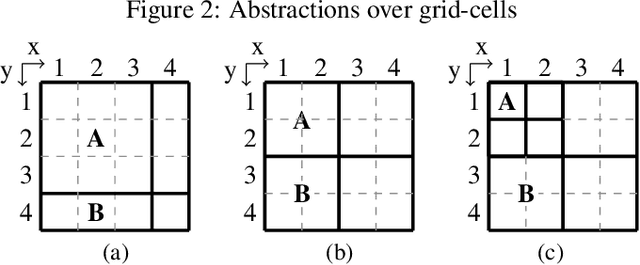

Abstract:Humans are capable of abstracting away irrelevant details when studying problems. This is especially noticeable for problems over grid-cells, as humans are able to disregard certain parts of the grid and focus on the key elements important for the problem. Recently, the notion of abstraction has been introduced for Answer Set Programming (ASP), a knowledge representation and reasoning paradigm widely used in problem solving, with the potential to understand the key elements of a program that play a role in finding a solution. The present paper takes this further and empowers abstraction to deal with structural aspects, and in particular with hierarchical abstraction over the domain. We focus on obtaining the reasons for unsolvability of problems on grids, and show the possibility to automatically achieve human-like abstractions that distinguish only the relevant part of the grid. A user study on abstract explanations confirms the similarity of the focus points in machine vs. human explanations and reaffirms the challenge of employing abstraction to obtain machine explanations.

Towards Abstraction in ASP with an Application on Reasoning about Agent Policies

Sep 18, 2018

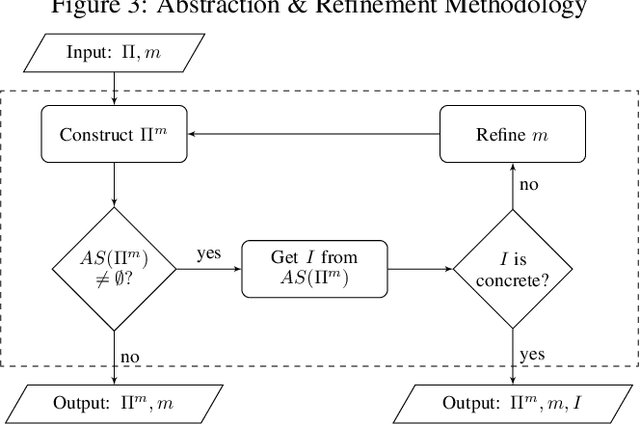

Abstract:ASP programs are a convenient tool for problem solving, but with large problem instances the size of the state space can be prohibitive. We consider abstraction as a means of over-approximation and introduce a method to automatically abstract (possibly non-ground) ASP programs that preserves their structure, while reducing the size of the problem. One particular application case is the problem of defining declarative policies for reactive agents and reasoning about them, which we illustrate on examples.

Reactive Policies with Planning for Action Languages

Mar 31, 2016

Abstract:We describe a representation in a high-level transition system for policies that express a reactive behavior for the agent. We consider a target decision component that figures out what to do next and an (online) planning capability to compute the plans needed to reach these targets. Our representation allows one to analyze the flow of executing the given reactive policy, and to determine whether it works as expected. Additionally, the flexibility of the representation opens a range of possibilities for designing behaviors.
