Yuxing Li

Knowledge Transfer between Datasets for Learning-based Tissue Microstructure Estimation

Oct 24, 2019

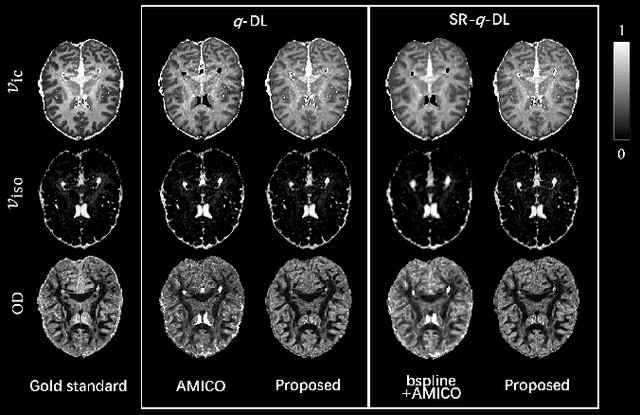

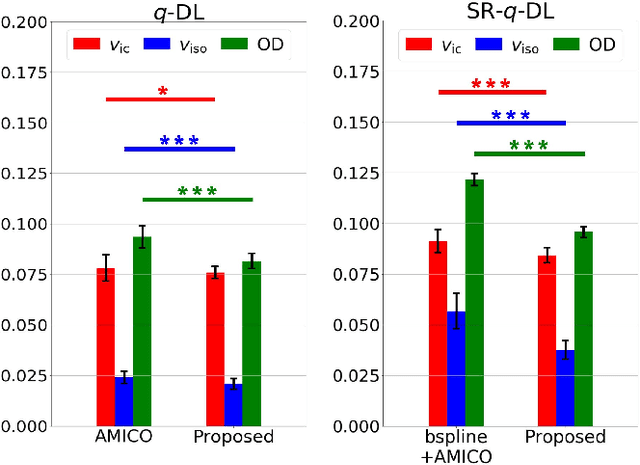

Abstract: Learning-based approaches, especially those based on deep networks, have enabled high-quality estimation of tissue microstructure from low-quality diffusion magnetic resonance imaging (dMRI) scans, which are acquired with a limited number of diffusion gradients and a relatively poor spatial resolution. These approaches require training dMRI scans with high-quality diffusion signals, which are densely sampled in the q-space and have a high spatial resolution. However, such training scans may not be available for every dataset. Therefore, we explore knowledge transfer between different dMRI datasets so that learning-based tissue microstructure estimation can be applied to datasets for which no training scans were acquired. Specifically, for a target dataset of interest, where only low-quality diffusion signals are acquired without training scans, we exploit the information in a source dMRI dataset acquired with high-quality diffusion signals. We interpolate the diffusion signals of the source dataset in the q-space using a dictionary-based signal representation, so that the interpolated signals match the acquisition scheme of the target dataset. The interpolated signals are then used together with the high-quality tissue microstructure computed from the source dataset to train deep networks that perform tissue microstructure estimation for the target dataset. Experiments were performed on brain dMRI scans with low-quality diffusion signals, and they demonstrate the benefit of the proposed strategy.
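The q-space interpolation step described in this abstract can be illustrated with a minimal sketch. The abstract does not specify the dictionary or the solver, so everything below is an assumption made for illustration: the dictionary atoms are simple "stick" responses, the coefficients are fitted by ridge-regularized least squares rather than sparse coding, and the names `stick_dictionary`, `interpolate_signals`, and `random_unit_vectors` are hypothetical.

```python
import numpy as np


def random_unit_vectors(n, rng):
    """Draw n random unit vectors in 3D (stand-ins for gradient directions)."""
    v = rng.standard_normal((n, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def stick_dictionary(gradients, b_value, atom_dirs, diffusivity=1.7e-3):
    """Evaluate hypothetical stick atoms on an acquisition scheme.

    gradients: (n_grad, 3) unit gradient directions
    atom_dirs: (n_atoms, 3) unit fiber directions, one per atom
    Returns a (n_grad, n_atoms) dictionary matrix.
    """
    cos2 = (gradients @ atom_dirs.T) ** 2
    return np.exp(-b_value * diffusivity * cos2)


def interpolate_signals(signals_src, dict_src, dict_tgt, ridge=1e-8):
    """Map signals from the source scheme to the target scheme.

    signals_src: (n_voxels, n_src_grad) signals sampled on the source scheme
    dict_src:    (n_src_grad, n_atoms) dictionary on the source scheme
    dict_tgt:    (n_tgt_grad, n_atoms) same atoms on the target scheme
    Ridge least squares is an assumed stand-in for the actual fitting method.
    """
    n_atoms = dict_src.shape[1]
    gram = dict_src.T @ dict_src + ridge * np.eye(n_atoms)
    coeffs = np.linalg.solve(gram, dict_src.T @ signals_src.T)  # (n_atoms, n_voxels)
    return (dict_tgt @ coeffs).T  # (n_voxels, n_tgt_grad)
```

The key idea is that the same coefficients are reused with a dictionary evaluated on the target gradient scheme, so the resampled source signals can be paired with the source microstructure maps as training data. With noisy signals, a larger `ridge` value or a sparsity-promoting solver would likely be needed.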

Semi-Supervised Brain Lesion Segmentation with an Adapted Mean Teacher Model

Mar 04, 2019

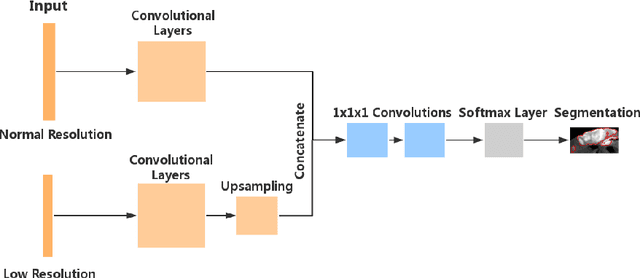

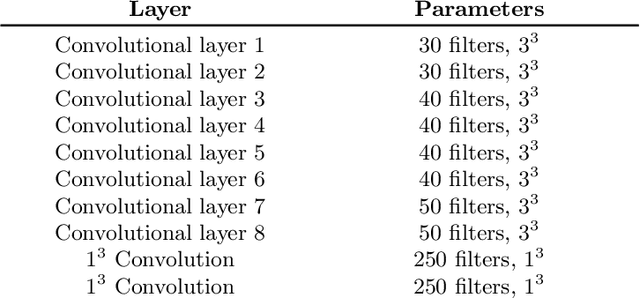

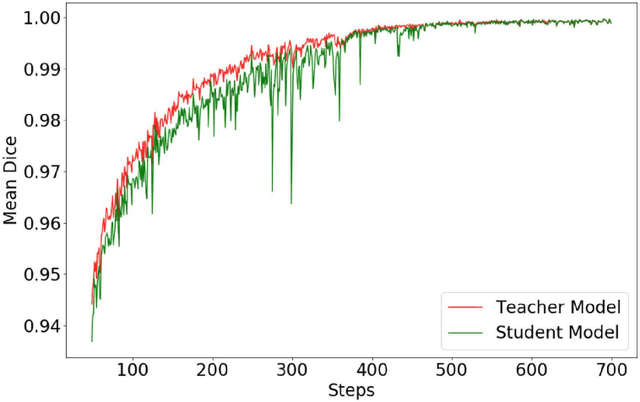

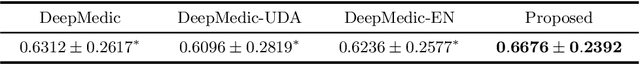

Abstract: Automated brain lesion segmentation provides valuable information for patient analysis and intervention. In particular, methods based on convolutional neural networks (CNNs) have achieved state-of-the-art segmentation performance. However, CNNs usually require a substantial amount of annotated data, which may be costly and time-consuming to obtain. Since unannotated data is generally abundant, it is desirable to use it to improve the segmentation performance of CNNs when only limited annotated data is available. In this work, we propose a semi-supervised learning (SSL) approach to brain lesion segmentation, where unannotated data is incorporated into the training of CNNs. We adapt the mean teacher model, which was originally developed for SSL-based image classification, to brain lesion segmentation. Assuming that the network should produce consistent outputs for similar inputs, a segmentation consistency loss is designed and integrated into a self-ensembling framework. Specifically, we build a student model and a teacher model, which share the same CNN architecture for segmentation. The student and teacher models are updated alternately. At each step, the student model learns from the teacher model by minimizing the weighted sum of the segmentation loss computed from annotated data and the segmentation consistency loss between the teacher and student models computed from unannotated data. Then, the teacher model is updated by combining the updated student model with the historical information of teacher models using an exponential moving average strategy. For demonstration, the proposed approach was evaluated on ischemic stroke lesion segmentation, where incorporating unannotated data improves the segmentation results.
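The alternating update described in this abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: the abstract does not give the exact form of the consistency loss, so a mean squared error between softmax outputs is assumed here, and the function names, the `weight` parameter, and the `alpha` decay value are hypothetical.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax over the class axis."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def segmentation_loss(logits, labels):
    """Cross-entropy on annotated voxels. logits: (n, classes), labels: (n,)."""
    p = softmax(logits)
    n = labels.shape[0]
    return float(-np.mean(np.log(p[np.arange(n), labels] + 1e-12)))


def consistency_loss(student_logits, teacher_logits):
    """Assumed consistency term: MSE between student and teacher probabilities."""
    diff = softmax(student_logits) - softmax(teacher_logits)
    return float(np.mean(diff ** 2))


def total_loss(seg_loss, cons_loss, weight):
    """Weighted sum minimized by the student at each step."""
    return seg_loss + weight * cons_loss


def ema_update(teacher_params, student_params, alpha=0.99):
    """Exponential moving average: teacher <- alpha * teacher + (1 - alpha) * student."""
    return {
        name: alpha * teacher_params[name] + (1.0 - alpha) * student_params[name]
        for name in teacher_params
    }
```

In a training loop, the student would take a gradient step on `total_loss`, after which `ema_update` refreshes the teacher; the teacher itself receives no gradient updates.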
