Young Sang Choi

ICYM2I: The illusion of multimodal informativeness under missingness

May 22, 2025

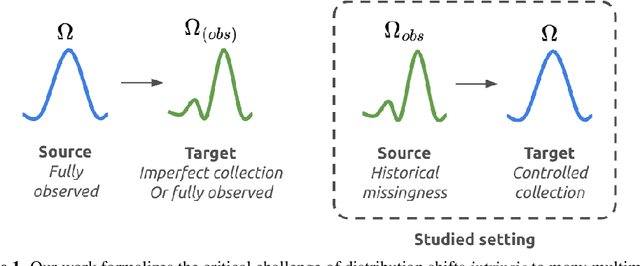

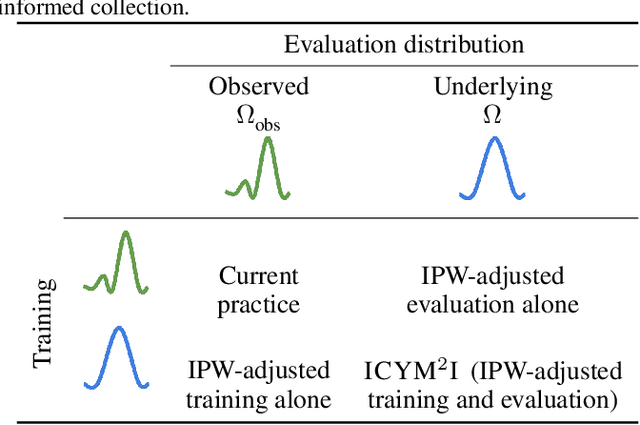

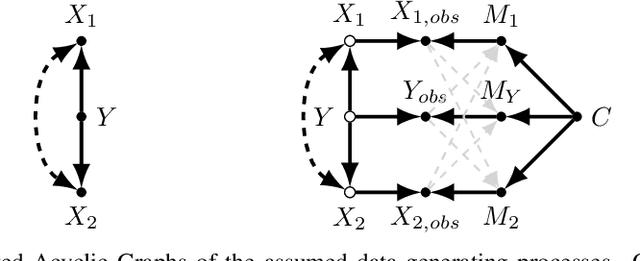

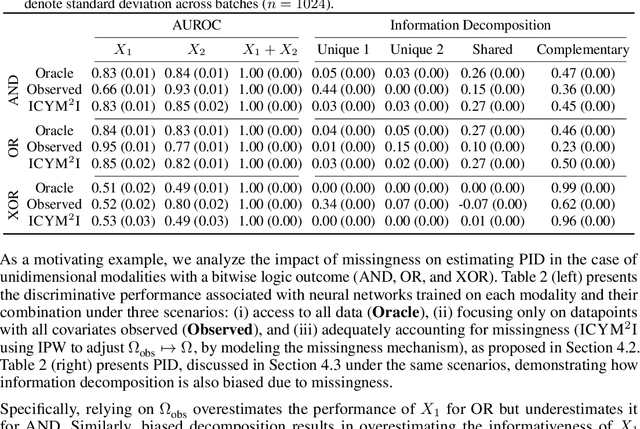

Abstract: Multimodal learning is of continued interest in artificial intelligence-based applications, motivated by the potential information gain from combining different types of data. However, modalities collected and curated during development may differ from the modalities available at deployment due to multiple factors including cost, hardware failure, or -- as we argue in this work -- the perceived informativeness of a given modality. Naïve estimation of the information gain associated with including an additional modality without accounting for missingness may result in improper estimates of that modality's value in downstream tasks. Our work formalizes the problem of missingness in multimodal learning and demonstrates the biases resulting from ignoring this process. To address this issue, we introduce ICYM2I (In Case You Multimodal Missed It), a framework for the evaluation of predictive performance and information gain under missingness through inverse probability weighting-based correction. We demonstrate the importance of the proposed adjustment to estimate information gain under missingness on synthetic, semi-synthetic, and real-world medical datasets.
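The inverse probability weighting idea mentioned in the abstract can be illustrated with a toy calculation. The sketch below is not the ICYM2I implementation; it is a generic self-normalized IPW estimate of accuracy, and the function names (`naive_mean`, `ipw_mean`), the toy data, and the assumption that the observation probabilities are known exactly are all hypothetical choices made for illustration.

```python
def naive_mean(values, observed):
    """Average a per-sample score over observed samples only (ignores missingness)."""
    kept = [v for v, r in zip(values, observed) if r]
    if not kept:
        raise ValueError("no observed samples")
    return sum(kept) / len(kept)


def ipw_mean(values, observed, propensity):
    """Self-normalized inverse-probability-weighted average.

    values[i]     : per-sample score, e.g. 1.0 if the prediction is correct
    observed[i]   : True if the modality was observed for sample i
    propensity[i] : P(observed | covariates), ASSUMED known here; in practice
                    it would have to be estimated
    """
    num = 0.0
    den = 0.0
    for v, r, p in zip(values, observed, propensity):
        if r:
            w = 1.0 / p  # rarely observed samples count for more
            num += w * v
            den += w
    if den == 0.0:
        raise ValueError("no observed samples")
    return num / den


# Hypothetical toy population: 4 "easy" samples that are always observed and
# 8 "hard" samples that are observed with probability 0.25.
values = [1.0] * 4 + [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
observed = [True] * 4 + [True, True] + [False] * 6
propensity = [1.0] * 4 + [0.25] * 8

full = sum(values) / len(values)            # accuracy if nothing were missing
naive = naive_mean(values, observed)        # biased toward the easy samples
corrected = ipw_mean(values, observed, propensity)

print(f"full={full:.3f} naive={naive:.3f} ipw={corrected:.3f}")
```

In this toy setup the naive estimate is inflated because the hard samples are under-represented among the observed ones, while the weighted estimate matches the full-population accuracy. That exact match is a property of the constructed data, not a general guarantee.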

FoMoH: A clinically meaningful foundation model evaluation for structured electronic health records

May 22, 2025

Abstract: Foundation models hold significant promise in healthcare, given their capacity to extract meaningful representations independent of downstream tasks. This property has enabled state-of-the-art performance across several clinical applications trained on structured electronic health record (EHR) data, even in settings with limited labeled data, a prevalent challenge in healthcare. However, there is little consensus on these models' potential for clinical utility due to the lack of desiderata of comprehensive and meaningful tasks and sufficiently diverse evaluations to characterize the benefit over conventional supervised learning. To address this gap, we propose a suite of clinically meaningful tasks spanning patient outcomes, early prediction of acute and chronic conditions, including desiderata for robust evaluations. We evaluate state-of-the-art foundation models on EHR data consisting of 5 million patients from Columbia University Irving Medical Center (CUMC), a large urban academic medical center in New York City, across 14 clinically relevant tasks. We measure overall accuracy, calibration, and subpopulation performance to surface tradeoffs based on the choice of pre-training, tokenization, and data representation strategies. Our study aims to advance the empirical evaluation of structured EHR foundation models and guide the development of future healthcare foundation models.

Adaptable Multi-Domain Language Model for Transformer ASR

Aug 14, 2020

Abstract: We propose an adapter-based, multi-domain Transformer language model (LM) for Transformer ASR. The model consists of a large common LM and small adapters. It can perform multi-domain adaptation using only the small adapters and their related layers. The proposed model can reuse a fully fine-tuned LM, that is, one fine-tuned using all layers of the original model. The proposed LM can be expanded to new domains by adding about 2% of parameters for the first domain and 13% of parameters from the second domain onward. The proposed model also reduces model maintenance cost because the costly and time-consuming common LM pre-training process can be omitted. Using the proposed adapter-based approach, we observed that a general LM with an adapter can outperform a dedicated music-domain LM in terms of word error rate (WER).

Graph-Aware Transformer: Is Attention All Graphs Need?

Jun 09, 2020

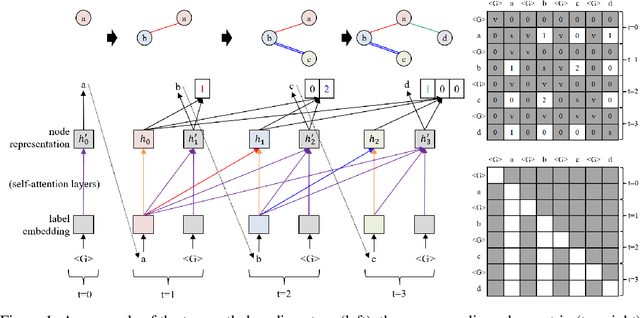

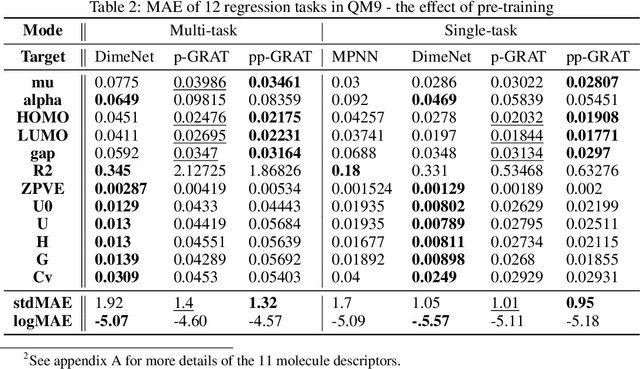

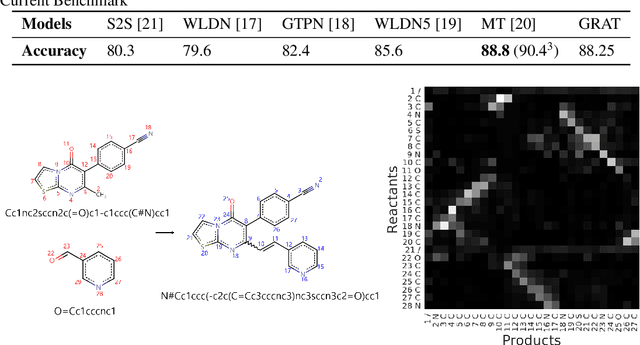

Abstract: Graphs are the natural data structure to represent relational and structural information in many domains. To cover the broad range of graph-data applications, including graph classification as well as graph generation, it is desirable to have a general and flexible model consisting of an encoder and a decoder that can handle graph data. Although the representative encoder-decoder model, Transformer, shows superior performance in various tasks, especially in natural language processing, it is not immediately applicable to graphs due to their non-sequential characteristics. To tackle this incompatibility, we propose GRaph-Aware Transformer (GRAT), the first Transformer-based model which can encode and decode whole graphs in an end-to-end fashion. GRAT features a self-attention mechanism adaptive to the edge information and an auto-regressive decoding mechanism based on a two-path approach, consisting of a sub-graph encoding path and a node-and-edge generation path for each decoding step. We empirically evaluated GRAT on multiple setups, including encoder-based tasks such as molecule property prediction on the QM9 dataset and encoder-decoder-based tasks such as molecule graph generation in the organic molecule synthesis domain. GRAT has shown very promising results, including state-of-the-art performance on 4 regression tasks in the QM9 benchmark.

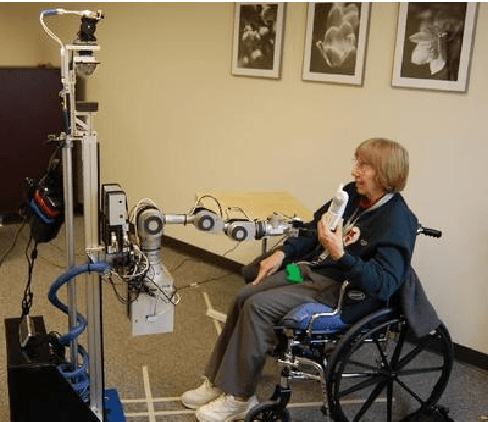

A List of Household Objects for Robotic Retrieval Prioritized by People with ALS

Feb 12, 2009

Abstract: This technical report is designed to serve as a citable reference for the original prioritized object list that the Healthcare Robotics Lab at Georgia Tech released on its website in September of 2008. It is also expected to serve as the primary citable reference for the research associated with this list until the publication of a detailed, peer-reviewed paper. The original prioritized list of object classes resulted from a needs assessment involving 8 motor-impaired patients with amyotrophic lateral sclerosis (ALS) and targeted, in-person interviews of 15 motor-impaired ALS patients. All of these participants were drawn from the Emory ALS Center. The prioritized object list consists of 43 object classes ranked by how important the participants considered each class to be for retrieval by an assistive robot. We intend for this list to be used by researchers to inform the design and benchmarking of robotic systems, especially research related to autonomous mobile manipulation.
