Yifang Liu

Active Face Frontalization using Commodity Unmanned Aerial Vehicles

Feb 17, 2021

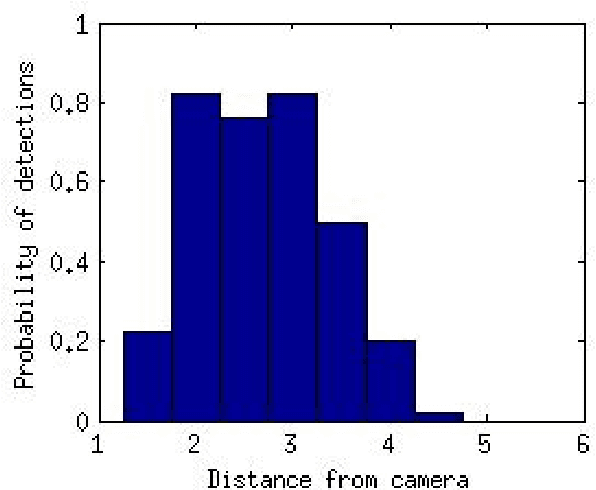

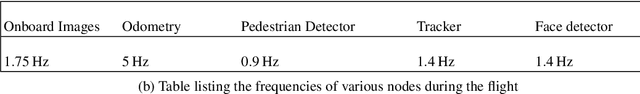

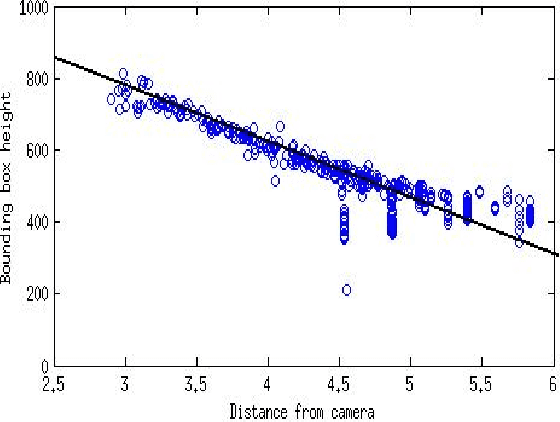

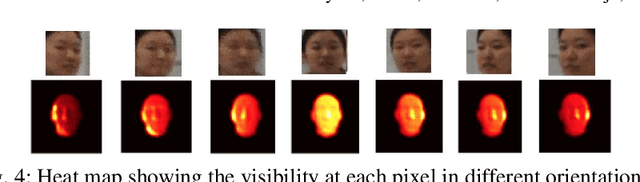

Abstract: This paper describes a system by which Unmanned Aerial Vehicles (UAVs) can gather high-quality face images for use in biometric identification tasks. Success in face-based identification depends in large part on image quality, and a major factor is how frontal the view is. Face recognition software pipelines can improve identification rates by synthesizing frontal views from non-frontal views, a process called *frontalization*. Here we exploit the high mobility of UAVs to actively gather frontal images using components of a synthetic frontalization pipeline. We define a frontalization error and show that it can be used to guide a UAV to capture frontal views. Further, we show that the resulting image stream improves the matching quality of a typical face recognition similarity metric. The system is implemented using off-the-shelf hardware and software components and can be easily transferred to any ROS-enabled UAV.

Simultaneous Relevance and Diversity: A New Recommendation Inference Approach

Sep 27, 2020

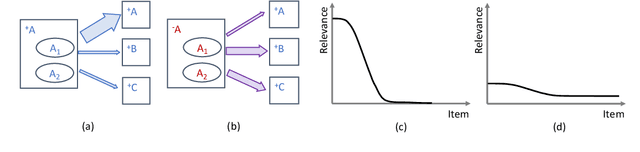

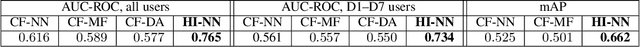

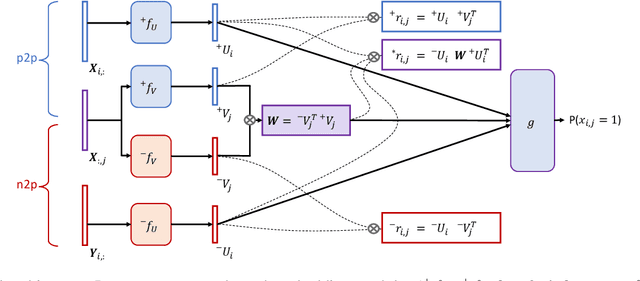

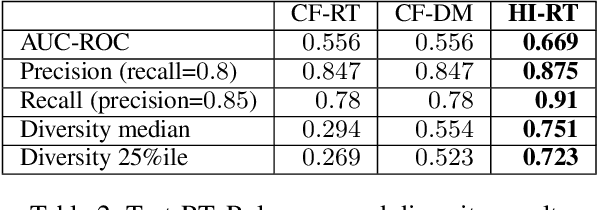

Abstract: Relevance and diversity are both important to the success of recommender systems, as they help users discover, from a large pool of items, a compact set of candidates that are not only interesting but exploratory as well. The challenge is that relevance and diversity usually act as two competing objectives in conventional recommender systems, which necessitates the classic trade-off between exploitation and exploration. Traditionally, higher diversity often means a sacrifice in relevance, and vice versa. We propose a new approach, heterogeneous inference, which extends general collaborative filtering (CF) by introducing a new way of CF inference, negative-to-positive. Heterogeneous inference achieves divergent relevance, where relevance and diversity support each other as two collaborating objectives in one recommendation model, and where recommendation diversity is an inherent outcome of the relevance inference process. Benefiting from its succinctness and flexibility, our approach is applicable to a wide range of recommendation scenarios and use cases at various levels of sophistication. Our analysis and experiments on public datasets and real-world production data show that our approach outperforms existing methods on relevance and diversity simultaneously.

Understanding Multi-Modal Perception Using Behavioral Cloning for Peg-In-a-Hole Insertion Tasks

Jul 22, 2020

Abstract: One of the main challenges in peg-in-a-hole (PiH) insertion tasks is handling the uncertainty in the location of the target hole. To address it, high-dimensional inputs from sensor modalities such as vision, force/torque sensing, and proprioception can be combined to learn control policies that are robust to this uncertainty in the target pose. Whereas deep learning has shown success in recognizing objects and making decisions with high-dimensional inputs, the learning procedure might damage the robot when trial-and-error algorithms are applied directly on the real system. At the same time, Learning from Demonstration (LfD) methods have been shown to achieve compelling performance in real robotic systems by leveraging demonstration data provided by experts. In this paper, we investigate the merits of multiple sensor modalities such as vision, force/torque sensors, and proprioception when combined to learn a controller for real-world assembly operation tasks using LfD techniques. The study is limited to PiH insertions; we plan to extend the study to more experiments in the future. Additionally, we propose a multi-step-ahead loss function to improve the performance of the behavioral cloning method. Experimental results on a real manipulator support our findings and show the effectiveness of the proposed loss function.
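The abstract does not give the form of the multi-step-ahead loss. As a rough illustration only, one common reading of the idea is that the policy predicts the next several actions from a single observation and is penalized against the expert's actions over that whole window, rather than only the next action. The sketch below assumes that reading; the function name `multi_step_bc_loss`, the `policy(observation, horizon)` interface, and the use of mean squared error are all hypothetical choices, not taken from the paper.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
# Hypothetical policy interface: given one observation and a horizon H,
# return H predicted action vectors (for steps t, t+1, ..., t+H-1).
Policy = Callable[[Vector, int], List[Vector]]


def multi_step_bc_loss(
    policy: Policy,
    observations: Sequence[Vector],
    expert_actions: Sequence[Vector],
    horizon: int,
) -> float:
    """Mean squared error between predicted and expert actions over a window.

    Assumed formulation (not from the paper): for every start index t that
    leaves room for `horizon` expert actions, compare the policy's H-step
    prediction with the expert's actions at t .. t+H-1.
    With horizon == 1 this reduces to ordinary single-step behavioral cloning.
    """
    if horizon < 1:
        raise ValueError("horizon must be at least 1")
    total, count = 0.0, 0
    last_start = len(observations) - horizon
    for t in range(last_start + 1):
        predicted = policy(observations[t], horizon)
        for k in range(horizon):
            target = expert_actions[t + k]
            total += sum((p - a) ** 2 for p, a in zip(predicted[k], target))
            count += len(target)
    if count == 0:
        raise ValueError("trajectory is shorter than the horizon")
    return total / count


# Toy usage: a policy that always predicts a zero action, against an expert
# that always commands 1.0 on a single action dimension.
def zero_policy(observation: Vector, horizon: int) -> List[Vector]:
    return [[0.0] for _ in range(horizon)]


demo_obs = [[0.0], [0.0], [0.0]]
demo_actions = [[1.0], [1.0], [1.0]]
loss = multi_step_bc_loss(zero_policy, demo_obs, demo_actions, horizon=2)
```

In a real setting the observation would combine the vision, force/torque, and proprioception inputs the abstract mentions, and the loss would be minimized with a differentiable model; the pure-Python version here only shows how the multi-step window is formed.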
