Yi-Fan Lu

SEOE: A Scalable and Reliable Semantic Evaluation Framework for Open Domain Event Detection

Mar 05, 2025

Abstract:Automatic evaluation for Open Domain Event Detection (ODED) is highly challenging because ODED is characterized by a vast diversity of unconstrained output labels from various domains. Nearly all existing evaluation methods for ODED first construct evaluation benchmarks with limited label and domain coverage, and then evaluate ODED methods using metrics based on token-level label matching rules. However, this kind of evaluation framework faces two issues: (1) the limited evaluation benchmarks are not representative of the real world, making it difficult to accurately reflect the performance of various ODED methods in real-world scenarios; (2) evaluation metrics based on token-level matching rules fail to capture semantic similarity between predictions and golden labels. To address these two problems, we propose a scalable and reliable Semantic-level Evaluation framework for Open domain Event detection (SEOE) by constructing a more representative evaluation benchmark and introducing a semantic evaluation metric. Specifically, our proposed framework first constructs a scalable evaluation benchmark that currently includes 564 event types covering 7 major domains, with a cost-effective supplementary annotation strategy to ensure the benchmark's representativeness. The strategy also allows new event types and domains to be added in the future. Then, the proposed SEOE leverages large language models (LLMs) as automatic evaluation agents to compute a semantic F1-score, incorporating fine-grained definitions of semantically similar labels to enhance the reliability of the evaluation. Extensive experiments validate the representativeness of the benchmark and the reliability of the semantic evaluation metric. Existing ODED methods are thoroughly evaluated, and the error patterns of predictions are analyzed, revealing several insightful findings.
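The contrast between token-level matching and a semantic F1-score can be illustrated with a minimal sketch. This is not the SEOE implementation: the function names, the matching rule (a prediction counts as correct if it matches any gold label), and the `TOY_SYNONYMS` table are assumptions made for illustration, and the lookup table merely stands in for an LLM judge.

```python
from typing import Callable, Sequence, Tuple

Judge = Callable[[str, str], bool]


def exact_match(pred: str, gold: str) -> bool:
    """Token-level rule: labels match only if their normalized strings are equal."""
    return pred.strip().lower() == gold.strip().lower()


# Hypothetical stand-in for an LLM judge: a tiny hand-written synonym table.
TOY_SYNONYMS = {
    frozenset({"attack", "assault"}),
    frozenset({"acquisition", "purchase"}),
}


def toy_semantic_judge(pred: str, gold: str) -> bool:
    """Assumed semantic rule: exact match, or the pair is listed as synonymous."""
    pair = frozenset({pred.strip().lower(), gold.strip().lower()})
    return exact_match(pred, gold) or pair in TOY_SYNONYMS


def f1_score(
    preds: Sequence[str], golds: Sequence[str], is_match: Judge
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) under a pluggable matching rule."""
    # A prediction is correct if it matches at least one gold label.
    matched_preds = sum(any(is_match(p, g) for g in golds) for p in preds)
    # A gold label is recalled if at least one prediction matches it.
    matched_golds = sum(any(is_match(p, g) for p in preds) for g in golds)
    precision = matched_preds / len(preds) if preds else 0.0
    recall = matched_golds / len(golds) if golds else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


preds = ["assault", "purchase", "meeting"]
golds = ["attack", "acquisition", "election"]

print("exact   :", f1_score(preds, golds, exact_match))
print("semantic:", f1_score(preds, golds, toy_semantic_judge))
```

On this toy input the exact-match rule credits no prediction, while the semantic rule credits two of the three, which is the kind of gap the abstract attributes to token-level metrics.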

Beyond Exact Match: Semantically Reassessing Event Extraction by Large Language Models

Oct 12, 2024

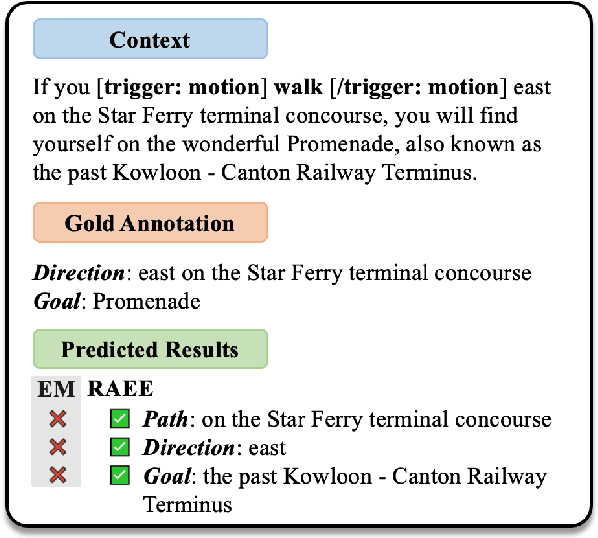

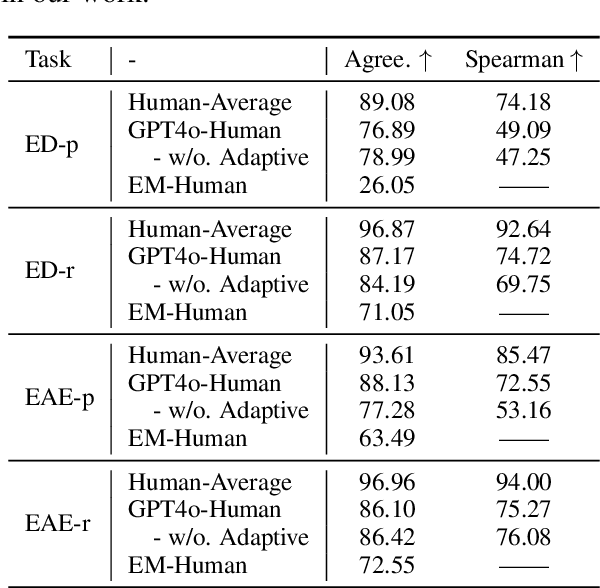

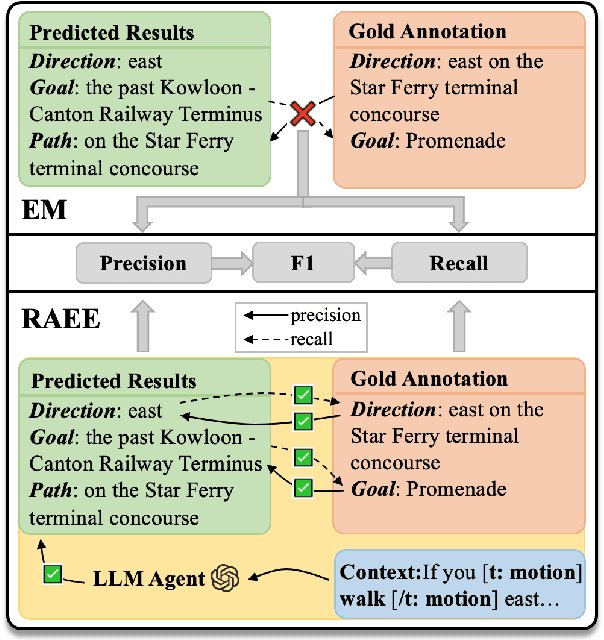

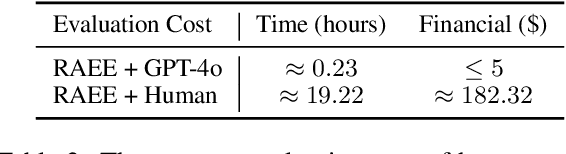

Abstract:Event extraction has gained extensive research attention due to its broad range of applications. However, the current mainstream evaluation method for event extraction relies on token-level exact match, which misjudges numerous cases that are correct at the semantic level. This reliance leads to a significant discrepancy between the performance of models as measured under exact match criteria and their real performance. To address this problem, we propose RAEE, an automatic evaluation framework that accurately assesses event extraction results at the semantic level instead of the token level. Specifically, RAEE leverages Large Language Models (LLMs) as automatic evaluation agents, incorporating chain-of-thought prompting and an adaptive mechanism to achieve interpretable and adaptive evaluations for precision and recall of triggers and arguments. Extensive experimental results demonstrate that: (1) RAEE achieves a very high correlation with the human average; (2) after reassessing 14 models, including advanced LLMs, on 10 datasets, there is a significant performance gap between exact match and RAEE. The exact match evaluation significantly underestimates the performance of existing event extraction models, particularly the capabilities of LLMs; (3) fine-grained analysis under RAEE evaluation reveals insightful phenomena worth further exploration. The evaluation toolkit of our proposed RAEE will be publicly released.

EXCEEDS: Extracting Complex Events as Connecting the Dots to Graphs in Scientific Domain

Jun 20, 2024

Abstract:It is crucial to utilize events to understand a specific domain. There is a large body of research on event extraction in domains such as news, finance and biology. However, the scientific domain still lacks event extraction research, including comprehensive datasets and corresponding methods. Compared to other domains, the scientific domain presents two characteristics: denser nuggets and more complex events. To address this gap while accounting for these two characteristics, we first construct SciEvents, a large-scale multi-event document-level dataset with a schema tailored for the scientific domain. It has 2,508 documents and 24,381 events under refined annotation and quality control. Then, we propose EXCEEDS, a novel end-to-end scientific event extraction framework that stores dense nuggets in a grid matrix and simplifies complex event extraction into a dot construction and connection task. Experimental results demonstrate state-of-the-art performance of EXCEEDS on SciEvents. Additionally, we release SciEvents and EXCEEDS on GitHub.

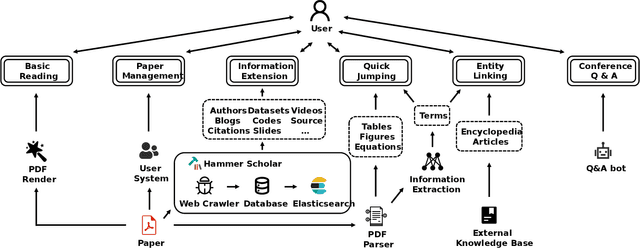

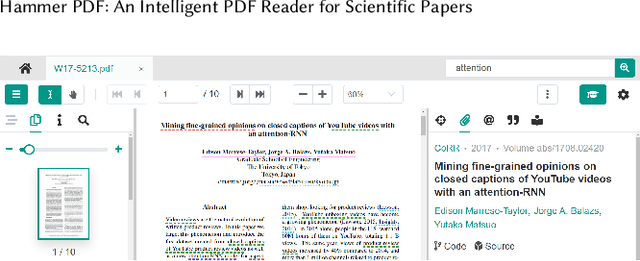

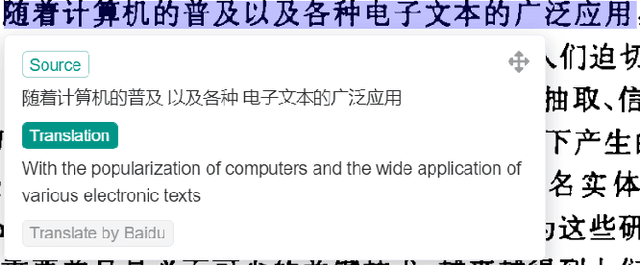

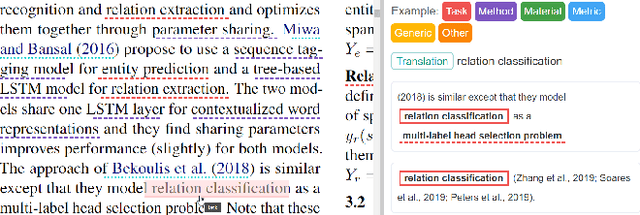

Hammer PDF: An Intelligent PDF Reader for Scientific Papers

Apr 06, 2022

Abstract:Reading scientific papers has been an essential way for researchers to acquire academic knowledge, and most papers are in PDF format. However, existing PDF Readers only support reading, editing, annotating and other basic functions, and lack multi-granularity analysis for academic papers. Specifically, taking a paper as a whole, these PDF Readers cannot access extended information about the paper, such as related videos, blogs, code, etc.; meanwhile, for the content of a paper, these PDF Readers also cannot extract and display academic details of the paper, such as terms, authors, citations, etc. In this paper, we introduce Hammer PDF, a novel intelligent PDF Reader for scientific papers. Beyond basic reading functions, Hammer PDF comes with four innovative features: (1) locating, marking and interacting with spans (e.g., terms) obtained by information extraction; (2) displaying citations, references, code, videos, blogs, and other extended information alongside a paper; (3) a built-in Hammer Scholar, an academic search engine that uses academic information collected from major academic databases; (4) a built-in Q&A bot that answers questions about conferences of interest. Our product helps researchers, especially those who study computer science, to improve the efficiency and experience of reading scientific papers. We release Hammer PDF, available for download at https://pdf.hammerscholar.net/face.