Yevgeni Koucheryavy

Remote Detection of Applications for Improved Beam Tracking in mmWave/sub-THz 5G/6G Systems

Oct 24, 2024

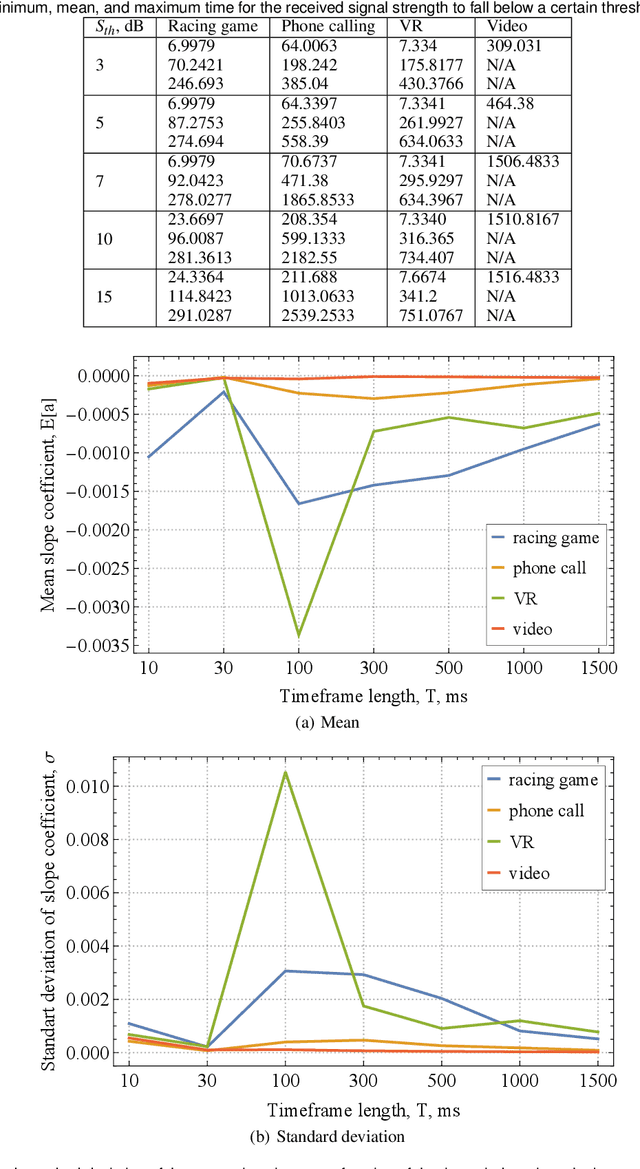

Abstract: Beam tracking is an essential functionality of millimeter wave (mmWave, 30-100 GHz) and sub-terahertz (sub-THz, 100-300 GHz) 5G/6G systems. It operates by performing antenna sweeping at both the base station (BS) and user equipment (UE) sides using Synchronization Signal Blocks (SSB). The optimal frequency of beam tracking events is not specified by 3GPP standards and depends heavily on the micromobility properties of the applications currently used by the user. In the absence of explicit signalling of the application type at the air interface, in this paper we propose a way to detect it remotely at the BS side based on the received signal strength pattern. To this aim, we first perform a multi-stage measurement campaign at 156 GHz, in the sub-THz band, to obtain received signal strength traces of popular smartphone applications. We then apply conventional statistical Mann-Whitney tests and various machine learning (ML) based classification techniques to discriminate between applications remotely. Our results show that the Mann-Whitney test can differentiate between fast and slow application classes with a confidence of 0.95, inducing a class detection delay on the order of 1 s after application initialization. With the same time budget, random forest classifiers can differentiate between applications with fast and slow micromobility with 80% accuracy using only the received signal strength metric. The accuracy of detecting a specific application, however, is lower, reaching 60%. Using the proposed technique, one can estimate the optimal values of the beam tracking intervals without adding signalling to the air interface.
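As a rough illustration of the statistical step described in this abstract, the sketch below runs a two-sided Mann-Whitney U test on two received signal strength (RSS) traces. It is not the authors' pipeline: the traces are synthetic, the sampling rate of 100 samples per second is an assumption, and the `rss_variation` feature (absolute sample-to-sample change) is a hypothetical choice made only so that the test has something to compare. The 0.05 significance threshold mirrors the 0.95 confidence quoted above.

```python
import math
import random


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Returns (U statistic of x, p-value). Ties get average ranks; the
    variance is not tie-corrected, which is adequate for continuous data.
    """
    n1, n2 = len(x), len(y)
    # Sort the pooled samples, remembering each sample's original position.
    pooled = sorted((v, i) for i, v in enumerate(list(x) + list(y)))
    ranks = [0.0] * (n1 + n2)
    pos = 0
    while pos < len(pooled):
        end = pos
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        avg_rank = (pos + end) / 2 + 1  # mean of 1-based ranks pos+1..end+1
        for k in range(pos, end + 1):
            ranks[pooled[k][1]] = avg_rank
        pos = end + 1
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    std_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean_u) / std_u
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u1, p_value


def rss_variation(trace):
    """Hypothetical feature: absolute sample-to-sample RSS change in dB."""
    return [abs(b - a) for a, b in zip(trace, trace[1:])]


rng = random.Random(0)
# Synthetic stand-ins for 1 s of RSS samples (assumed 100 samples per second).
slow_trace = [-60 + rng.gauss(0, 0.2) for _ in range(100)]
fast_trace = [-60 + rng.gauss(0, 2.0) for _ in range(100)]

u_stat, p_value = mann_whitney_u(rss_variation(fast_trace),
                                 rss_variation(slow_trace))
is_different = p_value < 0.05  # 0.05 significance <-> 0.95 confidence
print(f"U = {u_stat:.1f}, p = {p_value:.3g}, classes differ: {is_different}")
```

With real measurements, the two inputs would be a reference trace of a known class and the first second of the trace under test; the choice of feature and window length would need to be validated against the measured data.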

Low Complexity Algorithms for Mission Completion Time Minimization in UAV-Based ISAC Systems

Oct 12, 2023

Abstract: The inherent support for the integrated sensing and communications (ISAC) paradigm in sixth-generation (6G) systems greatly broadens the application area of intelligent transportation systems (ITS). One of the mission-critical applications enabled by these systems is disaster management, where ISAC functionality may not only provide localization but also supply users with supplementary information such as escape routes, time to rescue, etc. In this paper, by considering a large area with several locations of interest, we formulate and solve the optimization problem of delivering task parameters of the ISAC system by optimizing the UAV speed and the order of visits to the locations of interest such that the mission time is minimized. The formulated problem is a mixed integer non-linear program, which is quite challenging to solve. To reduce the complexity of the solution algorithms, we propose two circular trajectory designs. The first algorithm finds the optimal UAV velocity and radius of the circular trajectories. The second algorithm finds the optimal connecting points for joining the individual circular trajectories. Our numerical results reveal that, with practical simulation parameters, the first algorithm provides a time saving of at least $20\%$, while the second algorithm cuts down the total completion time by a factor of at least $7$.

Multi-Task Model Personalization for Federated Supervised SVM in Heterogeneous Networks

Apr 01, 2023

Abstract: Federated systems enable collaborative training on highly heterogeneous data through model personalization, which can be facilitated by employing multi-task learning algorithms. However, significant variation in device computing capabilities may result in substantial degradation in the convergence rate of training. To accelerate the learning procedure for diverse participants in a multi-task federated setting, more efficient and robust methods need to be developed. In this paper, we design an efficient iterative distributed method based on the alternating direction method of multipliers (ADMM) for support vector machines (SVMs), which tackles federated classification and regression. The proposed method utilizes efficient computations and model exchange in a network of heterogeneous nodes and allows personalization of the learning model in the presence of non-i.i.d. data. To further enhance privacy, we introduce a random mask procedure that helps avoid data inversion. Finally, we analyze the impact of the proposed privacy mechanisms and participant hardware and data heterogeneity on the system performance.

SURIMI: Supervised Radio Map Augmentation with Deep Learning and a Generative Adversarial Network for Fingerprint-based Indoor Positioning

Jul 13, 2022

Abstract: Indoor positioning based on machine learning has drawn increasing attention in both academia and industry, as meaningful information can be extracted from the reference data. Many researchers are using supervised, semi-supervised, and unsupervised machine learning models to reduce the positioning error and offer reliable solutions to end users. In this article, we propose a new architecture that combines a Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), and a Generative Adversarial Network (GAN) in order to increase the training data and thus improve the positioning accuracy. The proposed combination of supervised and unsupervised models was tested on 17 public datasets, providing an extensive analysis of its performance. As a result, the positioning error has been reduced in more than 70% of them.

User Association and Multi-connectivity Strategies in Joint Terahertz and Millimeter Wave 6G Systems

Jun 07, 2022

Abstract: Terahertz (THz) wireless access is considered the next step towards sixth generation (6G) cellular systems. By utilizing even higher frequency bands than 5G millimeter wave (mmWave) New Radio (NR), THz systems will operate over extreme bandwidths, delivering unprecedented rates at the access interface. However, by relying upon pencil-wide beams, these systems will not only inherit mmWave propagation challenges such as the blockage phenomenon but also introduce their own issues associated with micromobility of user equipment (UE). In this paper, we analyze and compare user association schemes and multi-connectivity strategies for joint 6G THz/mmWave deployments. In contrast to stochastic geometry studies, we develop a unified analytically tractable framework that simultaneously accounts for the specifics of THz and mmWave radio part design and the traffic service specifics at mmWave and THz base stations (BS). Our results show that (i) for negligible blocker density, $\lambda_B\leq 0.1$ bl./$m^2$, the operator needs to enlarge the coverage of THz BS by accepting sessions that experience outage in case of blockage, (ii) for $\lambda_B>0.1$ bl./$m^2$, only those sessions that do not experience outage in case of blockage need to be accepted at THz BS, and (iii) THz/mmWave multi-connectivity improves the ongoing session loss probability by $0.1-0.4$ depending on the system parameters.

Lightweight Hybrid CNN-ELM Model for Multi-building and Multi-floor Classification

Apr 21, 2022

Abstract: Machine learning models have become an essential tool in current indoor positioning solutions, given their strong ability to extract meaningful information from the environment. Convolutional neural networks (CNNs) are among the most widely used neural networks (NNs) because they can learn complex patterns from the input data. Another model used in indoor positioning solutions is the Extreme Learning Machine (ELM), which provides acceptable generalization performance as well as fast learning. In this paper, we offer a lightweight combination of CNN and ELM, which provides quick and accurate classification of building and floor, suitable for power- and resource-constrained devices. As a result, the proposed model is 58\% faster than the benchmark, with a slight improvement in the classification accuracy (by less than 1\%

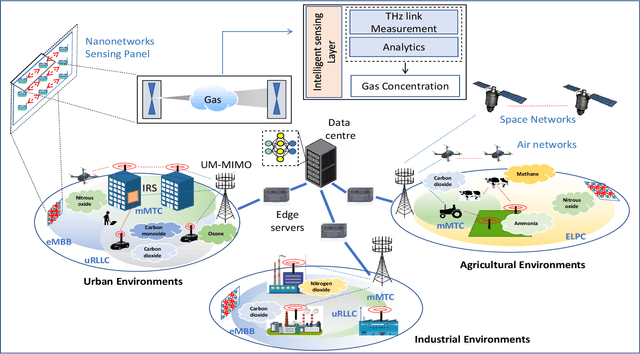

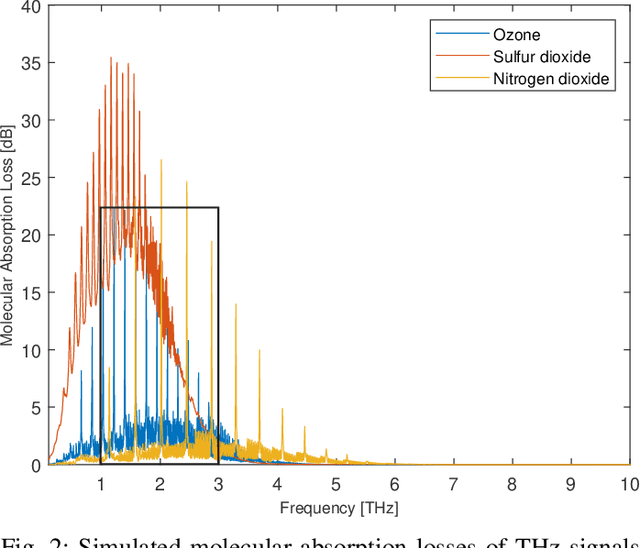

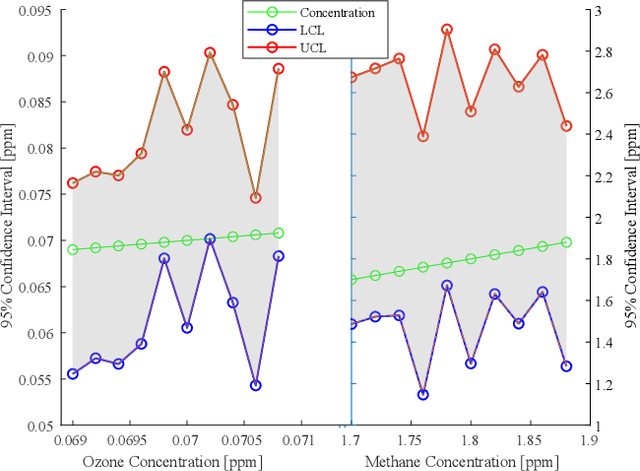

Climate Change Sensing through Terahertz Communications: A Disruptive Application of 6G Networks

Oct 06, 2021

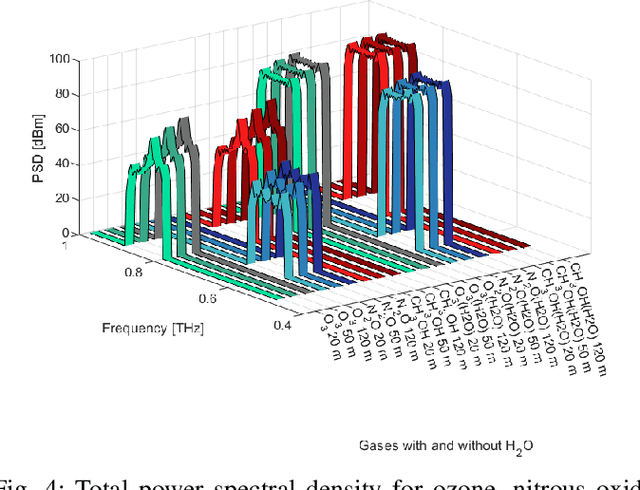

Abstract: Climate change resulting from the misuse and over-exploitation of natural resources has affected and continues to impact the planet's ecosystem. This pressing issue is leading to the development of novel technologies to sense and measure damaging gas emissions. In parallel, the accelerating evolution of wireless communication networks is resulting in wider deployment of mobile telecommunication infrastructure. With 5G technologies already being commercially deployed, the research community is starting research into new technologies for 6G. One of the visions for 6G is the use of the terahertz (THz) spectrum. In this paper, we propose and explore the use of THz spectrum simultaneously for ultrabroadband communication and atmospheric sensing by leveraging the absorption of THz signals. Through the use of machine learning, we present preliminary results on how we can analyze signal path loss and power spectral density to infer the concentration of different climate-impacting gases. Our vision is to demonstrate how 6G infrastructure can provide sensor data for climate change sensing, in addition to its primary purpose of wireless communication.

Peer Offloading with Delayed Feedback in Fog Networks

Nov 24, 2020

Abstract: Compared to cloud computing, fog computing performs computation and services at the edge of networks, thus relieving the computation burden of the data center and reducing the task latency of end devices. Computation latency is a crucial performance metric in fog computing, especially for real-time applications. In this paper, we study a peer computation offloading problem for a fog network with unknown dynamics. In this scenario, each fog node (FN) can offload its computation tasks to neighboring FNs in a time-slotted manner. The offloading latency, however, cannot be fed back to the task dispatcher instantaneously due to the uncertainty of the processing time in peer FNs. In addition, peer competition occurs when different FNs offload tasks to one FN at the same time. To tackle these difficulties, we model the computation offloading problem as a sequential FN selection problem with delayed information feedback. Using an adversarial multi-armed bandit framework, we construct an online learning policy to deal with delayed information feedback. Different contention resolution approaches are considered to resolve peer competition. Performance analysis shows that the regret of the proposed algorithm, or the performance loss with suboptimal FN selections, achieves a sub-linear order, suggesting an optimal FN selection policy. In addition, we prove that the proposed strategy can result in a Nash equilibrium (NE) with all FNs playing the same policy. Simulation results validate the effectiveness of the proposed policy.
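To make the idea of sequential FN selection with delayed feedback more concrete, here is a minimal sketch using EXP3, a standard adversarial multi-armed bandit algorithm, with updates applied only when the feedback arrives. It is not the policy from the paper: the class `DelayedExp3`, the `simulate` helper, the fixed delay of 5 slots, the Bernoulli reward (assumed to be 1 when a task meets a latency target), and the success probabilities are all illustrative assumptions, and peer competition is not modeled.

```python
import math
import random
from collections import deque


class DelayedExp3:
    """EXP3 whose updates may arrive several slots after the selection."""

    def __init__(self, n_arms, gamma=0.1, seed=0):
        self.n_arms = n_arms
        self.gamma = gamma  # exploration rate
        self.weights = [1.0] * n_arms
        self.rng = random.Random(seed)

    def probabilities(self):
        total = sum(self.weights)
        return [(1 - self.gamma) * w / total + self.gamma / self.n_arms
                for w in self.weights]

    def select(self):
        probs = self.probabilities()
        arm = self.rng.choices(range(self.n_arms), weights=probs)[0]
        return arm, probs[arm]

    def update(self, arm, prob_at_selection, reward):
        # Importance-weighted reward estimate; reward must lie in [0, 1].
        estimate = reward / prob_at_selection
        self.weights[arm] *= math.exp(self.gamma * estimate / self.n_arms)
        top = max(self.weights)
        self.weights = [w / top for w in self.weights]  # avoid overflow


def simulate(success_prob, delay, n_slots, seed=1):
    """Offload one task per slot; feedback returns `delay` slots later."""
    env = random.Random(seed)
    policy = DelayedExp3(len(success_prob))
    pending = deque()  # (arrival_slot, arm, prob, reward), in arrival order
    counts = [0] * len(success_prob)
    for t in range(n_slots):
        # Apply every piece of feedback that has arrived by this slot.
        while pending and pending[0][0] <= t:
            _, arm, prob, reward = pending.popleft()
            policy.update(arm, prob, reward)
        arm, prob = policy.select()
        counts[arm] += 1
        reward = 1.0 if env.random() < success_prob[arm] else 0.0
        pending.append((t + delay, arm, prob, reward))
    return counts


# Three hypothetical neighboring FNs with different chances of fast service.
counts = simulate(success_prob=[0.2, 0.5, 0.9], delay=5, n_slots=3000)
print(counts)
```

Storing the selection probability alongside each pending reward matters here: by the time delayed feedback arrives, the current probabilities have changed, and the importance weighting must use the probability that was in effect when the FN was chosen.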
