Yearim Kim

Bi-ICE: An Inner Interpretable Framework for Image Classification via Bi-directional Interactions between Concept and Input Embeddings

Nov 26, 2024

Abstract: Inner interpretability is a promising field focused on uncovering the internal mechanisms of AI systems and developing scalable, automated methods to understand these systems at a mechanistic level. While significant research has explored top-down approaches starting from high-level problems or algorithmic hypotheses and bottom-up approaches building higher-level abstractions from low-level or circuit-level descriptions, most efforts have concentrated on analyzing large language models. Moreover, limited attention has been given to applying inner interpretability to large-scale image tasks, primarily focusing on architectural and functional levels to visualize learned concepts. In this paper, we first present a conceptual framework that supports inner interpretability and multilevel analysis for large-scale image classification tasks. We introduce the Bi-directional Interaction between Concept and Input Embeddings (Bi-ICE) module, which facilitates interpretability across the computational, algorithmic, and implementation levels. This module enhances transparency by generating predictions based on human-understandable concepts, quantifying their contributions, and localizing them within the inputs. Finally, we showcase enhanced transparency in image classification, measuring concept contributions and pinpointing their locations within the inputs. Our approach highlights algorithmic interpretability by demonstrating the process of concept learning and its convergence.

Decompose the model: Mechanistic interpretability in image models with Generalized Integrated Gradients (GIG)

Sep 03, 2024

Abstract: In the field of eXplainable AI (XAI) in language models, the progression from local explanations of individual decisions to global explanations with high-level concepts has laid the groundwork for mechanistic interpretability, which aims to decode the exact operations. However, this paradigm has not been adequately explored in image models, where existing methods have primarily focused on class-specific interpretations. This paper introduces a novel approach to systematically trace the entire pathway from input through all intermediate layers to the final output across the whole dataset. We utilize Pointwise Feature Vectors (PFVs) and Effective Receptive Fields (ERFs) to decompose model embeddings into interpretable Concept Vectors. Then, we calculate the relevance between concept vectors with our Generalized Integrated Gradients (GIG), enabling a comprehensive, dataset-wide analysis of model behavior. We validate our method of concept extraction and concept attribution in both qualitative and quantitative evaluations. Our approach advances the understanding of semantic significance within image models, offering a holistic view of their operational mechanics.
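For readers unfamiliar with the attribution family that GIG builds on, the following is a minimal sketch of standard Integrated Gradients, not the paper's generalized variant, whose details the abstract does not give. The toy `model`, the all-zero baseline, and the finite-difference gradient are assumptions made only for illustration.

```python
import math

def model(x):
    """Toy differentiable scorer standing in for a real network (hypothetical)."""
    return math.tanh(0.8 * x[0] - 0.5 * x[1] + 0.3 * x[0] * x[2])

def numerical_gradient(f, x, eps=1e-5):
    """Central-difference gradient of f at x, one coordinate at a time."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def integrated_gradients(f, x, baseline, steps=200):
    """Approximate the path integral of gradients from baseline to x."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint rule along the straight-line path
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = numerical_gradient(f, point)
        total = [t + g for t, g in zip(total, grad)]
    # Scale the averaged gradient by the input difference per feature.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]

x = [1.0, 2.0, -1.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(model, x, baseline)
print(attributions)
```

A useful sanity check for this kind of method is completeness: the attributions should sum approximately to `model(x) - model(baseline)`.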

Respect the model: Fine-grained and Robust Explanation with Sharing Ratio Decomposition

Jan 25, 2024

Abstract: The truthfulness of existing explanation methods in authentically elucidating the underlying model's decision-making process has been questioned. Existing methods have deviated from faithfully representing the model and are thus susceptible to adversarial attacks. To address this, we propose a novel eXplainable AI (XAI) method called SRD (Sharing Ratio Decomposition), which faithfully reflects the model's inference process, resulting in significantly enhanced robustness in our explanations. Different from the conventional emphasis on the neuronal level, we adopt a vector perspective to consider the intricate nonlinear interactions between filters. We also introduce an interesting observation termed Activation-Pattern-Only Prediction (APOP), which lets us emphasize the importance of inactive neurons and redefine relevance to encapsulate all relevant information, including both active and inactive neurons. Our method, SRD, allows for the recursive decomposition of a Pointwise Feature Vector (PFV), providing a high-resolution Effective Receptive Field (ERF) at any layer.

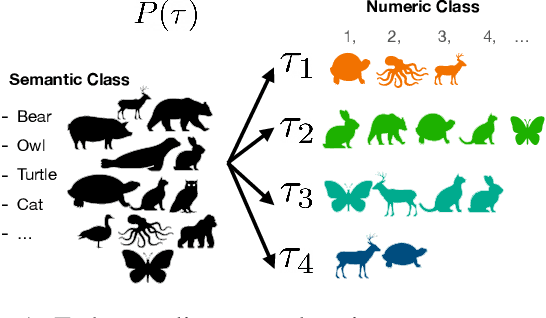

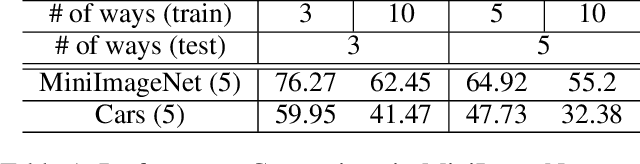

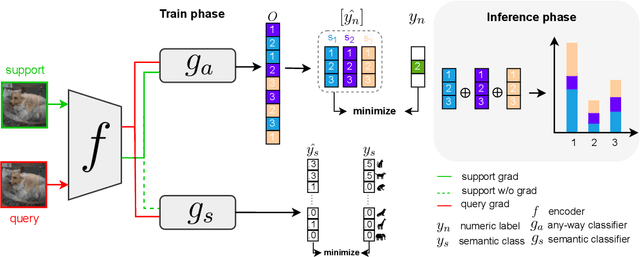

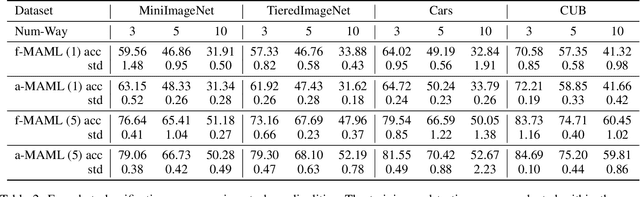

Any-Way Meta Learning

Jan 10, 2024

Abstract: Although meta-learning shows promising performance in rapid adaptation, it is constrained by fixed cardinality. When faced with tasks of varying cardinalities that were unseen during training, the model loses this adaptability. In this paper, we address this challenge by harnessing "label equivalence", which emerges from stochastic numeric label assignments during episodic task sampling. Questioning what defines "true" meta-learning, we introduce the "any-way" learning paradigm, a model training approach that frees the model from fixed cardinality constraints. Surprisingly, this model not only matches but often outperforms traditional fixed-way models in terms of performance, convergence speed, and stability. This disrupts established notions about domain generalization. Furthermore, we argue that the inherent label equivalence naturally lacks semantic information. To bridge this semantic information gap, we further propose a mechanism for infusing semantic class information into the model, which enhances the model's comprehension and functionality. Experiments on well-known architectures such as MAML and ProtoNet affirm the effectiveness of our method.
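The idea of stochastic numeric label assignment during episodic sampling can be illustrated with a small sketch. This is not the paper's training procedure; the function names, the toy dataset, and the uniform choice of cardinality per episode are all assumptions made for illustration.

```python
import random

def sample_episode(class_to_items, n_way, k_shot, rng):
    """Sample an n-way, k-shot support set with randomly assigned numeric labels."""
    classes = rng.sample(sorted(class_to_items), n_way)
    numeric_labels = list(range(n_way))
    # Random class-to-label mapping: the same class may receive a different
    # numeric label in every episode, so the labels carry no semantics.
    rng.shuffle(numeric_labels)
    label_of = dict(zip(classes, numeric_labels))
    support = []
    for cls in classes:
        for item in rng.sample(class_to_items[cls], k_shot):
            support.append((item, label_of[cls]))
    return support, label_of

def sample_any_way_episode(class_to_items, min_way, max_way, k_shot, rng):
    """Hypothetical variable-cardinality sampler: draw n_way anew per episode."""
    n_way = rng.randint(min_way, max_way)
    return sample_episode(class_to_items, n_way, k_shot, rng)

rng = random.Random(0)
# Toy dataset: 6 classes with 5 placeholder items each (illustrative only).
data = {f"class_{i}": [f"img_{i}_{j}" for j in range(5)] for i in range(6)}
support, label_of = sample_any_way_episode(data, 2, 5, 2, rng)
print(len(label_of), "way episode:", label_of)
```

Because the numeric labels are reshuffled per episode, any output slot can stand for any class, which is one way to read the "label equivalence" the abstract refers to.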

Fast Sun-aligned Outdoor Scene Relighting based on TensoRF

Nov 07, 2023

Abstract: In this work, we introduce our method of outdoor scene relighting for Neural Radiance Fields (NeRF) named Sun-aligned Relighting TensoRF (SR-TensoRF). SR-TensoRF offers a lightweight and rapid pipeline aligned with the sun, thereby achieving a simplified workflow that eliminates the need for environment maps. Our sun-alignment strategy is motivated by the insight that shadows, unlike viewpoint-dependent albedo, are determined by light direction. We directly use the sun direction as an input during shadow generation, simplifying the requirements of the inference process significantly. Moreover, SR-TensoRF leverages the training efficiency of TensoRF by incorporating our proposed cubemap concept, resulting in notable acceleration in both training and rendering processes compared to existing methods.
