Yasir Zaki

Self-Regulating Cars: Automating Traffic Control in Free Flow Road Networks

Jun 13, 2025

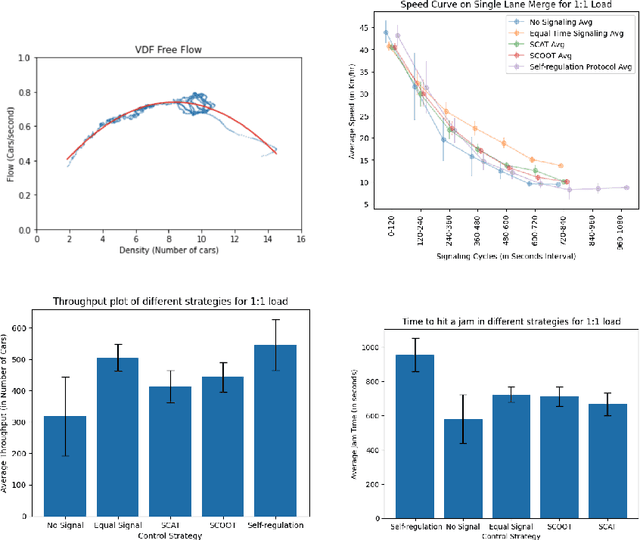

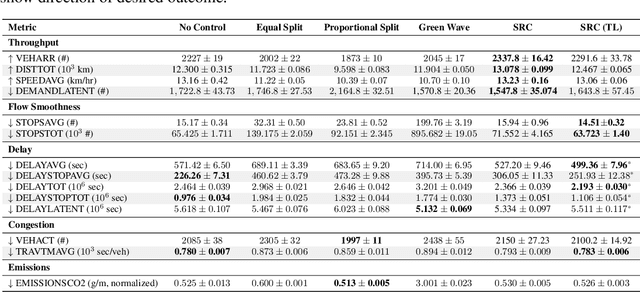

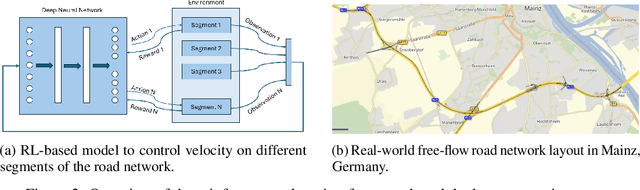

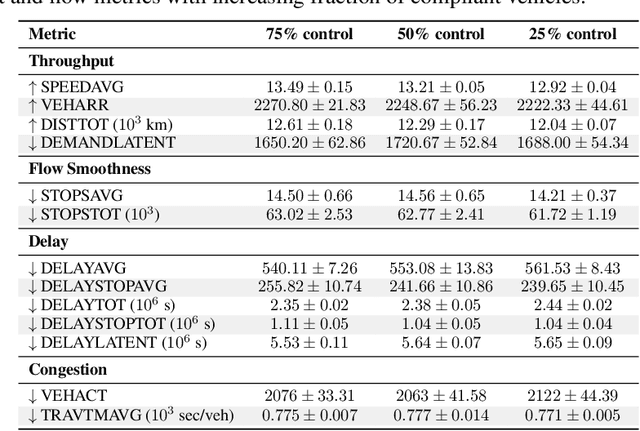

Abstract: Free-flow road networks, such as suburban highways, are increasingly experiencing traffic congestion due to growing commuter inflow and limited infrastructure. Traditional control mechanisms, such as traffic signals or local heuristics, are ineffective or infeasible in these high-speed, signal-free environments. We introduce self-regulating cars, a reinforcement learning-based traffic control protocol that dynamically modulates vehicle speeds to optimize throughput and prevent congestion, without requiring new physical infrastructure. Our approach integrates classical traffic flow theory, gap acceptance models, and microscopic simulation into a physics-informed RL framework. By abstracting roads into super-segments, the agent captures emergent flow dynamics and learns robust speed modulation policies from instantaneous traffic observations. Evaluated in the high-fidelity PTV Vissim simulator on a real-world highway network, our method improves total throughput by 5%, reduces average delay by 13%, and decreases total stops by 3% compared to the no-control setting. It also achieves smoother, congestion-resistant flow while generalizing across varied traffic patterns, demonstrating its potential for scalable, ML-driven traffic management.

Data Doping or True Intelligence? Evaluating the Transferability of Injected Knowledge in LLMs

May 22, 2025

Abstract: As the knowledge of large language models (LLMs) becomes outdated over time, there is a growing need for efficient methods to update them, especially when injecting proprietary information. Our study reveals that comprehension-intensive fine-tuning tasks (e.g., question answering and fill-in-the-blank) achieve substantially higher knowledge retention rates (48%) than mapping-oriented tasks such as translation (17%) or text-to-JSON conversion (20%), despite exposure to identical factual content. We demonstrate that this pattern persists across model architectures and follows scaling laws, with larger models showing improved retention across all task types. However, all models exhibit significant performance drops when applying injected knowledge in broader contexts, suggesting limited semantic integration. These findings underline the importance of task selection in updating LLM knowledge: effective knowledge injection relies not just on data exposure but on the depth of cognitive engagement during fine-tuning.

Large Language Models are often politically extreme, usually ideologically inconsistent, and persuasive even in informational contexts

May 07, 2025

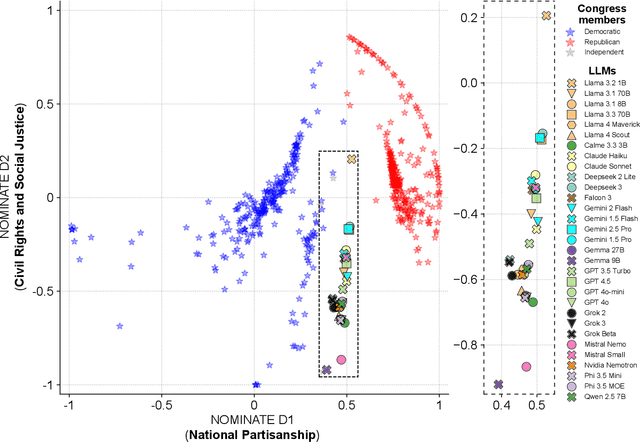

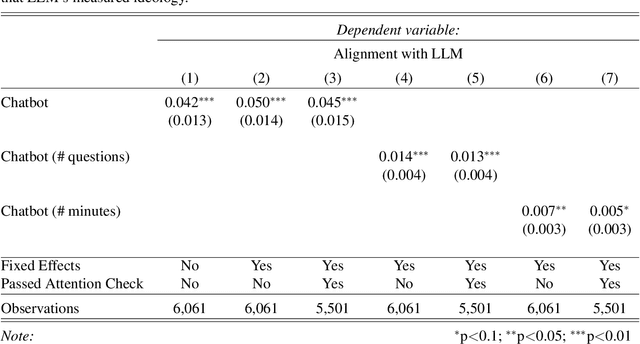

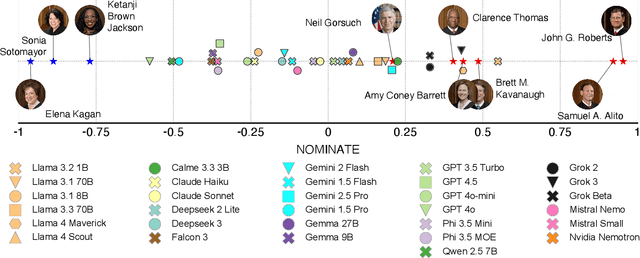

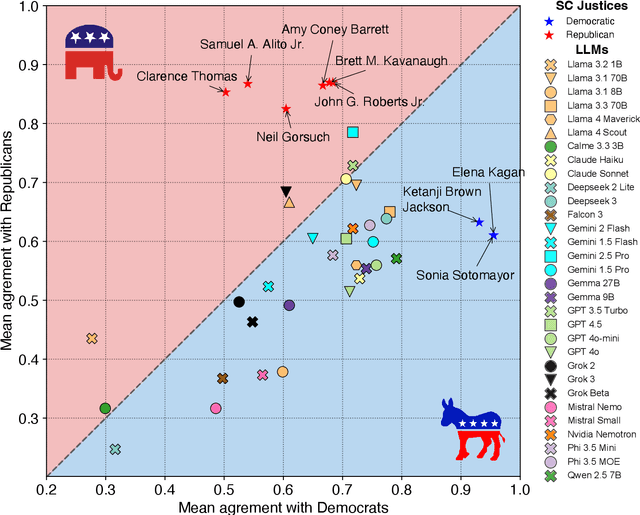

Abstract: Large Language Models (LLMs) are a transformational technology, fundamentally changing how people obtain information and interact with the world. As people become increasingly reliant on them for an enormous variety of tasks, a body of academic research has developed to examine these models for inherent biases, especially political biases, often finding them small. We challenge this prevailing wisdom. First, by comparing 31 LLMs to legislators, judges, and a nationally representative sample of U.S. voters, we show that LLMs' apparently small overall partisan preference is the net result of offsetting extreme views on specific topics, much like moderate voters. Second, in a randomized experiment, we show that LLMs can translate their preferences into political persuasion even in information-seeking contexts: voters randomized to discuss political issues with an LLM chatbot are as much as 5 percentage points more likely to express the same preferences as that chatbot. Contrary to expectations, these persuasive effects are not moderated by familiarity with LLMs, news consumption, or interest in politics. LLMs, especially those controlled by private companies or governments, may become a powerful and targeted vector for political influence.

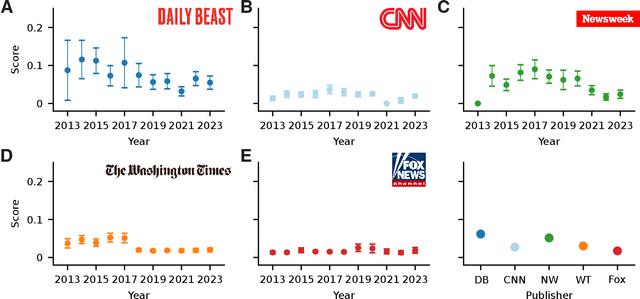

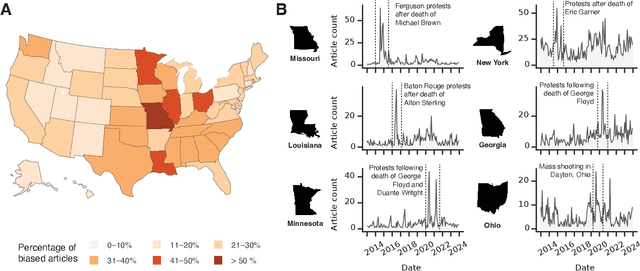

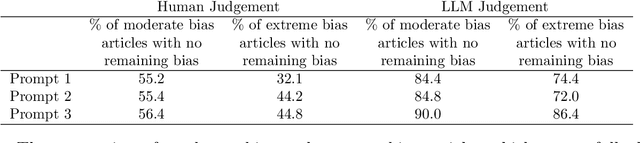

Neutralizing the Narrative: AI-Powered Debiasing of Online News Articles

Apr 04, 2025

Abstract: Bias in news reporting significantly impacts public perception, particularly regarding crime, politics, and societal issues. Traditional bias detection methods, predominantly reliant on human moderation, suffer from subjective interpretations and scalability constraints. Here, we introduce an AI-driven framework leveraging advanced large language models (LLMs), specifically GPT-4o, GPT-4o Mini, Gemini Pro, Gemini Flash, Llama 8B, and Llama 3B, to systematically identify and mitigate biases in news articles. To this end, we collect an extensive dataset consisting of over 30,000 crime-related articles from five politically diverse news sources spanning a decade (2013-2023). Our approach employs a two-stage methodology: (1) bias detection, where each LLM scores and justifies biased content at the paragraph level, validated through human evaluation for ground truth establishment, and (2) iterative debiasing using GPT-4o Mini, verified by both automated reassessment and human reviewers. Empirical results indicate GPT-4o Mini's superior accuracy in bias detection and effectiveness in debiasing. Furthermore, our analysis reveals temporal and geographical variations in media bias correlating with socio-political dynamics and real-world events. This study contributes to scalable computational methodologies for bias mitigation, promoting fairness and accountability in news reporting.

PixLift: Accelerating Web Browsing via AI Upscaling

Feb 13, 2025

Abstract: Accessing the internet in regions with expensive data plans and limited connectivity poses significant challenges, restricting information access and economic growth. Images, as a major contributor to webpage sizes, exacerbate this issue, despite advances in compression formats like WebP and AVIF. The continued growth of complex and curated web content, coupled with suboptimal optimization practices in many regions, has prevented meaningful reductions in web page sizes. This paper introduces PixLift, a novel solution to reduce webpage sizes by downscaling their images during transmission and leveraging AI models on user devices to upscale them. By trading computational resources for bandwidth, PixLift enables more affordable and inclusive web access. We address key challenges, including the feasibility of scaled image requests on popular websites, the implementation of PixLift as a browser extension, and its impact on user experience. Through the analysis of 71.4k webpages, evaluations of three mainstream upscaling models, and a user study, we demonstrate PixLift's ability to significantly reduce data usage without compromising image quality, fostering a more equitable internet.

Advancing Vehicle Plate Recognition: Multitasking Visual Language Models with VehiclePaliGemma

Dec 14, 2024

Abstract: License plate recognition (LPR) involves automated systems that utilize cameras and computer vision to read vehicle license plates. Such plates collected through LPR can then be compared against databases to identify stolen vehicles, uninsured drivers, crime suspects, and more. The LPR system plays a significant role in saving time for institutions such as the police force. In the past, LPR relied heavily on Optical Character Recognition (OCR), which has been widely explored to recognize characters in images. Usually, collected plate images suffer from various limitations, including noise, blurring, weather conditions, and closely spaced characters, making recognition complex. Existing LPR methods still require significant improvement, especially for distorted images. To fill this gap, we propose utilizing visual language models (VLMs) such as OpenAI GPT4o, Google Gemini 1.5, Google PaliGemma (Pathways Language and Image model + Gemma model), Meta Llama 3.2, Anthropic Claude 3.5 Sonnet, LLaVA, NVIDIA VILA, and moondream2 to recognize such unclear plates with closely spaced characters. This paper evaluates the VLMs' capability to address the aforementioned problems. Additionally, we introduce "VehiclePaliGemma", a fine-tuned open-source PaliGemma VLM designed to recognize plates under challenging conditions. We compared our proposed VehiclePaliGemma with state-of-the-art methods and other VLMs using a dataset of Malaysian license plates collected under complex conditions. The results indicate that VehiclePaliGemma achieved superior performance with an accuracy of 87.6%. Moreover, it is able to predict the car's plate at a speed of 7 frames per second using an A100-80GB GPU. Finally, we explored the multitasking capability of the VehiclePaliGemma model to accurately identify plates in images containing multiple cars of various models and colors, with plates positioned and oriented in different directions.

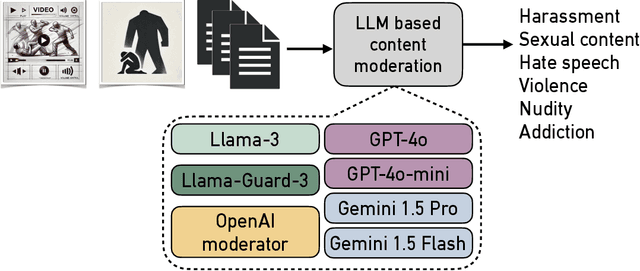

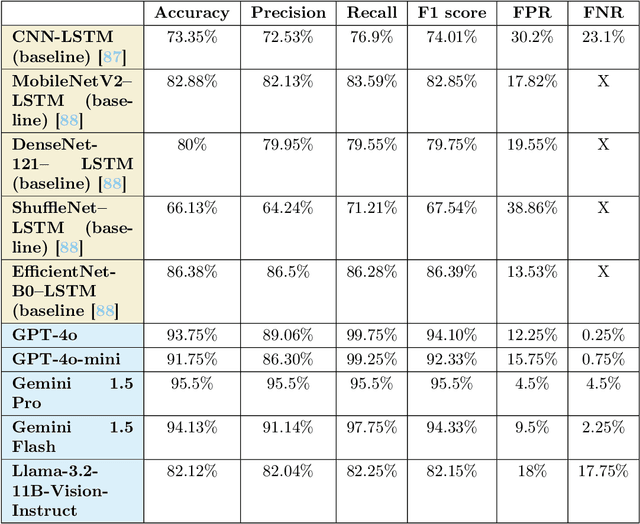

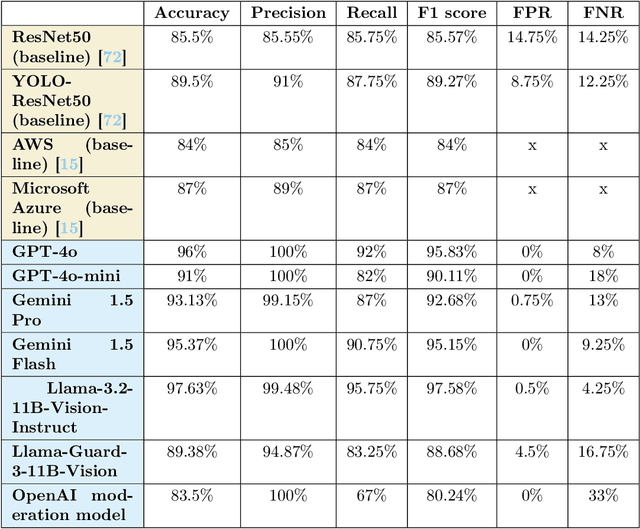

Advancing Content Moderation: Evaluating Large Language Models for Detecting Sensitive Content Across Text, Images, and Videos

Nov 26, 2024

Abstract: The widespread dissemination of hate speech, harassment, harmful and sexual content, and violence across websites and media platforms presents substantial challenges and provokes widespread concern among different sectors of society. Governments, educators, and parents are often at odds with media platforms about how to regulate, control, and limit the spread of such content. Technologies for detecting and censoring such media content are a key solution to addressing these challenges. Techniques from natural language processing and computer vision have been used widely to automatically identify and filter out sensitive content such as offensive language, violence, nudity, and addiction across text, images, and videos, enabling platforms to enforce content policies at scale. However, existing methods still have limitations in achieving high detection accuracy with fewer false positives and false negatives. Therefore, more sophisticated algorithms for understanding the context of both text and images may open room for improvement in content censorship and help build a more efficient censorship system. In this paper, we evaluate existing LLM-based content moderation solutions such as the OpenAI moderation model and Llama-Guard3 and study their capabilities to detect sensitive content. Additionally, we explore recent LLMs such as GPT, Gemini, and Llama in identifying inappropriate content across media outlets. Various textual and visual datasets, including X tweets, Amazon reviews, news articles, human photos, cartoons, sketches, and violent videos, have been utilized for evaluation and comparison. The results demonstrate that LLMs outperform traditional techniques by achieving higher accuracy and lower false positive and false negative rates. This highlights the potential to integrate LLMs into websites, social media platforms, and video-sharing services for regulatory and content moderation purposes.

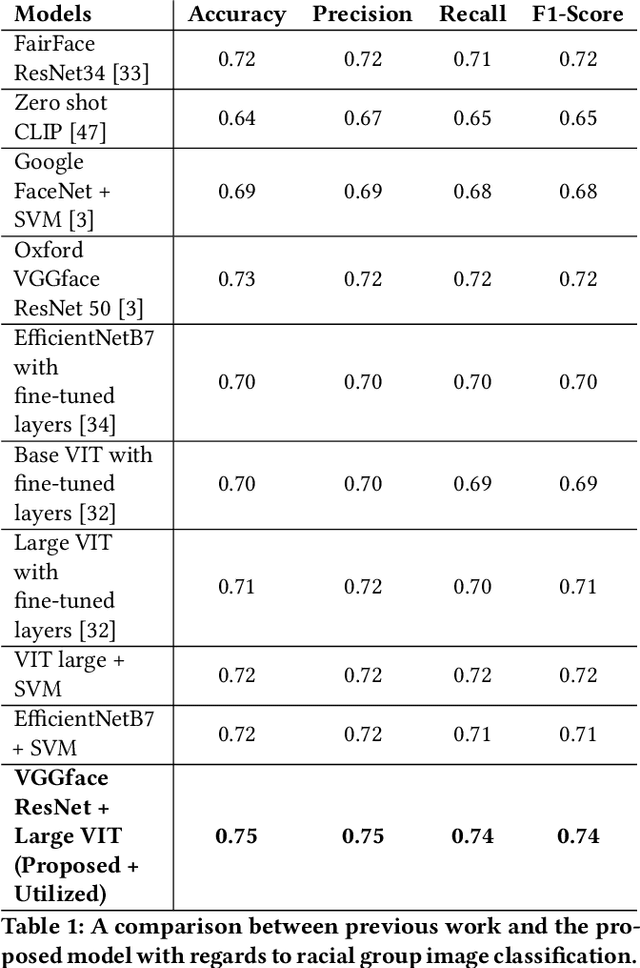

Exploring Vision Language Models for Facial Attribute Recognition: Emotion, Race, Gender, and Age

Oct 31, 2024

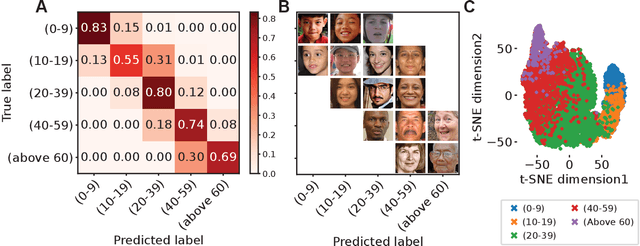

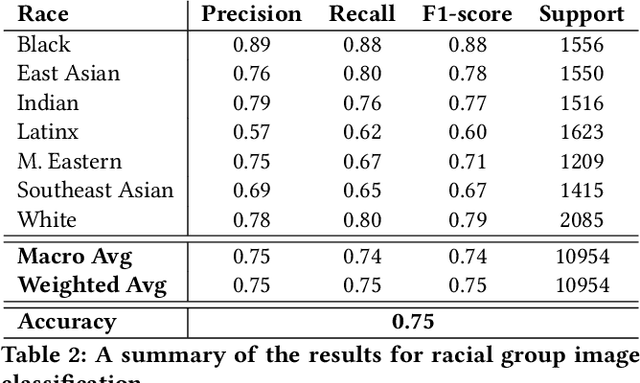

Abstract: Technologies for recognizing facial attributes like race, gender, age, and emotion have several applications, such as surveillance, advertising content, sentiment analysis, and the study of demographic trends and social behaviors. Analyzing demographic characteristics and facial expressions from images poses several challenges due to the complexity of human facial attributes. Traditional approaches have employed CNNs and various other deep learning techniques, trained on extensive collections of labeled images. While these methods demonstrated effective performance, there remains potential for further enhancements. In this paper, we propose to utilize vision language models (VLMs) such as generative pre-trained transformer (GPT), Gemini, large language and vision assistant (LLaVA), PaliGemma, and Microsoft Florence2 to recognize facial attributes such as race, gender, age, and emotion from images with human faces. Various datasets like FairFace, AffectNet, and UTKFace have been utilized to evaluate the solutions. The results show that VLMs are competitive with, if not superior to, traditional techniques. Additionally, we propose "FaceScanPaliGemma", a fine-tuned PaliGemma model, for race, gender, age, and emotion recognition. The results show an accuracy of 81.1%, 95.8%, 80%, and 59.4% for race, gender, age group, and emotion classification, respectively, outperforming the pre-trained version of PaliGemma, other VLMs, and SotA methods. Finally, we propose "FaceScanGPT", a GPT-4o model that recognizes the above attributes when several individuals are present in the image, using a prompt engineered for a person with specific facial and/or physical attributes. The results underscore the superior multitasking capability of FaceScanGPT to detect an individual's attributes, such as haircut, clothing color, and posture, using only a prompt to drive the detection and recognition tasks.

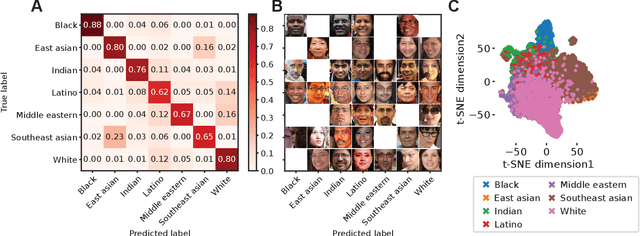

A Longitudinal Analysis of Racial and Gender Bias in New York Times and Fox News Images and Articles

Oct 29, 2024

Abstract: The manner in which different racial and gender groups are portrayed in news coverage plays a large role in shaping public opinion. As such, understanding how such groups are portrayed in news media is of notable societal value, and has thus been a significant endeavour in both the computer and social sciences. Yet, the literature still lacks a longitudinal study examining both the frequency of appearance of different racial and gender groups in online news articles, as well as the context in which such groups are discussed. To fill this gap, we propose two machine learning classifiers to detect the race and age of a given subject. Next, we compile a dataset of 123,337 images and 441,321 online news articles from New York Times (NYT) and Fox News (Fox), and examine representation through two computational approaches. Firstly, we examine the frequency and prominence of appearance of racial and gender groups in images embedded in news articles, revealing that racial and gender minorities are largely under-represented, and when they do appear, they are featured less prominently compared to majority groups. Furthermore, we find that NYT largely features more images of racial minority groups compared to Fox. Secondly, we examine both the frequency and context with which racial minority groups are presented in article text. This reveals the narrow scope in which certain racial groups are covered and the frequency with which different groups are presented as victims and/or perpetrators in a given conflict. Taken together, our analysis contributes to the literature by providing two novel open-source classifiers to detect race and age from images, and shedding light on the racial and gender biases in news articles from venues on opposite ends of the American political spectrum.

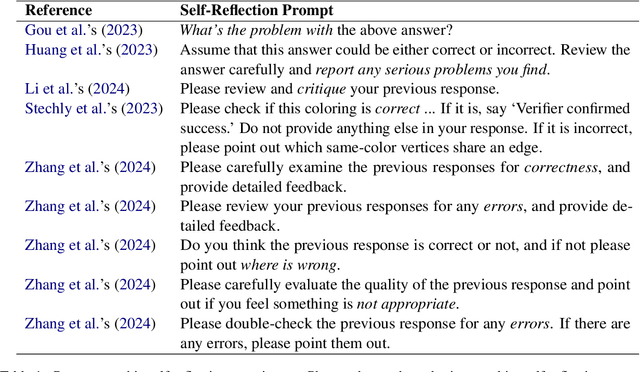

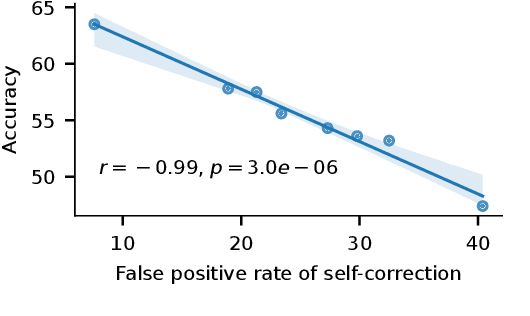

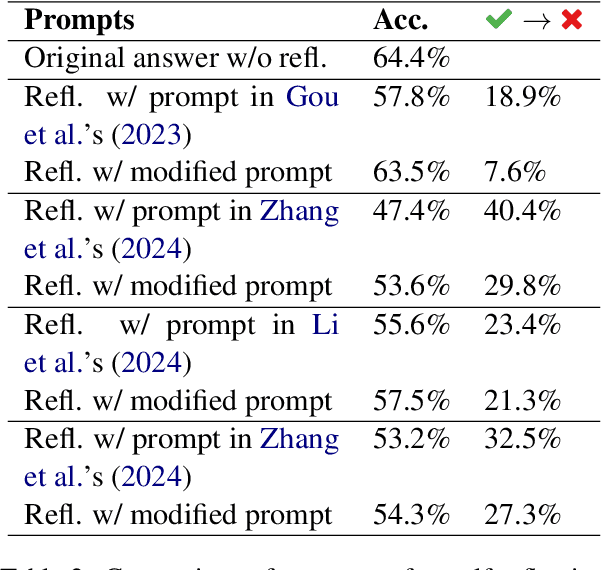

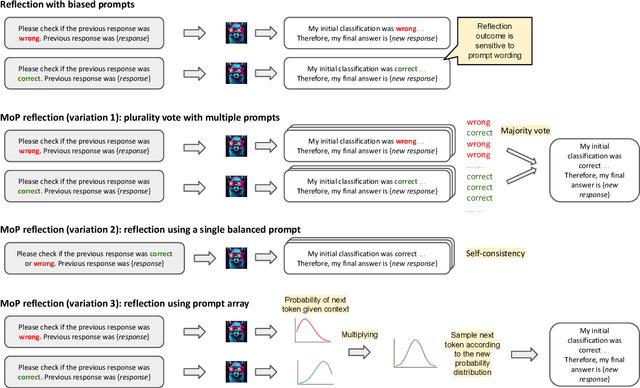

Self-Reflection Outcome is Sensitive to Prompt Construction

Jun 14, 2024

Abstract: Large language models (LLMs) demonstrate impressive zero-shot and few-shot reasoning capabilities. Some propose that such capabilities can be improved through self-reflection, i.e., letting LLMs reflect on their own output to identify and correct mistakes in the initial responses. However, despite some evidence showing the benefits of self-reflection, recent studies offer mixed results. Here, we aim to reconcile these conflicting findings by first demonstrating that the outcome of self-reflection is sensitive to prompt wording; e.g., LLMs are more likely to conclude that they have made a mistake when explicitly prompted to find mistakes. Consequently, idiosyncrasies in reflection prompts may lead LLMs to change correct responses unnecessarily. We show that most prompts used in the self-reflection literature are prone to this bias. We then propose different ways of constructing prompts that are conservative in identifying mistakes and show that self-reflection using such prompts results in higher accuracy. Our findings highlight the importance of prompt engineering in self-reflection tasks. We release our code at https://github.com/Michael98Liu/mixture-of-prompts.
