Yashraj S. Narang

DexYCB: A Benchmark for Capturing Hand Grasping of Objects

Apr 09, 2021

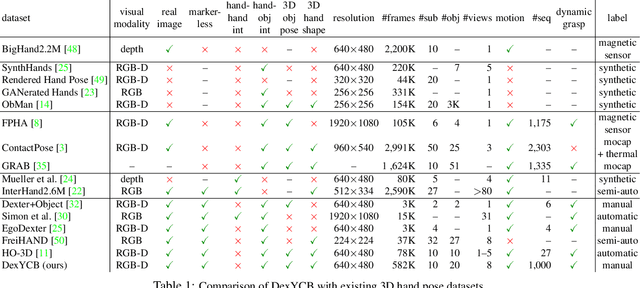

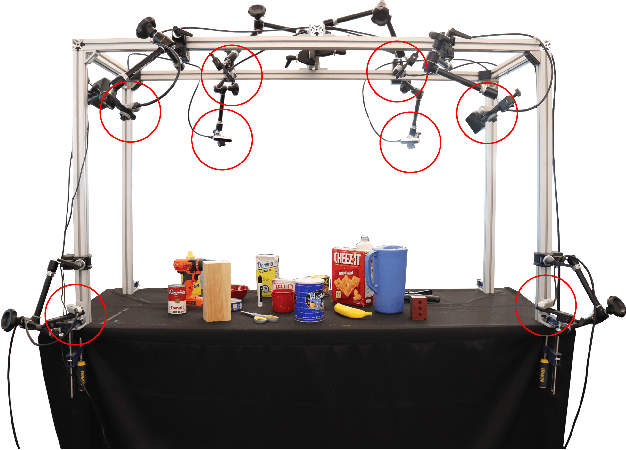

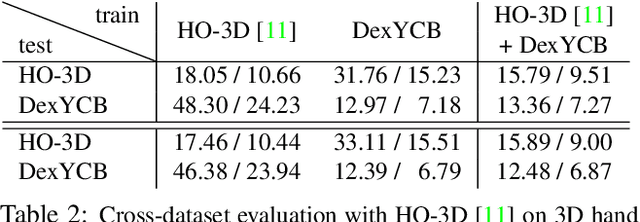

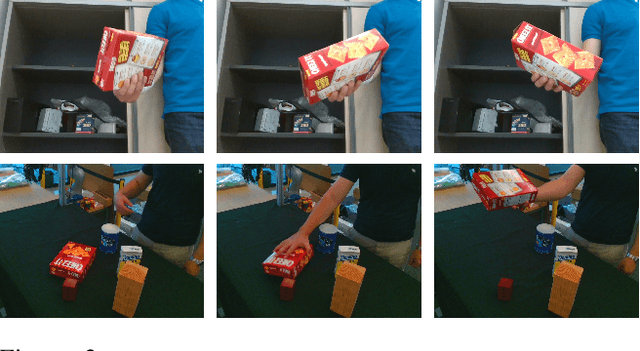

Abstract: We introduce DexYCB, a new dataset for capturing hand grasping of objects. We first compare DexYCB with a related dataset through cross-dataset evaluation. We then present a thorough benchmark of state-of-the-art approaches on three relevant tasks: 2D object and keypoint detection, 6D object pose estimation, and 3D hand pose estimation. Finally, we evaluate a new robotics-relevant task: generating safe robot grasps in human-to-robot object handover. Dataset and code are available at https://dex-ycb.github.io.

Interpreting and Predicting Tactile Signals for the SynTouch BioTac

Jan 14, 2021

Abstract: In the human hand, high-density contact information provided by afferent neurons is essential for many human grasping and manipulation capabilities. In contrast, robotic tactile sensors, including the state-of-the-art SynTouch BioTac, are typically used to provide low-density contact information, such as contact location, center of pressure, and net force. Although useful, these data do not convey or leverage the rich information content that some tactile sensors naturally measure. This research extends robotic tactile sensing beyond reduced-order models through 1) the automated creation of a precise experimental tactile dataset for the BioTac over a diverse range of physical interactions, 2) a 3D finite element (FE) model of the BioTac, which complements the experimental dataset with high-density, distributed contact data, 3) neural-network-based mappings from raw BioTac signals to not only low-dimensional experimental data, but also high-density FE deformation fields, and 4) mappings from the FE deformation fields to the raw signals themselves. The high-density data streams can provide a far greater quantity of interpretable information for grasping and manipulation algorithms than previously accessible.

Interpreting and Predicting Tactile Signals via a Physics-Based and Data-Driven Framework

Jun 06, 2020

Abstract: High-density afferents in the human hand have long been regarded as essential for human grasping and manipulation abilities. In contrast, robotic tactile sensors are typically used to provide low-density contact data, such as center of pressure and resultant force. Although useful, these data do not exploit the rich information content that some tactile sensors (e.g., the SynTouch BioTac) naturally provide. This research extends robotic tactile sensing beyond reduced-order models through 1) the automated creation of a precise tactile dataset for the BioTac over diverse physical interactions, 2) a 3D finite element (FE) model of the BioTac, which complements the experimental dataset with high-resolution, distributed contact data, and 3) neural-network-based mappings from raw BioTac signals to low-dimensional experimental data and, more importantly, high-density FE deformation fields. These data streams can provide a far greater quantity of interpretable information for grasping and manipulation algorithms than previously accessible.
