Xun Sun

On the Evolution of Knowledge Graphs: A Survey and Perspective

Oct 10, 2023

Abstract: Knowledge graphs (KGs) are structured representations of diversified knowledge. They are widely used in various intelligent applications. In this article, we provide a comprehensive survey on the evolution of various types of knowledge graphs (i.e., static KGs, dynamic KGs, temporal KGs, and event KGs) and techniques for knowledge extraction and reasoning. Furthermore, we introduce the practical applications of different types of KGs, including a case study in financial analysis. Finally, we propose our perspective on the future directions of knowledge engineering, including the potential of combining the power of knowledge graphs and large language models (LLMs), and the evolution of knowledge extraction, reasoning, and representation.

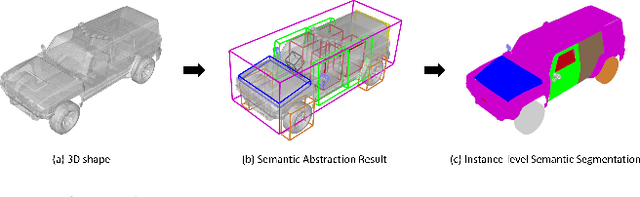

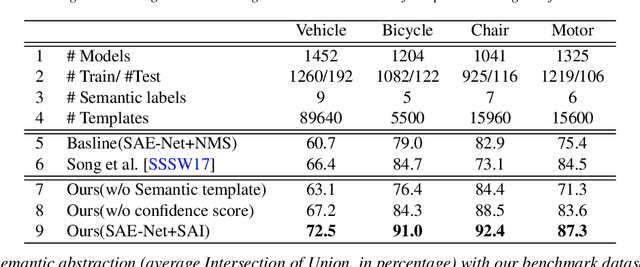

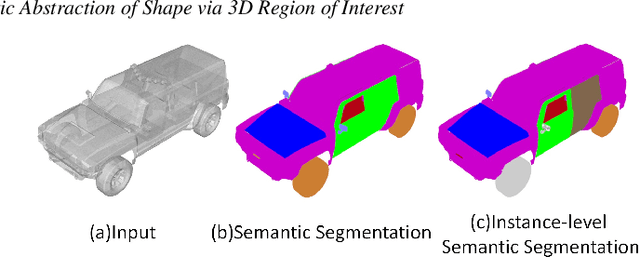

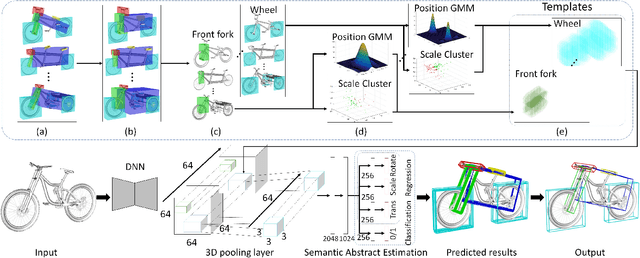

Learning Semantic Abstraction of Shape via 3D Region of Interest

Jan 13, 2022

Abstract: In this paper, we focus on the two tasks of 3D shape abstraction and semantic analysis. This is in contrast to current methods, which focus solely on either 3D shape abstraction or semantic analysis. In addition, previous methods have had difficulty producing instance-level semantic results, which has limited their application. We present a novel method for the joint estimation of a 3D shape abstraction and semantic analysis. Our approach first generates a number of 3D semantic candidate regions for a 3D shape; we then employ these candidates to directly predict the semantic categories and refine the parameters of the candidate regions simultaneously using a deep convolutional neural network. Finally, we design an algorithm to fuse the predicted results and obtain the final semantic abstraction, which is shown to be an improvement over standard non-maximum suppression. Experimental results demonstrate that our approach can produce state-of-the-art results. Moreover, we also find that our results can be easily applied to instance-level semantic part segmentation and shape matching.

Learning Fine-Grained Segmentation of 3D Shapes without Part Labels

Mar 24, 2021

Abstract: Learning-based 3D shape segmentation is usually formulated as a semantic labeling problem, assuming that all parts of training shapes are annotated with a given set of tags. This assumption, however, is impractical for learning fine-grained segmentation. Although most off-the-shelf CAD models are, by construction, composed of fine-grained parts, they usually lack semantic tags, and labeling those fine-grained parts is extremely tedious. We approach the problem with deep clustering, where the key idea is to learn part priors from a shape dataset with fine-grained segmentation but no part labels. Given point-sampled 3D shapes, we model the clustering priors of points with a similarity matrix and achieve part segmentation by minimizing a novel low-rank loss. To handle very densely sampled point sets, we adopt a divide-and-conquer strategy. We partition the large point set into a number of blocks. Each block is segmented using a deep-clustering-based part prior network trained in a category-agnostic manner. We then train a graph convolution network to merge the segments of all blocks to form the final segmentation result. Our method is evaluated on a challenging benchmark of fine-grained segmentation, showing state-of-the-art performance.
