Xinshun Wang

Superman: Unifying Skeleton and Vision for Human Motion Perception and Generation

Feb 02, 2026

Abstract: Human motion analysis tasks, such as temporal 3D pose estimation, motion prediction, and motion in-betweening, play an essential role in computer vision. However, current paradigms suffer from severe fragmentation. First, the field is split between "perception" models that understand motion from video but only output text, and "generation" models that cannot perceive from raw visual input. Second, generative MLLMs are often limited to single-frame, static poses using dense, parametric SMPL models, failing to handle temporal motion. Third, existing motion vocabularies are built from skeleton data alone, severing the link to the visual domain. To address these challenges, we introduce Superman, a unified framework that bridges visual perception with temporal, skeleton-based motion generation. Our solution is twofold. First, to overcome the modality disconnect, we propose a Vision-Guided Motion Tokenizer. Leveraging the natural geometric alignment between 3D skeletons and visual data, this module pioneers robust joint learning from both modalities, creating a unified, cross-modal motion vocabulary. Second, grounded in this motion language, a single, unified MLLM architecture is trained to handle all tasks. This module flexibly processes diverse, temporal inputs, unifying 3D skeleton pose estimation from video (perception) with skeleton-based motion prediction and in-betweening (generation). Extensive experiments on standard benchmarks, including Human3.6M, demonstrate that our unified method achieves state-of-the-art or competitive performance across all motion tasks. This showcases a more efficient and scalable path for generative motion analysis using skeletons.

Human-in-Context: Unified Cross-Domain 3D Human Motion Modeling via In-Context Learning

Aug 14, 2025

Abstract: This paper aims to model 3D human motion across domains, where a single model is expected to handle multiple modalities, tasks, and datasets. Existing cross-domain models often rely on domain-specific components and multi-stage training, which limits their practicality and scalability. To overcome these challenges, we propose a new setting in which a unified cross-domain model is trained through a single process, eliminating the need for domain-specific components and multi-stage training. We first introduce Pose-in-Context (PiC), which leverages in-context learning to create a pose-centric cross-domain model. While PiC generalizes across multiple pose-based tasks and datasets, it encounters difficulties with modality diversity, prompting strategy, and contextual dependency handling. We thus propose Human-in-Context (HiC), an extension of PiC that broadens generalization across modalities, tasks, and datasets. HiC combines pose and mesh representations within a unified framework, expands task coverage, and incorporates larger-scale datasets. Additionally, HiC introduces a max-min similarity prompt sampling strategy to enhance generalization across diverse domains and a network architecture with dual-branch context injection for improved handling of contextual dependencies. Extensive experimental results show that HiC performs better than PiC in terms of generalization, data scale, and performance across a wide range of domains. These results demonstrate the potential of HiC for building a unified cross-domain 3D human motion model with improved flexibility and scalability. The source code and models are available at https://github.com/BradleyWang0416/Human-in-Context.

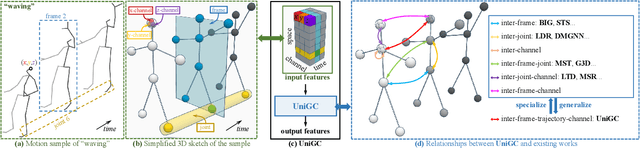

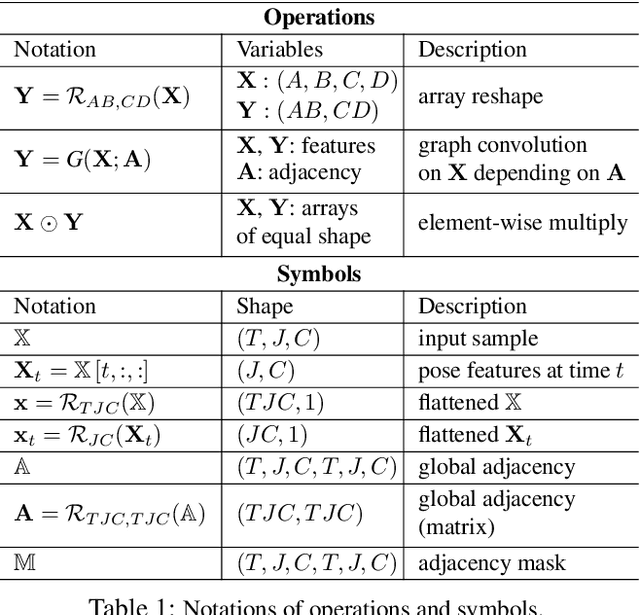

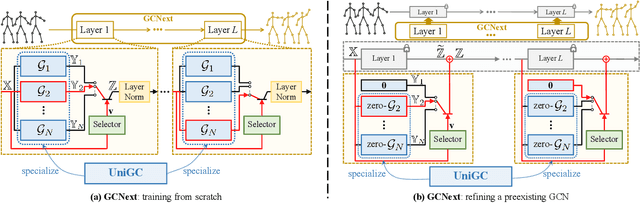

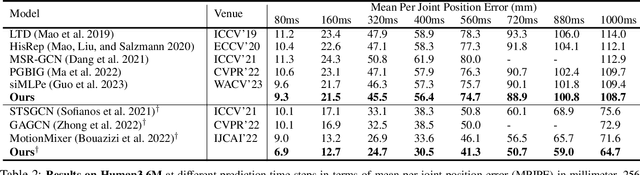

GCNext: Towards the Unity of Graph Convolutions for Human Motion Prediction

Dec 19, 2023

Abstract: The past few years have witnessed the dominance of Graph Convolutional Networks (GCNs) in human motion prediction. Various styles of graph convolutions have been proposed, with each one meticulously designed and incorporated into a carefully crafted network architecture. This paper breaks the limits of existing knowledge by proposing Universal Graph Convolution (UniGC), a novel graph convolution concept that re-conceptualizes different graph convolutions as its special cases. Leveraging UniGC at the network level, we propose GCNext, a novel GCN-building paradigm that dynamically determines the best-fitting graph convolutions both sample-wise and layer-wise. GCNext offers multiple use cases, including training a new GCN from scratch or refining a preexisting GCN. Experiments on the Human3.6M, AMASS, and 3DPW datasets show that, by incorporating unique module-to-network designs, GCNext yields up to 9x lower computational cost than existing GCN methods, on top of achieving state-of-the-art performance.

Skeleton-in-Context: Unified Skeleton Sequence Modeling with In-Context Learning

Dec 06, 2023

Abstract: In-context learning provides a new perspective for multi-task modeling for vision and NLP. Under this setting, the model can perceive tasks from prompts and accomplish them without any extra task-specific head predictions or model fine-tuning. However, skeleton sequence modeling via in-context learning remains unexplored. Directly applying existing in-context models from other areas to skeleton sequences fails because inter-frame and cross-task pose similarity makes it exceptionally hard to perceive the task correctly from a subtle context. To address this challenge, we propose Skeleton-in-Context (SiC), an effective framework for in-context skeleton sequence modeling. Our SiC is able to handle multiple skeleton-based tasks simultaneously after a single training process and accomplish each task from context according to the given prompt. It can further generalize to new, unseen tasks according to customized prompts. To facilitate context perception, we additionally propose a task-unified prompt, which adaptively learns tasks of different natures, such as partial joint-level generation, sequence-level prediction, or 2D-to-3D motion prediction. We conduct extensive experiments to evaluate the effectiveness of our SiC on multiple tasks, including motion prediction, pose estimation, joint completion, and future pose estimation. We also evaluate its generalization capability on unseen tasks such as motion-in-between. These experiments show that our model achieves state-of-the-art multi-task performance and even outperforms single-task methods on certain tasks.

Dynamic Dense Graph Convolutional Network for Skeleton-based Human Motion Prediction

Nov 29, 2023

Abstract: Graph Convolutional Networks (GCNs), which typically follow a neural message passing framework to model dependencies among skeletal joints, have achieved great success in the skeleton-based human motion prediction task. Nevertheless, how to construct a graph from a skeleton sequence and how to perform message passing on the graph are still open problems, which severely affect the performance of GCNs. To solve both problems, this paper presents a Dynamic Dense Graph Convolutional Network (DD-GCN), which constructs a dense graph and implements an integrated dynamic message passing. More specifically, we construct a dense graph with 4D adjacency modeling as a comprehensive representation of a motion sequence at different levels of abstraction. Based on the dense graph, we propose a dynamic message passing framework that learns dynamically from data to generate distinctive messages reflecting sample-specific relevance among nodes in the graph. Extensive experiments on the benchmark Human3.6M and CMU Mocap datasets verify the effectiveness of our DD-GCN, which clearly outperforms state-of-the-art GCN-based methods, especially under the long-term protocol and our proposed extremely long-term protocol.

Learning Snippet-to-Motion Progression for Skeleton-based Human Motion Prediction

Jul 26, 2023

Abstract: Existing Graph Convolutional Networks for human motion prediction largely adopt a one-step scheme, which outputs the prediction directly from the history input and fails to exploit human motion patterns. We observe that human motions have transitional patterns and can be split into snippets representative of each transition. Each snippet can be reconstructed from its starting and ending poses, referred to as the transitional poses. We propose a snippet-to-motion multi-stage framework that breaks motion prediction into sub-tasks that are easier to accomplish. Each sub-task integrates three modules: transitional pose prediction, snippet reconstruction, and snippet-to-motion prediction. Specifically, we propose to first predict only the transitional poses. Then we use them to reconstruct the corresponding snippets, obtaining a close approximation to the true motion sequence. Finally, we refine them to produce the final prediction output. To implement the network, we propose a novel unified graph modeling, which allows for more direct and effective feature propagation than existing approaches that rely on separate space-time modeling. Extensive experiments on the Human3.6M, CMU Mocap, and 3DPW datasets verify the effectiveness of our method, which achieves state-of-the-art performance.

A Mixer Layer is Worth One Graph Convolution: Unifying MLP-Mixers and GCNs for Human Motion Prediction

Apr 07, 2023

Abstract: The past few years have witnessed the dominance of Graph Convolutional Networks (GCNs) in human motion prediction, yet their performance is still far from satisfactory. Recently, MLP-Mixers have shown competitive results while being simpler and more efficient. To extract features, GCNs typically follow an aggregate-and-update paradigm, while Mixers rely on token mixing and channel mixing operations. The two research paths have been independently established in the community. In this paper, we develop a novel perspective by unifying Mixers and GCNs. We show that a mixer layer can be seen as a graph convolutional layer applied to a fully-connected graph with parameterized adjacency. Extending this theoretical finding to the practical side, we propose Meta-Mixing Network (M$^2$-Net). Assisted by a novel zero aggregation operation, our network is capable of capturing both the structure-agnostic and the structure-sensitive dependencies in a collaborative manner. Not only is it computationally efficient, but most importantly, it also achieves state-of-the-art performance. An extensive evaluation on the Human3.6M, AMASS, and 3DPW datasets shows that M$^2$-Net consistently outperforms all other approaches. We hope our work brings the community one step further towards truly predictable human motion. Our code will be publicly available.
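The claim that a mixer layer can be read as a graph convolution on a fully-connected graph can be illustrated with a small numeric sketch. This is not the M$^2$-Net implementation. It assumes a purely linear token-mixing step (real mixer layers also use nonlinearities and normalization), and the names `token_mixing`, `graph_conv`, `w_tok`, and `theta` are hypothetical. Under those assumptions, using the dense learned token-mixing matrix as the adjacency and an identity feature transform yields the same output.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def identity(n):
    """Return the n x n identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def token_mixing(x, w_tok):
    """Linear token mixing: each output joint is a learned weighted sum of all joints."""
    return matmul(w_tok, x)


def graph_conv(x, adj, theta):
    """Aggregate-and-update graph convolution: aggregate with adj, then update with theta."""
    return matmul(matmul(adj, x), theta)


random.seed(0)
num_joints, channels = 4, 3  # toy sizes chosen for illustration only

# Joint features (rows are joints, columns are channels).
x = [[random.uniform(-1, 1) for _ in range(channels)] for _ in range(num_joints)]
# Dense, unconstrained joint-to-joint weights, i.e. a fully-connected "adjacency".
w_tok = [[random.uniform(-1, 1) for _ in range(num_joints)] for _ in range(num_joints)]

mixed = token_mixing(x, w_tok)
convolved = graph_conv(x, adj=w_tok, theta=identity(channels))

# Largest element-wise difference between the two outputs.
max_gap = max(abs(m - c) for rm, rc in zip(mixed, convolved) for m, c in zip(rm, rc))
```

In this toy setting `max_gap` stays at numerical zero: the token-mixing weights play the role of a parameterized adjacency over all joint pairs, while channel mixing would correspond to a non-identity `theta`.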
