Xinlei Zhou

Feature Statistics with Uncertainty Help Adversarial Robustness

Mar 26, 2025

Abstract:Despite the remarkable success of deep neural networks (DNNs), the security threat of adversarial attacks poses a significant challenge to the reliability of DNNs. By introducing randomness into different parts of DNNs, stochastic methods can enable the model to learn some uncertainty, thereby improving model robustness efficiently. In this paper, we theoretically discover a universal phenomenon that adversarial attacks will shift the distributions of feature statistics. Motivated by this theoretical finding, we propose a robustness enhancement module called Feature Statistics with Uncertainty (FSU). It resamples channel-wise feature means and standard deviations of examples from multivariate Gaussian distributions, which helps to reconstruct the attacked examples and calibrate the shifted distributions. The calibration recovers some domain characteristics of the data for classification, thereby mitigating the influence of perturbations and weakening the ability of attacks to deceive models. The proposed FSU module has universal applicability in training, attacking, predicting and fine-tuning, demonstrating impressive robustness enhancement ability at trivial additional time cost. For example, against powerful optimization-based CW attacks, by incorporating FSU into attacking and predicting phases, it endows many collapsed state-of-the-art models with 50%-80% robust accuracy on CIFAR10, CIFAR100 and SVHN.
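
The resampling step described in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function name `resample_feature_statistics`, the `eps` parameter, the use of independent per-channel Gaussians (rather than a full multivariate covariance), and the choice to estimate each statistic's spread from the current batch are all assumptions made for illustration.

```python
import numpy as np


def resample_feature_statistics(features, rng, eps=1e-6):
    """Resample channel-wise means and stds of a feature batch (illustrative).

    features: array of shape (batch, channels, height, width).
    Assumption: each channel statistic is drawn independently, with its
    spread estimated from the current batch.
    """
    # Per-example, per-channel statistics over the spatial dimensions.
    mean = features.mean(axis=(2, 3), keepdims=True)                # (B, C, 1, 1)
    std = np.sqrt(features.var(axis=(2, 3), keepdims=True) + eps)   # (B, C, 1, 1)

    # Uncertainty of each statistic: its standard deviation across the batch.
    mean_spread = np.sqrt(mean.var(axis=0, keepdims=True) + eps)    # (1, C, 1, 1)
    std_spread = np.sqrt(std.var(axis=0, keepdims=True) + eps)      # (1, C, 1, 1)

    # Draw new statistics from Gaussians centred on the observed ones.
    new_mean = mean + rng.standard_normal(mean.shape) * mean_spread
    new_std = std + rng.standard_normal(std.shape) * std_spread

    # Normalise with the observed statistics, then rescale with the sampled ones.
    normalized = (features - mean) / std
    return normalized * new_std + new_mean


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 4, 4))   # hypothetical feature maps
y = resample_feature_statistics(x, rng)
print(y.shape)
```

Under these assumptions the output keeps the shape of the input, and the spatial pattern within each channel is preserved while its mean and scale are perturbed.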

A Survey on Epistemic (Model) Uncertainty in Supervised Learning: Recent Advances and Applications

Nov 04, 2021

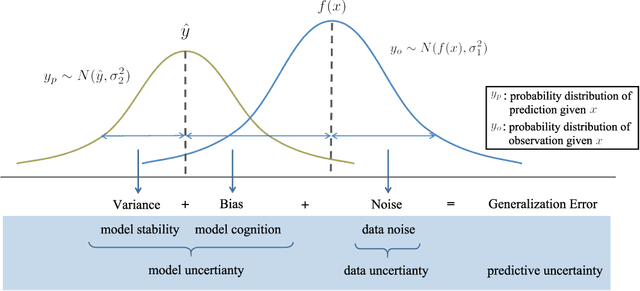

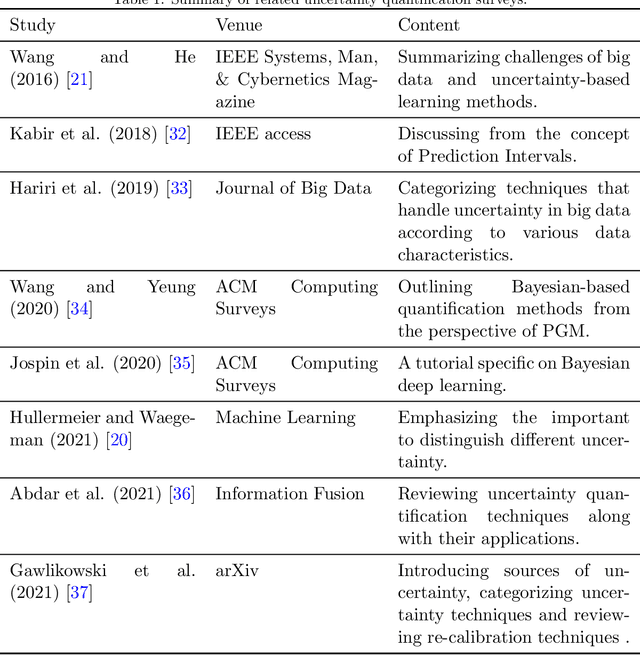

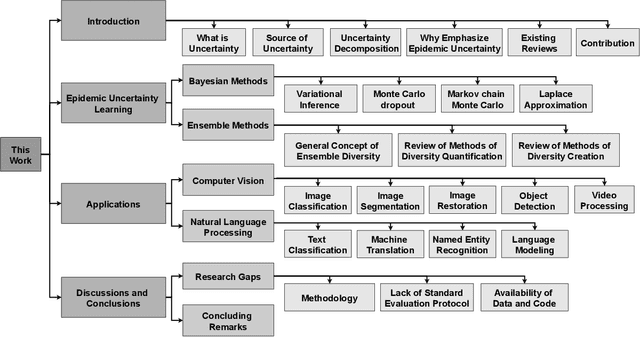

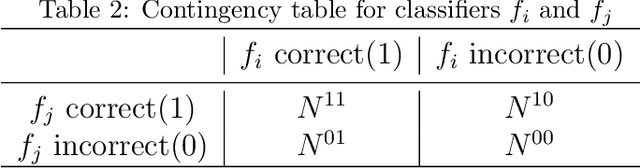

Abstract:Quantifying the uncertainty of supervised learning models plays an important role in making more reliable predictions. Epistemic uncertainty, which usually is due to insufficient knowledge about the model, can be reduced by collecting more data or refining the learning models. Over the last few years, scholars have proposed many epistemic uncertainty handling techniques which can be roughly grouped into two categories, i.e., Bayesian and ensemble. This paper provides a comprehensive review of epistemic uncertainty learning techniques in supervised learning over the last five years. As such, we, first, decompose the epistemic uncertainty into bias and variance terms. Then, a hierarchical categorization of epistemic uncertainty learning techniques along with their representative models is introduced. In addition, several applications such as computer vision (CV) and natural language processing (NLP) are presented, followed by a discussion on research gaps and possible future research directions.
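
As a rough illustration of the ensemble category mentioned above, disagreement between ensemble members is commonly used as a proxy for epistemic uncertainty. The sketch below is a generic example, not a method taken from the survey; the function name `ensemble_uncertainty` and the choice of summed per-class variance as the disagreement score are assumptions.

```python
import numpy as np


def ensemble_uncertainty(member_probs):
    """Summarise an ensemble's predictions (illustrative).

    member_probs: array of shape (members, samples, classes) holding each
    member's predicted class probabilities.
    Returns the averaged prediction and a per-sample disagreement score.
    """
    mean_probs = member_probs.mean(axis=0)                  # (samples, classes)
    # Variance across members, summed over classes: zero when all members agree.
    disagreement = member_probs.var(axis=0).sum(axis=-1)    # (samples,)
    return mean_probs, disagreement


# Three hypothetical members, two samples, two classes.
probs = np.array([
    [[0.9, 0.1], [0.9, 0.1]],
    [[0.9, 0.1], [0.5, 0.5]],
    [[0.9, 0.1], [0.1, 0.9]],
])
mean_probs, disagreement = ensemble_uncertainty(probs)
print(disagreement)
```

The first sample, on which all members agree, gets a score of zero; the second, on which they disagree, gets a positive score.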

A Review of Generalized Zero-Shot Learning Methods

Nov 17, 2020

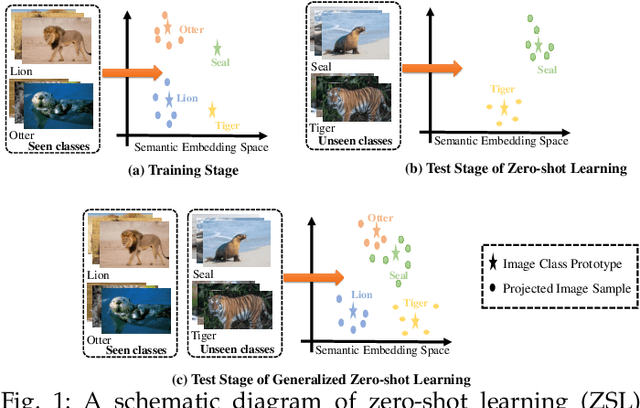

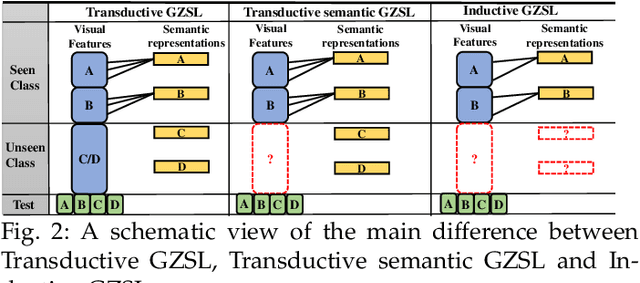

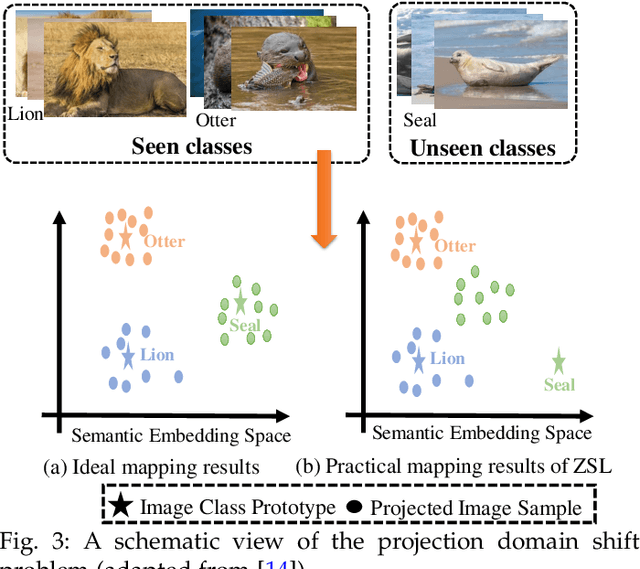

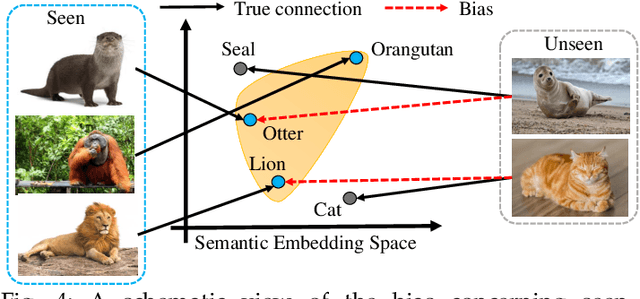

Abstract:Generalized zero-shot learning (GZSL) aims to train a model for classifying data samples under the condition that some output classes are unknown during supervised learning. To address this challenging task, GZSL leverages semantic information of both seen (source) and unseen (target) classes to bridge the gap between both seen and unseen classes. Since its introduction, many GZSL models have been formulated. In this review paper, we present a comprehensive review of GZSL. Firstly, we provide an overview of GZSL including the problems and challenging issues. Then, we introduce a hierarchical categorization of the GZSL methods and discuss the representative methods of each category. In addition, we discuss several research directions for future studies.
