Xinghua Li

Weakly Supervised Cloud Detection Combining Spectral Features and Multi-Scale Deep Network

Oct 01, 2025

Abstract:Clouds significantly affect the quality of optical satellite images, which seriously limits their precise application. Recently, deep learning has been widely applied to cloud detection and has achieved satisfactory results. However, the lack of distinctive features in thin clouds and the low quality of training samples limit the cloud detection accuracy of deep learning methods, leaving room for further improvement. In this paper, we propose a weakly supervised cloud detection method that combines spectral features and a multi-scale scene-level deep network (SpecMCD) to obtain highly accurate pixel-level cloud masks. The method first utilizes a progressive training framework with a multi-scale scene-level dataset to train the multi-scale scene-level cloud detection network. Pixel-level cloud probability maps are then obtained by combining the multi-scale probability maps and cloud thickness map based on the characteristics of clouds in dense cloud coverage and large cloud-area coverage images. Finally, adaptive thresholds are generated based on the differentiated regions of the scene-level cloud masks at different scales and combined with distance-weighted optimization to obtain binary cloud masks. Two datasets, WDCD and GF1MS-WHU, comprising a total of 60 Gaofen-1 multispectral (GF1-MS) images, were used to verify the effectiveness of the proposed method. Compared to other weakly supervised cloud detection methods such as WDCD and WSFNet, the F1-score of the proposed SpecMCD method shows an improvement of over 7.82%, highlighting the superiority and potential of the SpecMCD method for cloud detection under different cloud coverage conditions.
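The abstract above reports results as an F1-score on binary cloud masks. As a reminder of what that metric measures, here is a minimal sketch, assuming masks are flattened to equal-length sequences in which 1 marks a cloud pixel and 0 a clear pixel. The function name `f1_score` and the toy masks are illustrative and not taken from the paper.

```python
def f1_score(pred, truth):
    """F1-score of the cloud class (label 1) for two flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of pixels")
    # Count true positives, false positives, and false negatives pixel by pixel.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    denom = 2 * tp + fp + fn
    # F1 = 2TP / (2TP + FP + FN); defined as 0.0 here when no cloud pixel appears at all.
    return 2 * tp / denom if denom else 0.0


# Toy example (hypothetical data): 6 pixels, 2 correct cloud pixels,
# 1 false alarm, 1 missed cloud pixel.
predicted = [1, 1, 0, 0, 1, 0]
reference = [1, 0, 0, 1, 1, 0]
score = f1_score(predicted, reference)
```

Because F1 ignores true negatives, it is less flattered by large clear-sky areas than overall pixel accuracy, which is one common reason to prefer it for mask evaluation.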

AI-Empowered Multiple Access for 6G: A Survey of Spectrum Sensing, Protocol Designs, and Optimizations

Jun 19, 2024

Abstract:With the rapidly increasing number of bandwidth-intensive terminals capable of intelligent computing and communication, such as smart devices equipped with shallow neural network models, the complexity of multiple access for these intelligent terminals is increasing due to the dynamic network environment and ubiquitous connectivity in 6G systems. Traditional multiple access (MA) design and optimization methods are gradually losing ground to artificial intelligence (AI) techniques that have proven their superiority in handling complexity. AI-empowered MA and its optimization strategies aimed at achieving high Quality-of-Service (QoS) are attracting more attention, especially in the area of latency-sensitive applications in 6G systems. In this work, we aim to: 1) present the development and comparative evaluation of AI-enabled MA; 2) provide a timely survey focusing on spectrum sensing, protocol design, and optimization for AI-empowered MA; and 3) explore the potential use cases of AI-empowered MA in the typical application scenarios within 6G systems. Specifically, we first present a unified framework of AI-empowered MA for 6G systems by incorporating various promising machine learning techniques in spectrum sensing, resource allocation, MA protocol design, and optimization. We then introduce AI-empowered MA spectrum sensing related to spectrum sharing and spectrum interference management. Next, we discuss the AI-empowered MA protocol designs and implementation methods by reviewing and comparing the state-of-the-art, and we further explore the optimization algorithms related to dynamic resource management, parameter adjustment, and access scheme switching. Finally, we discuss the current challenges, point out open issues, and outline potential future research directions in this field.

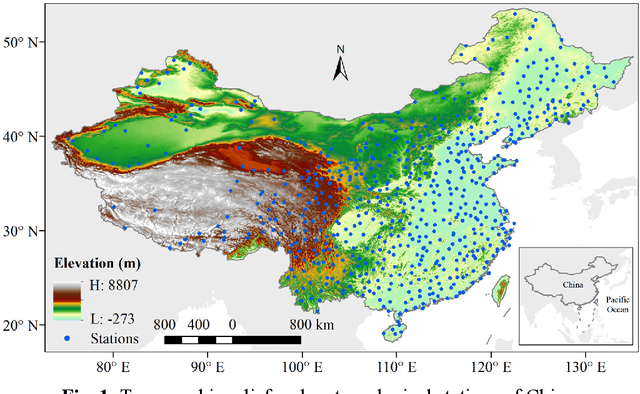

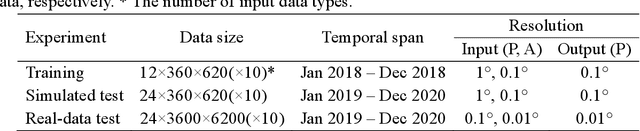

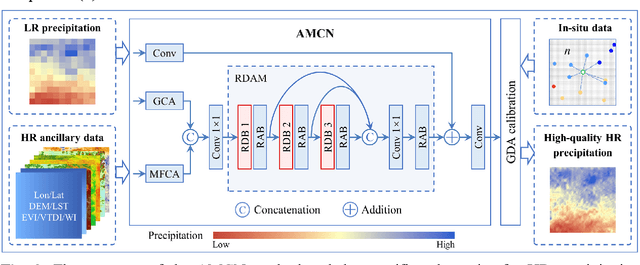

An attention mechanism based convolutional network for satellite precipitation downscaling over China

Mar 28, 2022

Abstract:Precipitation is a key part of hydrological circulation and is a sensitive indicator of climate change. The Integrated Multi-satellitE Retrievals for the Global Precipitation Measurement (GPM) mission (IMERG) datasets are widely used for global and regional precipitation investigations. However, their local application is limited by the relatively coarse spatial resolution. Therefore, in this paper, an attention mechanism based convolutional network (AMCN) is proposed to downscale GPM IMERG monthly precipitation data. The proposed method is an end-to-end network, which consists of a global cross-attention module, a multi-factor cross-attention module, and a residual convolutional module, comprehensively considering the potential relationships between precipitation and complicated surface characteristics. In addition, a degradation loss function based on low-resolution precipitation is designed to physically constrain the network training, to improve the robustness of the proposed network under different time and scale variations. The experiments demonstrate that the proposed network significantly outperforms three baseline methods. Finally, a geographic difference analysis method is introduced to further improve the downscaled results by incorporating in-situ measurements for high-quality and fine-scale precipitation estimation.
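The degradation loss mentioned in the abstract above constrains the high-resolution output so that, once degraded back to the coarse grid, it agrees with the original low-resolution precipitation. The abstract does not say which degradation operator or error norm is used, so the sketch below assumes non-overlapping block averaging and a mean squared error; `block_mean`, `degradation_loss`, and the toy grids are hypothetical names and data chosen for illustration.

```python
def block_mean(hr, factor):
    """Degrade a 2-D grid (list of rows) by averaging non-overlapping factor x factor blocks."""
    rows, cols = len(hr), len(hr[0])
    if rows % factor or cols % factor:
        raise ValueError("grid size must be divisible by the scale factor")
    lr = []
    for i in range(0, rows, factor):
        lr_row = []
        for j in range(0, cols, factor):
            block = [hr[a][b] for a in range(i, i + factor) for b in range(j, j + factor)]
            lr_row.append(sum(block) / len(block))
        lr.append(lr_row)
    return lr


def degradation_loss(hr_pred, lr_obs, factor):
    """Mean squared error between the degraded prediction and the coarse observation.

    Assumption: block averaging stands in for the (unspecified) degradation model.
    """
    lr_pred = block_mean(hr_pred, factor)
    sq_errors = [
        (p - o) ** 2
        for pred_row, obs_row in zip(lr_pred, lr_obs)
        for p, o in zip(pred_row, obs_row)
    ]
    return sum(sq_errors) / len(sq_errors)


# Toy example: a 2 x 4 downscaled field checked against a 1 x 2 coarse field.
hr_field = [[1.0, 3.0, 2.0, 2.0],
            [5.0, 7.0, 2.0, 2.0]]
coarse_field = [[4.0, 3.0]]
loss = degradation_loss(hr_field, coarse_field, factor=2)
```

In a training setup, a term of this kind would typically be added to the main reconstruction loss with a weighting coefficient; the weighting is not given in the abstract.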

Fully Polarimetric SAR and Single-Polarization SAR Image Fusion Network

Jul 18, 2021

Abstract:Data fusion technology aims to aggregate the characteristics of different data and obtain products that combine their advantages. To solve the problem of reduced resolution of PolSAR images due to system limitations, we propose a fusion network for fully polarimetric synthetic aperture radar (PolSAR) images and single-polarization synthetic aperture radar (SinSAR) images to generate high-resolution PolSAR (HR-PolSAR) images. To take advantage of the polarimetric information of the low-resolution PolSAR (LR-PolSAR) image and the spatial information of the high-resolution single-polarization SAR (HR-SinSAR) image, we propose a fusion framework that takes the LR-PolSAR image and the HR-SinSAR image as joint input and design a cross-attention mechanism to extract features from the joint input data. In addition, based on the physical imaging mechanism, we design a PolSAR polarimetric loss function to constrain network training. The experimental results confirm the superiority of the fusion network over traditional algorithms. The average PSNR is increased by more than 3.6 dB, and the average MAE is reduced to less than 0.07. Experiments on polarimetric decomposition and polarimetric signature show that it maintains polarimetric information well.
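The abstract above quotes PSNR (in dB) and MAE as its quality metrics. A minimal sketch of both follows, assuming images are flattened to equal-length sequences of real values and that the peak signal value is 1.0; the abstract does not state how the PolSAR data are scaled, so `peak` is an assumption, and the function names and sample values are illustrative.

```python
import math


def mae(pred, ref):
    """Mean absolute error between two flattened images."""
    if len(pred) != len(ref):
        raise ValueError("images must contain the same number of pixels")
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(pred)


def psnr(pred, ref, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    if len(pred) != len(ref):
        raise ValueError("images must contain the same number of pixels")
    mse = sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


# Toy example (hypothetical values): two of four pixels are off by 0.1.
reference = [0.0, 0.5, 1.0, 0.5]
fused = [0.1, 0.5, 0.9, 0.5]
error = mae(fused, reference)
quality_db = psnr(fused, reference)
```

Since PSNR is logarithmic in the mean squared error, a gain of about 3 dB corresponds to roughly halving the MSE at a fixed peak value.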

Long time-series NDVI reconstruction in cloud-prone regions via spatio-temporal tensor completion

Feb 04, 2021

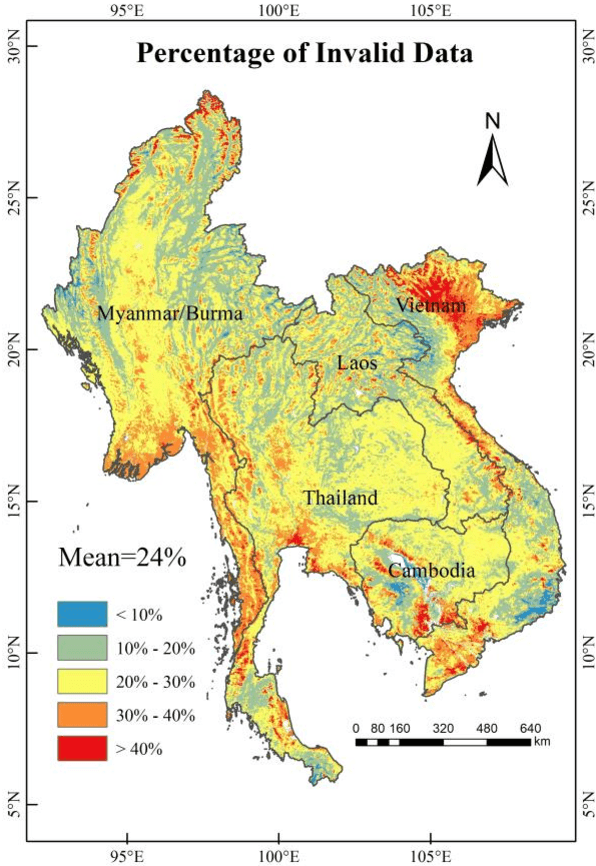

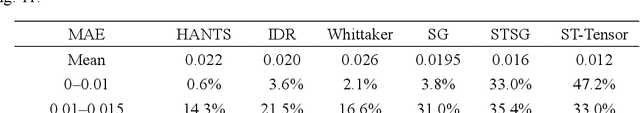

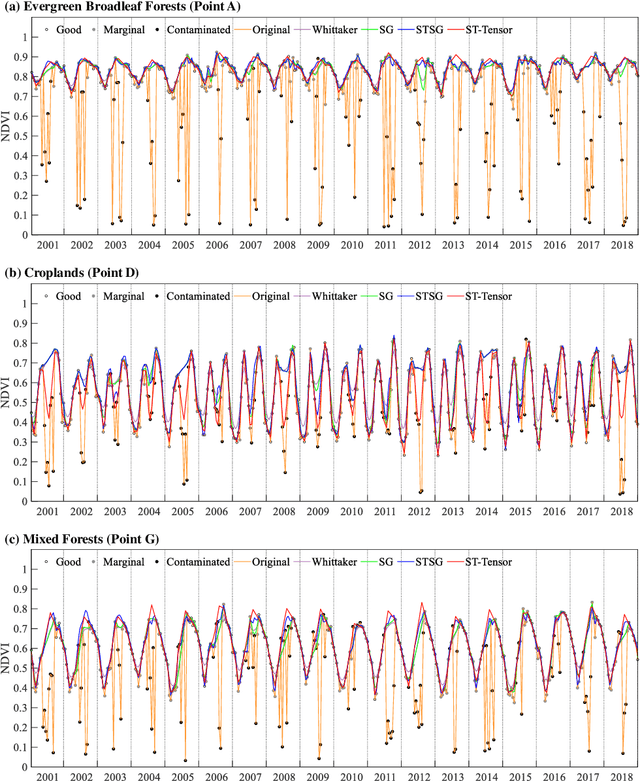

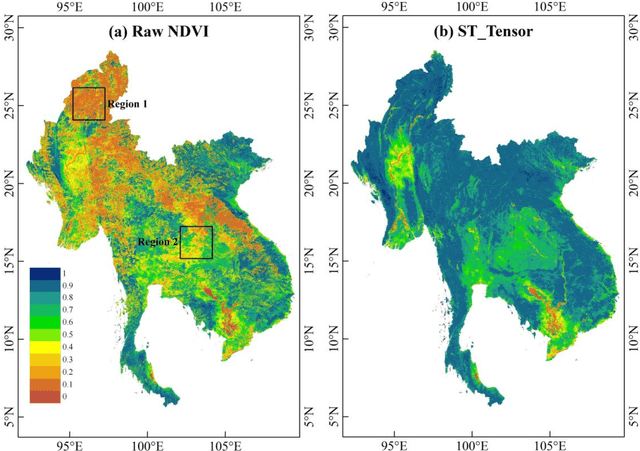

Abstract:The applications of Normalized Difference Vegetation Index (NDVI) time-series data are inevitably hampered by cloud-induced gaps and noise. Although numerous reconstruction methods have been developed, they have not effectively addressed the issues associated with large gaps in the time series over cloudy and rainy regions, due to the insufficient utilization of the spatial and temporal correlations. In this paper, an adaptive Spatio-Temporal Tensor Completion method (termed ST-Tensor) is proposed to reconstruct long-term NDVI time series in cloud-prone regions, by making full use of the multi-dimensional spatio-temporal information simultaneously. For this purpose, a highly correlated tensor is built by considering the correlations among the spatial neighbors, inter-annual variations, and periodic characteristics, in order to reconstruct the missing information via an adaptive-weighted low-rank tensor completion model. An iterative l1 trend filtering method is then implemented to eliminate the residual temporal noise. This new method was tested using MODIS 16-day composite NDVI products from 2001 to 2018 obtained in the region of Mainland Southeast Asia, where the rainy climate commonly induces large gaps and noise in the data. The qualitative and quantitative results indicate that the ST-Tensor method is more effective than the five previous methods in addressing the different missing data problems, especially the temporally continuous gaps and spatio-temporally continuous gaps. It is also shown that the ST-Tensor method performs better than the other methods in tracking NDVI seasonal trajectories, and is therefore a superior option for generating high-quality long-term NDVI time series for cloud-prone regions.

Missing Data Reconstruction in Remote Sensing image with a Unified Spatial-Temporal-Spectral Deep Convolutional Neural Network

Feb 23, 2018

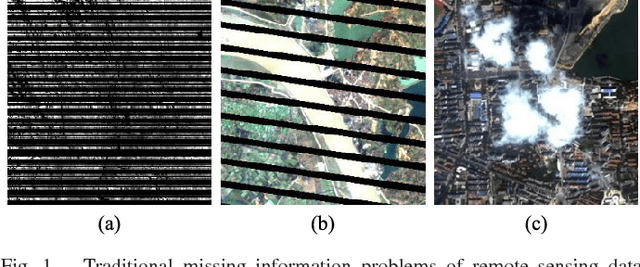

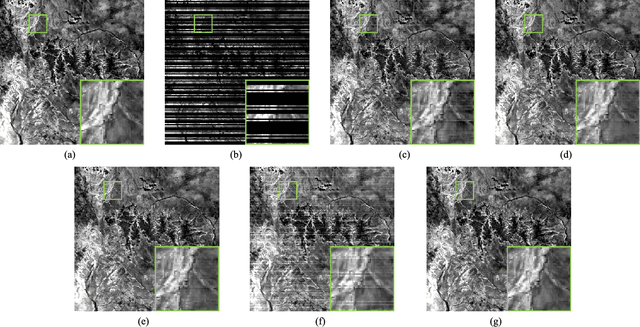

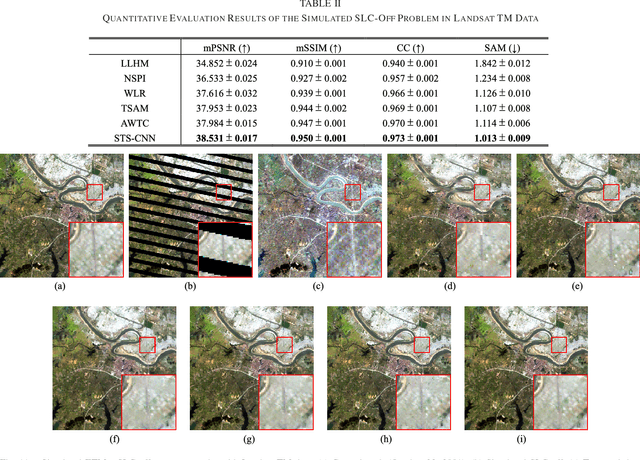

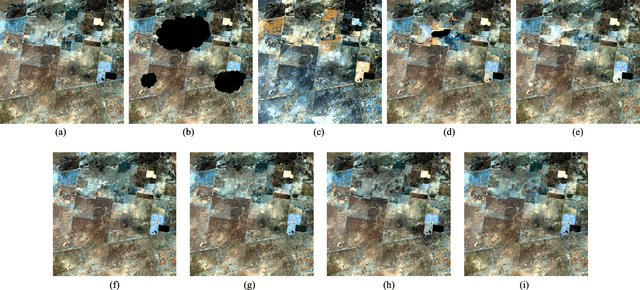

Abstract:Because of the internal malfunction of satellite sensors and poor atmospheric conditions such as thick cloud, the acquired remote sensing data often suffer from missing information, which greatly reduces data usability. In this paper, a novel method of missing information reconstruction in remote sensing images is proposed. The unified spatial-temporal-spectral framework based on a deep convolutional neural network (STS-CNN) employs a unified deep convolutional neural network combined with spatial-temporal-spectral supplementary information. In addition, whereas most methods can only deal with a single missing information reconstruction task, the proposed approach can solve three typical missing information reconstruction tasks: 1) dead lines in Aqua MODIS band 6; 2) the Landsat ETM+ Scan Line Corrector (SLC)-off problem; and 3) thick cloud removal. It should be noted that the proposed model can use multi-source data (spatial, spectral, and temporal) as the input of the unified framework. The results of both simulated and real-data experiments demonstrate that the proposed model exhibits high effectiveness in the three missing information reconstruction tasks listed above.

Correction of "Cloud Removal By Fusing Multi-Source and Multi-Temporal Images"

Jul 25, 2017

Abstract:Remote sensing images often suffer from cloud cover. Cloud removal is required in many applications of remote sensing images. Multitemporal-based methods are popular and effective for coping with thick clouds. This paper contributes a summary and experimental comparison of the existing multitemporal-based methods. Furthermore, we propose a spatiotemporal-fusion method with Poisson adjustment to fuse multi-sensor and multi-temporal images for cloud removal. The experimental results show that the proposed method has the potential to address the problem of reduced cloud removal accuracy in multi-temporal images with significant changes.
