Wenhui Xu

A high-performance reconstruction method for partially coherent ptychography

Jun 09, 2024

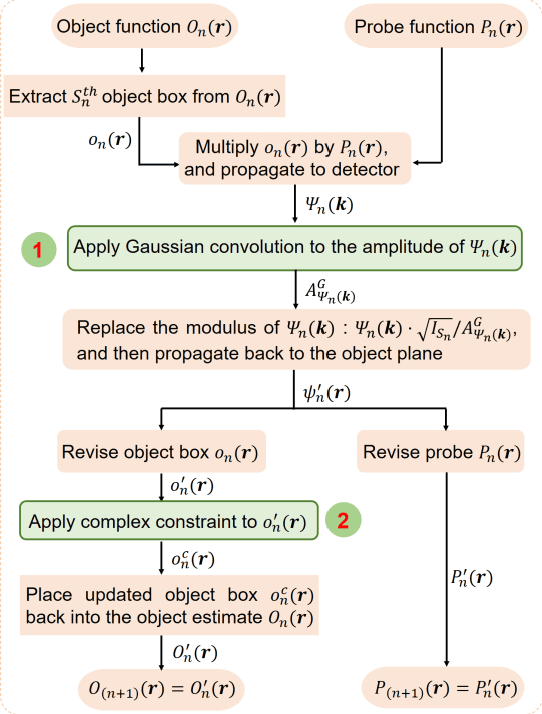

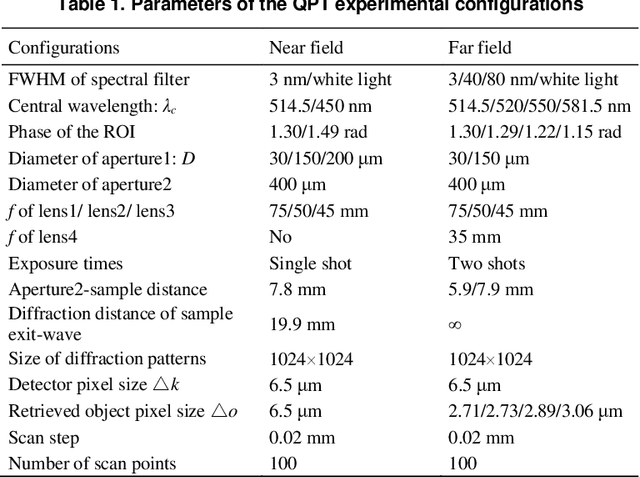

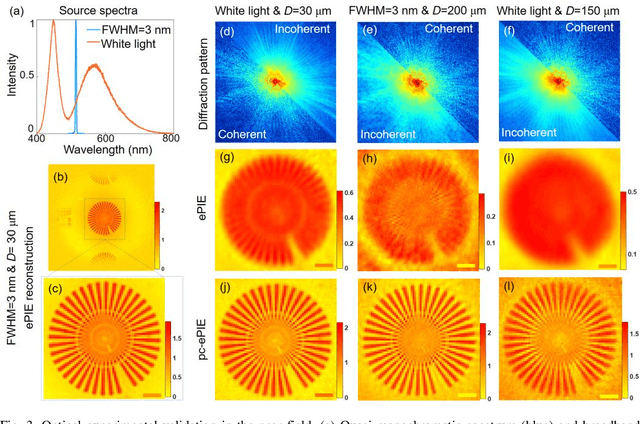

Abstract: Ptychography is now integrated as a tool in mainstream microscopy, providing quantitative, high-resolution imaging over a wide field of view. However, its ultimate performance is inevitably limited by the available coherent flux when it is implemented with electrons or laboratory X-ray sources. We present a universal reconstruction algorithm with high tolerance to low coherence for both far-field and near-field ptychography. The approach applies to both partial temporal and partial spatial coherence and requires no prior knowledge of the source properties. Our initial visible-light and electron data show that the method can dramatically improve reconstruction quality and accelerate convergence. The approach also integrates well into existing ptychographic engines. It can further improve mixed-state and numerical monochromatisation methods, requiring fewer coherent modes or a lower-dimensional Krylov subspace while providing more stable and faster convergence. We propose that this approach could have a significant impact on ptychography of weakly scattering samples.
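The abstract positions the method relative to mixed-state reconstructions. For context, here is a minimal NumPy sketch of the standard mixed-state forward model. It assumes far-field propagation through a unitary FFT and a thin (multiplicative) object. Under those assumptions, the detector intensity is the incoherent sum of the intensities of mutually incoherent probe modes, and the modulus constraint rescales all modes by a common per-pixel factor. This is a generic illustration, not the partial-coherence algorithm proposed in the paper, and the function names are hypothetical.

```python
import numpy as np

def far_field_modes(obj_patch, probe_modes):
    """Detector-plane wavefields, one per probe mode (hypothetical helper).

    obj_patch:   complex (N, N) object region at one scan position
    probe_modes: complex (K, N, N) mutually incoherent probe modes
    """
    exit_waves = probe_modes * obj_patch[None, :, :]  # thin-object (multiplicative) approximation
    return np.fft.fft2(exit_waves, norm="ortho")      # unitary 2D FFT over the last two axes

def mixed_state_intensity(modes):
    """Incoherent sum over modes: I = sum_k |Psi_k|^2."""
    return np.sum(np.abs(modes) ** 2, axis=0)

def apply_modulus_constraint(modes, measured, eps=1e-12):
    """Rescale every mode by the same per-pixel factor so the summed intensity matches the data."""
    scale = np.sqrt(measured) / (np.sqrt(mixed_state_intensity(modes)) + eps)
    return modes * scale[None, :, :]

# Usage: a weak phase object illuminated by three random probe modes.
rng = np.random.default_rng(0)
obj = np.exp(1j * rng.uniform(-0.3, 0.3, size=(64, 64)))
probes = rng.normal(size=(3, 64, 64)) + 1j * rng.normal(size=(3, 64, 64))
modes = far_field_modes(obj, probes)
I = mixed_state_intensity(modes)
updated = apply_modulus_constraint(modes, 1.5 * I)  # pretend the measurement is 1.5x brighter
```

Each extra mode adds one FFT per scan position and iteration. This is why needing fewer coherent modes, as the abstract claims for the proposed method, translates directly into lower cost.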

An Integrated Constrained Gradient Descent (iCGD) Protocol to Correct Scan-Positional Errors for Electron Ptychography with High Accuracy and Precision

Nov 06, 2022

Abstract: Correcting scan-positional errors is critical to achieving electron ptychography with both high resolution and high precision. This is a demanding task because of the sheer number of parameters that must be optimized. For atomic-resolution ptychographic reconstructions, we found classical scan-position refinement methods unsatisfactory because of the inherent entanglement between the object and the scan positions, which can introduce systematic errors into the results. Here, we propose a new protocol consisting of a series of constrained gradient descent (CGD) methods to achieve better recovery of scan positions. The central idea of these CGD methods is to use a priori knowledge about the nature of STEM experiments and add the necessary constraints to isolate different types of scan-positional errors during the iterative reconstruction. Each constraint is introduced with the help of simulated 4D-STEM datasets with known positional errors. The integrated constrained gradient descent (iCGD) protocol is then demonstrated on an experimental 4D-STEM dataset of a 1H-MoS2 monolayer. We show that the iCGD protocol can effectively address scan-position errors across the spectrum and help achieve electron ptychography with high accuracy and precision.
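The abstract does not spell out the individual constraints. As one hypothetical example of separating error types, the sketch below splits refined scan positions into two parts with a least-squares fit. The first part is a global affine distortion of the nominal STEM raster (rotation, scale, shear, and shift). The second part is a local residual. The raster layout, the step size, and the function names are assumptions for illustration only, not the iCGD protocol itself.

```python
import numpy as np

def nominal_raster(n_rows, n_cols, step):
    """Nominal STEM raster positions (y, x), shape (n_rows * n_cols, 2)."""
    yy, xx = np.meshgrid(np.arange(n_rows) * step, np.arange(n_cols) * step, indexing="ij")
    return np.stack([yy.ravel(), xx.ravel()], axis=1)

def fit_affine(nominal, refined):
    """Least-squares 2x2 matrix A and shift t such that refined ~= nominal @ A.T + t."""
    design = np.hstack([nominal, np.ones((len(nominal), 1))])  # (M, 3): [y, x, 1]
    coeffs, *_ = np.linalg.lstsq(design, refined, rcond=None)  # (3, 2)
    return coeffs[:2].T, coeffs[2]

def split_position_errors(nominal, refined):
    """Split refined positions into a global affine part and a local residual."""
    A, t = fit_affine(nominal, refined)
    affine_part = nominal @ A.T + t
    return affine_part, refined - affine_part

# Usage: a 2-degree rotation plus 3% scale and a shift, with small random jitter.
nominal = nominal_raster(16, 16, 0.5)
theta = np.deg2rad(2.0)
A_true = 1.03 * np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
shift = np.array([0.2, -0.1])
rng = np.random.default_rng(1)
refined = nominal @ A_true.T + shift + rng.normal(scale=0.01, size=nominal.shape)
affine_part, residual = split_position_errors(nominal, refined)
```

In a constrained gradient-descent loop, one could, for instance, update the few affine parameters and the many local residuals with different step sizes or regularization. Whether iCGD does anything like this is not stated in the abstract.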

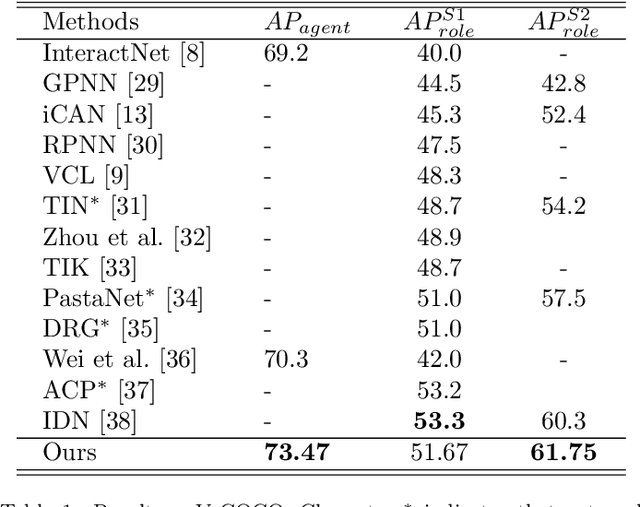

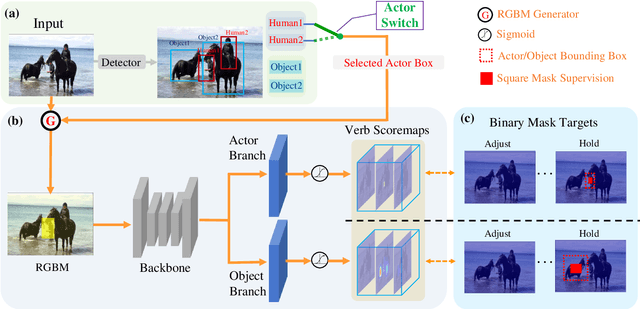

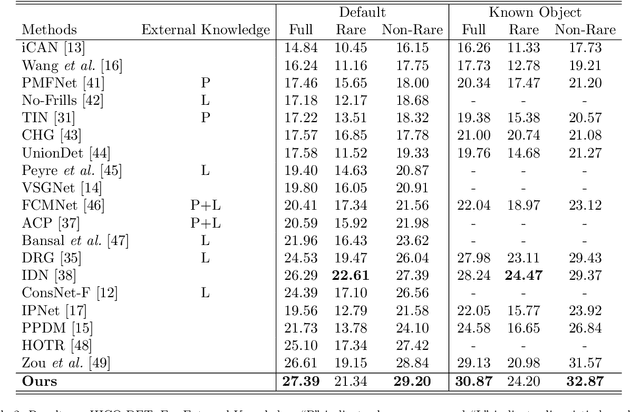

Effective Actor-centric Human-object Interaction Detection

Feb 24, 2022

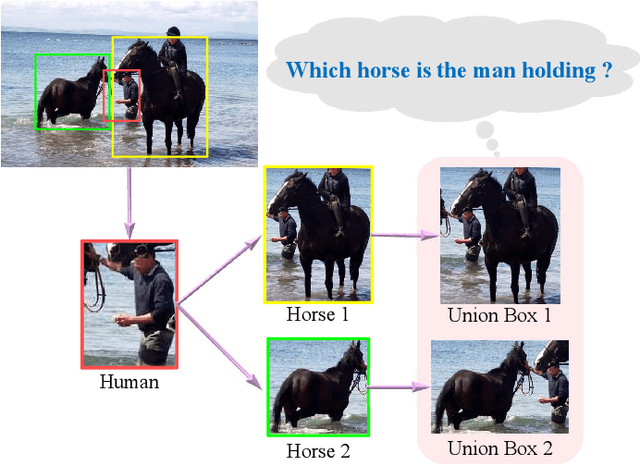

Abstract: While Human-Object Interaction (HOI) detection has advanced tremendously in recent years, it remains challenging because images often contain complex interactions among multiple humans and objects, which inevitably lead to ambiguities. Most existing methods either generate all human-object pair candidates and infer their relationships successively from cropped local features in a two-stage manner, or directly predict interaction points in a one-stage procedure. However, two-stage methods lack spatial configurations and one-stage methods lack reasoning steps, which limits their performance in such complex scenes. To avoid this ambiguity, we propose a novel actor-centric framework. The main ideas when inferring interactions are: 1) non-local features of the entire image, guided by the actor position, are obtained to model the relationship between the actor and the context; and 2) an object branch generates a pixel-wise interaction area prediction, where the interaction area denotes the object's central area. We also use an actor branch to obtain the actor's interaction prediction and propose a novel composition strategy based on center-point indexing to generate the final HOI prediction. Thanks to the non-local features and the partly coupled property of the human-object composition strategy, our framework detects HOI more accurately, especially in complex images. Extensive experimental results show that our method achieves state-of-the-art performance on the challenging V-COCO and HICO-DET benchmarks and is more robust, especially in scenes with multiple persons and/or objects.
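The composition step can be illustrated with a small, hypothetical NumPy sketch of center-point indexing. An actor branch supplies per-actor interaction-class scores, and an actor-conditioned object branch supplies interaction-area heatmaps. Each candidate object is then scored by reading the heatmap at its center point. The array layouts, the output stride, and the multiplicative fusion are all assumptions, not the paper's implementation.

```python
import numpy as np

def compose_hoi(actor_scores, area_maps, object_centers, stride=4, top_k=5):
    """Hypothetical center-point-indexing composition.

    actor_scores:   (A, C) interaction-class scores per actor
    area_maps:      (A, C, H, W) actor-conditioned interaction-area heatmaps,
                    at 1/stride of the image resolution
    object_centers: (O, 2) object center points (y, x) in image pixels
    Returns up to top_k (actor, object, class, score) tuples, highest score first.
    """
    _, _, h, w = area_maps.shape
    # Map image coordinates to heatmap cells, clipped to the valid range.
    cells = np.floor(np.asarray(object_centers) / stride).astype(int)
    ys = np.clip(cells[:, 0], 0, h - 1)
    xs = np.clip(cells[:, 1], 0, w - 1)
    # Center-point indexing: read every heatmap at every object center -> (A, C, O).
    area_at_centers = area_maps[:, :, ys, xs]
    scores = actor_scores[:, :, None] * area_at_centers  # assumed multiplicative fusion
    flat = np.argsort(scores, axis=None)[::-1][:top_k]
    a_idx, c_idx, o_idx = np.unravel_index(flat, scores.shape)
    return [(int(a), int(o), int(c), float(scores[a, c, o]))
            for a, c, o in zip(a_idx, c_idx, o_idx)]

# Usage: one actor, two interaction classes, two candidate objects.
actor_scores = np.array([[0.9, 0.2]])
area_maps = np.zeros((1, 2, 32, 32))
area_maps[0, 0, 10, 20] = 0.8                              # class-0 area peaks at cell (10, 20)
object_centers = np.array([[40.0, 80.0], [100.0, 12.0]])  # object 0 falls in cell (10, 20)
result = compose_hoi(actor_scores, area_maps, object_centers, top_k=1)
```

Indexing only at object centers keeps the pairing step cheap. Its cost scales with actors times classes times objects rather than with the full heatmap resolution.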

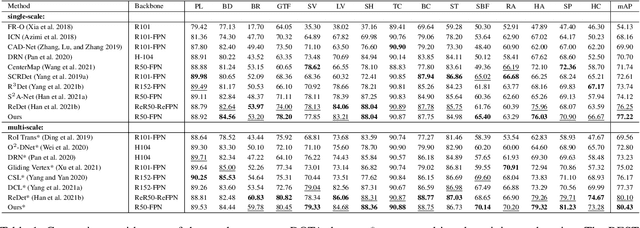

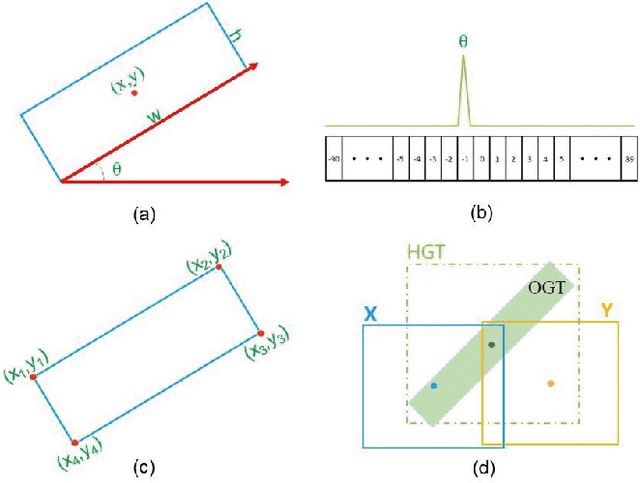

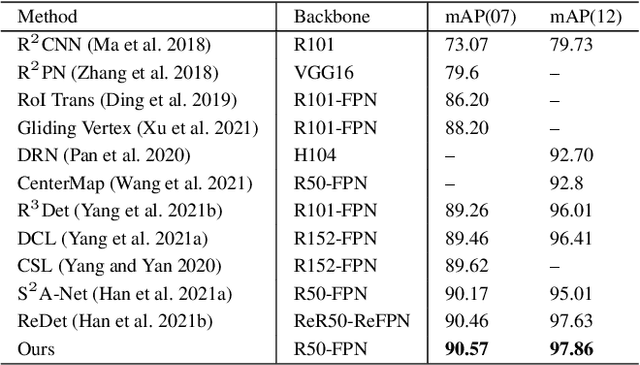

Learning Oriented Remote Sensing Object Detection via Naive Geometric Computing

Dec 01, 2021

Abstract: Detecting oriented objects and estimating their rotation is a crucial step in analyzing remote sensing images. Although many recently proposed methods have achieved remarkable performance, most of them learn to predict object directions directly, supervised individually by only one ground-truth value (e.g., the rotation angle) or a few (e.g., several coordinates). Oriented object detection would be more accurate and robust if extra constraints on proposal and rotation regression were adopted for joint supervision during training. To this end, we propose a mechanism that simultaneously learns the regression of horizontal proposals, oriented proposals, and object rotation angles in a consistent manner, via naive geometric computing, as one additional steady constraint (see Figure 1). A label assignment strategy guided by an oriented center prior is also proposed to further improve proposal quality, yielding better performance. Extensive experiments demonstrate that a model equipped with our idea outperforms the baseline by a large margin and achieves a new state-of-the-art result without any extra computational burden during inference. The idea is simple, intuitive, and readily implemented. Source code and trained models are included in the supplementary files.
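A standard identity illustrates the kind of geometric link between oriented and horizontal proposals. An oriented box with width w, height h, and angle θ is enclosed by an axis-aligned box of width w|cos θ| + h|sin θ| and height w|sin θ| + h|cos θ|. The sketch below checks this identity against the box corners and then uses it as a hypothetical L1 consistency term. The (cx, cy, w, h, θ) parameterization, the counter-clockwise radian convention, and the loss are assumptions rather than the paper's exact formulation.

```python
import numpy as np

def obb_corners(cx, cy, w, h, theta):
    """Four corners (x, y) of an oriented box; theta in radians, counter-clockwise."""
    c, s = np.cos(theta), np.sin(theta)
    local = np.array([[-w, -h], [w, -h], [w, h], [-w, h]]) / 2.0
    rot = np.array([[c, -s], [s, c]])
    return local @ rot.T + np.array([cx, cy])

def enclosing_hbb(cx, cy, w, h, theta):
    """Closed-form axis-aligned box (cx, cy, W, H) enclosing the oriented box."""
    c, s = abs(np.cos(theta)), abs(np.sin(theta))
    return cx, cy, w * c + h * s, w * s + h * c

def consistency_loss(pred_hbb, pred_obb):
    """Hypothetical L1 penalty between a predicted horizontal box and the one implied by the oriented box."""
    implied = np.asarray(enclosing_hbb(*pred_obb))
    return float(np.sum(np.abs(np.asarray(pred_hbb) - implied)))

# Usage: a 40 x 10 box rotated by 30 degrees.
obb = (50.0, 30.0, 40.0, 10.0, np.deg2rad(30.0))
corners = obb_corners(*obb)
_, _, W, H = enclosing_hbb(*obb)
```

Because the enclosing box is a closed-form function of the oriented parameters, a penalty of this kind adds supervision only at training time. That is consistent with the abstract's claim of no extra computational burden during inference.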
