Weitao Wan

Towards Domain Generalization in Object Detection

Mar 27, 2022

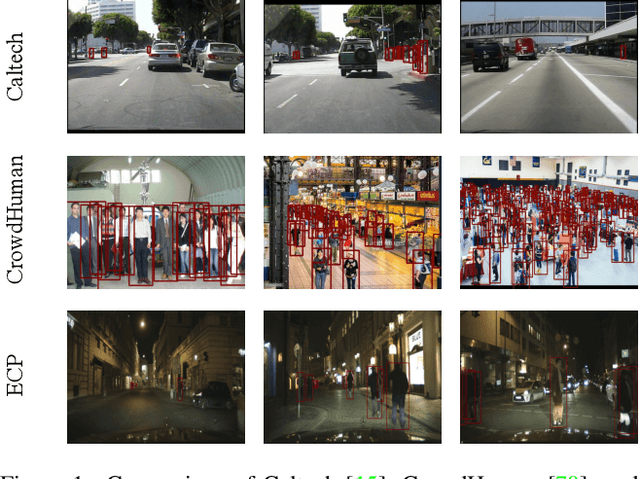

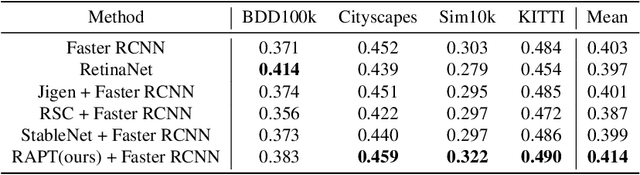

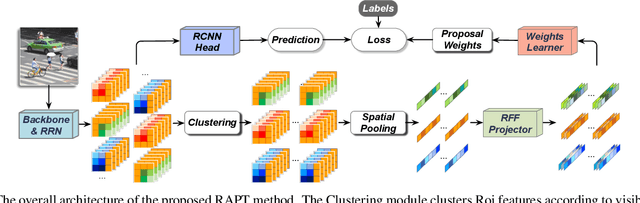

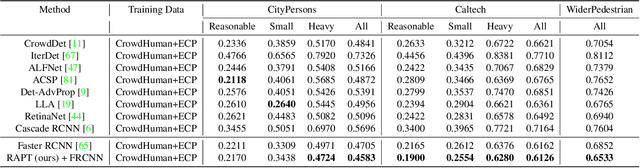

Abstract: Despite the striking performance achieved by modern detectors when training and test data are sampled from the same or similar distribution, the generalization ability of detectors under unknown distribution shifts remains hardly studied. Recently several works discussed the detectors' adaptation ability to a specific target domain which are not readily applicable in real-world applications since detectors may encounter various environments or situations while pre-collecting all of them before training is inconceivable. In this paper, we study the critical problem, domain generalization in object detection (DGOD), where detectors are trained with source domains and evaluated on unknown target domains. To thoroughly evaluate detectors under unknown distribution shifts, we formulate the DGOD problem and propose a comprehensive evaluation benchmark to fill the vacancy. Moreover, we propose a novel method named Region Aware Proposal reweighTing (RAPT) to eliminate dependence within RoI features. Extensive experiments demonstrate that current DG methods fail to address the DGOD problem and our method outperforms other state-of-the-art counterparts.

Shaping Deep Feature Space towards Gaussian Mixture for Visual Classification

Nov 18, 2020

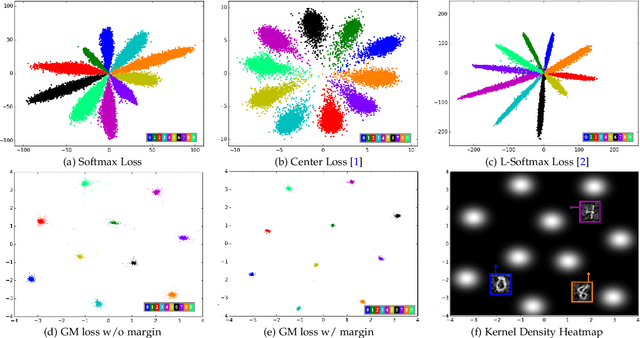

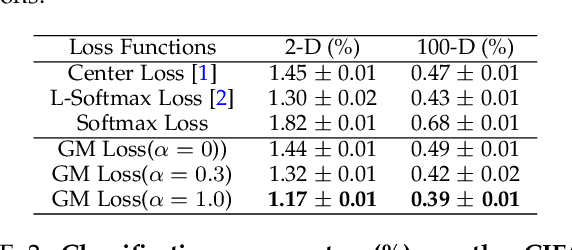

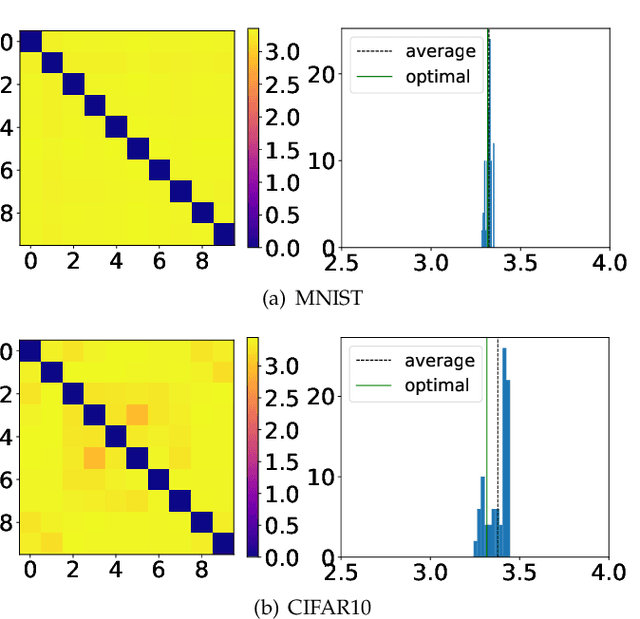

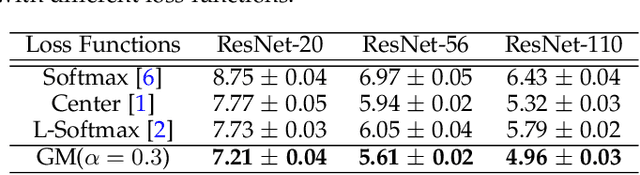

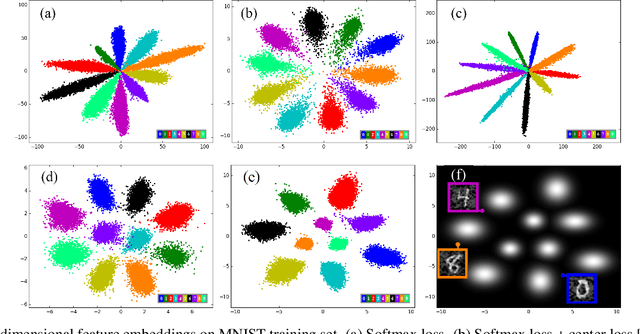

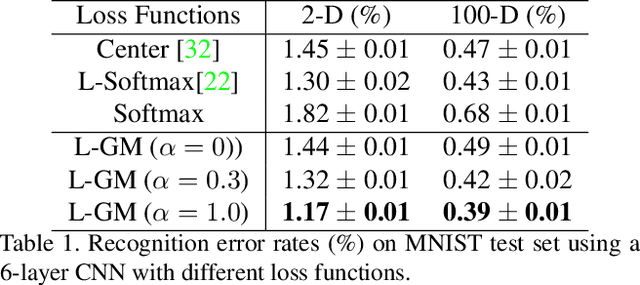

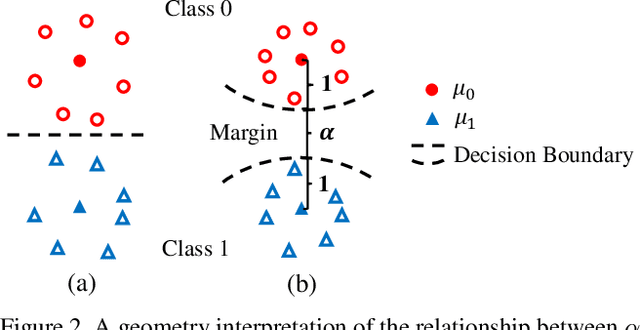

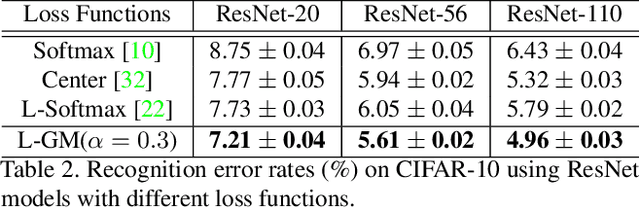

Abstract: The softmax cross-entropy loss function has been widely used to train deep models for various tasks. In this work, we propose a Gaussian mixture (GM) loss function for deep neural networks for visual classification. Unlike the softmax cross-entropy loss, our method explicitly shapes the deep feature space towards a Gaussian Mixture distribution. With a classification margin and a likelihood regularization, the GM loss facilitates both high classification performance and accurate modeling of the feature distribution. The GM loss can be readily used to distinguish abnormal inputs, such as the adversarial examples, based on the discrepancy between feature distributions of the inputs and the training set. Furthermore, theoretical analysis shows that a symmetric feature space can be achieved by using the GM loss, which enables the models to perform robustly against adversarial attacks. The proposed model can be implemented easily and efficiently without using extra trainable parameters. Extensive evaluations demonstrate that the proposed method performs favorably not only on image classification but also on robust detection of adversarial examples generated by strong attacks under different threat models.
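The abstract describes the GM loss as a classification term with a margin plus a likelihood regularization. The following is a minimal NumPy sketch of one way such a loss could look, not the authors' implementation. It assumes identity covariances and equal class priors, and the margin form (scaling the true-class distance by `1 + alpha`) as well as the names `gm_loss`, `alpha`, and `lam` are illustrative assumptions.

```python
import numpy as np

def gm_loss(features, labels, means, alpha=0.3, lam=0.1):
    """Sketch of a Gaussian-mixture style loss (assumed form, identity covariance).

    features: (N, D) deep features; labels: (N,) integer class ids;
    means: (K, D) one Gaussian mean per class.
    """
    # Half squared Euclidean distance from every feature to every class mean: (N, K)
    diff = features[:, None, :] - means[None, :, :]
    dist = 0.5 * np.sum(diff ** 2, axis=2)

    onehot = np.eye(means.shape[0])[labels]

    # Assumed margin: enlarge the true-class distance by a factor (1 + alpha)
    logits = -dist * (1.0 + alpha * onehot)

    # Numerically stable log-softmax over classes
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # Classification term: cross-entropy on the margin-adjusted posteriors
    cls_term = -np.mean(np.sum(onehot * log_prob, axis=1))

    # Likelihood term: pull each feature towards its own class mean
    lkd_term = np.mean(np.sum(onehot * dist, axis=1))

    return cls_term + lam * lkd_term
```

In this sketch, features that sit on their class means give a loss near zero, while a larger `alpha` demands a wider gap between the true-class distance and the others before the classification term vanishes.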

Rethinking Feature Distribution for Loss Functions in Image Classification

Mar 08, 2018

Abstract: We propose a large-margin Gaussian Mixture (L-GM) loss for deep neural networks in classification tasks. Different from the softmax cross-entropy loss, our proposal is established on the assumption that the deep features of the training set follow a Gaussian Mixture distribution. By involving a classification margin and a likelihood regularization, the L-GM loss facilitates both a high classification performance and an accurate modeling of the training feature distribution. As such, the L-GM loss is superior to the softmax loss and its major variants in the sense that besides classification, it can be readily used to distinguish abnormal inputs, such as the adversarial examples, based on their features' likelihood to the training feature distribution. Extensive experiments on various recognition benchmarks like MNIST, CIFAR, ImageNet and LFW, as well as on adversarial examples demonstrate the effectiveness of our proposal.
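The idea of flagging abnormal inputs by their features' likelihood under the training feature distribution can be sketched as follows. This is an illustration under stated assumptions rather than the paper's procedure: it assumes identity covariances (so low likelihood corresponds to a large distance to the nearest class mean), and the threshold rule (a quantile of training-set scores) and the names `min_class_distance` and `flag_abnormal` are hypothetical.

```python
import numpy as np

def min_class_distance(features, means):
    """Half squared distance from each feature to its nearest class mean.

    Under an identity-covariance Gaussian mixture (an assumption here),
    a larger value means a lower likelihood under every component.
    """
    diff = features[:, None, :] - means[None, :, :]
    return 0.5 * np.sum(diff ** 2, axis=2).min(axis=1)

def flag_abnormal(features, means, train_features, quantile=0.99):
    """Flag inputs whose score exceeds a quantile of the training scores."""
    threshold = np.quantile(min_class_distance(train_features, means), quantile)
    return min_class_distance(features, means) > threshold
```

A feature far from all class means is flagged, while one close to a mean is not. The quantile controls how many training-like inputs are rejected and would need tuning in practice.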
