Viktor Prasanna

ConsensusDrop: Fusing Visual and Cross-Modal Saliency for Efficient Vision Language Models

Feb 01, 2026

Abstract:Vision-Language Models (VLMs) are expensive because the LLM processes hundreds of largely redundant visual tokens. Existing token reduction methods typically exploit either vision-encoder saliency (broad but query-agnostic) or LLM cross-attention (query-aware but sparse and costly). We show that neither signal alone is sufficient: fusing them consistently improves performance compared to unimodal visual token selection (ranking). However, making such fusion practical is non-trivial: cross-modal saliency is usually only available inside the LLM (too late for efficient pre-LLM pruning), and the two signals are inherently asymmetric, so naive fusion underutilizes their complementary strengths. We propose ConsensusDrop, a training-free framework that derives a consensus ranking by reconciling vision encoder saliency with query-aware cross-attention, retaining the most informative tokens while compressing the remainder via encoder-guided token merging. Across LLaVA-1.5/NeXT, Video-LLaVA, and other open-source VLMs, ConsensusDrop consistently outperforms prior pruning methods under identical token budgets and delivers a stronger accuracy-efficiency Pareto frontier, preserving near-baseline accuracy even at aggressive token reductions while reducing TTFT and KV cache footprint. Our code will be open-sourced.
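The idea of fusing two saliency signals into one ranking can be illustrated with a minimal sketch. This is not the ConsensusDrop algorithm: the rank normalization, the mixing weight `alpha`, and the function names are assumptions made for illustration, and the encoder-guided merging of dropped tokens is omitted.

```python
def rank_scores(scores):
    """Map raw scores to normalized ranks in [0, 1]; 1.0 marks the most salient token."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    for position, idx in enumerate(order):
        ranks[idx] = position / max(len(scores) - 1, 1)
    return ranks


def consensus_select(visual_saliency, cross_modal_saliency, keep, alpha=0.5):
    """Return (kept, dropped) token indices under a fused ranking.

    alpha is a hypothetical mixing weight, not a parameter taken from the paper.
    Rank normalization puts the two signals on a common scale before mixing.
    """
    v = rank_scores(visual_saliency)
    c = rank_scores(cross_modal_saliency)
    fused = [alpha * a + (1 - alpha) * b for a, b in zip(v, c)]
    order = sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
    return sorted(order[:keep]), sorted(order[keep:])


# Toy scores for four visual tokens (made-up values).
visual = [0.9, 0.1, 0.5, 0.3]
cross = [0.2, 0.05, 0.8, 0.6]
kept, dropped = consensus_select(visual, cross, keep=2)
print(kept, dropped)
```

Token 0 ranks highest visually and token 2 highest under the query-aware signal, so a fused ranking keeps both, whereas either signal alone would order the remaining tokens differently.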

Primitive-Driven Acceleration of Hyperdimensional Computing for Real-Time Image Classification

Jan 27, 2026

Abstract:Hyperdimensional Computing (HDC) represents data using extremely high-dimensional, low-precision vectors, termed hypervectors (HVs), and performs learning and inference through lightweight, noise-tolerant operations. However, the high dimensionality, sparsity, and repeated data movement involved in HDC make these computations difficult to accelerate efficiently on conventional processors. As a result, executing core HDC operations (binding, permutation, bundling, and similarity search) on CPUs or GPUs often leads to suboptimal utilization, memory bottlenecks, and limits on real-time performance. In this paper, our contributions are two-fold. First, we develop an image-encoding algorithm that, similar in spirit to convolutional neural networks, maps local image patches to hypervectors enriched with spatial information. These patch-level hypervectors are then merged into a global representation using the fundamental HDC operations, enabling spatially sensitive and robust image encoding. This encoder achieves 95.67% accuracy on MNIST and 85.14% on Fashion-MNIST, outperforming prior HDC-based image encoders. Second, we design an end-to-end accelerator that implements these compute operations on an FPGA through a pipelined architecture that exploits parallelism both across the hypervector dimensionality and across the set of image patches. Our Alveo U280 implementation delivers 0.09 ms inference latency, achieving up to 1300x and 60x speedup over state-of-the-art CPU and GPU baselines, respectively.
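The four core operations named in the abstract can be sketched on bipolar (+1/-1) hypervectors. The dimensionality, the bipolar encoding, and the tie-breaking rule below are common textbook choices assumed for illustration; they are not details taken from the paper's encoder or accelerator.

```python
import random

DIM = 2048  # hypothetical dimensionality chosen for this demo


def random_hv(rng):
    """Draw a random bipolar hypervector."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def bind(a, b):
    """Binding: element-wise product; self-inverse for bipolar vectors."""
    return [x * y for x, y in zip(a, b)]


def permute(a, shift=1):
    """Permutation: cyclic rotation to the right by `shift` positions."""
    shift %= len(a)
    return a[-shift:] + a[:-shift]


def bundle(hvs):
    """Bundling: element-wise majority vote (sign of the sum, ties go to +1)."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*hvs)]


def similarity(a, b):
    """Normalized dot product in [-1, 1], used for similarity search."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
a, b, c, unrelated = (random_hv(rng) for _ in range(4))
bundled = bundle([a, b, c])
print(similarity(bundled, a), similarity(bundled, unrelated))
```

A bundled vector stays measurably similar to each of its inputs while remaining nearly orthogonal to unrelated vectors, which is the property similarity search relies on.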

TIGER-MARL: Enhancing Multi-Agent Reinforcement Learning with Temporal Information through Graph-based Embeddings and Representations

Nov 11, 2025

Abstract:In this paper, we propose capturing and utilizing Temporal Information through Graph-based Embeddings and Representations, or TIGER, to enhance multi-agent reinforcement learning (MARL). We explicitly model how inter-agent coordination structures evolve over time. Most MARL approaches rely on static or per-step relational graphs and therefore overlook the temporal evolution of interactions that naturally arise as agents adapt, move, or reorganize cooperation strategies. Capturing such evolving dependencies is key to achieving robust and adaptive coordination. To this end, TIGER constructs dynamic temporal graphs of MARL agents, connecting their current and historical interactions. It then employs a temporal attention-based encoder to aggregate information across these structural and temporal neighborhoods, yielding time-aware agent embeddings that guide cooperative policy learning. Through extensive experiments on two coordination-intensive benchmarks, we show that TIGER consistently outperforms diverse value-decomposition and graph-based MARL baselines in task performance and sample efficiency. Furthermore, we conduct comprehensive ablation studies to isolate the impact of key design parameters in TIGER, revealing how structural and temporal factors can jointly shape effective policy learning in MARL. All code is available at https://github.com/Nikunj-Gupta/tiger-marl.
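One way to picture a graph that connects current and historical interactions is sketched below. The node definition `(agent, step)`, the `window` parameter, and the edge rules are assumptions for illustration only; the abstract does not specify how TIGER builds its graphs, and the attention-based encoder is not shown.

```python
def build_temporal_graph(snapshots, window=2):
    """Build directed edges over (agent, step) nodes.

    snapshots[t] lists the (agent_i, agent_j) interactions observed at step t.
    Structural edges link interacting agents within a step; temporal edges link
    an agent's earlier appearances (up to `window` steps back) to its current one.
    """
    edges = set()
    seen = []  # seen[t] holds the agents active at step t
    for t, pairs in enumerate(snapshots):
        active = {agent for pair in pairs for agent in pair}
        seen.append(active)
        for i, j in pairs:
            edges.add(((i, t), (j, t)))
            edges.add(((j, t), (i, t)))
        for s in range(max(0, t - window), t):
            for agent in active & seen[s]:
                edges.add(((agent, s), (agent, t)))
    return edges


# Three steps of pairwise interactions among agents 0, 1, and 2 (toy data).
snapshots = [[(0, 1)], [(1, 2)], [(0, 2)]]
graph = build_temporal_graph(snapshots, window=2)
print(len(graph))
```

Shrinking `window` drops the longer-range temporal edges, which is one simple knob for trading history depth against graph size.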

ScalableHD: Scalable and High-Throughput Hyperdimensional Computing Inference on Multi-Core CPUs

Jun 10, 2025

Abstract:Hyperdimensional Computing (HDC) is a brain-inspired computing paradigm that represents and manipulates information using high-dimensional vectors, called hypervectors (HVs). Traditional HDC methods, while robust to noise and inherently parallel, rely on single-pass, non-parametric training and often suffer from low accuracy. To address this, recent approaches adopt iterative training of base and class HVs, typically accelerated on GPUs. Inference, however, remains lightweight and well-suited for real-time execution. Yet, efficient HDC inference has been studied almost exclusively on specialized hardware such as FPGAs and GPUs, with limited attention to general-purpose multi-core CPUs. To address this gap, we propose ScalableHD for scalable and high-throughput HDC inference on multi-core CPUs. ScalableHD employs a two-stage pipelined execution model, where each stage is parallelized across cores and processes chunks of base and class HVs. Intermediate results are streamed between stages using a producer-consumer mechanism, enabling on-the-fly consumption and improving cache locality. To maximize performance, ScalableHD integrates memory tiling and NUMA-aware worker-to-core binding. Further, it features two execution variants tailored for small and large batch sizes, each designed to exploit compute parallelism based on workload characteristics while mitigating the memory-bound compute pattern that limits HDC inference performance on modern multi-core CPUs. ScalableHD achieves up to 10x speedup in throughput (samples per second) over state-of-the-art baselines such as TorchHD, across a diverse set of tasks ranging from human activity recognition to image classification, while preserving task accuracy. Furthermore, ScalableHD exhibits robust scalability: increasing the number of cores yields near-proportional throughput improvements.
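The two-stage producer-consumer structure can be sketched with a bounded queue between an encoding stage and a classification stage. This only illustrates the streaming hand-off: the function names, the sign-projection encoding, and the chunk size are assumptions, and Python threads do not reproduce the per-stage multi-core parallelism, memory tiling, or NUMA-aware binding described in the abstract.

```python
import queue
import threading


def run_pipeline(samples, base_hvs, class_hvs, chunk=2):
    """Stage 1 encodes samples chunk by chunk; stage 2 classifies chunks as they arrive."""
    handoff = queue.Queue(maxsize=4)  # bounded buffer between the two stages
    predictions = []

    def encode_stage():
        for start in range(0, len(samples), chunk):
            batch = samples[start:start + chunk]
            # Assumed encoding: sign of each sample's projection onto the base HVs.
            encoded = [
                [1 if sum(x * w for x, w in zip(s, col)) >= 0 else -1
                 for col in zip(*base_hvs)]
                for s in batch
            ]
            handoff.put(encoded)
        handoff.put(None)  # sentinel: no more chunks

    def classify_stage():
        while (encoded := handoff.get()) is not None:
            for hv in encoded:
                scores = [sum(a * b for a, b in zip(hv, c)) for c in class_hvs]
                predictions.append(scores.index(max(scores)))

    producer = threading.Thread(target=encode_stage)
    consumer = threading.Thread(target=classify_stage)
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return predictions


# Toy model: 2 input features, 4-dimensional hypervectors, 2 classes.
base_hvs = [[1, -1, 1, 1], [-1, 1, 1, -1]]
class_hvs = [[1, -1, 1, 1], [-1, 1, 1, -1]]
samples = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.5]]
predictions = run_pipeline(samples, base_hvs, class_hvs)
print(predictions)
```

Because the consumer starts on each chunk as soon as it is produced, encoded hypervectors never need to be materialized for the whole batch at once.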

RECIPE-TKG: From Sparse History to Structured Reasoning for LLM-based Temporal Knowledge Graph Completion

May 23, 2025

Abstract:Temporal Knowledge Graphs (TKGs) represent dynamic facts as timestamped relations between entities. TKG completion involves forecasting missing or future links, requiring models to reason over time-evolving structure. While LLMs show promise for this task, existing approaches often overemphasize supervised fine-tuning and struggle particularly when historical evidence is limited or missing. We introduce RECIPE-TKG, a lightweight and data-efficient framework designed to improve accuracy and generalization in settings with sparse historical context. It combines (1) rule-based multi-hop retrieval for structurally diverse history, (2) contrastive fine-tuning of lightweight adapters to encode relational semantics, and (3) test-time semantic filtering to iteratively refine generations based on embedding similarity. Experiments on four TKG benchmarks show that RECIPE-TKG outperforms previous LLM-based approaches, achieving up to 30.6% relative improvement in Hits@10. Moreover, our proposed framework produces more semantically coherent predictions, even for the samples with limited historical context.
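For readers unfamiliar with the metric, Hits@k and a relative improvement can be computed as in the sketch below. The function names and all numbers are illustrative assumptions; none of the values are results from the paper.

```python
def hits_at_k(ranked_predictions, gold, k=10):
    """Fraction of queries whose gold answer appears among the top-k predictions."""
    hits = sum(1 for preds, answer in zip(ranked_predictions, gold) if answer in preds[:k])
    return hits / len(gold)


def relative_improvement(new, old):
    """Relative gain of `new` over `old`, e.g. 0.2 means 20% better."""
    return (new - old) / old


# Two toy queries with ranked candidate entities (made-up data).
ranked = [["Paris", "Berlin"], ["Tokyo", "Seoul"]]
gold = ["Berlin", "Osaka"]
score = hits_at_k(ranked, gold, k=2)
print(score, relative_improvement(0.60, 0.50))
```

A relative improvement is measured against the baseline score, so moving from 0.50 to 0.60 Hits@10 is a 20% relative gain even though the absolute gain is 10 points.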

LocAgent: Graph-Guided LLM Agents for Code Localization

Mar 12, 2025

Abstract:Code localization, identifying precisely where in a codebase changes need to be made, is a fundamental yet challenging task in software maintenance. Existing approaches struggle to efficiently navigate complex codebases when identifying relevant code sections. The challenge lies in bridging natural language problem descriptions with the appropriate code elements, often requiring reasoning across hierarchical structures and multiple dependencies. We introduce LocAgent, a framework that addresses code localization through graph-based representation. By parsing codebases into directed heterogeneous graphs, LocAgent creates a lightweight representation that captures code structures (files, classes, functions) and their dependencies (imports, invocations, inheritance), enabling LLM agents to effectively search and locate relevant entities through powerful multi-hop reasoning. Experimental results on real-world benchmarks demonstrate that our approach significantly enhances accuracy in code localization. Notably, our method with the fine-tuned Qwen-2.5-Coder-Instruct-32B model achieves comparable results to SOTA proprietary models at greatly reduced cost (approximately 86% reduction), reaching up to 92.7% accuracy on file-level localization while improving downstream GitHub issue resolution success rates by 12% for multiple attempts (Pass@10). Our code is available at https://github.com/gersteinlab/LocAgent.
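A directed heterogeneous code graph and a bounded multi-hop search over it might look like the sketch below. The toy codebase, the node naming scheme, and the functions `build_graph` and `multi_hop` are hypothetical; they are not LocAgent's API or its graph schema.

```python
from collections import deque

# (source, relation, target) edges for a hypothetical three-file codebase.
EDGES = [
    ("app.py", "contains", "app.py:main"),
    ("app.py", "imports", "utils.py"),
    ("utils.py", "contains", "utils.py:parse"),
    ("app.py:main", "invokes", "utils.py:parse"),
    ("utils.py", "contains", "utils.py:Base"),
    ("models.py", "contains", "models.py:User"),
    ("models.py:User", "inherits", "utils.py:Base"),
]


def build_graph(edges):
    """Adjacency list keyed by node, with typed outgoing edges."""
    graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))
        graph.setdefault(dst, [])
    return graph


def multi_hop(graph, start, max_hops, relations=None):
    """Breadth-first search returning {node: hop distance} within max_hops.

    If `relations` is given, only edges of those types are followed.
    """
    dist = {start: 0}
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if dist[node] == max_hops:
            continue
        for rel, nxt in graph[node]:
            if relations is not None and rel not in relations:
                continue
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                frontier.append(nxt)
    return dist


graph = build_graph(EDGES)
reachable = multi_hop(graph, "app.py", max_hops=2)
print(reachable)
```

Filtering by relation type (for example, following only `imports` edges) lets a search stay within one kind of dependency instead of expanding the whole neighborhood.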

Deep Meta Coordination Graphs for Multi-agent Reinforcement Learning

Feb 06, 2025

Abstract:This paper presents deep meta coordination graphs (DMCG) for learning cooperative policies in multi-agent reinforcement learning (MARL). Coordination graph formulations encode local interactions and accordingly factorize the joint value function of all agents to improve efficiency in MARL. However, existing approaches rely solely on pairwise relations between agents, which potentially oversimplifies complex multi-agent interactions. DMCG goes beyond these simple direct interactions by also capturing useful higher-order and indirect relationships among agents. It generates novel graph structures accommodating multiple types of interactions and arbitrary lengths of multi-hop connections in coordination graphs to model such interactions. It then employs a graph convolutional network module to learn powerful representations in an end-to-end manner. We demonstrate its effectiveness in multiple coordination problems in MARL where other state-of-the-art methods can suffer from sample inefficiency or fail entirely. All code is available at https://github.com/Nikunj-Gupta/dmcg-marl.
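Multi-hop and mixed-type connections can be illustrated by composing adjacency matrices. This is a generic graph construction offered under stated assumptions, not DMCG's learned graph generation: the boolean product, the helper names, and the toy matrices are all illustrative, and the graph convolutional module is not shown.

```python
def compose(a, b):
    """Boolean matrix product: entry (i, j) is 1 if some k has i -> k in a and k -> j in b."""
    inner = len(b)
    return [
        [1 if any(a[i][k] and b[k][j] for k in range(inner)) else 0
         for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def k_hop(adjacency, hops):
    """Agents connected by a walk of exactly `hops` edges."""
    result = adjacency
    for _ in range(hops - 1):
        result = compose(result, adjacency)
    return result


# Four agents on a chain 0 - 1 - 2 - 3 (pairwise edges only).
chain = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
two_hop = k_hop(chain, 2)
three_hop = k_hop(chain, 3)

# Two hypothetical interaction types: type A links 0 -> 1, type B links 1 -> 2.
type_a = [[0, 1, 0], [0, 0, 0], [0, 0, 0]]
type_b = [[0, 0, 0], [0, 0, 1], [0, 0, 0]]
a_then_b = compose(type_a, type_b)
print(two_hop[0], three_hop[0], a_then_b[0])
```

Agents 0 and 3 share no pairwise edge, yet the three-hop matrix links them, which is the kind of indirect relationship a purely pairwise coordination graph cannot express.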

ClusterViG: Efficient Globally Aware Vision GNNs via Image Partitioning

Jan 18, 2025

Abstract:Convolutional Neural Networks (CNN) and Vision Transformers (ViT) have dominated the field of Computer Vision (CV). Graph Neural Networks (GNN) have performed remarkably well across diverse domains because they can represent complex relationships via unstructured graphs. However, the applicability of GNNs for visual tasks was unexplored till the introduction of Vision GNNs (ViG). Despite the success of ViGs, their performance is severely bottlenecked due to the expensive k-Nearest Neighbors (k-NN) based graph construction. Recent works addressing this bottleneck impose constraints on the flexibility of GNNs to build unstructured graphs, undermining their core advantage while introducing additional inefficiencies. To address these issues, in this paper, we propose a novel method called Dynamic Efficient Graph Convolution (DEGC) for designing efficient and globally aware ViGs. DEGC partitions the input image and constructs graphs in parallel for each partition, improving graph construction efficiency. Further, DEGC integrates local intra-graph and global inter-graph feature learning, enabling enhanced global context awareness. Using DEGC as a building block, we propose a novel CNN-GNN architecture, ClusterViG, for CV tasks. Extensive experiments indicate that ClusterViG reduces end-to-end inference latency for vision tasks by up to 5x when compared against a suite of models such as ViG, ViHGNN, PVG, and GreedyViG, with a similar model parameter count. Additionally, ClusterViG reaches state-of-the-art performance on image classification, object detection, and instance segmentation tasks, demonstrating the effectiveness of the proposed globally aware learning strategy. Finally, input partitioning performed by DEGC enables ClusterViG to be trained efficiently on higher-resolution images, underscoring the scalability of our approach.
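Why partitioning helps k-NN graph construction can be seen in a small sketch: restricting the neighbor search to each partition shrinks the number of pairwise distance computations. The contiguous-block partitioning and the function names below are assumptions; the abstract does not describe how DEGC partitions the image, and the partitions here are processed sequentially rather than in parallel.

```python
def knn_graph(features, indices, k):
    """Brute-force k-NN restricted to the given node indices."""
    neighbors = {}
    for i in indices:
        others = sorted(
            (j for j in indices if j != i),
            key=lambda j: sum((a - b) ** 2 for a, b in zip(features[i], features[j])),
        )
        neighbors[i] = others[:k]
    return neighbors


def partitioned_knn(features, num_partitions, k):
    """Build one k-NN graph per contiguous partition and merge the results."""
    n = len(features)
    size = -(-n // num_partitions)  # ceiling division
    graph = {}
    for start in range(0, n, size):
        block = list(range(start, min(start + size, n)))
        graph.update(knn_graph(features, block, k))
    return graph


# Four one-dimensional patch features (toy values), split into two partitions.
features = [[0.0], [1.0], [10.0], [11.0]]
local_graph = partitioned_knn(features, num_partitions=2, k=1)
print(local_graph)
```

With P equal partitions of N nodes, brute-force search compares roughly N²/P pairs instead of N², at the cost of missing cross-partition neighbors, which is why a separate global (inter-graph) step is needed for global context.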

Towards Ideal Temporal Graph Neural Networks: Evaluations and Conclusions after 10,000 GPU Hours

Dec 28, 2024

Abstract:Temporal Graph Neural Networks (TGNNs) have emerged as powerful tools for modeling dynamic interactions across various domains. The design space of TGNNs is notably complex, given the unique challenges in runtime efficiency and scalability raised by the evolving nature of temporal graphs. We contend that many of the existing works on TGNN modeling inadequately explore the design space, leading to suboptimal designs. Viewing TGNN models through a performance-focused lens often obstructs a deeper understanding of the advantages and disadvantages of each technique. Specifically, benchmarking efforts inherently evaluate models in their original designs and implementations, resulting in unclear accuracy comparisons and misleading runtime. To address these shortcomings, we propose a practical comparative evaluation framework that performs a design space search across well-known TGNN modules based on a unified, optimized code implementation. Using our framework, we make the first efforts towards addressing three critical questions in TGNN design, spending over 10,000 GPU hours: (1) investigating the efficiency of TGNN module designs, (2) analyzing how the effectiveness of these modules correlates with dataset patterns, and (3) exploring the interplay between multiple modules. Key outcomes of this directed investigative approach include demonstrating that most-recent neighbor sampling and the attention aggregator outperform uniform neighbor sampling and the MLP-Mixer aggregator, assessing static node memory as an effective node memory alternative, and showing that the choice between static and dynamic node memory should be based on the repetition patterns in the dataset. Our in-depth analysis of the interplay between TGNN modules and dataset patterns should provide a deeper insight into TGNN performance along with potential research directions for designing more general and effective TGNNs.
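The two neighbor sampling strategies compared in the abstract can be contrasted in a short sketch. The event format `(src, dst, timestamp)` and the function names are assumptions for illustration and do not come from the paper's framework.

```python
import random


def past_interactions(events, node, before):
    """List (timestamp, neighbor) pairs for interactions of `node` strictly before `before`."""
    return [
        (t, dst if src == node else src)
        for src, dst, t in events
        if node in (src, dst) and t < before
    ]


def most_recent_neighbors(events, node, before, k):
    """Keep the k latest neighbors, newest first."""
    history = sorted(past_interactions(events, node, before), reverse=True)
    return [nbr for _, nbr in history[:k]]


def uniform_neighbors(events, node, before, k, rng):
    """Sample k past neighbors uniformly at random, ignoring recency."""
    history = past_interactions(events, node, before)
    return [nbr for _, nbr in rng.sample(history, min(k, len(history)))]


# Toy interaction stream: (src, dst, timestamp).
events = [(0, 1, 1.0), (0, 2, 2.0), (3, 0, 3.0), (0, 4, 5.0)]
recent = most_recent_neighbors(events, node=0, before=4.0, k=2)
sampled = uniform_neighbors(events, node=0, before=4.0, k=2, rng=random.Random(0))
print(recent, sampled)
```

Both samplers exclude the event at time 5.0 because it lies in the future relative to the query time, which avoids leaking information into the prediction.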

Adversarial Training in Low-Label Regimes with Margin-Based Interpolation

Nov 27, 2024

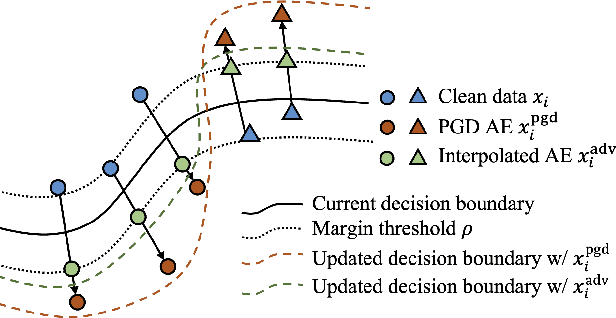

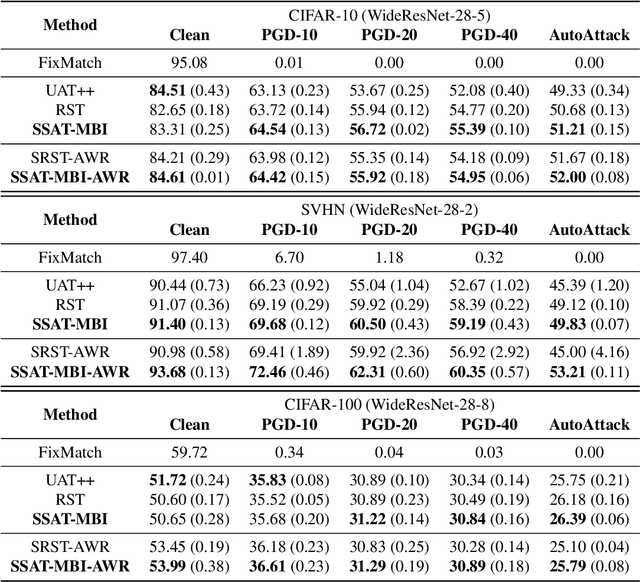

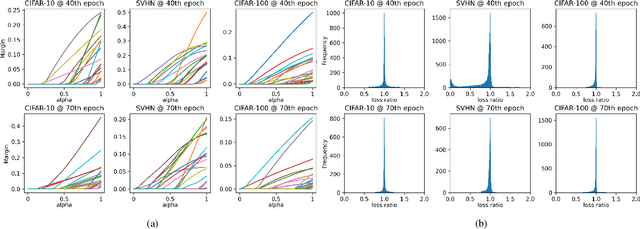

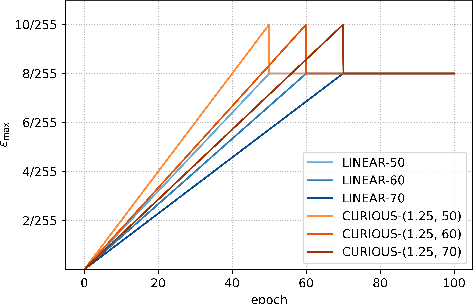

Abstract:Adversarial training has emerged as an effective approach to train robust neural network models that are resistant to adversarial attacks, even in low-label regimes where labeled data is scarce. In this paper, we introduce a novel semi-supervised adversarial training approach that enhances both robustness and natural accuracy by generating effective adversarial examples. Our method begins by applying linear interpolation between clean and adversarial examples to create interpolated adversarial examples that cross decision boundaries by a controlled margin. This sample-aware strategy tailors adversarial examples to the characteristics of each data point, enabling the model to learn from the most informative perturbations. Additionally, we propose a global epsilon scheduling strategy that progressively adjusts the upper bound of perturbation strengths during training. The combination of these strategies allows the model to develop increasingly complex decision boundaries with better robustness and natural accuracy. Empirical evaluations show that our approach effectively enhances performance against various adversarial attacks, such as PGD and AutoAttack.
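The interpolation step can be sketched for a linear classifier, where the point that crosses the decision boundary by a chosen margin has a closed form. Everything here is an assumption made for illustration: the linear model, the definition of margin as the signed score `y * f(x)`, the closed-form interpolation weight, and the linear epsilon ramp. The paper's method targets neural networks and may define these quantities differently.

```python
def signed_margin(w, b, x, y):
    """y * f(x) for a linear model f(x) = w.x + b; positive means correctly classified."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)


def interpolate_to_margin(w, b, x, x_adv, y, margin):
    """Mix clean x and adversarial x_adv so the result sits `margin` past the boundary.

    Because f is linear, the signed margin is linear in the mixing weight lam,
    so lam can be solved for directly and then clipped to [0, 1].
    """
    m_clean = signed_margin(w, b, x, y)
    m_adv = signed_margin(w, b, x_adv, y)
    if m_clean <= m_adv:
        # Degenerate case: the attack did not reduce the margin; fall back to x_adv.
        return list(x_adv)
    lam = (m_clean + margin) / (m_clean - m_adv)
    lam = min(1.0, max(0.0, lam))
    return [(1 - lam) * a + lam * c for a, c in zip(x, x_adv)]


def epsilon_schedule(step, total_steps, eps_max):
    """Hypothetical global schedule: ramp the perturbation bound linearly up to eps_max."""
    return eps_max * min(1.0, step / total_steps)


w, b = [1.0, 0.0], 0.0
x, y = [1.0, 0.0], 1
x_adv = [-1.0, 0.0]
x_mix = interpolate_to_margin(w, b, x, x_adv, y, margin=0.25)
print(x_mix, signed_margin(w, b, x_mix, y))
```

For a nonlinear network the weight would have to be found numerically (for example by a line search along the segment between the clean and adversarial points), since the margin is no longer linear in the mixing weight.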
