Tudor Dumitras

Like Oil and Water: Group Robustness Methods and Poisoning Defenses May Be at Odds

Apr 02, 2025

Abstract: Group robustness has become a major concern in machine learning (ML) as conventional training paradigms were found to produce high error on minority groups. Without explicit group annotations, proposed solutions rely on heuristics that aim to identify and then amplify the minority samples during training. In our work, we first uncover a critical shortcoming of these methods: an inability to distinguish legitimate minority samples from poison samples in the training set. By amplifying poison samples as well, group robustness methods inadvertently boost the success rate of an adversary -- e.g., from $0\%$ without amplification to over $97\%$ with it. Notably, we supplement our empirical evidence with an impossibility result proving this inability of a standard heuristic under some assumptions. Moreover, scrutinizing recent poisoning defenses both in centralized and federated learning, we observe that they rely on similar heuristics to identify which samples should be eliminated as poisons. In consequence, minority samples are eliminated along with poisons, which damages group robustness -- e.g., from $55\%$ without the removal of the minority samples to $41\%$ with it. Finally, as they pursue opposing goals using similar heuristics, our attempt to alleviate the trade-off by combining group robustness methods and poisoning defenses falls short. By exposing this tension, we also hope to highlight how benchmark-driven ML scholarship can obscure the trade-offs among different metrics with potentially detrimental consequences.
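The abstract does not name the heuristic involved, so the following is only a minimal sketch under an assumed one: samples whose loss after an initial training pass exceeds a threshold are treated as likely minority samples and upweighted. The function name `amplify_high_loss`, the loss values, the threshold, and the upweight factor are all hypothetical. The sketch illustrates why such a rule cannot separate a legitimate minority sample from a poison sample when both are hard to fit.

```python
def amplify_high_loss(losses, threshold, upweight):
    """Return per-sample training weights.

    Assumed heuristic (not taken from the paper): a sample whose loss
    exceeds `threshold` is treated as a likely minority sample and
    receives weight `upweight`; every other sample keeps weight 1.0.
    """
    return [upweight if loss > threshold else 1.0 for loss in losses]


# Hypothetical per-sample losses after an initial training pass.
samples = [
    ("majority", 0.05),
    ("majority", 0.10),
    ("minority", 1.80),
    ("poison", 2.10),
]

weights = amplify_high_loss(
    [loss for _, loss in samples], threshold=1.0, upweight=5.0
)

for (kind, loss), weight in zip(samples, weights):
    print(f"{kind:8s} loss={loss:.2f} weight={weight}")
```

Under these made-up numbers the minority sample and the poison sample receive the same amplified weight, because the rule only looks at the loss and has no access to the origin of a sample.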

On the Effectiveness of Regularization Against Membership Inference Attacks

Jun 09, 2020

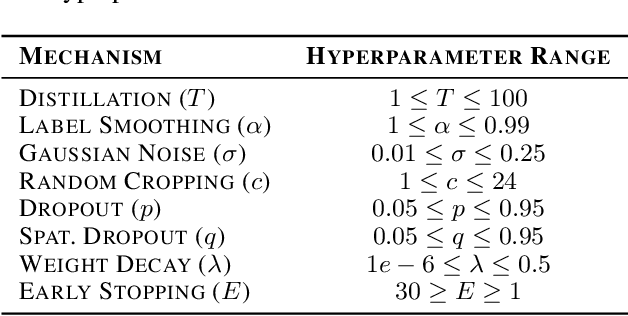

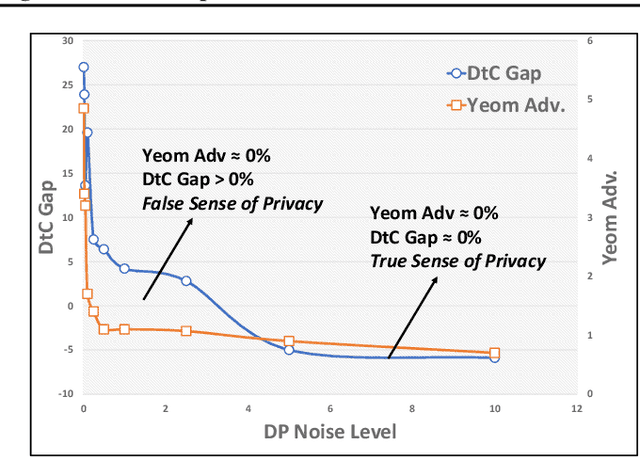

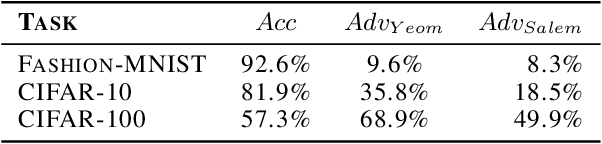

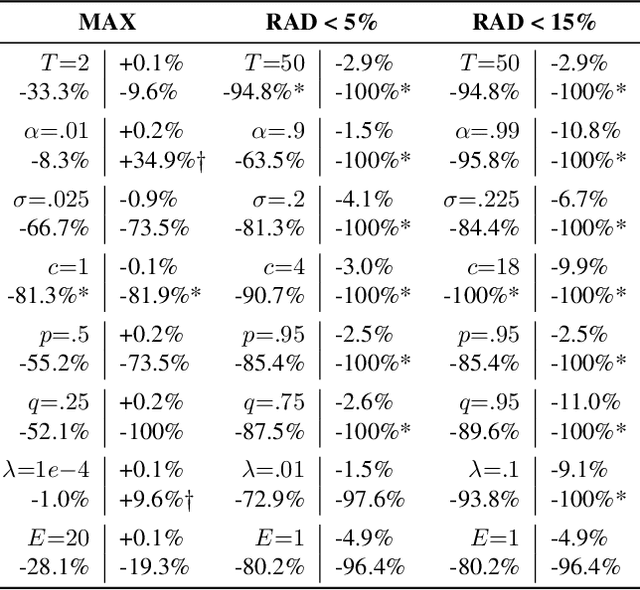

Abstract: Deep learning models often raise privacy concerns as they leak information about their training data. This enables an adversary to determine whether a data point was in a model's training set by conducting a membership inference attack (MIA). Prior work has conjectured that regularization techniques, which combat overfitting, may also mitigate the leakage. While many regularization mechanisms exist, their effectiveness against MIAs has not been studied systematically, and the resulting privacy properties are not well understood. We explore the lower bound for information leakage that practical attacks can achieve. First, we evaluate the effectiveness of 8 mechanisms in mitigating two recent MIAs, on three standard image classification tasks. We find that certain mechanisms, such as label smoothing, may inadvertently help MIAs. Second, we investigate the potential of improving the resilience to MIAs by combining complementary mechanisms. Finally, we quantify the opportunity of future MIAs to compromise privacy by designing a white-box 'distance-to-confident' (DtC) metric, based on adversarial sample crafting. Our metric reveals that, even when existing MIAs fail, the training samples may remain distinguishable from test samples. This suggests that regularization mechanisms can provide a false sense of privacy, even when they appear effective against existing MIAs.
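For readers unfamiliar with label smoothing, one of the mechanisms named in the abstract, here is a minimal sketch of its standard uniform form: a fraction `epsilon` of the probability mass is spread evenly over all classes. The function name `smooth_labels` and the value of `epsilon` are illustrative choices, not settings from the paper.

```python
def smooth_labels(one_hot, epsilon):
    """Uniform label smoothing.

    Each target entry y becomes (1 - epsilon) * y + epsilon / K,
    where K is the number of classes. The result still sums to 1.
    """
    num_classes = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / num_classes for y in one_hot]


# Four classes, true class at index 2, illustrative epsilon.
smoothed = smooth_labels([0.0, 0.0, 1.0, 0.0], epsilon=0.1)
print(smoothed)
```

With these inputs the true class keeps most of the mass and the other three classes share the remainder equally.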

How to Stop Off-the-Shelf Deep Neural Networks from Overthinking

Oct 16, 2018

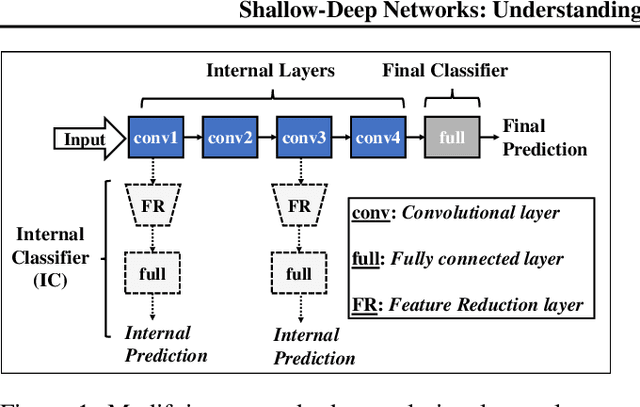

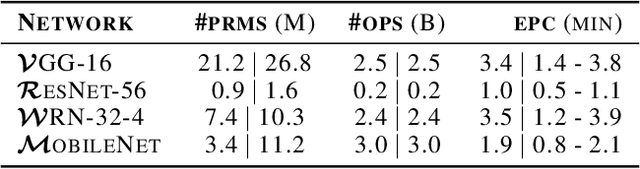

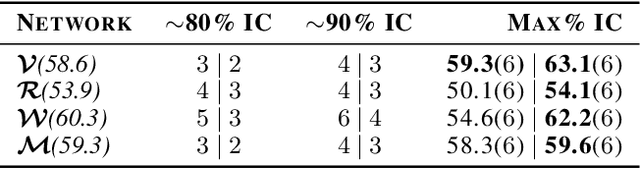

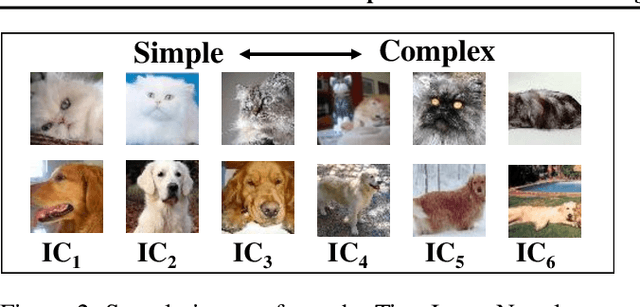

Abstract: While deep neural networks (DNNs) can perform complex classification tasks, most of their natural inputs do not necessitate the depth of the modern architectures. This leads to wasted computation, as the network overthinks on the simpler inputs. The overthinking problem could be prevented if standard DNNs could produce early predictions. However, prior work suggests that this is challenging in existing architectures, such as ResNet, as their internal layers are not trained for classification and optimizing them for accurate predictions hurts the end performance. In this paper, we explore the overthinking problem, and, as a remedy, we propose a generic modification to off-the-shelf DNNs: the Shallow-Deep Network (SDN). With this modification, a DNN can efficiently produce predictions from either shallow or deep layers, as appropriate for the given input. We employ feature reduction and a layer-wise objective function to train these progressively deeper internal classifiers while preserving the end performance. We can apply the SDN modification either by training from scratch or by tuning a pre-trained model. Experiments on four architectures (VGG, ResNet, WideResNet, and MobileNet) and three image classification tasks suggest that, for an average input, an SDN can produce a correct prediction before its middle layer. By avoiding unnecessary computation, the SDN can reduce the required number of operations for an input by 41% over the original network. Finally, we observe that disagreements among the early classifiers reliably indicate inputs where the network is likely to make a mistake. Building on this observation, we propose an internal confusion metric and a method to diagnose misclassifications by visualizing these disagreements.
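The abstract does not spell out how an exit point is chosen, so the sketch below assumes a simple confidence-threshold rule: stop at the first internal classifier whose top probability reaches a threshold, and fall back to the final classifier otherwise. The function name `early_exit_predict`, the probability vectors, and the threshold values are hypothetical. For brevity the sketch takes all classifier outputs as a precomputed list; a real early-exit network would evaluate the layers lazily and skip the deeper ones once it exits.

```python
def early_exit_predict(classifier_outputs, confidence_threshold):
    """Pick a prediction from the shallowest sufficiently confident classifier.

    classifier_outputs: list of probability vectors ordered from the
        shallowest internal classifier to the final one.
    Returns (predicted_class, exit_index).
    """
    for index, probs in enumerate(classifier_outputs):
        confidence = max(probs)
        if confidence >= confidence_threshold:
            return probs.index(confidence), index
    # No classifier was confident enough: use the final (deepest) output.
    final = classifier_outputs[-1]
    return final.index(max(final)), len(classifier_outputs) - 1


# Hypothetical outputs of three classifiers for one two-class input.
outputs = [[0.40, 0.60], [0.10, 0.90], [0.05, 0.95]]

early = early_exit_predict(outputs, confidence_threshold=0.8)
late = early_exit_predict(outputs, confidence_threshold=0.99)
print(early, late)
```

A lower threshold lets the input exit at the second classifier, while a threshold that no classifier meets falls through to the last one, which mirrors the trade-off between saved computation and confidence.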

Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks

Apr 03, 2018

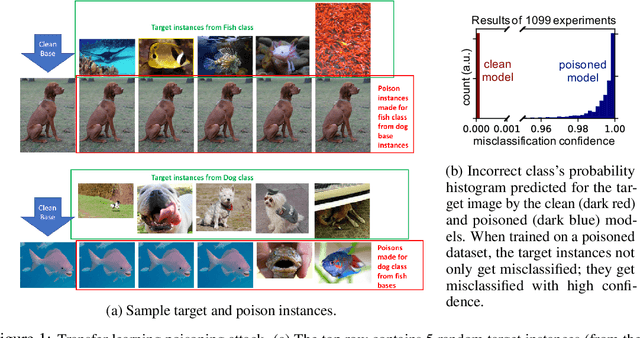

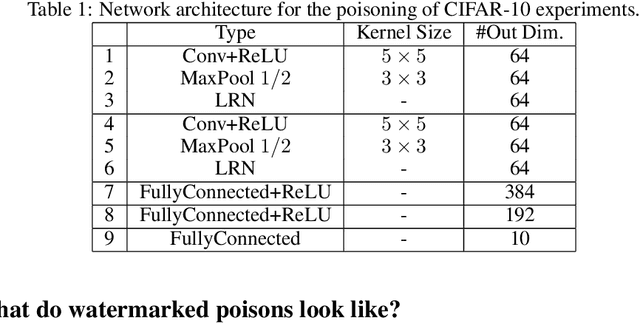

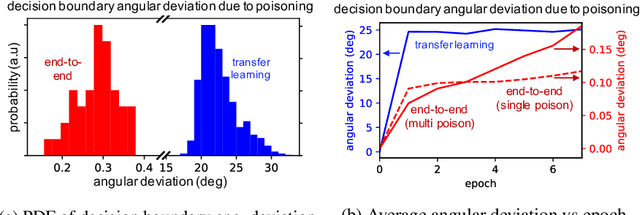

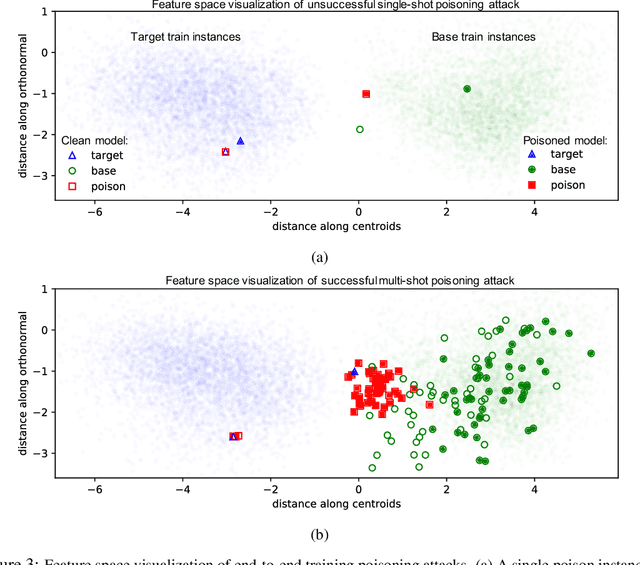

Abstract: Data poisoning is a type of adversarial attack on machine learning models wherein the attacker adds examples to the training set to manipulate the behavior of the model at test time. This paper explores a broad class of poisoning attacks on neural nets. The proposed attacks use "clean-labels"; they don't require the attacker to have any control over the labeling of training data. They are also targeted; they control the behavior of the classifier on a specific test instance without noticeably degrading classifier performance on other instances. For example, an attacker could add a seemingly innocuous image (that is properly labeled) to a training set for a face recognition engine, and control the identity of a chosen person at test time. Because the attacker does not need to control the labeling function, poisons could be entered into the training set simply by putting them online and waiting for them to be scraped by a data collection bot. We present an optimization-based method for crafting poisons, and show that a single poison image can control classifier behavior when transfer learning is used. For full end-to-end training, we present a "watermarking" strategy that makes poisoning reliable using multiple (~50) poisoned training instances. We demonstrate our method by generating poisoned frog images from the CIFAR dataset and using them to manipulate image classifiers.
