Tristan Anselm Kuder

Retina U-Net: Embarrassingly Simple Exploitation of Segmentation Supervision for Medical Object Detection

Nov 21, 2018

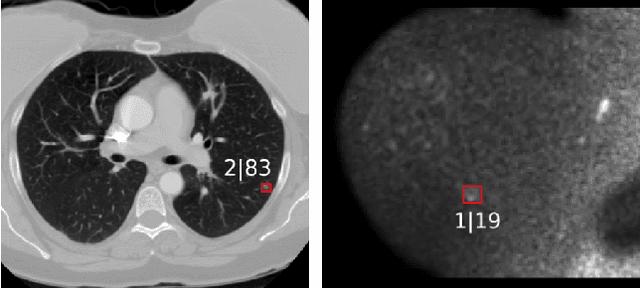

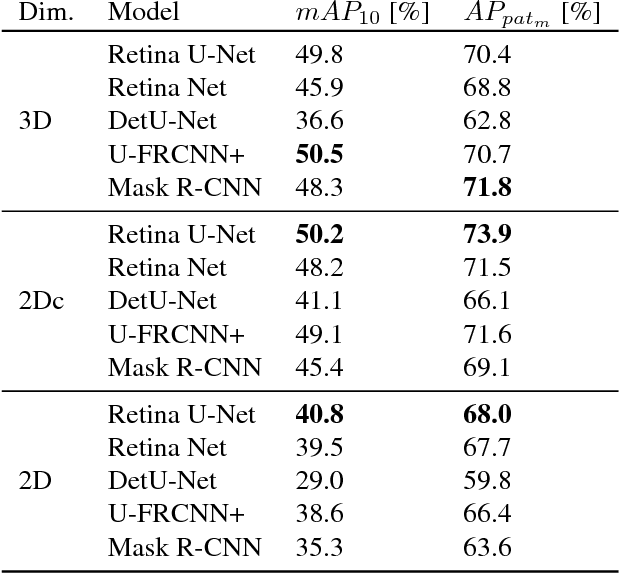

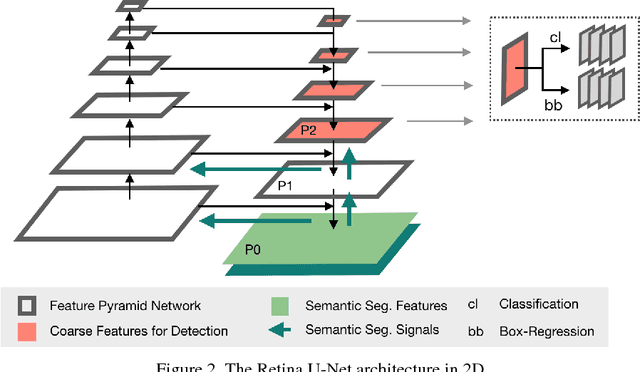

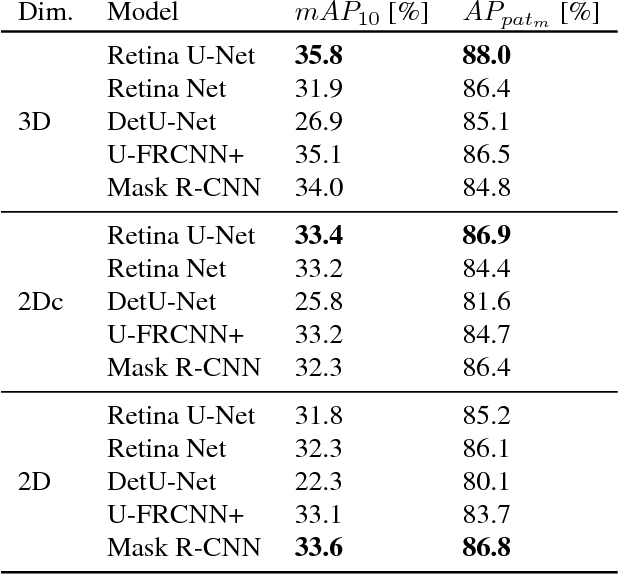

Abstract: The task of localizing and categorizing objects in medical images often remains formulated as a semantic segmentation problem. This approach, however, only indirectly solves the coarse localization task by predicting pixel-level scores, requiring ad-hoc heuristics when mapping back to object-level scores. State-of-the-art object detectors, on the other hand, allow for individual object scoring in an end-to-end fashion, while ironically trading in the ability to exploit the full pixel-wise supervision signal. This can be particularly disadvantageous in the setting of medical image analysis, where data sets are notoriously small. In this paper, we propose Retina U-Net, a simple architecture that naturally fuses the Retina Net one-stage detector with the U-Net architecture widely used for semantic segmentation in medical images. The proposed architecture recaptures discarded supervision signals by complementing object detection with an auxiliary task in the form of semantic segmentation, without introducing the additional complexity of previously proposed two-stage detectors. We evaluate the importance of full segmentation supervision on two medical data sets, provide an in-depth analysis on a series of toy experiments, and show how the corresponding performance gain grows in the limit of small data sets. Retina U-Net yields strong detection performance only reached by its more complex two-stage counterparts. Our framework, including all methods implemented for operation on 2D and 3D images, is available at github.com/pfjaeger/medicaldetectiontoolkit.
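The idea of complementing detection with an auxiliary segmentation task can be illustrated with a combined training loss. The following is only a minimal NumPy sketch under stated assumptions: the abstract does not give the loss terms, so the binary focal loss for the detection head, the pixel-wise cross-entropy for the segmentation head, and the weighting parameter `seg_weight` are illustrative choices, and all function names are hypothetical rather than taken from the authors' toolkit.

```python
import numpy as np

def focal_loss(probs, targets, alpha=0.25, gamma=2.0):
    """Binary focal loss averaged over anchors (assumed detection loss)."""
    probs = np.clip(probs, 1e-7, 1 - 1e-7)
    # Probability assigned to the true class of each anchor.
    p_t = np.where(targets == 1, probs, 1 - probs)
    alpha_t = np.where(targets == 1, alpha, 1 - alpha)
    return float(np.mean(-alpha_t * (1 - p_t) ** gamma * np.log(p_t)))

def pixel_cross_entropy(probs, mask):
    """Binary cross-entropy averaged over pixels (assumed segmentation loss)."""
    probs = np.clip(probs, 1e-7, 1 - 1e-7)
    return float(np.mean(-(mask * np.log(probs) + (1 - mask) * np.log(1 - probs))))

def combined_loss(anchor_probs, anchor_targets, seg_probs, seg_mask, seg_weight=1.0):
    """Detection loss plus a weighted auxiliary segmentation loss.

    seg_weight is a hypothetical hyperparameter, not a value from the paper.
    """
    detection = focal_loss(anchor_probs, anchor_targets)
    segmentation = pixel_cross_entropy(seg_probs, seg_mask)
    return detection + seg_weight * segmentation

# Toy inputs: two anchors and a 2x2 segmentation map.
anchor_probs = np.array([0.9, 0.1])
anchor_targets = np.array([1, 0])
seg_mask = np.array([[1.0, 0.0], [0.0, 1.0]])
good_seg = np.array([[0.9, 0.1], [0.1, 0.9]])
bad_seg = np.array([[0.4, 0.6], [0.6, 0.4]])

print(combined_loss(anchor_probs, anchor_targets, good_seg, seg_mask))
print(combined_loss(anchor_probs, anchor_targets, bad_seg, seg_mask))
```

In this sketch the detection predictions are identical in both calls, so the difference in total loss comes entirely from the pixel-wise term, which is the extra supervision signal the abstract refers to.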

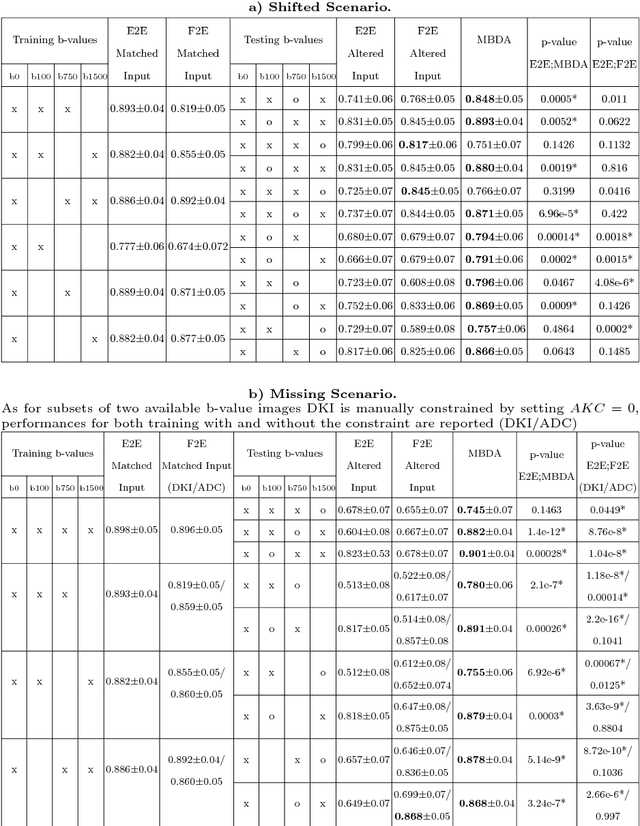

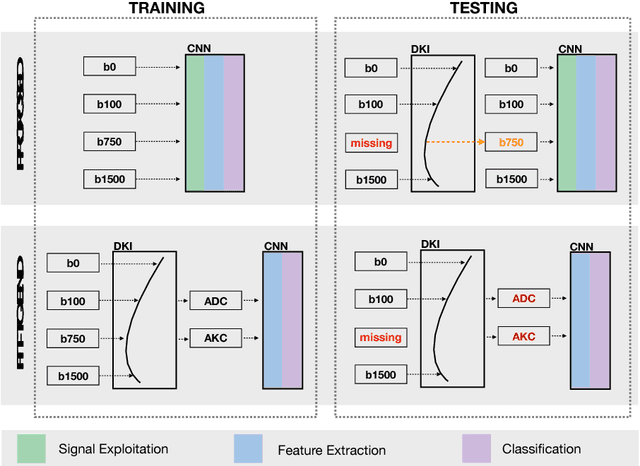

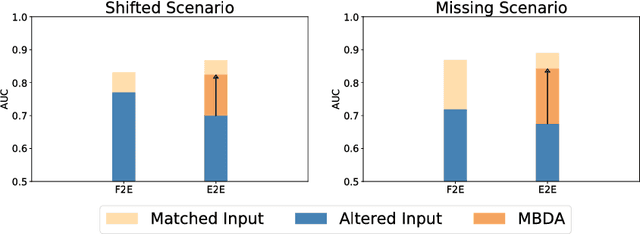

Domain Adaptation for Deviating Acquisition Protocols in CNN-based Lesion Classification on Diffusion-Weighted MR Images

Jul 17, 2018

Abstract: End-to-end deep learning improves breast cancer classification on diffusion-weighted MR images (DWI) using a convolutional neural network (CNN) architecture. A limitation of CNNs, as opposed to previous model-based approaches, is the dependence on the specific DWI input channels used during training. However, in the context of large-scale application, methods agnostic towards heterogeneous inputs are desirable, due to the high deviation of scanning protocols between clinical sites. We propose model-based domain adaptation to overcome input dependencies and avoid re-training of networks at clinical sites by restoring training inputs from altered input channels given during deployment. We demonstrate the method's significant increase in classification performance and superiority over implicit domain adaptation provided by training schemes operating on model parameters instead of raw DWI images.
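Restoring training inputs from altered deployment channels can be pictured as fitting a signal model to the b-values that are available and then evaluating it at the b-values the network was trained on. The sketch below assumes a mono-exponential decay S(b) = S0 * exp(-b * ADC); the abstract does not state which signal model is used, so this model, the function names, and the b-values are illustrative assumptions only.

```python
import numpy as np

def fit_monoexponential(b_values, signals):
    """Log-linear least-squares fit of S(b) = S0 * exp(-b * adc).

    The mono-exponential model is an assumption made for illustration.
    """
    b = np.asarray(b_values, dtype=float)
    log_s = np.log(np.asarray(signals, dtype=float))
    slope, intercept = np.polyfit(b, log_s, 1)
    return float(np.exp(intercept)), float(-slope)

def restore_channels(deploy_b, deploy_signals, train_b):
    """Synthesize signals at the training b-values from deployment data."""
    s0, adc = fit_monoexponential(deploy_b, deploy_signals)
    return s0 * np.exp(-np.asarray(train_b, dtype=float) * adc)

# Hypothetical protocols: the deployment site acquires different b-values
# (in s/mm^2) than those used when the network was trained.
true_s0, true_adc = 1000.0, 1.0e-3
deploy_b = [0.0, 50.0, 800.0]
train_b = [0.0, 100.0, 750.0, 1500.0]

# Noise-free synthetic voxel signal at the deployment b-values.
deploy_signals = true_s0 * np.exp(-np.array(deploy_b) * true_adc)
restored = restore_channels(deploy_b, deploy_signals, train_b)
print(restored)
```

The restored channels could then be passed to the unchanged, already trained network. With real, noisy data the fit would be applied per voxel and would not recover the signal exactly.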

Revealing Hidden Potentials of the q-Space Signal in Breast Cancer

May 22, 2017

Abstract: Mammography screening for early detection of breast lesions currently suffers from a high number of false-positive findings, which result in unnecessary invasive biopsies. Diffusion-weighted MR images (DWI) can help to reduce many of these false-positive findings prior to biopsy. Current approaches estimate tissue properties by means of quantitative parameters taken from generative, biophysical models fit to the q-space encoded signal under certain assumptions regarding noise and spatial homogeneity. This process is prone to fitting instability and partial information loss due to model simplicity. We reveal unexplored potentials of the signal by integrating all data processing components into a convolutional neural network (CNN) architecture that is designed to propagate clinical target information down to the raw input images. This approach enables simultaneous and target-specific optimization of image normalization, signal exploitation, global representation learning and classification. Using a multicentric data set of 222 patients, we demonstrate that our approach significantly improves clinical decision making with respect to the current state of the art.
