Tom Kelly

FacadeNet: Conditional Facade Synthesis via Selective Editing

Nov 03, 2023

Abstract: We introduce FacadeNet, a deep learning approach for synthesizing building facade images from diverse viewpoints. Our method employs a conditional GAN that takes a single view of a facade along with the desired viewpoint information and generates an image of the facade from that viewpoint. To precisely modify view-dependent elements like windows and doors while preserving the structure of view-independent components such as walls, we introduce a selective editing module. This module leverages image embeddings extracted from a pre-trained vision transformer. Our experiments demonstrate state-of-the-art performance on building facade generation, surpassing alternative methods.

WinSyn: A High Resolution Testbed for Synthetic Data

Oct 09, 2023

Abstract: We present WinSyn, a dataset consisting of high-resolution photographs and renderings of 3D models as a testbed for synthetic-to-real research. The dataset consists of 75,739 high-resolution photographs of building windows, including traditional and modern designs, captured globally. These include 89,318 cropped subimages of windows, of which 9,002 are semantically labeled. Further, we present our domain-matched photorealistic procedural model which enables experimentation over a variety of parameter distributions and engineering approaches. Our procedural model provides a second corresponding dataset of 21,290 synthetic images. This jointly developed dataset is designed to facilitate research in the field of synthetic-to-real learning and synthetic data generation. WinSyn allows experimentation into the factors that make it challenging for synthetic data to compete with real-world data. We perform ablations using our synthetic model to identify the salient rendering, materials, and geometric factors pertinent to accuracy within the labeling task. We chose windows as a benchmark because they exhibit a large variability of geometry and materials in their design, making them ideal to study synthetic data generation in a constrained setting. We argue that the dataset is a crucial step to enable future research in synthetic data generation for deep learning.

Projective Urban Texturing

Feb 04, 2022

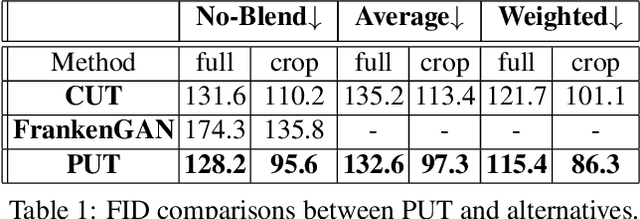

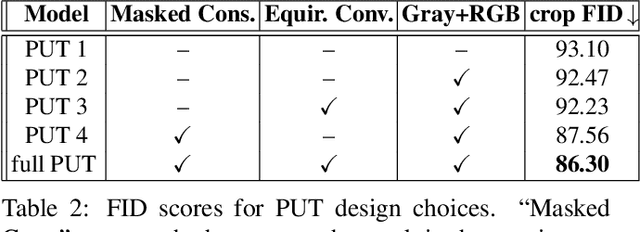

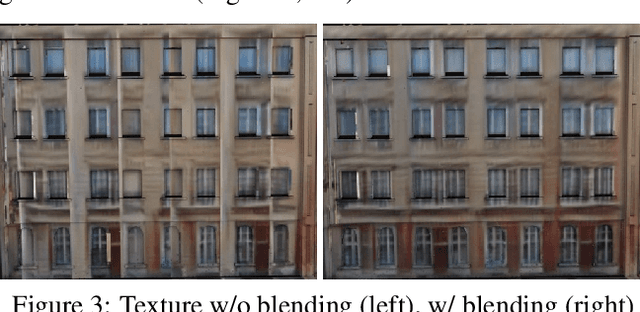

Abstract: This paper proposes a method for automatic generation of textures for 3D city meshes in immersive urban environments. Many recent pipelines capture or synthesize large quantities of city geometry using scanners or procedural modeling pipelines. Such geometry is intricate and realistic; however, the generation of photo-realistic textures for such large scenes remains a problem. We propose to generate textures for input target 3D meshes driven by the textural style present in readily available datasets of panoramic photos capturing urban environments. Re-targeting such 2D datasets to 3D geometry is challenging because the underlying shape, size, and layout of the urban structures in the photos do not correspond to the ones in the target meshes. Photos also often have objects (e.g., trees, vehicles) that may not even be present in the target geometry. To address these issues we present a method, called Projective Urban Texturing (PUT), which re-targets textural style from real-world panoramic images to unseen urban meshes. PUT relies on contrastive and adversarial training of a neural architecture designed for unpaired image-to-texture translation. The generated textures are stored in a texture atlas applied to the target 3D mesh geometry. To promote texture consistency, PUT employs an iterative procedure in which texture synthesis is conditioned on previously generated, adjacent textures. We demonstrate both quantitative and qualitative evaluation of the generated textures.

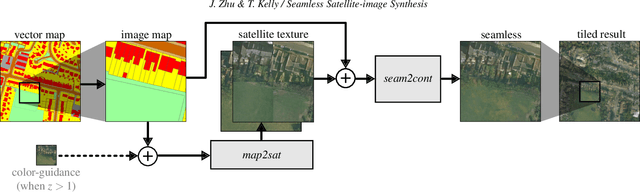

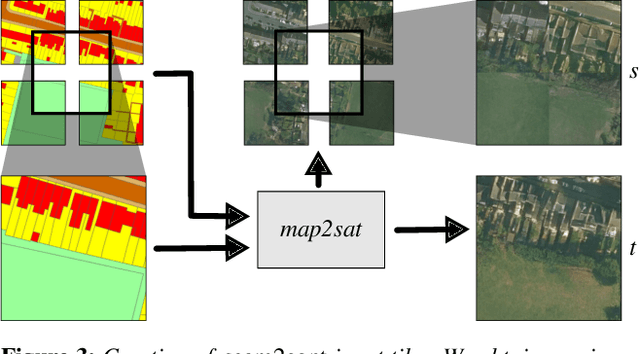

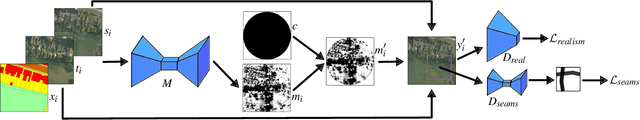

Seamless Satellite-image Synthesis

Nov 05, 2021

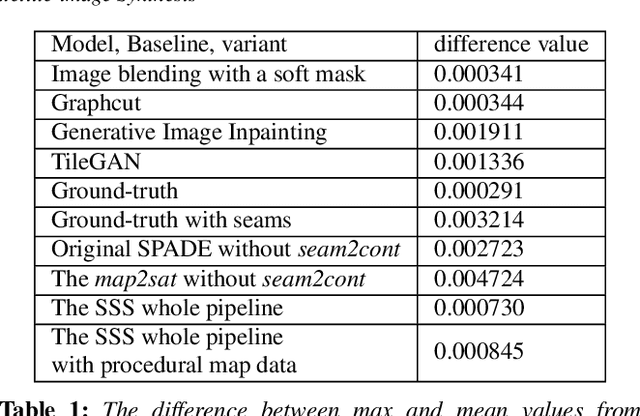

Abstract: We introduce Seamless Satellite-image Synthesis (SSS), a novel neural architecture to create scale-and-space continuous satellite textures from cartographic data. While 2D map data is cheap and easily synthesized, accurate satellite imagery is expensive and often unavailable or out of date. Our approach generates seamless textures over arbitrarily large spatial extents which are consistent through scale-space. To overcome tile size limitations in image-to-image translation approaches, SSS learns to remove seams between tiled images in a semantically meaningful manner. Scale-space continuity is achieved by a hierarchy of networks conditioned on style and cartographic data. Our qualitative and quantitative evaluations show that our system improves over the state-of-the-art in several key areas. We show applications to texturing procedurally generated maps and interactive satellite image manipulation.

SketchGen: Generating Constrained CAD Sketches

Jun 04, 2021

Abstract: Computer-aided design (CAD) is the most widely used modeling approach for technical design. The typical starting point in these designs is 2D sketches which can later be extruded and combined to obtain complex three-dimensional assemblies. Such sketches are typically composed of parametric primitives, such as points, lines, and circular arcs, augmented with geometric constraints linking the primitives, such as coincidence, parallelism, or orthogonality. Sketches can be represented as graphs, with the primitives as nodes and the constraints as edges. Training a model to automatically generate CAD sketches can enable several novel workflows, but is challenging due to the complexity of the graphs and the heterogeneity of the primitives and constraints. In particular, each type of primitive and constraint may require a record of different size and parameter types. We propose SketchGen as a generative model based on a transformer architecture to address the heterogeneity problem by carefully designing a sequential language for the primitives and constraints that allows distinguishing between different primitive or constraint types and their parameters, while encouraging our model to re-use information across related parameters, encoding shared structure. A particular highlight of our work is the ability to produce primitives linked via constraints, which enables the final output to be further regularized via a constraint solver. We evaluate our model by demonstrating constraint prediction for given sets of primitives and full sketch generation from scratch, showing that our approach significantly outperforms the state-of-the-art in CAD sketch generation.
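
To make the graph representation concrete, the following is a minimal sketch of a sketch graph (primitives as nodes, constraints as edges) flattened into a single token sequence. The class names, the `to_tokens` helper, and the token format (`<line>`, `<sep>`, `@0`, and so on) are hypothetical and chosen only for illustration; they are not the sequential language defined in the SketchGen paper.

```python
from dataclasses import dataclass


@dataclass
class Primitive:
    kind: str      # e.g. "point", "line"; hypothetical type names
    params: tuple  # parameter count differs per primitive type


@dataclass
class Constraint:
    kind: str      # e.g. "coincident", "parallel"
    refs: tuple    # indices of the primitives this edge links


def to_tokens(primitives, constraints):
    """Flatten a sketch graph into one token list (illustrative format)."""
    tokens = []
    for p in primitives:
        tokens.append(f"<{p.kind}>")               # type token tells the reader how many parameters follow
        tokens.extend(str(v) for v in p.params)
    tokens.append("<sep>")                         # separates nodes from edges
    for c in constraints:
        tokens.append(f"<{c.kind}>")
        tokens.extend(f"@{i}" for i in c.refs)     # pointers back to primitive indices
    tokens.append("<end>")
    return tokens


sketch_primitives = [
    Primitive("line", (0, 0, 4, 0)),
    Primitive("line", (0, 2, 4, 2)),
    Primitive("point", (4, 0)),
]
sketch_constraints = [
    Constraint("parallel", (0, 1)),
    Constraint("coincident", (0, 2)),
]

tokens = to_tokens(sketch_primitives, sketch_constraints)
print(tokens)
```

Leading each record with a type token is one simple way to handle records of different sizes in a single sequence, which is the heterogeneity issue the abstract describes.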

Generative Layout Modeling using Constraint Graphs

Nov 26, 2020

Abstract: We propose a new generative model for layout generation. We generate layouts in three steps. First, we generate the layout elements as nodes in a layout graph. Second, we compute constraints between layout elements as edges in the layout graph. Third, we solve for the final layout using constrained optimization. For the first two steps, we build on recent transformer architectures. The layout optimization implements the constraints efficiently. We show three practical contributions compared to the state of the art: our work requires no user input, produces higher quality layouts, and enables many novel capabilities for conditional layout generation.
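
The three steps can be illustrated with a toy one-dimensional example. This is a sketch under strong assumptions: the nodes and edges are hard-coded rather than generated by a transformer, the only constraint type is a hypothetical "lies entirely left of" relation, and a greedy push-right repair stands in for the constrained optimization used in the paper.

```python
# Step 1 (hard-coded here): layout elements as nodes,
# each with an initial x position and a width.
nodes = {
    "A": {"x": 0.0, "w": 3.0},
    "B": {"x": 2.0, "w": 3.0},
    "C": {"x": 4.0, "w": 2.0},
}

# Step 2 (hard-coded here): constraints as edges.
# An edge (a, b) means "a lies entirely left of b".
edges = [("A", "B"), ("B", "C")]


def solve_layout(nodes, edges):
    """Step 3: push elements right until every left-of edge is satisfied.

    Assumes the constraint graph is acyclic; len(nodes) passes are then
    enough for the positions to settle regardless of edge order.
    """
    x = {name: n["x"] for name, n in nodes.items()}
    for _ in range(len(nodes)):
        for a, b in edges:
            # b must start at or after the right edge of a
            x[b] = max(x[b], x[a] + nodes[a]["w"])
    return x


layout = solve_layout(nodes, edges)
print(layout)  # {'A': 0.0, 'B': 3.0, 'C': 6.0}
```

Separating element generation, constraint generation, and solving means the final positions always satisfy the predicted constraints, even when the initial positions overlap as they do for `A` and `B` here.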
