Tom Finley

Resonance: Replacing Software Constants with Context-Aware Models in Real-time Communication

Nov 23, 2020

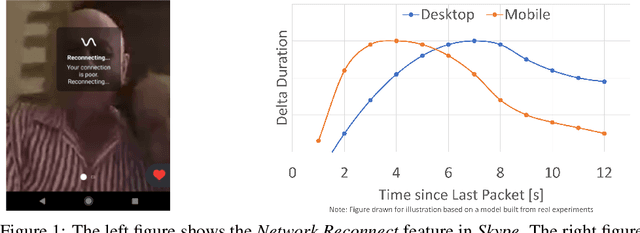

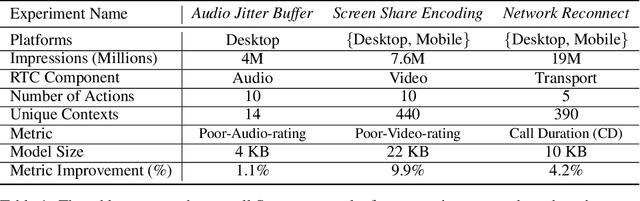

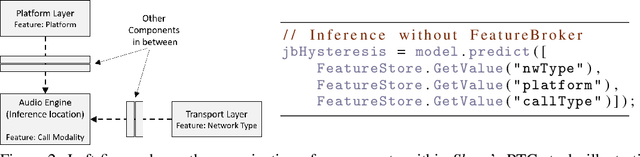

Abstract: Large software systems tune hundreds of 'constants' to optimize their runtime performance. These values are commonly derived through intuition, lab tests, or A/B tests. A 'one-size-fits-all' approach is often sub-optimal, as the best value depends on runtime context. In this paper, we provide an experimental approach to replace constants with learned contextual functions for Skype, a widely used real-time communication (RTC) application. We present Resonance, a system based on contextual bandits (CB). We describe experiences from three real-world experiments: applying it to the audio, video, and transport components in Skype. We surface a unique and practical challenge of performing machine learning (ML) inference in large software systems written using encapsulation principles. Finally, we open-source FeatureBroker, a library to reduce the friction in adopting ML models in such development environments.
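To make the idea of replacing a constant with a contextual function concrete, here is a minimal sketch of a tabular epsilon-greedy contextual bandit. It is not the Resonance implementation: the abstract does not describe the learner, and the class name `ContextualConstant`, the candidate values, the contexts (`"wifi"`, `"cellular"`), and the reward function are all hypothetical choices made for illustration.

```python
import random
from collections import defaultdict


class ContextualConstant:
    """Picks one of several candidate values for a 'constant', per context.

    Hypothetical illustration: a tabular epsilon-greedy learner that keeps a
    running mean reward for every (context, value) pair.
    """

    def __init__(self, candidates, epsilon=0.1, seed=0):
        self.candidates = list(candidates)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, value) -> times tried
        self.means = defaultdict(float)   # (context, value) -> mean reward

    def best(self, context):
        # Value with the highest estimated reward in this context.
        return max(self.candidates, key=lambda v: self.means[(context, v)])

    def choose(self, context):
        # Explore with probability epsilon, otherwise exploit the estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.candidates)
        return self.best(context)

    def update(self, context, value, reward):
        # Incremental mean update for the (context, value) pair just tried.
        key = (context, value)
        self.counts[key] += 1
        self.means[key] += (reward - self.means[key]) / self.counts[key]


def simulate(rounds=500):
    """Toy loop with an assumed reward in [0, 1]; not measured data."""
    ideal = {"wifi": 20, "cellular": 60}  # hypothetical best value per context
    bandit = ContextualConstant([20, 40, 60], epsilon=0.2)
    for i in range(rounds):
        context = "wifi" if i % 2 == 0 else "cellular"
        value = bandit.choose(context)
        reward = 1 - abs(value - ideal[context]) / 100
        bandit.update(context, value, reward)
    return bandit
```

Calling `simulate().best("cellular")` returns the value the learner currently prefers on that context. A fixed constant corresponds to ignoring `context` altogether, which is the 'one-size-fits-all' case the abstract argues against.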

Machine Learning at Microsoft with ML .NET

May 15, 2019

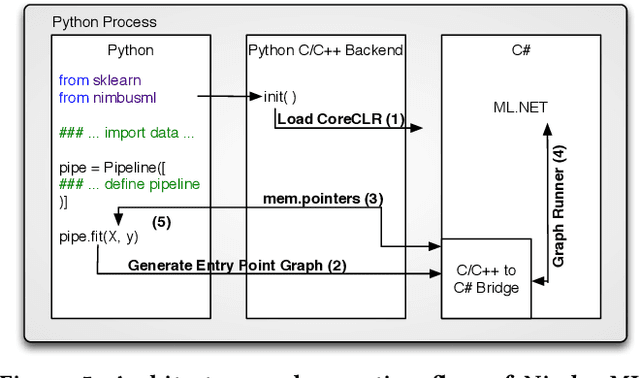

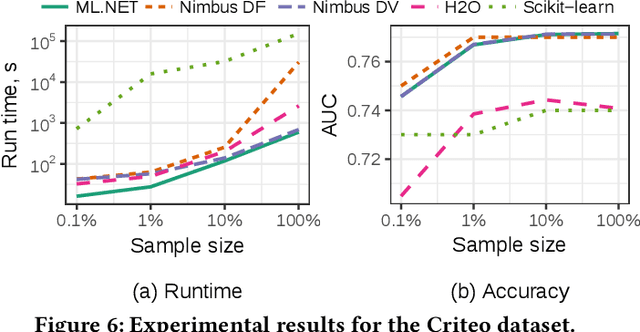

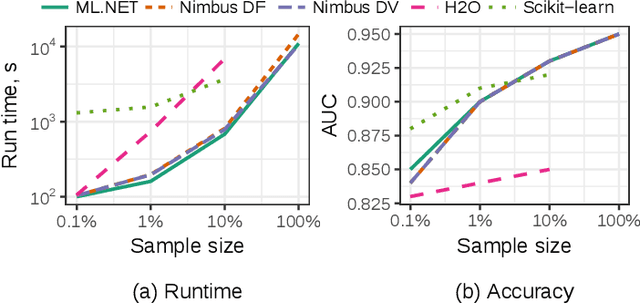

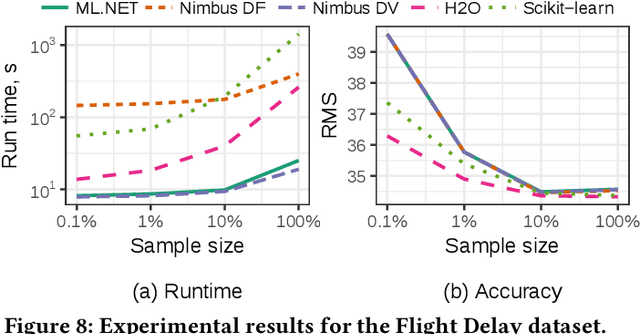

Abstract: Machine Learning is transitioning from an art and science into a technology available to every developer. In the near future, every application on every platform will incorporate trained models to encode data-based decisions that would be impossible for developers to author. This presents a significant engineering challenge, since currently data science and modeling are largely decoupled from standard software development processes. This separation makes incorporating machine learning capabilities inside applications unnecessarily costly and difficult, and furthermore discourages developers from embracing ML in the first place. In this paper we present ML .NET, a framework developed at Microsoft over the last decade in response to the challenge of making it easy to ship machine learning models in large software applications. We present its architecture and illuminate the application demands that shaped it. Specifically, we introduce DataView, the core data abstraction of ML .NET, which allows it to capture full predictive pipelines efficiently and consistently across training and inference lifecycles. We close the paper with a surprisingly favorable performance study of ML .NET compared to more recent entrants, and a discussion of some lessons learned.

Crypto-Nets: Neural Networks over Encrypted Data

Dec 24, 2014

Abstract: The problem we address is the following: how can a user employ a predictive model that is held by a third party without compromising private information? For example, a hospital may wish to use a cloud service to predict the readmission risk of a patient. However, due to regulations, the patient's medical files cannot be revealed. The goal is to make an inference using the model, without jeopardizing the accuracy of the prediction or the privacy of the data. To achieve high accuracy, we use neural networks, which have been shown to outperform other learning models for many tasks. To achieve the privacy requirements, we use homomorphic encryption in the following protocol: the data owner encrypts the data and sends the ciphertexts to the third party to obtain a prediction from a trained model. The model operates on these ciphertexts and sends back the encrypted prediction. In this protocol, not only does the data remain private, but even the predicted values are available only to the data owner. Using homomorphic encryption and modifications to the activation functions and training algorithms of neural networks, we show that this protocol is possible and may be feasible. This method paves the way to building secure cloud-based neural network prediction services without invading users' privacy.
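The message flow in this protocol (the owner encrypts, the third party computes on ciphertexts, the owner decrypts) can be sketched with a toy example. The abstract does not name an encryption scheme, so this sketch substitutes textbook Paillier, which is only additively homomorphic. It therefore covers a single linear scoring layer with non-negative integer weights and none of the activation-function changes the abstract mentions. The primes are tiny and the code is not secure; all function names are hypothetical.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Toy Paillier key pair from two small primes (assumption: demo only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; needs Python 3.8+
    return n, (lam, mu)   # public key n, private key (lam, mu)


def encrypt(n, m):
    """Encrypt integer 0 <= m < n under public key n, with generator g = n + 1."""
    n2 = n * n
    while True:
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    """Recover the plaintext using the private key."""
    lam, mu = priv
    n2 = n * n
    return ((pow(c, lam, n2) - 1) // n * mu) % n


def encrypted_linear_score(n, enc_x, weights, bias):
    """Third-party side: computes Enc(sum(w_i * x_i) + bias) without decrypting.

    Multiplying ciphertexts adds plaintexts; raising a ciphertext to the
    power w multiplies its plaintext by w.
    """
    n2 = n * n
    acc = encrypt(n, bias)
    for c, w in zip(enc_x, weights):
        acc = (acc * pow(c, w, n2)) % n2
    return acc


# Data owner: generate keys and encrypt the private features.
n, priv = keygen()
features = [3, 5, 2]
enc_features = [encrypt(n, v) for v in features]

# Third party: holds the model, sees only ciphertexts and the public key.
enc_score = encrypted_linear_score(n, enc_features, weights=[2, 1, 4], bias=7)

# Data owner: only the private key holder can read the prediction.
score = decrypt(n, priv, enc_score)  # 2*3 + 1*5 + 4*2 + 7 = 26
```

The third party never sees `features` or `score` in the clear, which matches the property the abstract describes: both the input and the prediction are available only to the data owner.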
