Tianyue Wu

Flying in Clutter on Monocular RGB by Learning in 3D Radiance Fields with Domain Adaptation

Dec 19, 2025

Abstract:Modern autonomous navigation systems predominantly rely on lidar and depth cameras. However, a fundamental question remains: Can flying robots navigate in clutter using solely monocular RGB images? Given the prohibitive costs of real-world data collection, learning policies in simulation offers a promising path. Yet, deploying such policies directly in the physical world is hindered by the significant sim-to-real perception gap. Thus, we propose a framework that couples the photorealism of 3D Gaussian Splatting (3DGS) environments with Adversarial Domain Adaptation. By training in high-fidelity simulation while explicitly minimizing feature discrepancy, our method ensures the policy relies on domain-invariant cues. Experimental results demonstrate that our policy achieves robust zero-shot transfer to the physical world, enabling safe and agile flight in unstructured environments with varying illumination.

Reactive Aerobatic Flight via Reinforcement Learning

May 30, 2025

Abstract:Quadrotors have demonstrated remarkable versatility, yet their full aerobatic potential remains largely untapped due to inherent underactuation and the complexity of aggressive maneuvers. Traditional approaches, separating trajectory optimization and tracking control, suffer from tracking inaccuracies, computational latency, and sensitivity to initial conditions, limiting their effectiveness in dynamic, high-agility scenarios. Inspired by recent breakthroughs in data-driven methods, we propose a reinforcement learning-based framework that directly maps drone states and aerobatic intentions to control commands, eliminating modular separation to enable quadrotors to perform end-to-end policy optimization for extreme aerobatic maneuvers. To ensure efficient and stable training, we introduce an automated curriculum learning strategy that dynamically adjusts aerobatic task difficulty. Enabled by domain randomization for robust zero-shot sim-to-real transfer, our approach is validated in demanding real-world experiments, including the first demonstration of a drone autonomously performing continuous inverted flight while reactively navigating a moving gate, showcasing unprecedented agility.

Automatic Generation of Aerobatic Flight in Complex Environments via Diffusion Models

Apr 21, 2025

Abstract:Performing striking aerobatic flight in complex environments demands manual designs of key maneuvers in advance, which is intricate and time-consuming as the horizon of the trajectory performed becomes long. This paper presents a novel framework that leverages diffusion models to automate and scale up aerobatic trajectory generation. Our key innovation is the decomposition of complex maneuvers into aerobatic primitives, which are short frame sequences that act as building blocks, featuring critical aerobatic behaviors for tractable trajectory synthesis. The model learns aerobatic primitives using historical trajectory observations as dynamic priors to ensure motion continuity, with additional conditional inputs (target waypoints and optional action constraints) integrated to enable user-editable trajectory generation. During model inference, classifier guidance is incorporated with batch sampling to achieve obstacle avoidance. Additionally, the generated outcomes are refined through post-processing with spatial-temporal trajectory optimization to ensure dynamical feasibility. Extensive simulations and real-world experiments have validated the key component designs of our method, demonstrating its feasibility for deploying on real drones to achieve long-horizon aerobatic flight.

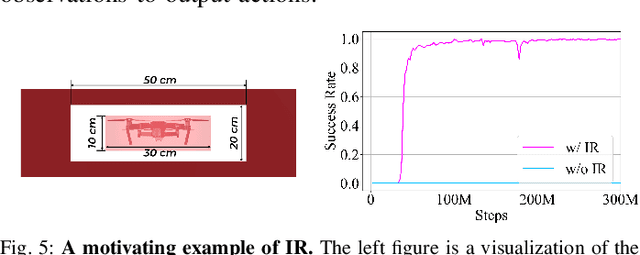

Whole-Body Control Through Narrow Gaps From Pixels To Action

Sep 02, 2024

Abstract:Flying through body-size narrow gaps in the environment is one of the most challenging moments for an underactuated multirotor. We explore a purely data-driven method to master this flight skill in simulation, where a neural network directly maps pixels and proprioception to continuous low-level control commands. This learned policy enables whole-body control through gaps with different geometries demanding sharp attitude changes (e.g., near-vertical roll angle). The policy is achieved by successive model-free reinforcement learning (RL) and online observation space distillation. The RL policy receives (virtual) point clouds of the gaps' edges for scalable simulation and is then distilled into the high-dimensional pixel space. However, this flight skill is fundamentally expensive to learn by exploring due to restricted feasible solution space. We propose to reset the agent as states on the trajectories by a model-based trajectory optimizer to alleviate this problem. The presented training pipeline is compared with baseline methods, and ablation studies are conducted to identify the key ingredients of our method. The immediate next step is to scale up the variation of gap sizes and geometries in anticipation of emergent policies and demonstrate the sim-to-real transformation.

Scalable Distance-based Multi-Agent Relative State Estimation via Block Multiconvex Optimization

May 31, 2024

Abstract:This paper explores the distance-based relative state estimation problem in large-scale systems, which is hard to solve effectively due to its high-dimensionality and non-convexity. In this paper, we alleviate this inherent hardness to simultaneously achieve scalability and robustness of inference on this problem. Our idea is launched from a universal geometric formulation, called *generalized graph realization*, for the distance-based relative state estimation problem. Based on this formulation, we introduce two collaborative optimization models, one of which is convex and thus globally solvable, and the other enables fast searching on non-convex landscapes to refine the solution offered by the convex one. Importantly, both models enjoy *multiconvex* and *decomposable* structures, allowing efficient and safe solutions using *block coordinate descent* that enjoys scalability and a distributed nature. The proposed algorithms collaborate to demonstrate superior or comparable solution precision to the current centralized convex relaxation-based methods, which are known for their high optimality. Distinctly, the proposed methods demonstrate scalability beyond the reach of previous convex relaxation-based methods. We also demonstrate that the combination of the two proposed algorithms achieves a more robust pipeline than deploying the local search method alone in a continuous-time scenario.

Learning Agility Adaptation for Flight in Clutter

Mar 07, 2024

Abstract:Animals learn to adapt agility of their movements to their capabilities and the environment they operate in. Mobile robots should also demonstrate this ability to combine agility and safety. The aim of this work is to endow flight vehicles with the ability of agility adaptation in prior unknown and partially observable cluttered environments. We propose a hierarchical learning and planning framework where we utilize both trial and error to comprehensively learn an agility policy with the vehicle's observation as the input, and well-established methods of model-based trajectory generation. Technically, we use online model-free reinforcement learning and a pre-training-fine-tuning reward scheme to obtain the deployable policy. The statistical results in simulation demonstrate the advantages of our method over the constant agility baselines and an alternative method in terms of flight efficiency and safety. In particular, the policy leads to intelligent behaviors, such as perception awareness, which distinguish it from other approaches. By deploying the policy to hardware, we verify that these advantages can be brought to the real world.

Distributed Optimization in Sensor Network for Scalable Multi-Robot Relative State Estimation

Mar 19, 2023

Abstract:This paper is dedicated to achieving scalable relative state estimation using inter-robot Euclidean distance measurements. We consider equipping robots with distance sensors and focus on the optimization problem underlying relative state estimation in this setup. We reveal the commonality between this problem and the coordinates realization problem of a sensor network. Based on this insight, we propose an effective unconstrained optimization model to infer the relative states among robots. To work on this model in a distributed manner, we propose an efficient and scalable optimization algorithm with the classical block coordinate descent method as its backbone. This algorithm exactly solves each block update subproblem with a closed-form solution while ensuring convergence. Our results pave the way for distance measurements-based relative state estimation in large-scale multi-robot systems.
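
As a rough illustration of block coordinate descent on distance measurements, the sketch below updates one robot position at a time while the others stay fixed. It is not the paper's model or algorithm: the abstract does not give the objective or the closed-form update, so this swaps in a standard per-node majorization step on the squared range residual (a SMACOF-style update), which also has a closed form and never increases the cost. All names (`stress`, `block_update`, `bcd`) and the four-robot test data are hypothetical.

```python
import math

def stress(points, dists):
    """Sum of squared range residuals over all measured pairs."""
    return sum((math.dist(points[i], points[j]) - d) ** 2
               for (i, j), d in dists.items())

def block_update(i, points, neighbors):
    """Closed-form minimizer of a quadratic upper bound on node i's cost.

    Assumption: this is a majorization step, not an exact block minimizer.
    """
    xi = points[i]
    acc = [0.0, 0.0]
    for j, d in neighbors[i]:
        xj = points[j]
        norm = math.dist(xi, xj)
        # Unit direction from j to the current estimate of i
        # (any unit vector is valid if the two estimates coincide).
        if norm > 1e-12:
            u = [(xi[k] - xj[k]) / norm for k in range(2)]
        else:
            u = [1.0, 0.0]
        for k in range(2):
            acc[k] += xj[k] + d * u[k]
    return [a / len(neighbors[i]) for a in acc]

def bcd(points, dists, sweeps=200):
    """Cycle through the nodes, updating one 2D position block at a time."""
    neighbors = {i: [] for i in range(len(points))}
    for (i, j), d in dists.items():
        neighbors[i].append((j, d))
        neighbors[j].append((i, d))
    points = [list(p) for p in points]
    for _ in range(sweeps):
        for i in range(len(points)):
            if neighbors[i]:
                points[i] = block_update(i, points, neighbors)
    return points

# Hypothetical test data: four robots on a unit square, all pairs measured.
truth = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dists = {(i, j): math.dist(truth[i], truth[j])
         for i in range(4) for j in range(i + 1, 4)}
initial = [(0.2, -0.1), (0.8, 0.3), (1.3, 0.9), (-0.2, 1.2)]
estimate = bcd(initial, dists)
print(stress(initial, dists), stress(estimate, dists))
```

Because each update reads only a node's own neighbors and their ranges, the same step could in principle run on each robot with local communication. The recovered positions are defined only up to a rigid transformation, since no anchors are fixed in this sketch.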

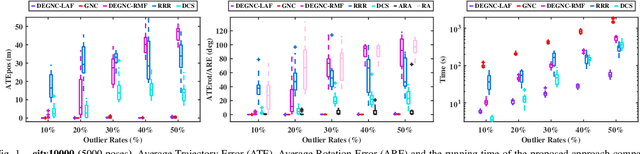

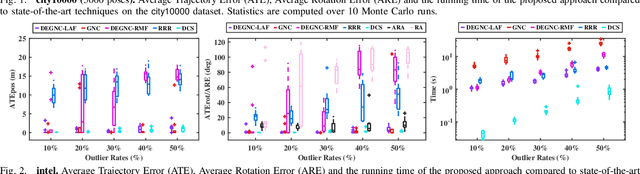

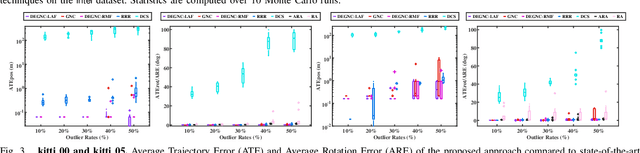

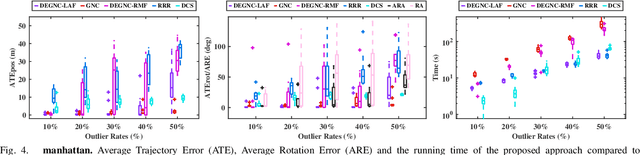

A Decoupled and Linear Framework for Global Outlier Rejection over Planar Pose Graph

Sep 18, 2022

Abstract:We propose a robust framework for the planar pose graph optimization contaminated by loop closure outliers. Our framework rejects outliers by first decoupling the robust PGO problem wrapped by a Truncated Least Squares kernel into two subproblems. Then, the framework introduces a linear angle representation to rewrite the first subproblem that is originally formulated with rotation matrices. The framework is configured with the Graduated Non-Convexity (GNC) algorithm to solve the two non-convex subproblems in succession without initial guesses. Thanks to the linearity properties of both the subproblems, our framework requires only linear solvers to optimally solve the optimization problems encountered in GNC. We extensively validate the proposed framework, named DEGNC-LAF (DEcoupled Graduated Non-Convexity with Linear Angle Formulation) in planar PGO benchmarks. It turns out that it runs significantly (sometimes up to over 30 times) faster than the standard and general-purpose GNC while resulting in high-quality estimates.
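
To make the GNC idea concrete, here is a minimal sketch of Graduated Non-Convexity with a Truncated Least Squares cost on a toy problem: estimating a single scalar from measurements contaminated by outliers. It is not DEGNC-LAF and involves no pose graph or angle representation. The weight formula and the schedule (initial `mu` from the largest residual, growth factor 1.4) follow the standard general-purpose GNC-TLS recipe as I understand it; the function name `gnc_tls_mean`, the threshold `c_bar`, and the data are hypothetical. The point it shares with the abstract is that each inner step is a weighted linear least-squares solve and no initial guess is needed.

```python
import math

def gnc_tls_mean(measurements, c_bar, mu_factor=1.4, max_iters=100):
    """Robust scalar estimate via GNC with a TLS surrogate (illustrative only)."""
    weights = [1.0] * len(measurements)
    # Non-robust start: plain least squares (the mean), no initial guess needed.
    x = sum(measurements) / len(measurements)
    r_max_sq = max((z - x) ** 2 for z in measurements)
    if r_max_sq <= c_bar ** 2:
        return x, weights  # every residual is already within the inlier bound
    mu = c_bar ** 2 / (2.0 * r_max_sq - c_bar ** 2)
    for _ in range(max_iters):
        lo = mu / (mu + 1.0) * c_bar ** 2
        hi = (mu + 1.0) / mu * c_bar ** 2
        # Weight update: closed form for the current surrogate cost.
        weights = []
        for z in measurements:
            r_sq = (z - x) ** 2
            if r_sq >= hi:
                weights.append(0.0)
            elif r_sq <= lo:
                weights.append(1.0)
            else:
                weights.append(c_bar / math.sqrt(r_sq) * math.sqrt(mu * (mu + 1.0)) - mu)
        total = sum(weights)
        if total == 0.0:
            break
        # Variable update: weighted linear least squares (a weighted mean here).
        x = sum(w * z for w, z in zip(weights, measurements)) / total
        # Increase mu so the surrogate approaches the true TLS cost.
        mu *= mu_factor
    return x, weights

# Hypothetical data: seven inliers near 2.0 followed by three gross outliers.
data = [1.9, 2.1, 2.0, 1.95, 2.05, 2.02, 1.98, 10.0, -7.0, 15.0]
x_hat, w_hat = gnc_tls_mean(data, c_bar=0.5)
print(x_hat, w_hat)
```

In a pose graph setting the weighted mean would be replaced by a weighted linear solve over all poses, and the weights would attach to loop closure edges rather than scalar measurements.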
