Guangyu Zhao

Optimizing Latent Goal by Learning from Trajectory Preference

Dec 03, 2024

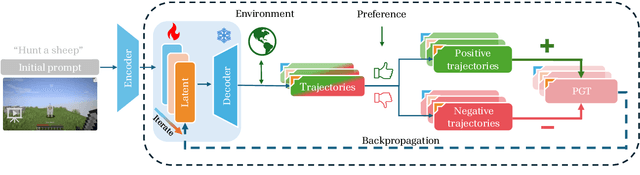

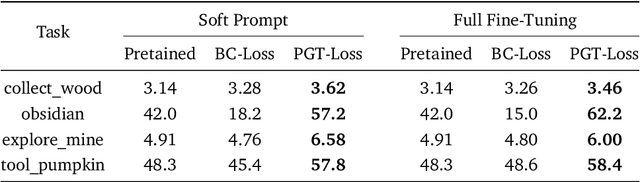

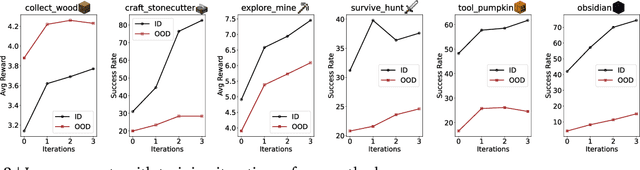

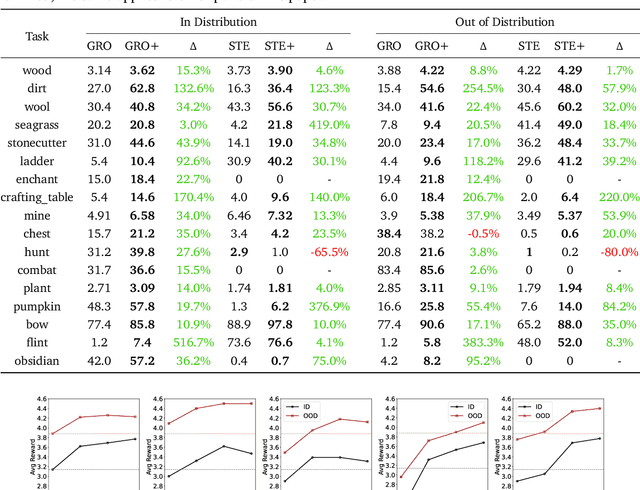

Abstract: A growing body of work focuses on instruction-following policies for open-world agents, aiming to better align the agent's behavior with human intentions. However, the performance of these policies is highly sensitive to the initial prompt, so extra effort is needed to select the best instructions. We propose a framework named Preference Goal Tuning (PGT). PGT lets an instruction-following policy interact with the environment to collect several trajectories, which are categorized into positive and negative samples based on preference. We then use preference learning to fine-tune the initial goal latent representation on the categorized trajectories while keeping the policy backbone frozen. Experimental results show that, with minimal data and training, PGT achieves average relative improvements of 72.0% and 81.6% across 17 tasks on two different foundation policies, respectively, and outperforms the best human-selected instructions. Moreover, PGT surpasses full fine-tuning in out-of-distribution (OOD) task-execution environments by 13.4%, indicating that our approach retains strong generalization capabilities. Since our approach stores a single latent representation for each task independently, it can be viewed as an efficient method for continual learning, without the risk of catastrophic forgetting or task interference. In short, PGT improves agent performance across nearly all tasks in the Minecraft Skillforge benchmark and is robust to changes in the execution environment.
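The idea of tuning only the goal latent while the policy stays frozen can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not taken from the paper: the policy is a small linear-softmax model with fixed weights `W_s` and `W_g`, the objective is a simple Bradley-Terry-style logistic loss on the log-likelihood gap between a preferred and a rejected trajectory, and the names `tune_goal`, `preference_loss`, and `beta` are hypothetical. The actual PGT objective and policy architecture may differ.

```python
import math

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def action_probs(W_s, W_g, state, goal):
    # Toy frozen policy (assumption): logits are linear in state and goal latent.
    logits = [
        sum(w * s for w, s in zip(W_s[a], state))
        + sum(w * g for w, g in zip(W_g[a], goal))
        for a in range(len(W_g))
    ]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def traj_logp_and_grad(W_s, W_g, traj, goal):
    # Log-likelihood of a trajectory [(state, action), ...] under the policy,
    # and its gradient with respect to the goal latent only.
    logp, grad = 0.0, [0.0] * len(goal)
    for state, action in traj:
        probs = action_probs(W_s, W_g, state, goal)
        logp += math.log(probs[action])
        for a, p in enumerate(probs):
            coeff = (1.0 if a == action else 0.0) - p
            for d in range(len(goal)):
                grad[d] += coeff * W_g[a][d]
    return logp, grad

def preference_loss(W_s, W_g, pairs, goal, beta=1.0):
    # Mean of -log sigmoid(beta * (logp(positive) - logp(negative))).
    total = 0.0
    for pos, neg in pairs:
        lp, _ = traj_logp_and_grad(W_s, W_g, pos, goal)
        ln, _ = traj_logp_and_grad(W_s, W_g, neg, goal)
        total += softplus(-beta * (lp - ln))
    return total / len(pairs)

def tune_goal(W_s, W_g, pairs, goal, beta=1.0, lr=0.1, steps=200):
    # Gradient descent on the goal latent; W_s and W_g are never modified.
    goal = list(goal)
    for _ in range(steps):
        step = [0.0] * len(goal)
        for pos, neg in pairs:
            lp, gp = traj_logp_and_grad(W_s, W_g, pos, goal)
            ln, gn = traj_logp_and_grad(W_s, W_g, neg, goal)
            margin = beta * (lp - ln)
            weight = math.exp(-softplus(margin))  # equals sigmoid(-margin)
            for d in range(len(goal)):
                step[d] += -weight * beta * (gp[d] - gn[d])
        goal = [g - lr * s / len(pairs) for g, s in zip(goal, step)]
    return goal

# Toy data (assumption): the preferred trajectory takes action 0, the rejected one action 1.
W_s = [[0.5, -0.2], [0.1, 0.3]]
W_g = [[1.0, 0.0], [0.0, 1.0]]
states = [[1.0, 0.0], [0.0, 1.0]]
pairs = [([(s, 0) for s in states], [(s, 1) for s in states])]
tuned = tune_goal(W_s, W_g, pairs, [0.0, 0.0])
print(preference_loss(W_s, W_g, pairs, [0.0, 0.0]), preference_loss(W_s, W_g, pairs, tuned))
```

Because only the small goal vector is updated, one tuned latent can be stored per task, which is the property the abstract connects to continual learning without task interference.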

Whole-Body Control Through Narrow Gaps From Pixels To Action

Sep 02, 2024

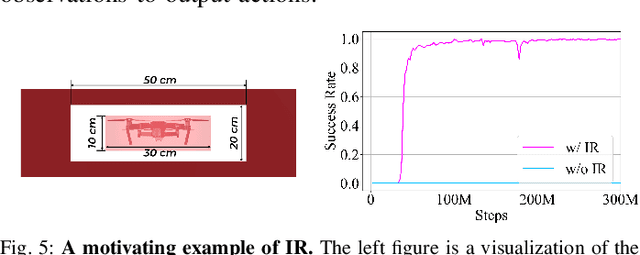

Abstract: Flying through body-size narrow gaps is one of the most challenging maneuvers for an underactuated multirotor. We explore a purely data-driven method to master this flight skill in simulation, where a neural network directly maps pixels and proprioception to continuous low-level control commands. The learned policy enables whole-body control through gaps with different geometries that demand sharp attitude changes (e.g., near-vertical roll angles). The policy is obtained by model-free reinforcement learning (RL) followed by online observation-space distillation. The RL policy receives (virtual) point clouds of the gaps' edges for scalable simulation and is then distilled into the high-dimensional pixel space. However, this flight skill is fundamentally expensive to learn through exploration because the feasible solution space is so restricted. To alleviate this problem, we propose resetting the agent to states along trajectories generated by a model-based trajectory optimizer. The presented training pipeline is compared with baseline methods, and ablation studies are conducted to identify the key ingredients of our method. The immediate next step is to scale up the variation of gap sizes and geometries in anticipation of emergent policies and to demonstrate sim-to-real transfer.
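The reset strategy mentioned in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `sample_reset_state`, the mixing probability `p_reference`, and the uniform sampling over trajectories and states are all hypothetical choices, and the reference trajectories are assumed to be precomputed lists of states from some trajectory optimizer.

```python
import random

def sample_reset_state(reference_trajectories, default_state, rng, p_reference=0.8):
    """Pick the state in which an RL episode starts.

    With probability p_reference, return a state drawn uniformly from a
    randomly chosen reference trajectory; otherwise return default_state.
    """
    if reference_trajectories and rng.random() < p_reference:
        trajectory = rng.choice(reference_trajectories)
        return rng.choice(trajectory)
    return default_state

# Hypothetical usage: states are (x, y, z) positions along two reference paths.
rng = random.Random(0)
references = [
    [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.0, 0.0, 1.0)],
    [(0.0, 0.2, 1.0), (0.5, 0.1, 1.0), (1.0, 0.0, 1.0)],
]
start = sample_reset_state(references, (0.0, 0.0, 0.0), rng)
```

Starting some episodes partway along a feasible trajectory lets the agent experience states near the gap that random exploration from the default start would rarely reach.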

Learning Agility Adaptation for Flight in Clutter

Mar 07, 2024

Abstract: Animals learn to adapt the agility of their movements to their capabilities and to the environment they operate in. Mobile robots should likewise be able to balance agility and safety. The aim of this work is to endow flight vehicles with the ability to adapt their agility in previously unknown and partially observable cluttered environments. We propose a hierarchical learning and planning framework that combines trial-and-error learning of an agility policy, which takes the vehicle's observations as input, with well-established methods of model-based trajectory generation. Technically, we use online model-free reinforcement learning and a pre-training-fine-tuning reward scheme to obtain the deployable policy. Statistical results in simulation demonstrate the advantages of our method over constant-agility baselines and an alternative method in terms of flight efficiency and safety. In particular, the policy leads to intelligent behaviors, such as perception awareness, which distinguish it from other approaches. By deploying the policy on hardware, we verify that these advantages carry over to the real world.
