Thomas Boquet

DECoVaC: Design of Experiments with Controlled Variability Components

Sep 21, 2019

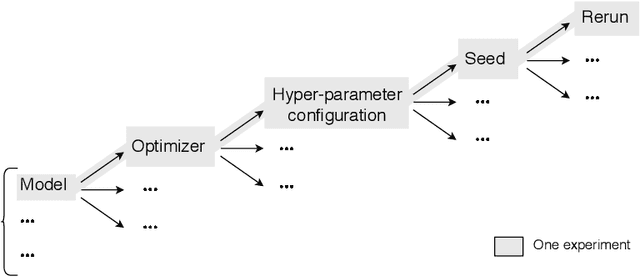

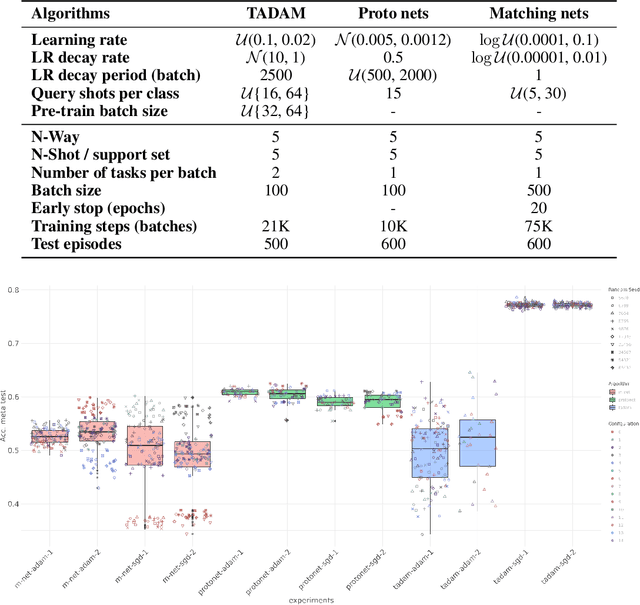

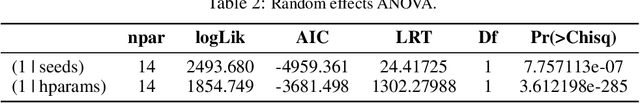

Abstract: Reproducible research in machine learning has seen a salutary abundance of progress lately: workflows, transparency, and statistical analysis of validation and test performance. We build on these efforts and take them further. We offer a principled experimental design methodology, based on linear mixed models, to study and separate the effects of multiple factors of variation in machine learning experiments. This approach makes it possible to account for the effects of architecture, optimizer, hyper-parameters, intentional randomization, as well as unintended lack of determinism across reruns. We illustrate the methodology by analyzing Matching Networks, Prototypical Networks and TADAM on the miniImagenet dataset.
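To make the idea of separating variability components concrete, here is a minimal sketch and not the paper's analysis. It assumes the simplest special case of a linear mixed model: a balanced one-way random-effects model, y_ij = mu + a_i + e_ij, estimated with the classical method-of-moments (ANOVA) estimator. The function name `variance_components` and the accuracy values are hypothetical, chosen only for illustration.

```python
from statistics import mean


def variance_components(groups):
    """Method-of-moments estimates for a balanced one-way random-effects model.

    `groups` is a list of equally sized lists, e.g. one list of rerun
    accuracies per configuration. Returns (between-group variance,
    within-group variance).
    """
    k = len(groups)
    n = len(groups[0]) if k else 0
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need a balanced design: >= 2 groups, >= 2 runs each")

    group_means = [mean(g) for g in groups]
    grand_mean = mean(group_means)  # valid because the design is balanced

    # Mean square between groups and mean square within groups.
    ms_between = n * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)
    ms_within = sum(
        (y - m) ** 2 for g, m in zip(groups, group_means) for y in g
    ) / (k * (n - 1))

    # E[ms_between] = var_within + n * var_between, so solve for var_between
    # and clip at zero, since the moment estimate can come out negative.
    var_between = max(0.0, (ms_between - ms_within) / n)
    return var_between, ms_within


# Hypothetical accuracies: three configurations, two reruns each.
runs = [[0.70, 0.72], [0.80, 0.82], [0.60, 0.62]]
var_config, var_rerun = variance_components(runs)
print(f"between-configuration variance: {var_config:.4f}")
print(f"rerun (residual) variance:      {var_rerun:.4f}")
```

A full mixed-model analysis would typically add fixed effects and several crossed or nested random factors and fit them by restricted maximum likelihood. The sketch only shows how a single random factor is separated from rerun noise.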

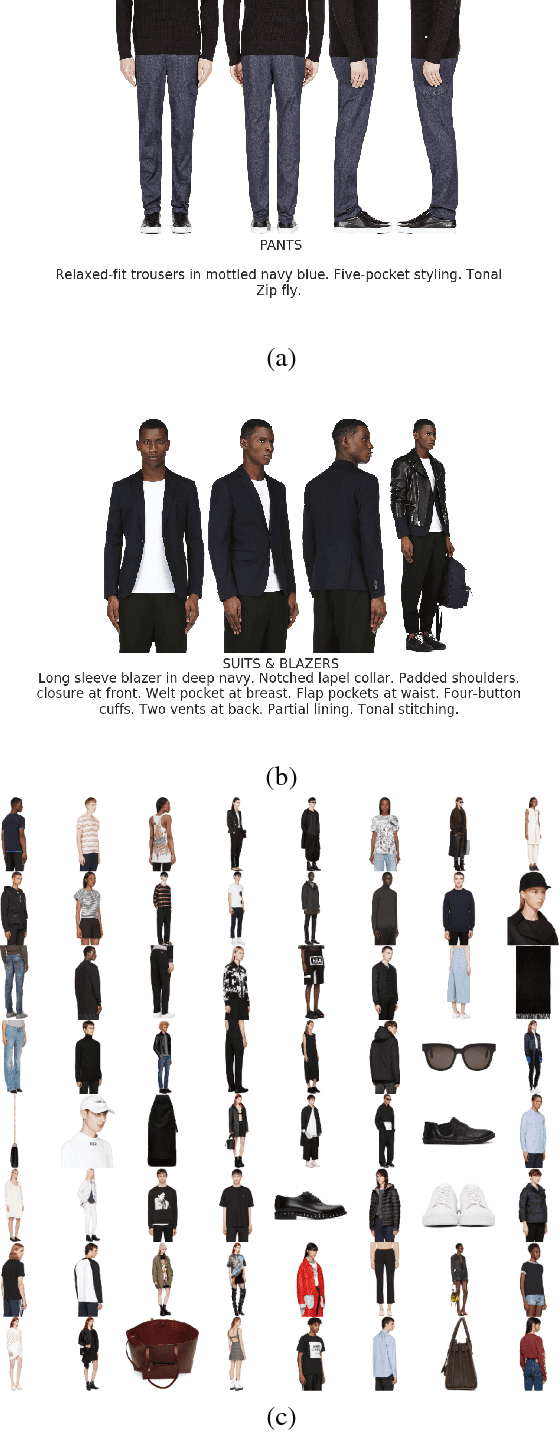

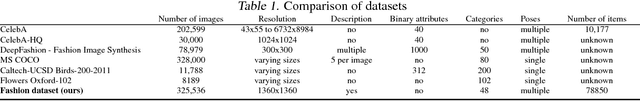

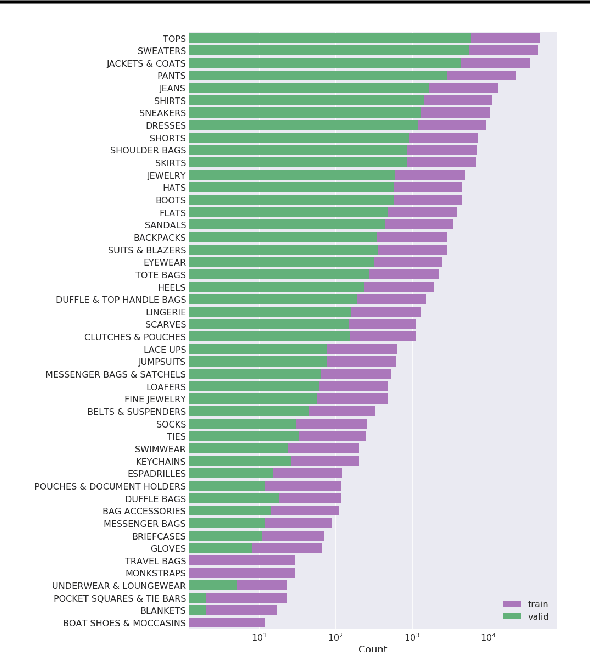

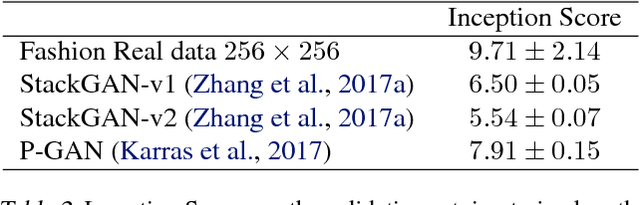

Fashion-Gen: The Generative Fashion Dataset and Challenge

Jul 30, 2018

Abstract: We introduce a new dataset of 293,008 high-definition (1360 x 1360 pixels) fashion images paired with item descriptions provided by professional stylists. Each item is photographed from a variety of angles. We provide baseline results on 1) high-resolution image generation, and 2) image generation conditioned on the given text descriptions. We invite the community to improve upon these baselines. In this paper, we also outline the details of a challenge that we are launching based upon this dataset.

Uncertainty in Multitask Transfer Learning

Jul 06, 2018

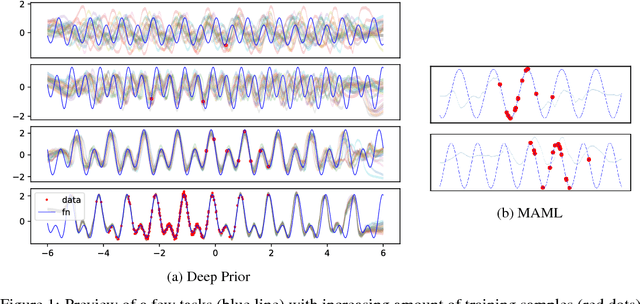

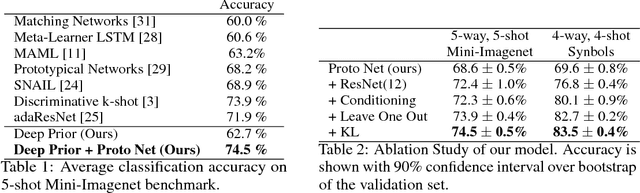

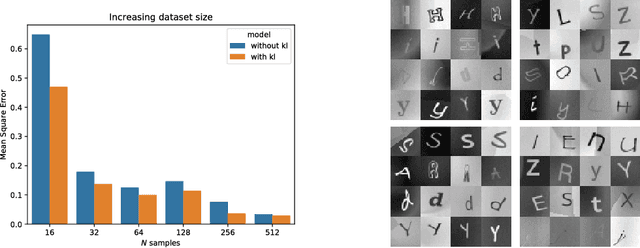

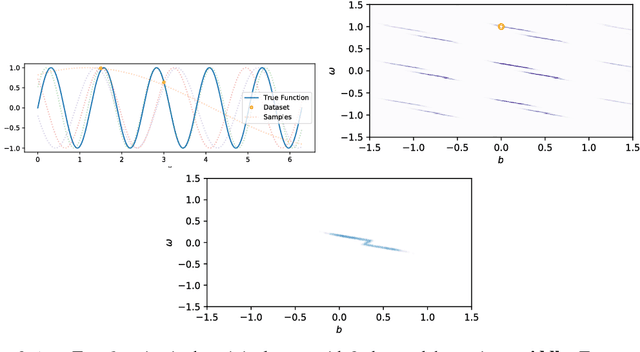

Abstract: Using variational Bayes neural networks, we develop an algorithm capable of accumulating knowledge into a prior from multiple different tasks. The result is a rich and meaningful prior capable of few-shot learning on new tasks. The posterior can go beyond the mean field approximation and yields good uncertainty on the performed experiments. Analysis on toy tasks shows that it can learn from significantly different tasks while finding similarities among them. Experiments on Mini-Imagenet yield a new state of the art with 74.5% accuracy on 5-shot learning. Finally, we provide experiments showing that other existing methods can fail to perform well in different benchmarks.

Deep Prior

Dec 16, 2017

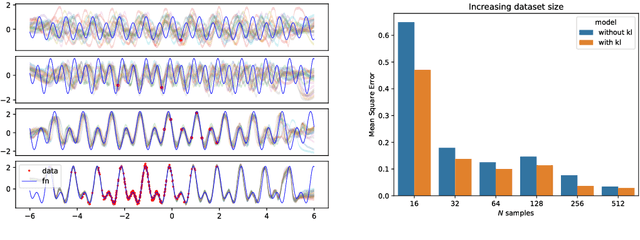

Abstract: The recent literature on deep learning offers new tools to learn a rich probability distribution over high-dimensional data such as images or sounds. In this work we investigate the possibility of learning the prior distribution over neural network parameters using such tools. Our resulting variational Bayes algorithm generalizes well to new tasks, even when very few training examples are provided. Furthermore, this learned prior allows the model to extrapolate correctly far from a given task's training data on a meta-dataset of periodic signals.
