Taras Holotyak

Radio-astronomical Image Reconstruction with Conditional Denoising Diffusion Model

Feb 20, 2024

Abstract: Reconstructing sky models from dirty radio images for accurate source localization and flux estimation is crucial for studying galaxy evolution at high redshift, especially in deep fields using instruments like the Atacama Large Millimetre Array (ALMA). With new projects like the Square Kilometre Array (SKA), there is a growing need for better source extraction methods. Current techniques, such as CLEAN and PyBDSF, often fail to detect faint sources, highlighting the need for more accurate methods. This study proposes using stochastic neural networks to rebuild sky models directly from dirty images. This method can pinpoint radio sources and measure their fluxes with related uncertainties, marking a potential improvement in radio source characterization. We tested this approach on 10164 images simulated with the CASA tool simalma, based on ALMA's Cycle 5.3 antenna setup. We applied conditional Denoising Diffusion Probabilistic Models (DDPMs) for sky model reconstruction, then used Photutils to determine source coordinates and fluxes, assessing the model's performance across different water vapor levels. Our method excelled in source localization, achieving more than 90% completeness at a signal-to-noise ratio (SNR) as low as 2. It also surpassed PyBDSF in flux estimation, accurately identifying fluxes for 96% of sources in the test set, a significant improvement over CLEAN+PyBDSF's 57%. Conditional DDPMs are a powerful tool for image-to-image translation, yielding accurate and robust characterization of radio sources and outperforming existing methodologies. While this study underscores their significant potential for applications in radio astronomy, we also acknowledge certain limitations that accompany their usage, suggesting directions for further refinement and research.

Mathematical model of printing-imaging channel for blind detection of fake copy detection patterns

Dec 14, 2022

Abstract: Nowadays, copy detection patterns (CDP) appear as a very promising anti-counterfeiting technology for physical object protection. However, the advent of deep learning as a powerful attacking tool has shown that general authentication schemes are unable to compete and fail against such attacks. In this paper, we propose a new mathematical model of the printing-imaging channel for the authentication of CDP, together with a new detection scheme based on it. The results show that even deep-learning-created copy fakes unknown at the training stage can be reliably detected with the proposed approach, using only digital references of CDP during authentication.

Digital twins of physical printing-imaging channel

Oct 28, 2022

Abstract: In this paper, we address the problem of modeling a printing-imaging channel with a machine learning approach, a.k.a. a digital twin, for anti-counterfeiting applications based on copy detection patterns (CDP). The digital twin is formulated within an information-theoretic framework called Turbo, which uses variational approximations of mutual information developed for both the encoder and the decoder in a two-directional information passage. The proposed model generalizes several state-of-the-art architectures such as the adversarial autoencoder (AAE), CycleGAN and the adversarial latent space autoencoder (ALAE). The model can be applied to any type of printing and imaging, and it only requires training data consisting of digital templates or artworks that are sent to a printing device and data acquired by an imaging device. Moreover, these data can be paired, unpaired or hybrid paired-unpaired, which makes the proposed architecture very flexible and scalable to many practical setups. We demonstrate the impact of various architectural factors, metrics and discriminators on the overall system performance in the task of generating/predicting printed CDP from their digital counterparts and vice versa. We also compare the proposed system with several state-of-the-art methods used for image-to-image translation applications.

Printing variability of copy detection patterns

Oct 11, 2022

Abstract: Copy detection patterns (CDP) are a novel solution for protecting products against counterfeiting that has gained popularity in recent years. CDP attract the anti-counterfeiting industry due to their numerous benefits in comparison to alternative protection techniques. Despite this attractiveness, there is an essential gap in the fundamental analysis of CDP authentication performance in large-scale industrial applications. It concerns the variability of CDP parameters under different production conditions, including the type of printer, substrate, printing resolution, etc. Since digital offset printing offers great flexibility in terms of product personalization in comparison with traditional offset printing, it is of particular interest to address the above concerns for digital offset printers, which are used by several companies for the CDP protection of physical objects. In this paper, we thoroughly investigate certain factors impacting CDP. The experimental results obtained during our study reveal some previously unknown findings and raise new and even more challenging questions. The results show that it is of great importance to carefully choose the substrate and printer for CDP production. This paper presents a new dataset produced by two industrial HP Indigo printers. The similarity between printed CDP and the digital templates from which they have been produced is chosen as a simple measure in our study. We found several particularities that might be of interest for large-scale industrial applications.

Anomaly localization for copy detection patterns through print estimations

Sep 29, 2022

Abstract: Copy detection patterns (CDP) are a recent technology for protecting products from counterfeiting. However, in contrast to traditional copy fakes, deep learning-based fakes have been shown to be hardly distinguishable from originals by traditional authentication systems. Systems based on classical supervised learning and digital templates assume knowledge of fake CDP at training time and cannot generalize to unseen types of fakes. Authentication based on printed copies of originals is an alternative that yields better results even for unseen fakes and simple authentication metrics, but comes at the impractical cost of acquiring and storing printed copies. In this work, to overcome these shortcomings, we design a machine learning (ML) based authentication system that only requires digital templates and printed original CDP for training, whereas authentication is based solely on digital templates, which are used to estimate original printed codes. The obtained results show that the proposed system can efficiently authenticate original CDP and detect fake CDP by accurately locating the anomalies in the fakes. The empirical evaluation of the authentication system under investigation is performed on original and ML-based fake CDP printed on two industrial printers.

Authentication of Copy Detection Patterns under Machine Learning Attacks: A Supervised Approach

Jun 25, 2022

Abstract: Copy detection patterns (CDP) are an attractive technology that allows manufacturers to defend their products against counterfeiting. The main assumption behind the protection mechanism of CDP is that these codes, printed with the smallest symbol size (1x1) on an industrial printer, cannot be copied or cloned with sufficient accuracy due to the data processing inequality. However, previous works have shown that Machine Learning (ML) based attacks can produce high-quality fakes, resulting in decreased accuracy of traditional feature-based authentication systems. While Deep Learning (DL) can be used as a part of the authentication system, to the best of our knowledge, none of the previous works has studied the performance of a DL-based authentication system against ML-based attacks on CDP with 1x1 symbol size. In this work, we study such performance assuming a supervised learning (SL) setting.

Mobile authentication of copy detection patterns

Mar 04, 2022

Abstract: In recent years, copy detection patterns (CDP) have attracted a lot of attention as a link between the physical and digital worlds, which is of great interest for internet of things and brand protection applications. However, the security of CDP in terms of their reproducibility by unauthorized parties, or clonability, remains largely unexplored. In this respect, this paper addresses the problem of anti-counterfeiting of physical objects and aims at investigating the authentication aspects and the resistance to illegal copying of modern CDP from a machine learning perspective. Special attention is paid to reliable authentication under real-life verification conditions, when the codes are printed on an industrial printer and enrolled via modern mobile phones under regular light conditions. The theoretical and empirical investigation of authentication aspects of CDP is performed with respect to four types of copy fakes from the point of view of (i) multi-class supervised classification as a baseline approach and (ii) one-class classification as a real-life application case. The obtained results show that modern machine learning approaches and the technical capacities of modern mobile phones make it possible to reliably authenticate CDP on end-user mobile phones under the considered classes of fakes.

Machine learning attack on copy detection patterns: are 1x1 patterns cloneable?

Oct 06, 2021

Abstract: Nowadays, the modern economy critically requires reliable yet cheap protection solutions against product counterfeiting for the mass market. Copy detection patterns (CDP) are considered such a solution in several applications. It is assumed that, being printed at the maximum achievable resolution of an industrial printer with the smallest symbol size of 1x1 elements, the CDP cannot be copied with sufficient accuracy and thus are unclonable. In this paper, we challenge this hypothesis and consider a copy attack against the CDP based on machine learning. The experimental results, based on samples produced on two industrial printers, demonstrate that simple detection metrics used in CDP authentication cannot reliably distinguish the original CDP from their fakes. Thus, in light of this attack, the paper calls for a careful reconsideration of CDP cloneability and a search for new authentication techniques and CDP optimization.

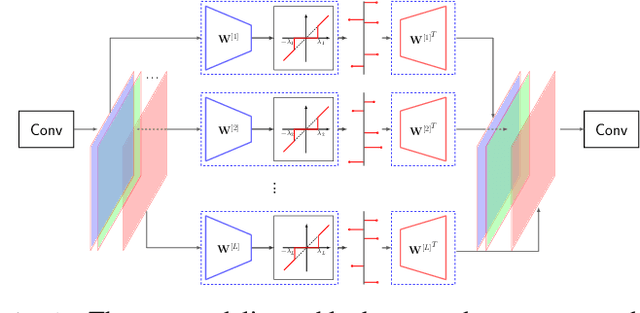

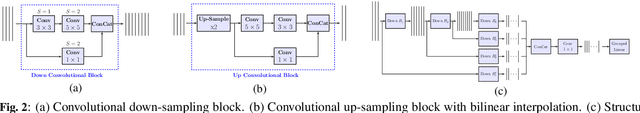

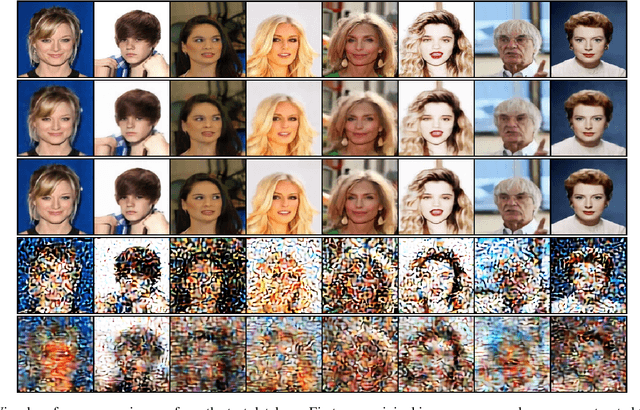

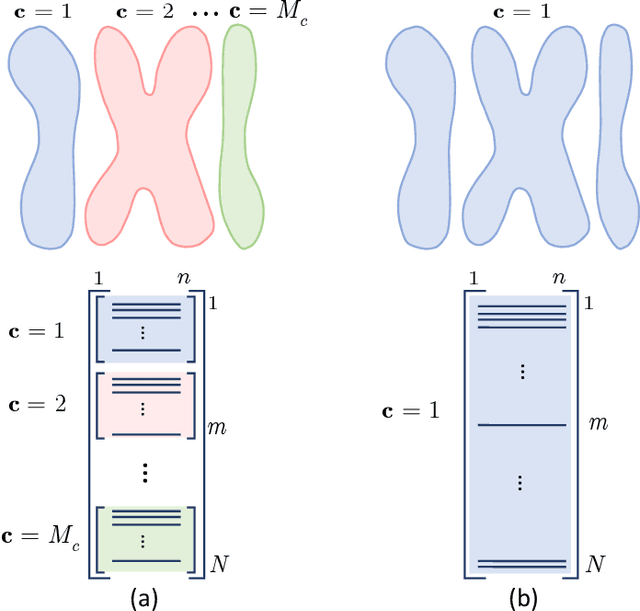

Privacy-Preserving Image Sharing via Sparsifying Layers on Convolutional Groups

Feb 04, 2020

Abstract: We propose a practical framework to address the problem of privacy-aware image sharing in large-scale setups. We argue that, while compactness is always desired at scale, this need is more severe when additionally trying to protect the privacy-sensitive content. We therefore encode images such that, on the one hand, representations are stored in the public domain without paying the huge cost of privacy protection, but are ambiguated and hence leak no discernible content from the images, unless a combinatorially expensive guessing mechanism is available to the attacker. On the other hand, authorized users are provided with very compact keys that can easily be kept secure. These keys can be used to disambiguate and faithfully reconstruct the corresponding access-granted images. We achieve this with a convolutional autoencoder of our design, where feature maps are passed independently through sparsifying transformations, providing multiple compact codes, each responsible for reconstructing different attributes of the image. The framework is tested on a large-scale database of images, with a public implementation available.

Information bottleneck through variational glasses

Dec 05, 2019

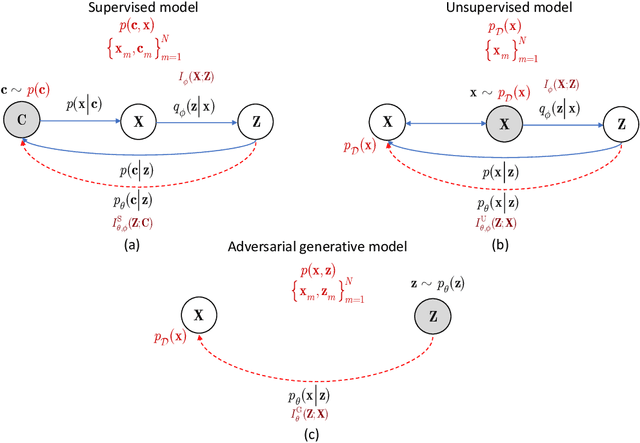

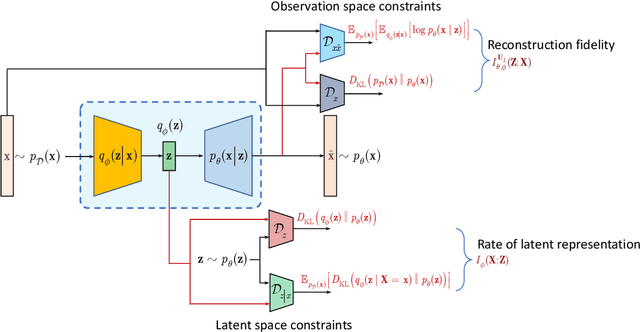

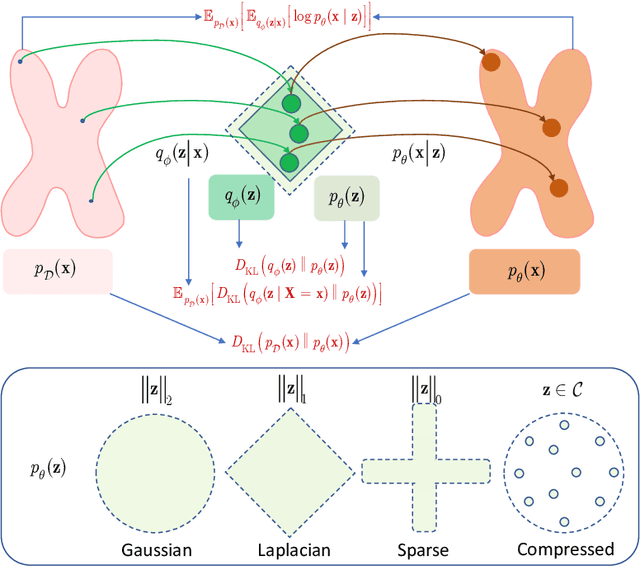

Abstract: The information bottleneck (IB) principle [1] has become an important element in the information-theoretic analysis of deep models. Many state-of-the-art generative models of both the Variational Autoencoder (VAE) [2; 3] and Generative Adversarial Network (GAN) [4] families use various bounds on mutual information terms to introduce certain regularization constraints [5; 6; 7; 8; 9; 10]. Accordingly, the main difference between these models lies in the added regularization constraints and targeted objectives. In this work, we consider the IB framework for three classes of models: supervised, unsupervised and adversarial generative models. We apply a variational decomposition that leads to a common structure and makes it easy to establish connections between these models and analyze the underlying assumptions. Based on these results, we focus our analysis on the unsupervised setup and reconsider the VAE family. In particular, we present a new interpretation of the VAE family based on the IB framework using a direct decomposition of mutual information terms and show some interesting connections to existing methods such as VAE [2; 3], beta-VAE [11], AAE [12], InfoVAE [5] and VAE/GAN [13]. Instead of adding regularization constraints to an evidence lower bound (ELBO) [2; 3], which itself is a lower bound, we show that many known methods can be considered as a product of the variational decomposition of mutual information terms in the IB framework. The proposed decomposition might also contribute to the interpretability of generative models of both the VAE and GAN families and offer new insights into generative compression [14; 15; 16; 17]. It can also be of interest for the analysis of novelty detection based on one-class classifiers [18] with IB-based discriminators.
