Taqwa I. Alhadidi

Enhancing Pavement Crack Classification with Bidirectional Cascaded Neural Networks

Mar 27, 2025

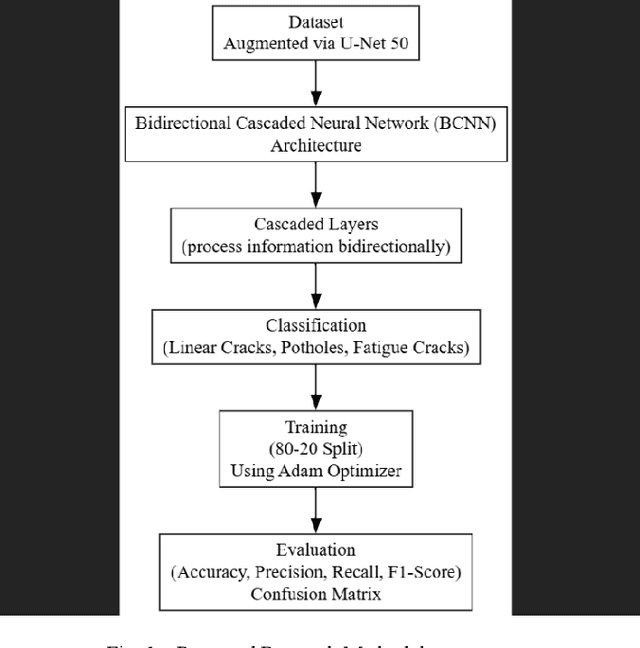

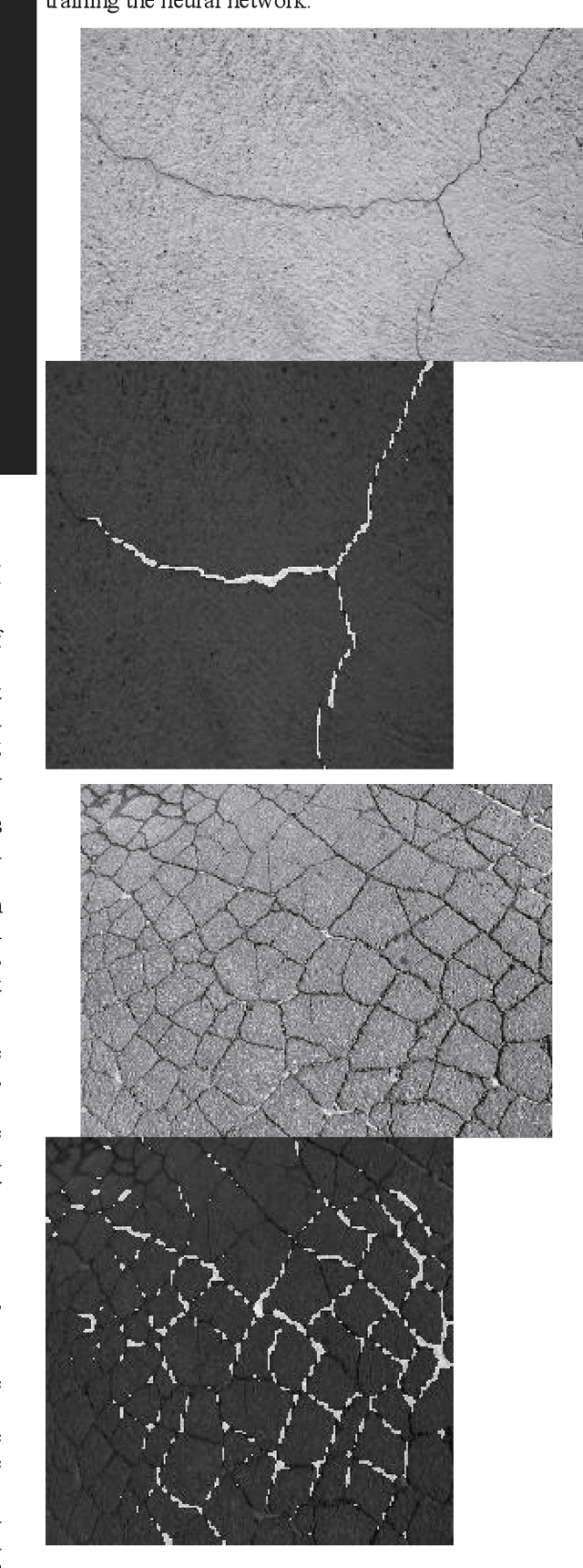

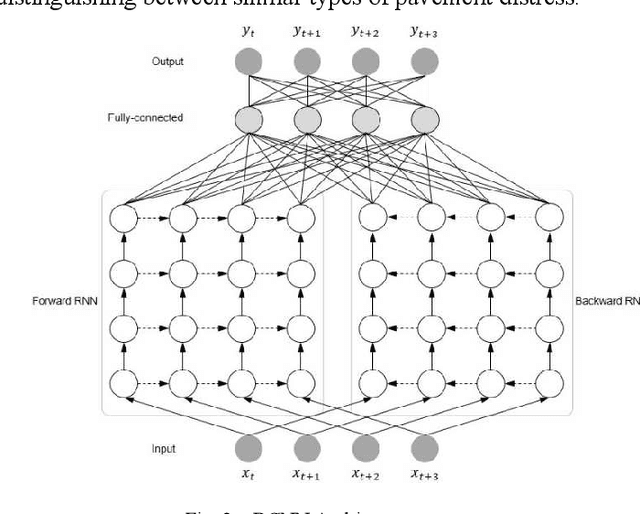

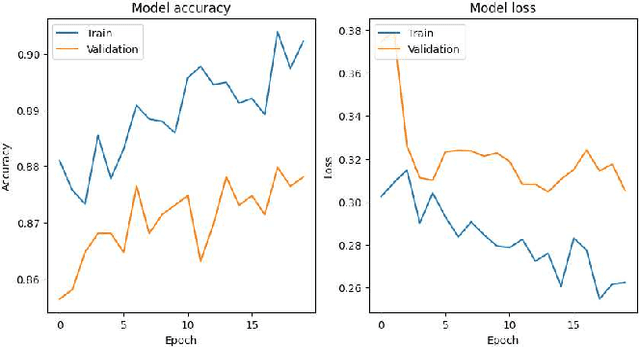

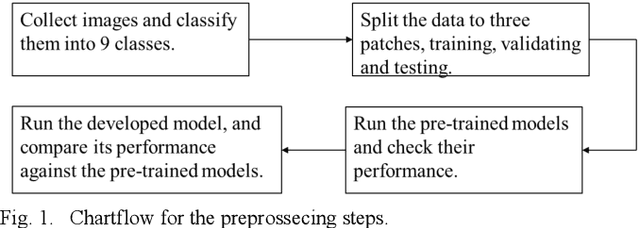

Abstract:Pavement distress, such as cracks and potholes, is a significant issue affecting road safety and maintenance. In this study, we present the implementation and evaluation of Bidirectional Cascaded Neural Networks (BCNNs) for the classification of pavement crack images following image augmentation. We classified pavement cracks into three main categories: linear cracks, potholes, and fatigue cracks on an enhanced dataset utilizing U-Net 50 for image augmentation. The augmented dataset comprised 599 images. Our proposed BCNN model was designed to leverage both forward and backward information flows, with detection accuracy enhanced by its cascaded structure wherein each layer progressively refines the output of the preceding one. Our model achieved an overall accuracy of 87%, with precision, recall, and F1-score measures indicating high effectiveness across the categories. For fatigue cracks, the model recorded a precision of 0.87, recall of 0.83, and F1-score of 0.85 on 205 images. Linear cracks were detected with a precision of 0.81, recall of 0.89, and F1-score of 0.85 on 205 images, and potholes with a precision of 0.96, recall of 0.90, and F1-score of 0.93 on 189 images. The macro and weighted average of precision, recall, and F1-score were identical at 0.88, confirming the BCNN's excellent performance in classifying complex pavement crack patterns. This research demonstrates the potential of BCNNs to significantly enhance the accuracy and reliability of pavement distress classification, resulting in more effective and efficient pavement maintenance and management systems.
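The averages quoted in the abstract can be sanity-checked from the per-class figures it reports. The sketch below is only an illustration: it assumes the usual definitions of macro (unweighted) and weighted (by image count) averages, and the variable names are not from the paper. Because the per-class inputs are already rounded to two decimals, the recomputed values land within about 0.01 of the reported 0.88 rather than matching it exactly.

```python
# Per-class figures as reported in the abstract: (precision, recall, F1, images).
reported = {
    "fatigue": (0.87, 0.83, 0.85, 205),
    "linear": (0.81, 0.89, 0.85, 205),
    "pothole": (0.96, 0.90, 0.93, 189),
}


def macro_average(rows, index):
    """Unweighted mean of one metric across classes."""
    return sum(row[index] for row in rows) / len(rows)


def weighted_average(rows, index):
    """Mean of one metric weighted by each class's image count."""
    total = sum(row[3] for row in rows)
    return sum(row[index] * row[3] for row in rows) / total


rows = list(reported.values())
total_images = sum(row[3] for row in rows)  # should equal the stated dataset size
macro = [macro_average(rows, i) for i in range(3)]
weighted = [weighted_average(rows, i) for i in range(3)]

for name, m, w in zip(("precision", "recall", "f1"), macro, weighted):
    print(f"{name}: macro={m:.3f} weighted={w:.3f}")
```

The class counts sum to the 599 images stated in the abstract, which is a quick consistency check on the reported supports.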

Vision-Language Models for Autonomous Driving: CLIP-Based Dynamic Scene Understanding

Jan 09, 2025

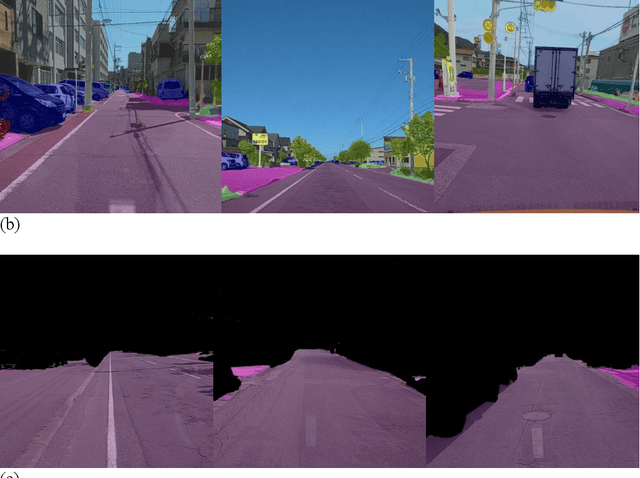

Abstract:Scene understanding is essential for enhancing driver safety, generating human-centric explanations for Automated Vehicle (AV) decisions, and leveraging Artificial Intelligence (AI) for retrospective driving video analysis. This study developed a dynamic scene retrieval system using Contrastive Language-Image Pretraining (CLIP) models, which can be optimized for real-time deployment on edge devices. The proposed system outperforms state-of-the-art in-context learning methods, including the zero-shot capabilities of GPT-4o, particularly in complex scenarios. By conducting frame-level analysis on the Honda Scenes Dataset, which contains a collection of about 80 hours of annotated driving videos capturing diverse real-world road and weather conditions, our study highlights the robustness of CLIP models in learning visual concepts from natural language supervision. Results also showed that fine-tuning the CLIP models, such as ViT-L/14 and ViT-B/32, significantly improved scene classification, achieving a top F1 score of 91.1%. These results demonstrate the ability of the system to deliver rapid and precise scene recognition, which can be used to meet the critical requirements of Advanced Driver Assistance Systems (ADAS). This study shows the potential of CLIP models to provide scalable and efficient frameworks for dynamic scene understanding and classification. Furthermore, this work lays the groundwork for advanced autonomous vehicle technologies by fostering a deeper understanding of driver behavior, road conditions, and safety-critical scenarios, marking a significant step toward smarter, safer, and more context-aware autonomous driving systems.

Advancing Object Detection in Transportation with Multimodal Large Language Models (MLLMs): A Comprehensive Review and Empirical Testing

Sep 26, 2024

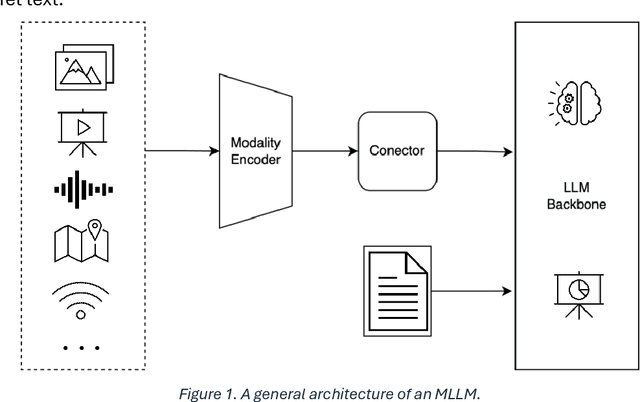

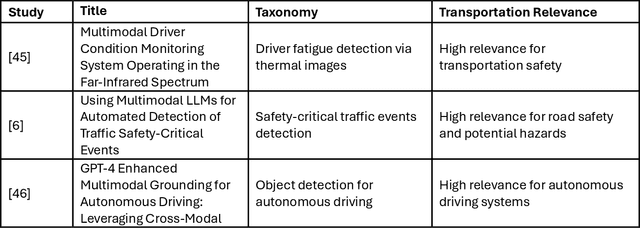

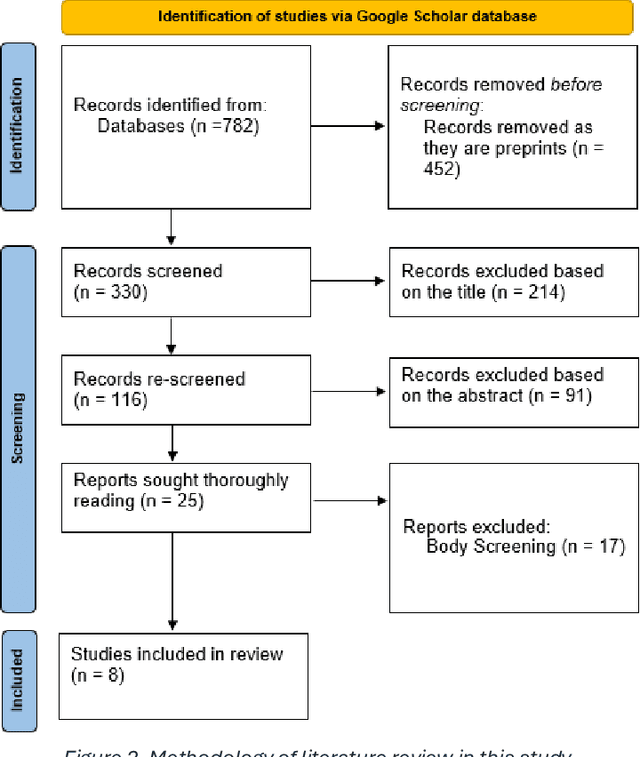

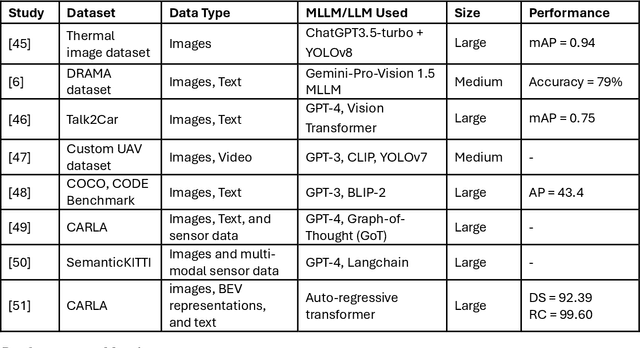

Abstract:This study aims to comprehensively review and empirically evaluate the application of multimodal large language models (MLLMs) and Large Vision Models (VLMs) in object detection for transportation systems. In the first fold, we provide background on the potential benefits of MLLMs in transportation applications and conduct a comprehensive review of current MLLM technologies in previous studies. We highlight their effectiveness and limitations in object detection within various transportation scenarios. The second fold provides an overview of the taxonomy of end-to-end object detection in transportation applications and future directions. Building on this, we proposed an empirical analysis for testing MLLMs on three real-world transportation problems that include object detection tasks, namely road safety attribute extraction, safety-critical event detection, and visual reasoning of thermal images. Our findings provide a detailed assessment of MLLM performance, uncovering both strengths and areas for improvement. Finally, we discuss practical limitations and challenges of MLLMs in enhancing object detection in transportation, thereby offering a roadmap for future research and development in this critical area.

Visual Reasoning and Multi-Agent Approach in Multimodal Large Language Models (MLLMs): Solving TSP and mTSP Combinatorial Challenges

Jun 26, 2024

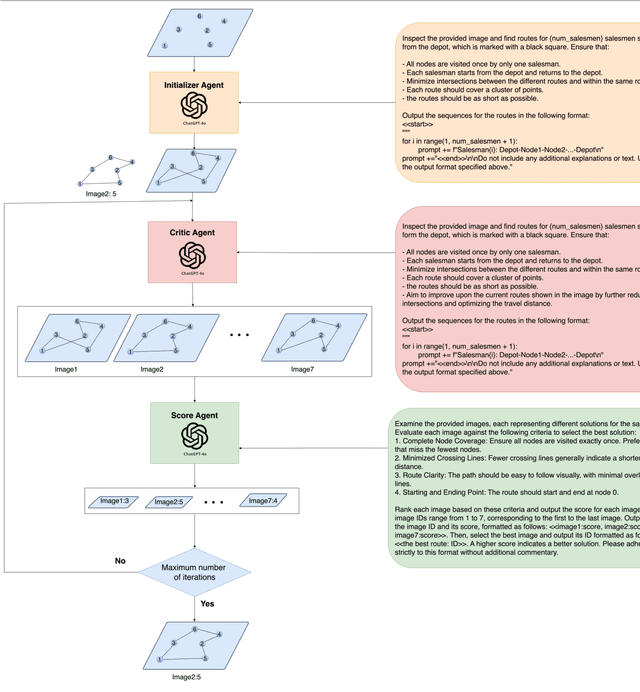

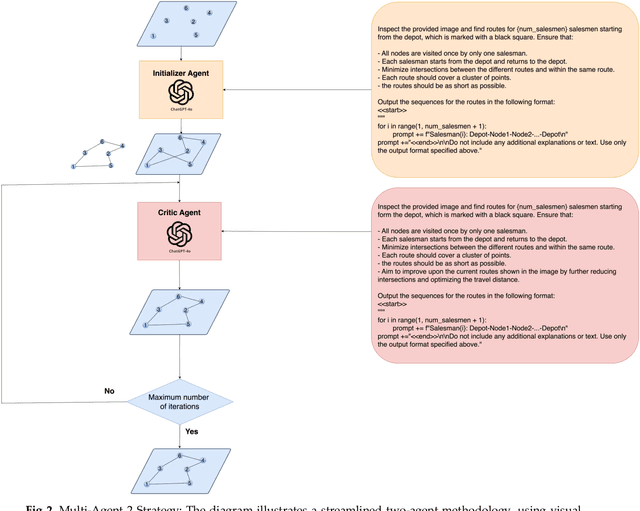

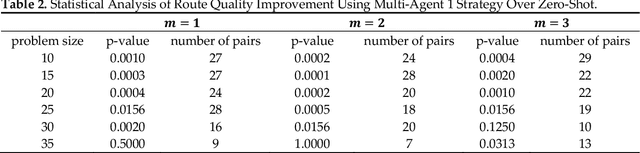

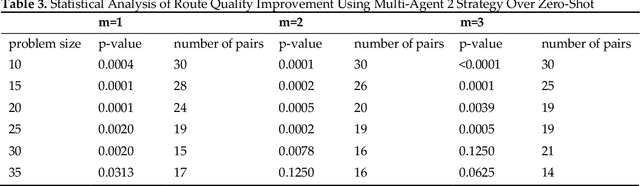

Abstract:Multimodal Large Language Models (MLLMs) harness comprehensive knowledge spanning text, images, and audio to adeptly tackle complex problems, including zero-shot in-context learning scenarios. This study explores the ability of MLLMs to visually solve the Traveling Salesman Problem (TSP) and Multiple Traveling Salesman Problem (mTSP) using images that portray point distributions on a two-dimensional plane. We introduce a novel approach employing multiple specialized agents within the MLLM framework, each dedicated to optimizing solutions for these combinatorial challenges. Our experimental investigation includes rigorous evaluations across zero-shot settings and introduces innovative multi-agent zero-shot in-context scenarios. The results demonstrated that both multi-agent models, Multi-Agent 1 (which includes the Initializer, Critic, and Scorer agents) and Multi-Agent 2 (which comprises only the Initializer and Critic agents), significantly improved solution quality for TSP and mTSP problems. Multi-Agent 1 excelled in environments requiring detailed route refinement and evaluation, providing a robust framework for sophisticated optimizations. In contrast, Multi-Agent 2, focusing on iterative refinements by the Initializer and Critic, proved effective for rapid decision-making scenarios. These experiments yield promising outcomes, showcasing the robust visual reasoning capabilities of MLLMs in addressing diverse combinatorial problems. The findings underscore the potential of MLLMs as powerful tools in computational optimization, offering insights that could inspire further advancements in this promising field. Project link: https://github.com/ahmed-abdulhuy/Solving-TSP-and-mTSP-Combinatorial-Challenges-using-Visual-Reasoning-and-Multi-Agent-Approach-MLLMs-.git

The Use of Multimodal Large Language Models to Detect Objects from Thermal Images: Transportation Applications

Jun 20, 2024

Abstract:The integration of thermal imaging data with Multimodal Large Language Models (MLLMs) constitutes an exciting opportunity for improving the safety and functionality of autonomous driving systems and many Intelligent Transportation Systems (ITS) applications. This study investigates whether MLLMs can understand complex images from RGB and thermal cameras and detect objects directly. Our goals were to 1) assess the ability of the MLLM to learn from information from various sets, 2) detect objects and identify elements in thermal cameras, 3) determine whether two independent modality images show the same scene, and 4) learn all objects using different modalities. The findings showed that both GPT-4 and Gemini were effective in detecting and classifying objects in thermal images. Similarly, the Mean Absolute Percentage Error (MAPE) for pedestrian classification was 70.39% and 81.48% for GPT-4 and Gemini, respectively. Moreover, the MAPE for bike, car, and motorcycle detection was 78.4%, 55.81%, and 96.15%, respectively. Gemini produced MAPE of 66.53%, 59.35%, and 78.18%, respectively. This finding further demonstrates that MLLMs can identify thermal images and can be employed in advanced imaging automation technologies for ITS applications.
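For readers unfamiliar with the metric, MAPE averages the absolute error relative to each true value, so lower is better. The abstract does not say which quantity the error is computed over; the sketch below assumes per-image object counts purely for illustration, and the sample numbers are hypothetical rather than taken from the study.

```python
def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent.

    Every actual value must be non-zero, because each error is
    expressed relative to it.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Hypothetical per-image object counts (ground truth vs. model answer).
true_counts = [4, 2, 5, 1]
model_counts = [3, 2, 2, 2]
score = mape(true_counts, model_counts)
print(f"MAPE = {score:.2f}%")
```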

Exploring Traffic Crash Narratives in Jordan Using Text Mining Analytics

Jun 11, 2024

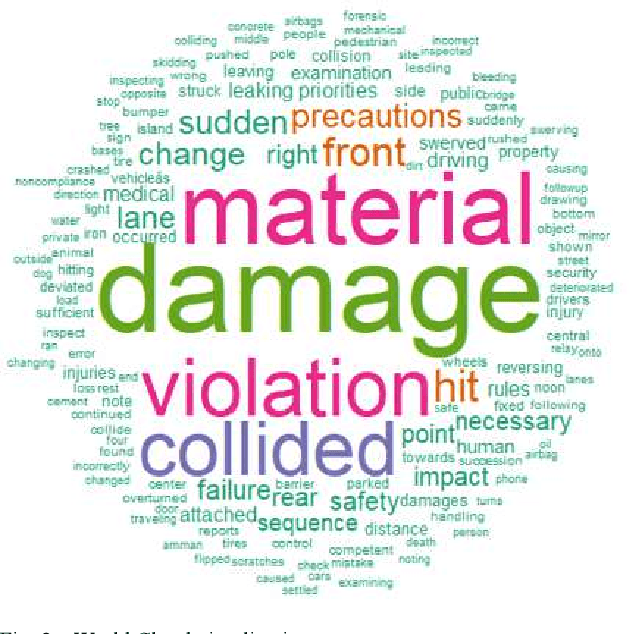

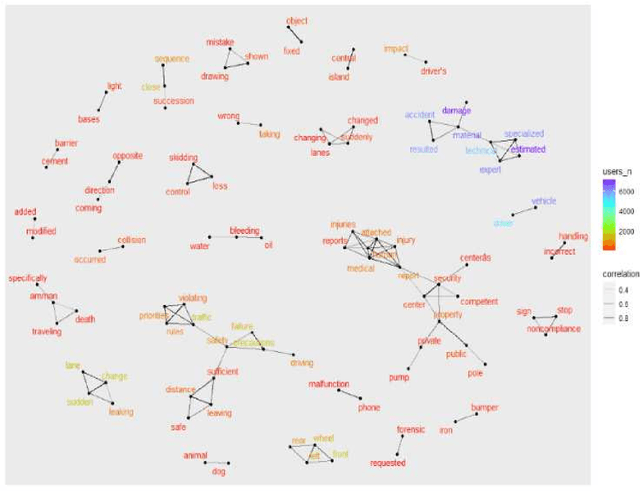

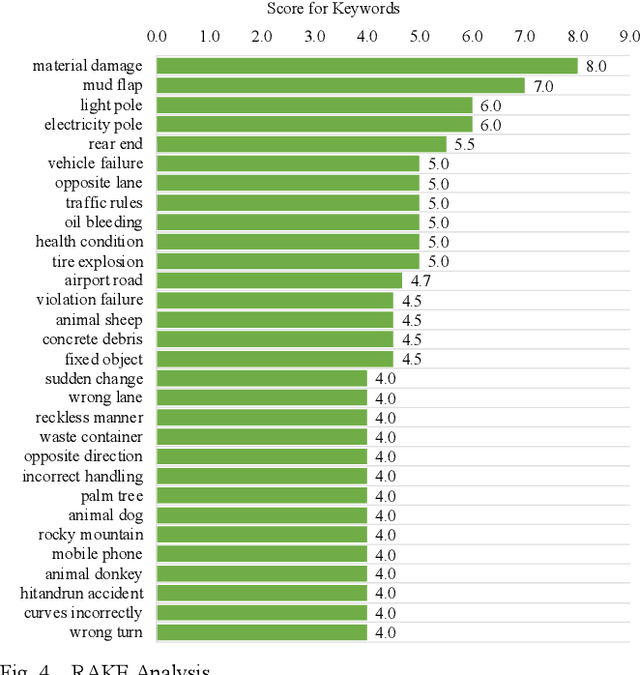

Abstract:This study explores traffic crash narratives in an attempt to inform and enhance effective traffic safety policies using text-mining analytics. Text mining techniques are employed to unravel key themes and trends within the narratives, aiming to provide a deeper understanding of the factors contributing to traffic crashes. This study collected crash data from five major freeways in Jordan that cover narratives of 7,587 records from 2018-2022. An unsupervised learning method was adopted to learn the pattern from crash data. Various text mining techniques, such as topic modeling, keyword extraction, and Word Co-Occurrence Network, were also used to reveal the co-occurrence of crash patterns. Results show that text mining analytics is a promising method and underscore the multifactorial nature of traffic crashes, including intertwining human decisions and vehicular conditions. The recurrent themes across all analyses highlight the need for a balanced approach to road safety, merging both proactive and reactive measures. Emphasis on driver education and awareness around animal-related incidents is paramount.
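A word co-occurrence network of the kind mentioned in the abstract can be built by counting how often two terms appear in the same narrative. The following is a minimal sketch of that idea, not the study's pipeline: the stop-word list, tokenization by whitespace, and the sample narratives are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations

# Small illustrative stop-word list (assumed, not from the study).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "on", "in", "with", "was"}


def cooccurrence(narratives):
    """Count how often each unordered word pair shares a narrative."""
    pairs = Counter()
    for text in narratives:
        # Deduplicate and sort so each pair has one canonical ordering.
        words = sorted({w for w in text.lower().split() if w not in STOPWORDS})
        pairs.update(combinations(words, 2))
    return pairs


# Hypothetical narratives, not taken from the study's data.
narratives = [
    "driver lost control on wet road",
    "driver hit animal crossing road",
    "tire burst and driver lost control",
]
edges = cooccurrence(narratives)
for pair, count in edges.most_common(3):
    print(pair, count)
```

Each counted pair corresponds to a weighted edge in the network, so frequently paired terms show up as the strongest links.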

Eyeballing Combinatorial Problems: A Case Study of Using Multimodal Large Language Models to Solve Traveling Salesman Problems

Jun 11, 2024

Abstract:Multimodal Large Language Models (MLLMs) have demonstrated proficiency in processing diverse modalities, including text, images, and audio. These models leverage extensive pre-existing knowledge, enabling them to address complex problems with minimal to no specific training examples, as evidenced in few-shot and zero-shot in-context learning scenarios. This paper investigates the use of MLLMs' visual capabilities to 'eyeball' solutions for the Traveling Salesman Problem (TSP) by analyzing images of point distributions on a two-dimensional plane. Our experiments aimed to validate the hypothesis that MLLMs can effectively 'eyeball' viable TSP routes. The results from zero-shot, few-shot, self-ensemble, and self-refine zero-shot evaluations show promising outcomes. We anticipate that these findings will inspire further exploration into MLLMs' visual reasoning abilities to tackle other combinatorial problems.
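Whatever produces a TSP route, whether a solver or a model reading an image, its quality is typically judged by the length of the closed tour and by how far that length exceeds a reference tour. The sketch below shows one way to score a proposed visiting order; the function names and the four-point instance are hypothetical and are not the paper's evaluation code.

```python
import math


def tour_length(points, order):
    """Length of the closed tour visiting points in the given order."""
    if sorted(order) != list(range(len(points))):
        raise ValueError("order must visit every point exactly once")
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def optimality_gap(candidate, reference):
    """Relative excess of a candidate tour over a reference, in percent."""
    return 100.0 * (candidate - reference) / reference


# Hypothetical instance: the corners of a unit square.
points = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
perimeter = tour_length(points, [0, 1, 2, 3])  # walks the square's edges
crossing = tour_length(points, [0, 2, 1, 3])   # uses both diagonals
gap = optimality_gap(crossing, perimeter)
print(f"perimeter={perimeter:.3f} crossing={crossing:.3f} gap={gap:.1f}%")
```

The validity check matters when the order comes from free-form model output, since a skipped or repeated point would otherwise yield a misleadingly short tour.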

Advancing Roadway Sign Detection with YOLO Models and Transfer Learning

Jun 11, 2024

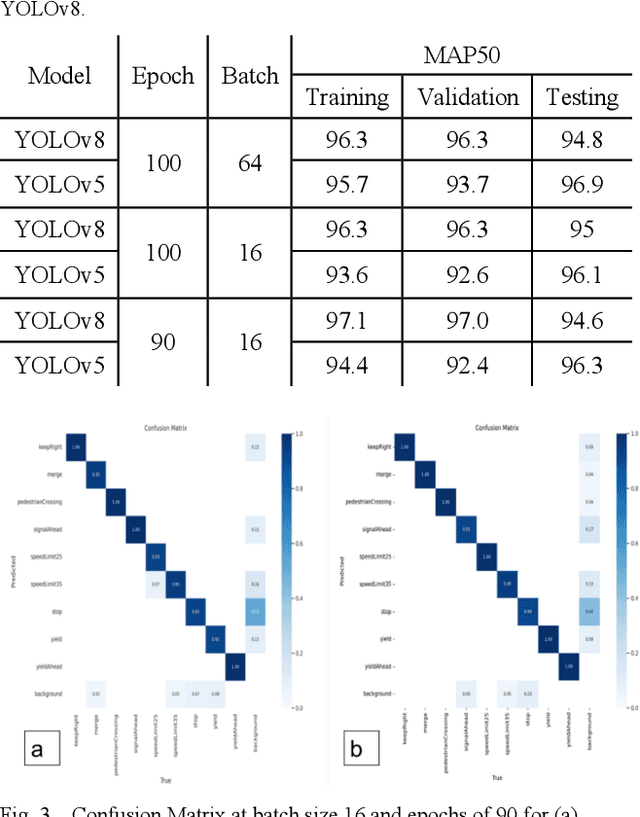

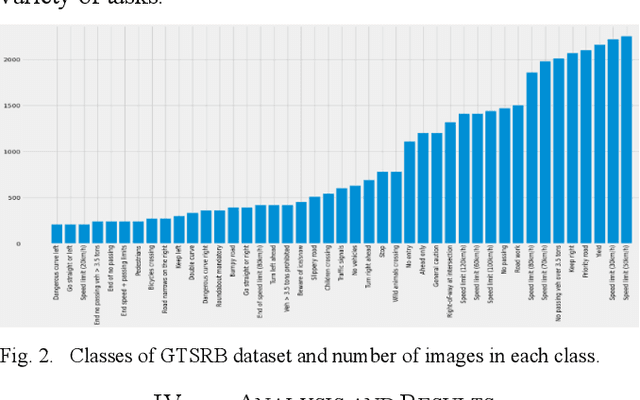

Abstract:Roadway sign detection and recognition is an essential element of Advanced Driving Assistant Systems (ADAS). Several artificial intelligence methods have been widely used for this task, among them YOLOv5 and YOLOv8. In this paper, we used modified YOLOv5 and YOLOv8 models to detect and classify different roadway signs under different illumination conditions. Experimental results indicated that for the YOLOv8 model, varying the number of epochs and batch size yields consistent MAP50 scores, ranging from 94.6% to 97.1% on the testing set. The YOLOv5 model demonstrates competitive performance, with MAP50 scores ranging from 92.4% to 96.9%. These results suggest that both models perform well across different training setups, with YOLOv8 generally achieving slightly higher MAP50 scores, offering valuable insights for practitioners seeking reliable and adaptable solutions in object detection applications.
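MAP50 conventionally denotes mean average precision with an intersection-over-union (IoU) threshold of 0.5, meaning a predicted box counts as a match only if it overlaps a ground-truth box of the same class by at least that much. The sketch below illustrates only the IoU matching step under that standard definition; the box coordinates are hypothetical and the helper names are not from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Clamp at zero so disjoint boxes contribute no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match_at_50(predicted, ground_truth):
    """True when the boxes overlap enough to count at the 0.5 threshold."""
    return iou(predicted, ground_truth) >= 0.5


# Hypothetical boxes in pixel coordinates.
truth = (10, 10, 50, 50)
good = (12, 12, 52, 52)   # slightly shifted prediction
poor = (40, 40, 80, 80)   # overlaps only one corner
print(is_match_at_50(good, truth), is_match_at_50(poor, truth))
```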

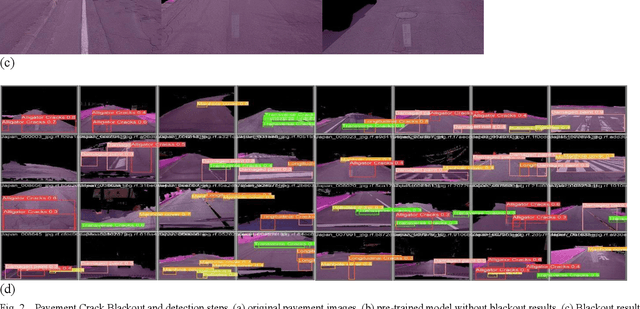

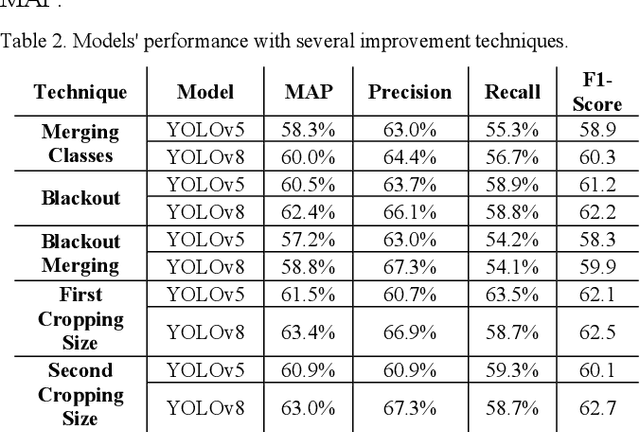

Automated Pavement Cracks Detection and Classification Using Deep Learning

Jun 11, 2024

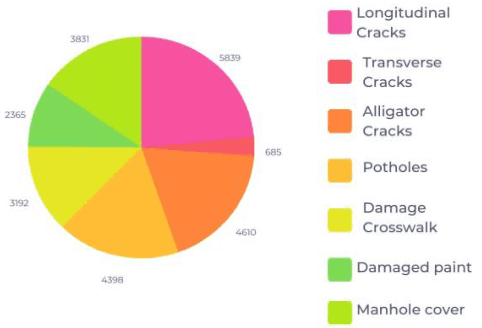

Abstract:Monitoring asset conditions is a crucial factor in building efficient transportation asset management. Because of substantial advances in image processing, traditional manual classification has been largely replaced by semi-automatic/automatic techniques. As a result, automated asset detection and classification techniques are required. This paper proposes a methodology to detect and classify roadway pavement cracks using the well-known You Only Look Once (YOLO) version 5 (YOLOv5) and version 8 (YOLOv8) algorithms. Experimental results indicated that the precision of pavement crack detection reaches up to 67.3% under different illumination conditions and image sizes. The findings of this study can assist highway agencies in accurately detecting and classifying asset conditions under different illumination conditions. This will reduce the cost and time associated with manual inspection, which can greatly reduce the cost of highway asset maintenance.

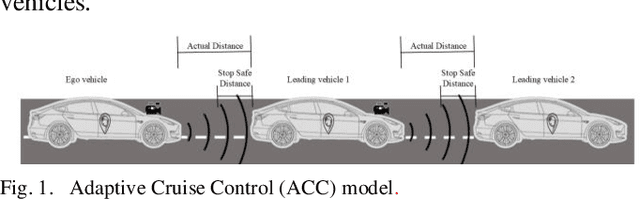

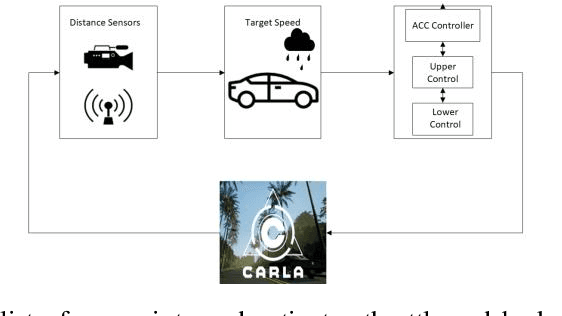

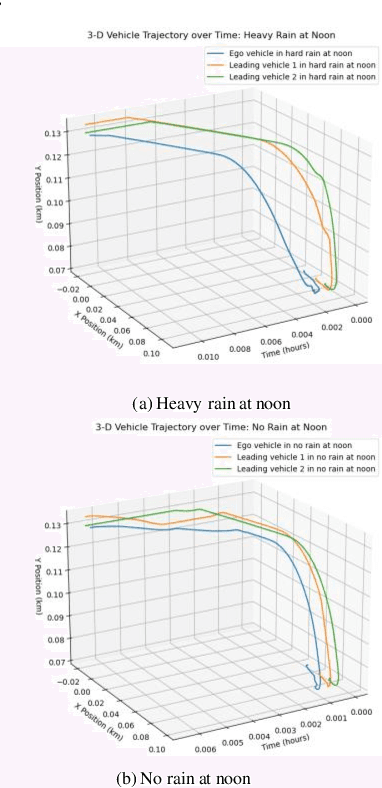

Evaluation and Optimization of Adaptive Cruise Control in Autonomous Vehicles using the CARLA Simulator: A Study on Performance under Wet and Dry Weather Conditions

May 02, 2024

Abstract:Adaptive Cruise Control (ACC) automatically adjusts the speed of the ego vehicle to maintain a safe distance from the vehicle ahead. The primary purpose of this research is to use cutting-edge computing approaches to locate and track vehicles in real time under various conditions to achieve a safe ACC. The paper examines the extension of ACC employing depth cameras and radar sensors within Autonomous Vehicles (AVs) to respond in real time to changing weather conditions, using the Car Learning to Act (CARLA) simulation platform at noon. The ego vehicle controller's decision to accelerate or decelerate depends on the speed of the leading vehicle and the safe distance from that vehicle. Simulation results show that Proportional Integral Derivative (PID) control of autonomous vehicles using a depth camera and radar sensors reduces the speed of the leading vehicle and the ego vehicle when it rains. In addition, longer travel time was observed for both vehicles in rainy conditions than in dry conditions. PID control also protects the leading vehicle from rear-end collisions.
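The accelerate-or-decelerate decision described in the abstract can be pictured as a PID loop acting on the difference between the measured gap and a desired safe gap. The sketch below is a generic illustration under stated assumptions: the constant time-headway policy, the gains, and the clamping to a normalized command are all hypothetical, and no CARLA API or sensor processing from the paper is reproduced.

```python
class PID:
    """Textbook discrete PID controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, when there is no history.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def safe_gap(ego_speed, time_headway=2.0, standstill=5.0):
    """Desired gap in metres under an assumed constant time-headway policy."""
    return standstill + time_headway * ego_speed


def acc_command(pid, gap, ego_speed, dt):
    """Positive output means accelerate, negative means brake; clamped to [-1, 1]."""
    error = gap - safe_gap(ego_speed)
    return max(-1.0, min(1.0, pid.step(error, dt)))


# Hypothetical gains and measurements (gap in metres, speed in m/s).
pid = PID(kp=0.1, ki=0.01, kd=0.05)
too_close = acc_command(pid, gap=15.0, ego_speed=20.0, dt=0.05)
print(too_close)
```

In wet conditions one would expect a longer headway or standstill distance to be chosen, which is consistent with the lower speeds and longer travel times the abstract reports, although the paper's actual parameters are not given here.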
