Swarup Ranjan Behera

Are Multimodal Foundation Models All That Is Needed for Emofake Detection?

Sep 19, 2025

Abstract:In this work, we investigate multimodal foundation models (MFMs) for EmoFake detection (EFD) and hypothesize that they will outperform audio foundation models (AFMs). MFMs, due to their cross-modal pre-training, learn emotional patterns from multiple modalities, while AFMs rely only on audio. As such, MFMs can better recognize unnatural emotional shifts and inconsistencies in manipulated audio, making them more effective at distinguishing real from fake emotional expressions. To validate our hypothesis, we conduct a comprehensive comparative analysis of state-of-the-art (SOTA) MFMs (e.g. LanguageBind) alongside AFMs (e.g. WavLM). Our experiments confirm that MFMs surpass AFMs for EFD. Beyond the performance of individual foundation models (FMs), we explore FM fusion, motivated by findings in related research areas such as synthetic speech detection and speech emotion recognition. To this end, we propose SCAR, a novel framework for effective fusion. SCAR introduces a nested cross-attention mechanism, where representations from FMs interact at two stages sequentially to refine information exchange. Additionally, a self-attention refinement module further enhances feature representations by reinforcing important cross-FM cues while suppressing noise. Through SCAR with synergistic fusion of MFMs, we achieve SOTA performance, surpassing standalone FMs, conventional fusion approaches, and previous works on EFD.

Rethinking Cross-Corpus Speech Emotion Recognition Benchmarking: Are Paralinguistic Pre-Trained Representations Sufficient?

Sep 19, 2025

Abstract:Recent benchmarks evaluating pre-trained models (PTMs) for cross-corpus speech emotion recognition (SER) have overlooked PTMs pre-trained for paralinguistic speech processing (PSP), raising concerns about their reliability, since SER is inherently a paralinguistic task. We hypothesize that PSP-focused PTMs will perform better in cross-corpus SER settings. To test this, we analyze representations from state-of-the-art PTMs, including paralinguistic, monolingual, multilingual, and speaker recognition models. Our results confirm that TRILLsson (a paralinguistic PTM) outperforms the others, reinforcing the need to consider PSP-focused PTMs in cross-corpus SER benchmarks. This study enhances benchmark trustworthiness and guides PTM evaluations for reliable cross-corpus SER.

Investigating Prosodic Signatures via Speech Pre-Trained Models for Audio Deepfake Source Attribution

Dec 23, 2024

Abstract:In this work, we investigate various state-of-the-art (SOTA) speech pre-trained models (PTMs) for their capability to capture prosodic signatures of the generative sources for audio deepfake source attribution (ADSD). These prosodic characteristics can be considered one of the major signatures for ADSD, as they are unique to each source. Thus, the better a PTM captures prosodic signatures, the better its ADSD performance. We consider various SOTA PTMs that have shown top performance in different prosodic tasks for our experiments on the benchmark datasets ASVSpoof 2019 and CFAD. x-vector (a speaker recognition PTM) attains the highest performance among all the PTMs considered, despite having the fewest model parameters. This higher performance may be due to its speaker recognition pre-training, which enables it to capture the unique prosodic characteristics of the sources more effectively. Further, motivated by tasks such as audio deepfake detection and speech recognition, where fusion of PTM representations leads to improved performance, we explore the same and propose FINDER for effective fusion of such representations. With fusion of Whisper and x-vector representations through FINDER, we achieve the top performance compared to all the individual PTMs as well as baseline fusion techniques, attaining SOTA performance.

Beyond Speech and More: Investigating the Emergent Ability of Speech Foundation Models for Classifying Physiological Time-Series Signals

Oct 16, 2024

Abstract:Despite being trained exclusively on speech data, speech foundation models (SFMs) like Whisper have shown impressive performance in non-speech tasks such as audio classification. This is partly because speech shares some common traits with audio, enabling SFMs to transfer effectively. In this study, we push the boundaries by evaluating SFMs on a more challenging out-of-domain (OOD) task: classifying physiological time-series signals. We test two key hypotheses: first, that SFMs can generalize to physiological signals by capturing shared temporal patterns; second, that multilingual SFMs will outperform others due to their exposure to greater variability during pre-training, leading to more robust, generalized representations. Our experiments, conducted for stress recognition using ECG (Electrocardiogram), EMG (Electromyography), and EDA (Electrodermal Activity) signals, reveal that models trained on SFM-derived representations outperform those trained on raw physiological signals. Among all models, multilingual SFMs achieve the highest accuracy, supporting our hypothesis and demonstrating their OOD capabilities. This work positions SFMs as promising tools for new uncharted domains beyond speech.

Multi-View Multi-Task Modeling with Speech Foundation Models for Speech Forensic Tasks

Oct 16, 2024

Abstract:Speech forensic tasks (SFTs), such as automatic speaker recognition (ASR), speech emotion recognition (SER), gender recognition (GR), and age estimation (AE), find use in different security and biometric applications. Previous works have applied various techniques, with recent studies focusing on applying speech foundation models (SFMs) for improved performance. However, most prior efforts have centered on building individual models for each task separately, despite the inherent similarities among these tasks. This isolated approach results in higher computational resource requirements, increased costs, time consumption, and maintenance challenges. In this study, we address these challenges by employing a multi-task learning strategy. Firstly, we explore the various state-of-the-art (SOTA) SFMs by extracting their representations for learning these SFTs and investigating their effectiveness at each task specifically. Secondly, we analyze the performance of the extracted representations on the SFTs in a multi-task learning framework. We observe a decline in performance when SFTs are modeled together compared to individual task-specific models, and as a remedy, we propose multi-view learning (MVL). Views are representations from different SFMs transformed into distinct abstract spaces by characteristics unique to each SFM. By leveraging MVL, we integrate these diverse representations to capture complementary information across tasks, enhancing the shared learning process. We introduce a new framework called TANGO (Task Alignment with iNter-view Gated Optimal transport) to implement this approach. With TANGO, we achieve the topmost performance in comparison to individual SFM representations as well as baseline fusion techniques across benchmark datasets such as CREMA-D, emo-DB, and BAVED.

SeQuiFi: Mitigating Catastrophic Forgetting in Speech Emotion Recognition with Sequential Class-Finetuning

Oct 16, 2024

Abstract:In this work, we introduce SeQuiFi, a novel approach for mitigating catastrophic forgetting (CF) in speech emotion recognition (SER). SeQuiFi adopts a sequential class-finetuning strategy, where the model is fine-tuned incrementally on one emotion class at a time, preserving and enhancing retention for each class. While various state-of-the-art (SOTA) methods, such as regularization-based, memory-based, and weight-averaging techniques, have been proposed to address CF, it still remains a challenge, particularly with diverse and multilingual datasets. Through extensive experiments, we demonstrate that SeQuiFi significantly outperforms both vanilla fine-tuning and SOTA continual learning techniques in terms of accuracy and F1 scores on multiple benchmark SER datasets, including CREMA-D, RAVDESS, Emo-DB, MESD, and SHEMO, covering different languages.

Representation Loss Minimization with Randomized Selection Strategy for Efficient Environmental Fake Audio Detection

Sep 24, 2024

Abstract:The adaptation of foundation models has significantly advanced environmental audio deepfake detection (EADD), a rapidly growing area of research. These models are typically fine-tuned or utilized in their frozen states for downstream tasks. However, the high dimensionality of their representations can substantially increase the parameter count of downstream models, leading to higher computational demands. A common remedy is to compress these representations by leveraging state-of-the-art (SOTA) unsupervised dimensionality reduction techniques (PCA, SVD, KPCA, GRP) for efficient EADD. However, with the application of such techniques, we observe a drop in performance. In this paper, we show that representation vectors contain redundant information, and that randomly selecting 40-50% of representation values and building downstream models on them preserves or sometimes even improves performance. We show that such random selection preserves more performance than the SOTA dimensionality reduction techniques while reducing model parameters and inference time by about half.
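The random selection idea described in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `random_feature_subset`, the 768-dimensional random embeddings, and the fixed seed are all assumptions made for illustration. The only point shown is that a fixed random subset of dimensions is drawn once and then applied to every representation vector.

```python
import numpy as np

def random_feature_subset(features, keep_ratio=0.5, seed=0):
    """Keep a fixed random subset of representation dimensions.

    features: array of shape (n_samples, dim).
    Returns (reduced_features, kept_indices).
    """
    rng = np.random.default_rng(seed)
    dim = features.shape[1]
    n_keep = max(1, int(round(dim * keep_ratio)))
    # Sample dimension indices without replacement, sorted for a stable order.
    kept = np.sort(rng.choice(dim, size=n_keep, replace=False))
    return features[:, kept], kept

# Hypothetical stand-in for frozen foundation-model embeddings (assumption).
embeddings = np.random.default_rng(1).normal(size=(8, 768))
reduced, kept = random_feature_subset(embeddings, keep_ratio=0.5)
print(reduced.shape)  # (8, 384)
```

In such a setup the same `kept` indices would need to be reused for training and test data, so that the downstream model always sees the same dimensions.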

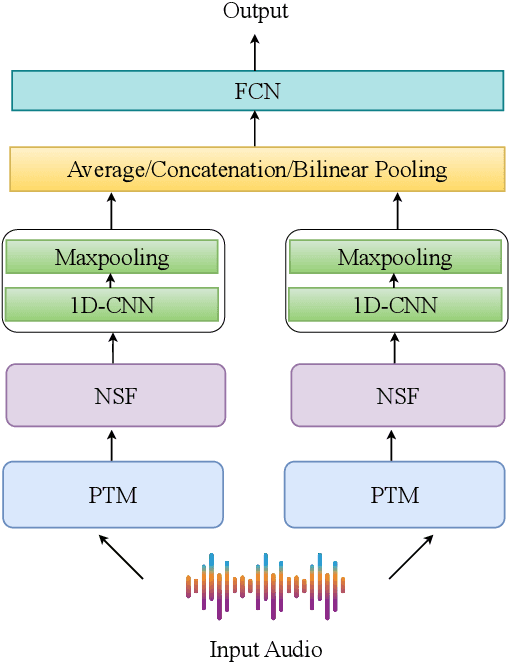

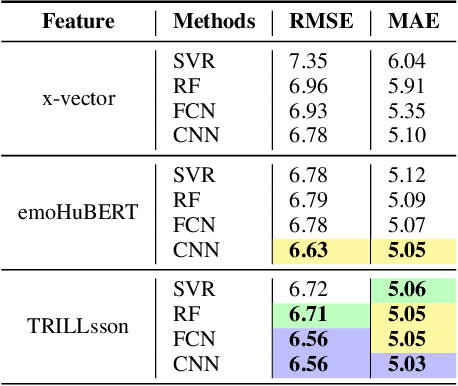

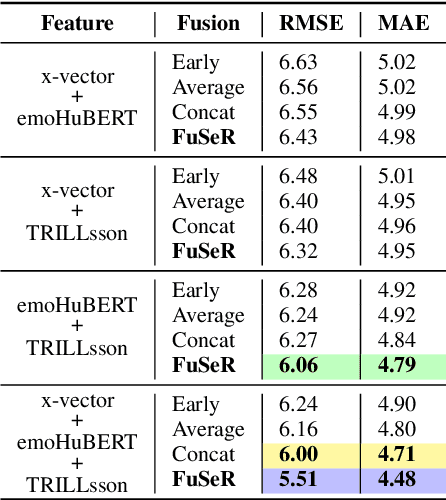

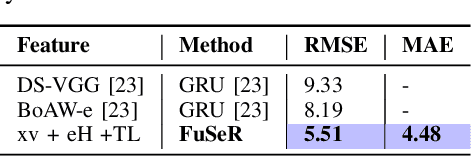

Avengers Assemble: Amalgamation of Non-Semantic Features for Depression Detection

Sep 22, 2024

Abstract:In this study, we address the challenge of depression detection from speech, focusing on the potential of non-semantic features (NSFs) to capture subtle markers of depression. While prior research has leveraged various features for this task, NSFs, extracted from pre-trained models (PTMs) designed for non-semantic tasks such as paralinguistic speech processing (TRILLsson), speaker recognition (x-vector), and emotion recognition (emoHuBERT), have shown significant promise. However, the potential of combining these diverse features has not been fully explored. In this work, we demonstrate that the amalgamation of NSFs results in complementary behavior, leading to enhanced depression detection performance. To this end, we introduce a simple, novel framework, FuSeR, designed to effectively combine these features. Our results show that FuSeR outperforms models utilizing individual NSFs as well as baseline fusion techniques and obtains state-of-the-art (SOTA) performance on the E-DAIC benchmark with an RMSE of 5.51 and an MAE of 4.48, establishing it as a robust approach for depression detection.

Are Music Foundation Models Better at Singing Voice Deepfake Detection? Far-Better Fuse them with Speech Foundation Models

Sep 21, 2024

Abstract:In this study, for the first time, we extensively investigate whether music foundation models (MFMs) or speech foundation models (SFMs) work better for singing voice deepfake detection (SVDD), which has recently attracted attention in the research community. For this, we perform a comprehensive comparative study of state-of-the-art (SOTA) MFMs (MERT variants and music2vec) and SFMs (pre-trained for general speech representation learning as well as speaker recognition). We show that speaker recognition SFM representations perform the best amongst all the foundation models (FMs), and this performance can be attributed to their higher efficacy in capturing pitch, tone, intensity, and similar characteristics present in singing voices. We also explore the fusion of FMs to exploit their complementary behavior for improved SVDD, and we propose a novel framework, FIONA, for this purpose. With FIONA, through the synchronization of x-vector (speaker recognition SFM) and MERT-v1-330M (MFM), we report the best performance with the lowest Equal Error Rate (EER) of 13.74%, beating all the individual FMs as well as baseline FM fusions and achieving SOTA results.
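For readers unfamiliar with the Equal Error Rate reported above, the following is a minimal sketch of one common way to estimate it from detector scores. It is a generic illustration, not the evaluation code used in the paper: the function name `equal_error_rate`, the convention that higher scores mean "bona fide", and the toy scores are all assumptions.

```python
import numpy as np

def equal_error_rate(scores, labels):
    """Estimate the EER from scores (higher = bona fide) and labels (1 = bona fide, 0 = fake)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    thresholds = np.unique(scores)  # sorted candidate thresholds
    genuine = scores[labels == 1]
    fake = scores[labels == 0]
    # False acceptance: fake samples scored at or above the threshold.
    far = np.array([(fake >= t).mean() for t in thresholds])
    # False rejection: bona fide samples scored below the threshold.
    frr = np.array([(genuine < t).mean() for t in thresholds])
    # EER is taken where the two error rates are closest.
    idx = np.argmin(np.abs(far - frr))
    return (far[idx] + frr[idx]) / 2.0

# Toy scores (assumption): one fake and one bona fide sample overlap.
eer_overlap = equal_error_rate([0.1, 0.6, 0.4, 0.9], [0, 0, 1, 1])
eer_clean = equal_error_rate([0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1])
print(eer_overlap, eer_clean)  # 0.5 0.0
```

This threshold sweep is a simple approximation; evaluation toolkits often interpolate between thresholds, so values may differ slightly on real data.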

Strong Alone, Stronger Together: Synergizing Modality-Binding Foundation Models with Optimal Transport for Non-Verbal Emotion Recognition

Sep 21, 2024

Abstract:In this study, we investigate multimodal foundation models (MFMs) for emotion recognition from non-verbal sounds. We hypothesize that MFMs, with their joint pre-training across multiple modalities, will be more effective in non-verbal emotion recognition (NVER) by better interpreting and differentiating subtle emotional cues that may be ambiguous to audio-only foundation models (AFMs). To validate our hypothesis, we extract representations from state-of-the-art (SOTA) MFMs and AFMs and evaluate them on benchmark NVER datasets. We also investigate the potential of combining selected foundation model representations to further enhance NVER, inspired by research in speech recognition and audio deepfake detection. To achieve this, we propose a framework called MATA (Intra-Modality Alignment through Transport Attention). Through MATA coupled with the combination of two MFMs, LanguageBind and ImageBind, we report the top performance, with accuracies of 76.47%, 77.40%, and 75.12% and F1-scores of 70.35%, 76.19%, and 74.63% on the ASVP-ESD, JNV, and VIVAE datasets, respectively, outperforming individual FMs and baseline fusion techniques and achieving SOTA on these benchmark datasets.
