Suyoung Choi

RaiLab, Department of Mechanical Engineering, KAIST

Reference Free Platform Adaptive Locomotion for Quadrupedal Robots using a Dynamics Conditioned Policy

May 21, 2025

Abstract:This article presents Platform Adaptive Locomotion (PAL), a unified control method for quadrupedal robots with different morphologies and dynamics. We leverage deep reinforcement learning to train a single locomotion policy on procedurally generated robots. The policy maps proprioceptive robot state information and base velocity commands into desired joint actuation targets, which are conditioned using a latent embedding of the temporally local system dynamics. We explore two conditioning strategies - one using a GRU-based dynamics encoder and another using a morphology-based property estimator - and show that morphology-aware conditioning outperforms temporal dynamics encoding regarding velocity task tracking for our hardware test on ANYmal C. Our results demonstrate that both approaches achieve robust zero-shot transfer across multiple unseen simulated quadrupeds. Furthermore, we demonstrate the need for careful robot reference modelling during training, enabling us to reduce the velocity tracking error by up to 30% compared to the baseline method. Despite PAL not surpassing the best-performing reference-free controller in all cases, our analysis uncovers critical design choices and informs improvements to the state of the art.

Legged Robot State Estimation With Invariant Extended Kalman Filter Using Neural Measurement Network

Feb 01, 2024

Abstract:This paper introduces a novel proprioceptive state estimator for legged robots that combines model-based filters and deep neural networks. Recent studies have shown that neural networks such as multi-layer perceptrons or recurrent neural networks can estimate the robot states, including contact probability and linear velocity. Inspired by this, we develop a state estimation framework that integrates a neural measurement network (NMN) with an invariant extended Kalman filter. We show that our framework improves estimation performance in various terrains. Existing studies that combine model-based filters and learning-based approaches typically use real-world data. However, our approach relies solely on simulation data, as it allows us to easily obtain extensive data. This difference leads to a gap between the learning and the inference domain, commonly referred to as a sim-to-real gap. We address this challenge by adapting existing learning techniques and regularization. To validate our proposed method, we conduct experiments using a quadruped robot on four types of terrain: flat, debris, soft, and slippery. We observe that our approach significantly reduces position drift compared to the existing model-based state estimator.

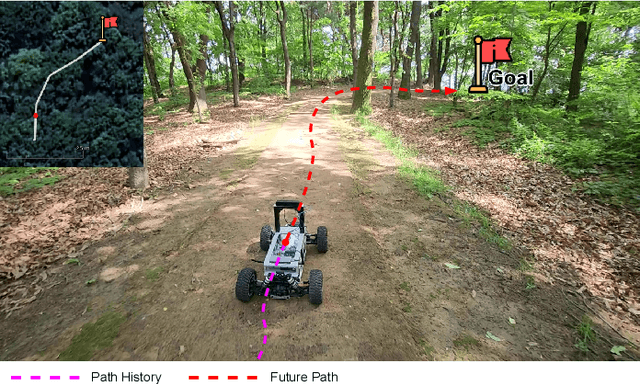

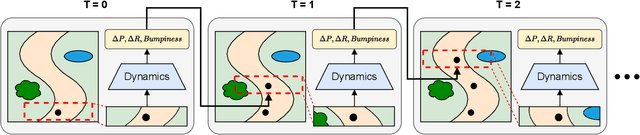

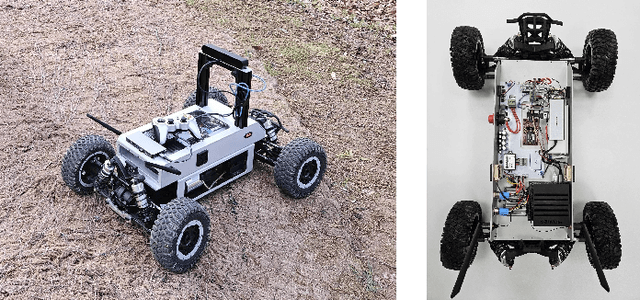

Learning Vehicle Dynamics from Cropped Image Patches for Robot Navigation in Unpaved Outdoor Terrains

Sep 06, 2023

Abstract:In the realm of autonomous mobile robots, safe navigation through unpaved outdoor environments remains a challenging task. Due to the high-dimensional nature of sensor data, extracting relevant information becomes a complex problem, which hinders adequate perception and path planning. Previous works have shown promising performance in extracting global features from full-sized images. However, they often face challenges in capturing essential local information. In this paper, we propose Crop-LSTM, which iteratively takes cropped image patches around the robot's current position and predicts the future position, orientation, and bumpiness. Our method performs local feature extraction by paying attention to corresponding image patches along the predicted robot trajectory in the 2D image plane. This enables more accurate predictions of the robot's future trajectory. With our wheeled mobile robot platform Raicart, we demonstrated the effectiveness of Crop-LSTM for point-goal navigation in an unpaved outdoor environment. Our method enabled safe and robust navigation using RGBD images in challenging unpaved outdoor terrains. The summary video is available at https://youtu.be/iIGNZ8ignk0.

Learning Whole-body Manipulation for Quadrupedal Robot

Sep 06, 2023

Abstract:We propose a learning-based system for enabling quadrupedal robots to manipulate large, heavy objects using their whole body. Our system is based on a hierarchical control strategy that uses a deep latent variable embedding which captures manipulation-relevant information from interactions, proprioception, and action history, allowing the robot to implicitly understand object properties. We evaluate our framework in both simulation and real-world scenarios. In simulation, it achieves a success rate of 93.6% in accurately re-positioning and re-orienting various objects within a tolerance of 0.03 m and 5°. Real-world experiments demonstrate the successful manipulation of objects such as a 19.2 kg water-filled drum and a 15.3 kg plastic box filled with heavy objects, while the robot weighs 27 kg. Unlike previous works that focus on manipulating small and light objects using prehensile manipulation, our framework illustrates the possibility of using a quadruped's entire body to manipulate large and heavy objects that cannot be grasped. Our method does not require explicit object modeling and offers significant computational efficiency compared to optimization-based methods. The video can be found at https://youtu.be/fO_PVr27QxU.
