Hyunsik Oh

Legged Robot State Estimation With Invariant Extended Kalman Filter Using Neural Measurement Network

Feb 01, 2024

Abstract: This paper introduces a novel proprioceptive state estimator for legged robots that combines model-based filters and deep neural networks. Recent studies have shown that neural networks such as multi-layer perceptrons or recurrent neural networks can estimate robot states, including contact probability and linear velocity. Inspired by this, we develop a state estimation framework that integrates a neural measurement network (NMN) with an invariant extended Kalman filter. We show that our framework improves estimation performance on various terrains. Existing studies that combine model-based filters and learning-based approaches typically use real-world data. In contrast, our approach relies solely on simulation data, which makes it easy to obtain extensive data. This difference leads to a gap between the learning and inference domains, commonly referred to as the sim-to-real gap. We address this challenge by adapting existing learning techniques and regularization. To validate our proposed method, we conduct experiments using a quadruped robot on four types of terrain: *flat*, *debris*, *soft*, and *slippery*. We observe that our approach significantly reduces position drift compared to the existing model-based state estimator.

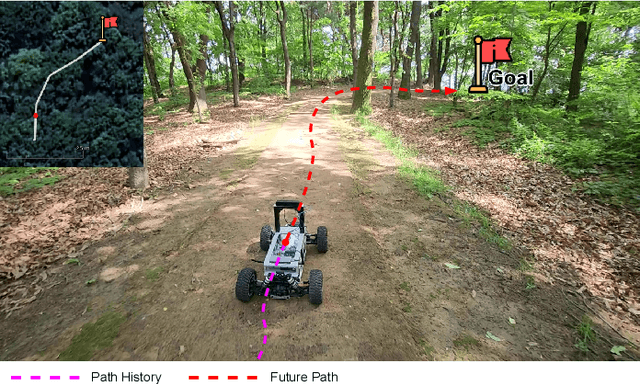

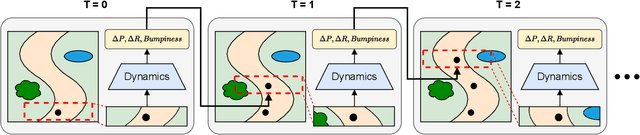

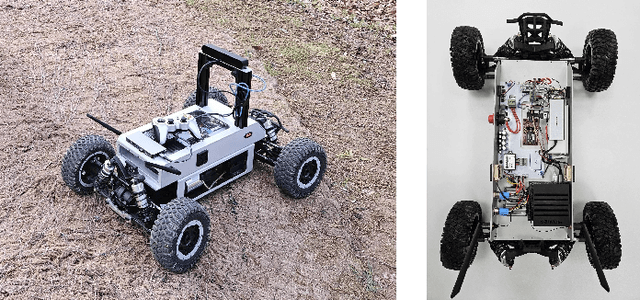

Learning Vehicle Dynamics from Cropped Image Patches for Robot Navigation in Unpaved Outdoor Terrains

Sep 06, 2023

Abstract: In the realm of autonomous mobile robots, safe navigation through unpaved outdoor environments remains a challenging task. Due to the high-dimensional nature of sensor data, extracting relevant information becomes a complex problem, which hinders adequate perception and path planning. Previous works have shown promising performance in extracting global features from full-sized images. However, they often face challenges in capturing essential local information. In this paper, we propose Crop-LSTM, which iteratively takes cropped image patches around the robot's current position and predicts the future position, orientation, and bumpiness. Our method performs local feature extraction by paying attention to corresponding image patches along the predicted robot trajectory in the 2D image plane. This enables more accurate predictions of the robot's future trajectory. With our wheeled mobile robot platform Raicart, we demonstrated the effectiveness of Crop-LSTM for point-goal navigation in an unpaved outdoor environment. Our method enabled safe and robust navigation using RGBD images in challenging unpaved outdoor terrains. The summary video is available at https://youtu.be/iIGNZ8ignk0.

Not Only Rewards But Also Constraints: Applications on Legged Robot Locomotion

Aug 24, 2023

Abstract: Several earlier studies have shown impressive control performance in complex robotic systems by designing the controller using a neural network and training it with model-free reinforcement learning. However, these outstanding controllers with natural motion style and high task performance are developed through extensive reward engineering, which is a highly laborious and time-consuming process of designing numerous reward terms and determining suitable reward coefficients. In this work, we propose a novel reinforcement learning framework, consisting of both rewards and constraints, for training neural network controllers for complex robotic systems. To let engineers appropriately express their intent through constraints and handle them with minimal computation overhead, two constraint types and an efficient policy optimization algorithm are suggested. The learning framework is applied to train locomotion controllers for several legged robots with different morphologies and physical attributes to traverse challenging terrains. Extensive simulation and real-world experiments demonstrate that performant controllers can be trained with significantly less reward engineering, by tuning only a single reward coefficient. Furthermore, a more straightforward and intuitive engineering process can be utilized, thanks to the interpretability and generalizability of constraints. The summary video is available at https://youtu.be/KAlm3yskhvM.
