Stefan Rudolph

Segmentation-guided Domain Adaptation for Efficient Depth Completion

Oct 14, 2022

Abstract: Complete depth information and efficient estimators have become vital ingredients in scene understanding for automated driving tasks. A major problem for LiDAR-based depth completion is the inefficient utilization of convolutions due to the lack of coherent information caused by the sparse nature of uncorrelated LiDAR point clouds, which often leads to complex and resource-demanding networks. The problem is reinforced by the expensive acquisition of depth data for supervised training. In this work, we propose an efficient depth completion model based on a vgg05-like CNN architecture and a semi-supervised domain adaptation approach to transfer knowledge from synthetic to real-world data to improve data efficiency and reduce the need for a large database. In order to boost spatial coherence, we guide the learning process using segmentations as an additional source of information. The efficiency and accuracy of our approach are evaluated on the KITTI dataset. Our approach improves on previous efficient and low-parameter state-of-the-art approaches while having a noticeably lower computational footprint.

Knowledge Augmented Machine Learning with Applications in Autonomous Driving: A Survey

May 10, 2022

Abstract: The existence of representative datasets is a prerequisite for many successful artificial intelligence and machine learning models. However, the subsequent application of these models often involves scenarios that are inadequately represented in the data used for training. The reasons for this are manifold and range from time and cost constraints to ethical considerations. As a consequence, the reliable use of these models, especially in safety-critical applications, is a huge challenge. Leveraging additional, already existing sources of knowledge is key to overcoming the limitations of purely data-driven approaches, and eventually to increasing the generalization capability of these models. Furthermore, predictions that conform with knowledge are crucial for making trustworthy and safe decisions even in underrepresented scenarios. This work provides an overview of existing techniques and methods in the literature that combine data-based models with existing knowledge. The identified approaches are structured according to the categories integration, extraction and conformity. Special attention is given to applications in the field of autonomous driving.

On the Detection of Mutual Influences and Their Consideration in Reinforcement Learning Processes

May 10, 2019

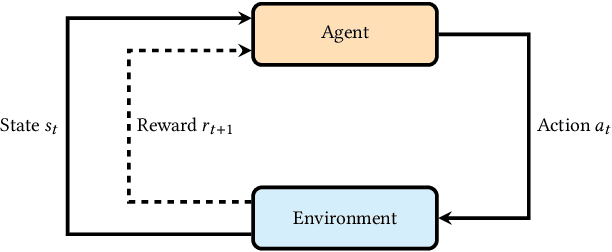

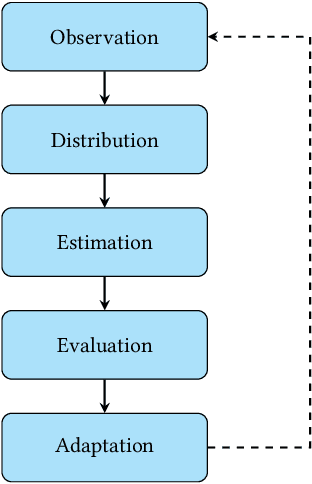

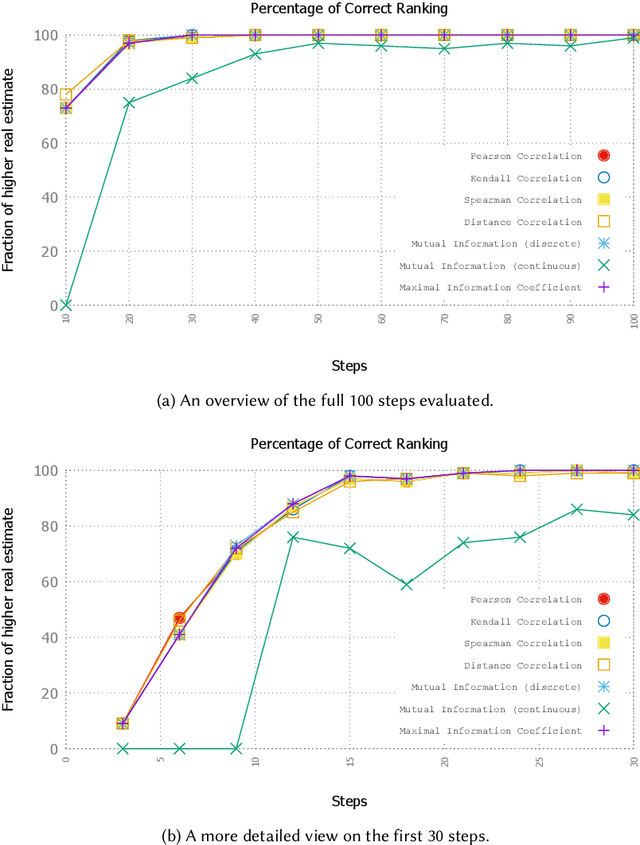

Abstract: Self-adaptation has been proposed as a mechanism to counter complexity in control problems of technical systems. A major driver behind self-adaptation is the idea to transfer traditional design-time decisions to runtime and into the responsibility of systems themselves. In order to deal with unforeseen events and conditions, systems need creativity -- typically realized by means of machine learning capabilities. Such learning mechanisms are based on different sources of knowledge. Feedback from the environment used for reinforcement purposes is probably the most prominent one within the self-adapting and self-organizing (SASO) systems community. However, the impact of other (sub-)systems on the success of the individual system's learning performance has mostly been neglected in this context. In this article, we propose a novel methodology to identify effects of actions performed by other systems in a shared environment on the utility achievement of an autonomous system. Consider smart cameras (SCs) as an illustrative example: for goals such as 3D reconstruction of objects, the most promising configuration of one SC in terms of pan/tilt/zoom parameters depends largely on the configuration of other SCs in the vicinity. Since such mutual influences cannot be pre-defined for dynamic systems, they have to be learned at runtime. Furthermore, they have to be taken into consideration when a system self-improves its own configuration decisions based on a feedback loop concept, e.g., as known from the SASO domain or the Autonomic and Organic Computing initiatives. We define a methodology to detect such influences at runtime, present an approach to consider this information in a reinforcement learning technique, and analyze the behavior in artificial as well as real-world SASO system settings.
