On the Detection of Mutual Influences and Their Consideration in Reinforcement Learning Processes

Paper and Code

May 10, 2019

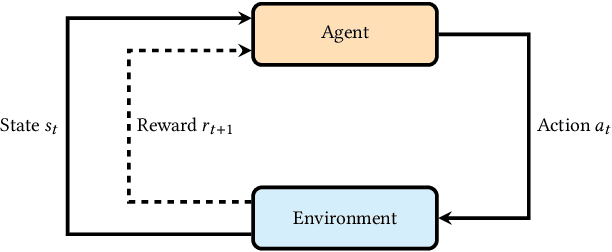

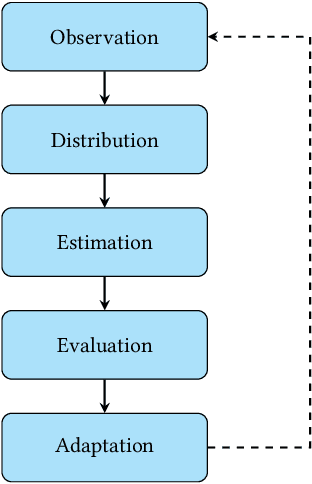

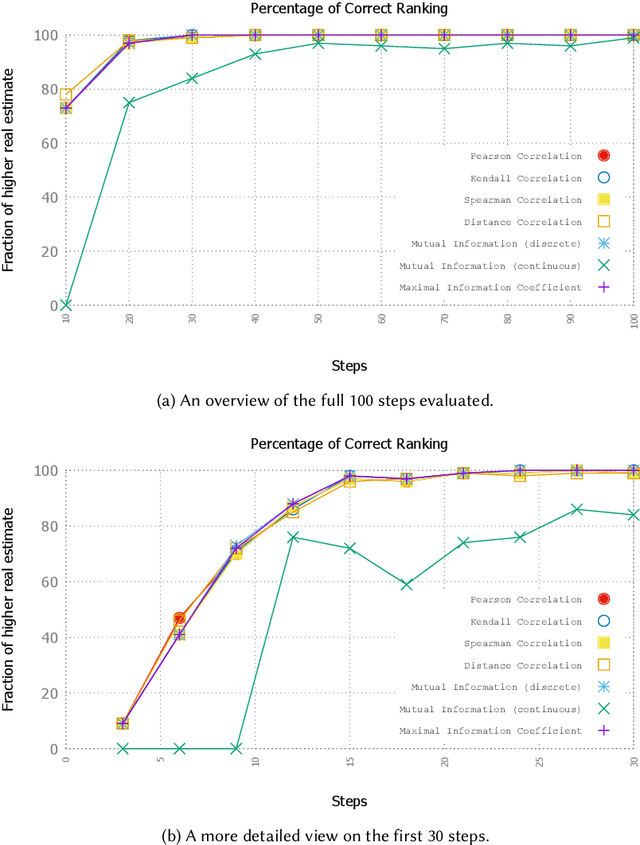

Self-adaptation has been proposed as a mechanism to counter complexity in control problems of technical systems. A major driver behind self-adaptation is the idea to transfer traditional design-time decisions to runtime and into the responsibility of systems themselves. In order to deal with unforeseen events and conditions, systems need creativity -- typically realized by means of machine learning capabilities. Such learning mechanisms are based on different sources of knowledge. Feedback from the environment used for reinforcement purposes is probably the most prominent one within the self-adapting and self-organizing (SASO) systems community. However, the impact of other (sub-)systems on the success of the individual system's learning performance has mostly been neglected in this context. In this article, we propose a novel methodology to identify effects of actions performed by other systems in a shared environment on the utility achievement of an autonomous system. Consider smart cameras (SC) as illustrating example: For goals such as 3D reconstruction of objects, the most promising configuration of one SC in terms of pan/tilt/zoom parameters depends largely on the configuration of other SCs in the vicinity. Since such mutual influences cannot be pre-defined for dynamic systems, they have to be learned at runtime. Furthermore, they have to be taken into consideration when self-improving the own configuration decisions based on a feedback loop concept, e.g., known from the SASO domain or the Autonomic and Organic Computing initiatives. We define a methodology to detect such influences at runtime, present an approach to consider this information in a reinforcement learning technique, and analyze the behavior in artificial as well as real-world SASO system settings.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge