Songyuan Liu

A Hybrid Strategy for Aggregated Probabilistic Forecasting and Energy Trading in HEFTCom2024

May 15, 2025

Abstract: Obtaining accurate probabilistic energy forecasts and making effective decisions amid diverse uncertainties are routine challenges in future energy systems. This paper presents the solution of team GEB, which ranked 3rd in trading, 4th in forecasting, and 1st among student teams in the IEEE Hybrid Energy Forecasting and Trading Competition 2024 (HEFTCom2024). The solution provides accurate probabilistic forecasts for a wind-solar hybrid system, and achieves substantial trading revenue in the day-ahead electricity market. Key components include: (1) a stacking-based approach combining sister forecasts from various Numerical Weather Predictions (NWPs) to provide wind power forecasts, (2) an online solar post-processing model to address the distribution shift in the online test set caused by increased solar capacity, (3) a probabilistic aggregation method for accurate quantile forecasts of hybrid generation, and (4) a stochastic trading strategy to maximize expected trading revenue considering uncertainties in electricity prices. This paper also explores the potential of end-to-end learning to further enhance the trading revenue by adjusting the distribution of forecast errors. Detailed case studies are provided to validate the effectiveness of these proposed methods. Code for all mentioned methods is available for reproduction and further research in both industry and academia.
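Quantile forecasts like those mentioned in the abstract above are commonly scored with the pinball (quantile) loss. The following is a minimal sketch of that metric, not code from the paper; the function name `pinball_loss` and the sample values are hypothetical and chosen only for illustration.

```python
def pinball_loss(y_true, y_pred, q):
    """Average pinball (quantile) loss for a single quantile level q in (0, 1).

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for y, f in zip(y_true, y_pred):
        diff = y - f
        # Under-forecasts (diff >= 0) are weighted by q,
        # over-forecasts (diff < 0) by (1 - q).
        total += q * diff if diff >= 0 else (q - 1.0) * diff
    return total / len(y_true)


# Made-up observations and 90% quantile forecasts.
observed = [10.0, 10.0]
forecast_q90 = [8.0, 12.0]
print(pinball_loss(observed, forecast_q90, 0.9))
```

For a high quantile level such as 0.9, an under-forecast is penalized more heavily than an over-forecast of the same size, which is what pushes the forecast toward the upper part of the distribution.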

Early Risk Prediction of Pediatric Cardiac Arrest from Electronic Health Records via Multimodal Fused Transformer

Feb 11, 2025

Abstract: Early prediction of pediatric cardiac arrest (CA) is critical for timely intervention in high-risk intensive care settings. We introduce PedCA-FT, a novel transformer-based framework that fuses the tabular view of EHR data with a derived textual view to fully exploit the interactions among high-dimensional risk factors and their dynamics. By employing dedicated transformer modules for each modality view, PedCA-FT captures complex temporal and contextual patterns to produce robust CA risk estimates. Evaluated on a curated pediatric cohort from the CHOA-CICU database, our approach outperforms ten other artificial intelligence models across five key performance metrics and identifies clinically meaningful risk factors. These findings underscore the potential of multimodal fusion techniques to enhance early CA detection and improve patient care.

Systematic Analysis of LLM Contributions to Planning: Solver, Verifier, Heuristic

Dec 12, 2024

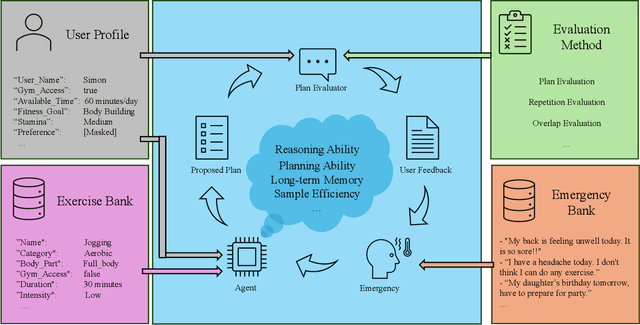

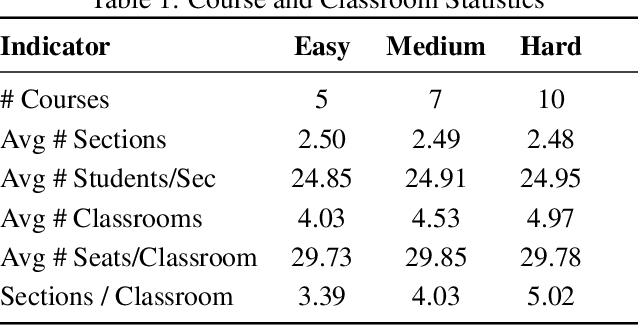

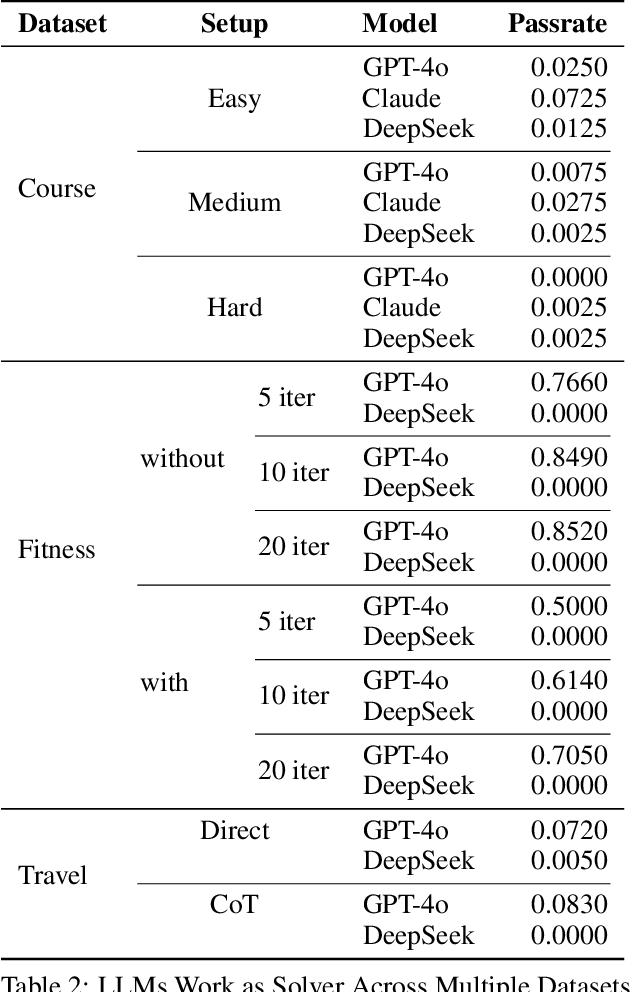

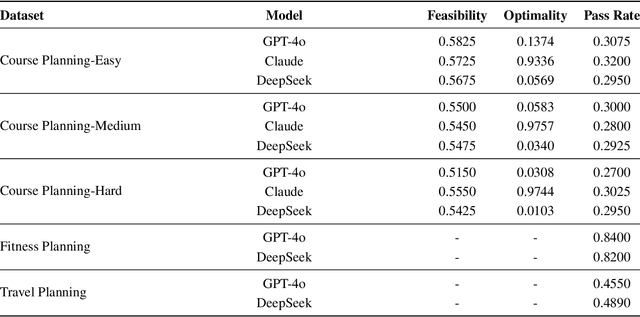

Abstract: In this work, we provide a systematic analysis of how large language models (LLMs) contribute to solving planning problems. In particular, we examine how LLMs perform when used as a problem solver, a solution verifier, and a source of heuristic guidance for improving intermediate solutions. Our analysis reveals that although it is difficult for LLMs to generate correct plans out-of-the-box, LLMs are much better at providing feedback signals on intermediate or incomplete solutions in the form of comparative heuristic functions. This evaluation framework provides insights into how future work may design better LLM-based tree-search algorithms to solve diverse planning and reasoning problems. We also propose a novel benchmark to evaluate LLMs' ability to learn user preferences on the fly, which has wide applications in practical settings.

Measuring Spiritual Values and Bias of Large Language Models

Oct 15, 2024

Abstract: Large language models (LLMs) have become an integral tool for users from various backgrounds. LLMs, trained on vast corpora, reflect the linguistic and cultural nuances embedded in their pre-training data. However, the values and perspectives inherent in this data can influence the behavior of LLMs, leading to potential biases. As a result, the use of LLMs in contexts involving spiritual or moral values necessitates careful consideration of these underlying biases. Our work starts by verifying our hypothesis through tests of the spiritual values of popular LLMs. Experimental results show that LLMs' spiritual values are quite diverse, contrary to the stereotype that they are atheist or secularist. We then investigate how different spiritual values affect LLMs in social-fairness scenarios (e.g., hate speech identification). Our findings reveal that different spiritual values indeed lead to different sensitivity to different hate target groups. Furthermore, we propose to continue pre-training LLMs on spiritual texts, and empirical results demonstrate the effectiveness of this approach in mitigating spiritual bias.

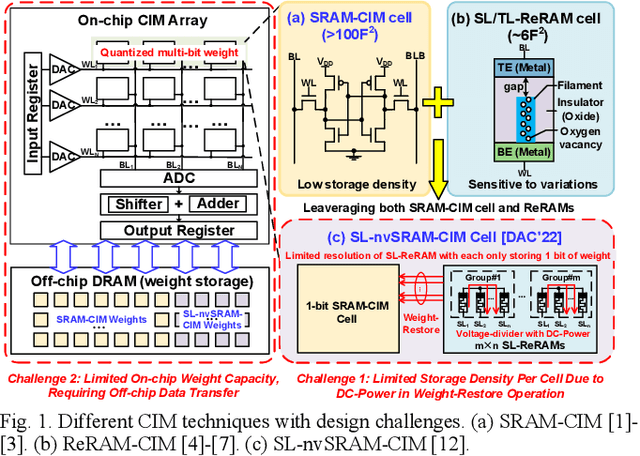

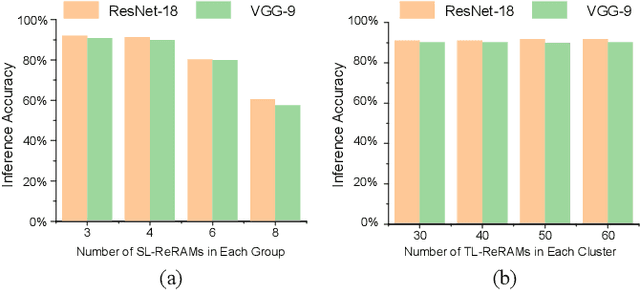

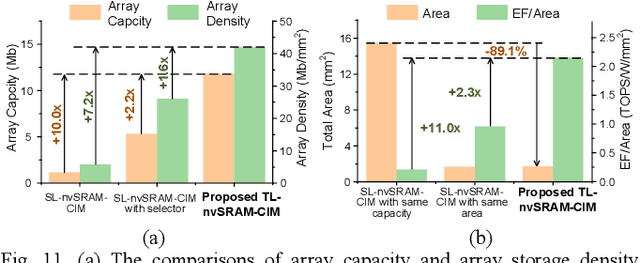

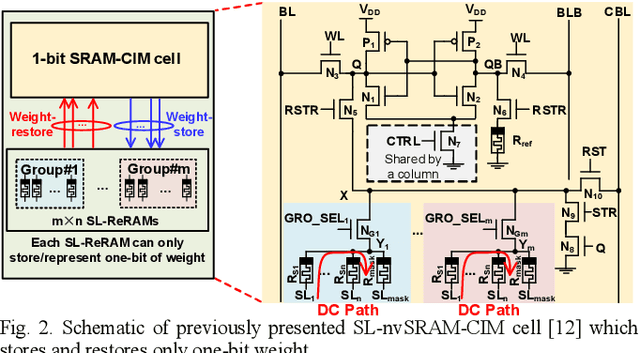

TL-nvSRAM-CIM: Ultra-High-Density Three-Level ReRAM-Assisted Computing-in-nvSRAM with DC-Power Free Restore and Ternary MAC Operations

Jul 06, 2023

Abstract: Accommodating all the weights on-chip for large-scale NNs remains a great challenge for SRAM-based computing-in-memory (SRAM-CIM) with limited on-chip capacity. Previous non-volatile SRAM-CIM (nvSRAM-CIM) addresses this issue by integrating high-density single-level ReRAMs on top of high-efficiency SRAM-CIM for weight storage to eliminate off-chip memory access. However, previous SL-nvSRAM-CIM suffers from poor scalability as the number of SL-ReRAMs increases and from limited computing efficiency. To overcome these challenges, this work proposes an ultra-high-density three-level ReRAM-assisted computing-in-nonvolatile-SRAM (TL-nvSRAM-CIM) scheme for large NN models. The clustered n-selector-n-ReRAM (cluster-nSnRs) is employed for reliable weight restore without DC power. Furthermore, a ternary SRAM-CIM mechanism with a differential computing scheme is proposed for energy-efficient ternary MAC operations while preserving high NN accuracy. The proposed TL-nvSRAM-CIM achieves 7.8x higher storage density than state-of-the-art works. Moreover, TL-nvSRAM-CIM shows up to 2.9x and 1.9x higher energy efficiency than the baseline SRAM-CIM and ReRAM-CIM designs, respectively.
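As a purely software-level illustration of a ternary MAC with a differential scheme, the sketch below accumulates the inputs paired with +1 weights and those paired with -1 weights separately, then takes the difference. It is an arithmetic analogy under assumed conventions (weights in {-1, 0, +1}, a hypothetical function name `ternary_mac`), not a model of the paper's circuit.

```python
def ternary_mac(inputs, weights):
    """Multiply-accumulate with ternary weights in {-1, 0, +1}.

    Hypothetical illustration: the positive and negative contributions are
    summed on separate accumulators and subtracted at the end, mirroring
    the idea of a differential readout in software only.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(w not in (-1, 0, 1) for w in weights):
        raise ValueError("weights must be ternary: -1, 0, or +1")

    positive = sum(x for x, w in zip(inputs, weights) if w == 1)
    negative = sum(x for x, w in zip(inputs, weights) if w == -1)
    # Zero weights contribute to neither accumulator.
    return positive - negative


# Made-up activations and ternary weights.
print(ternary_mac([3, 5, 2, 7], [1, -1, 0, 1]))
```

Because every weight is -1, 0, or +1, no multiplications are needed: the operation reduces to two sums and one subtraction.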
