Sohan Dsouza

Blaming humans in autonomous vehicle accidents: Shared responsibility across levels of automation

Mar 21, 2018

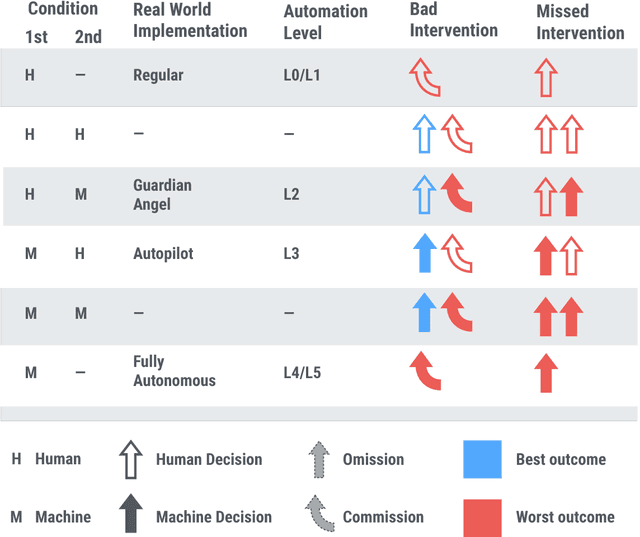

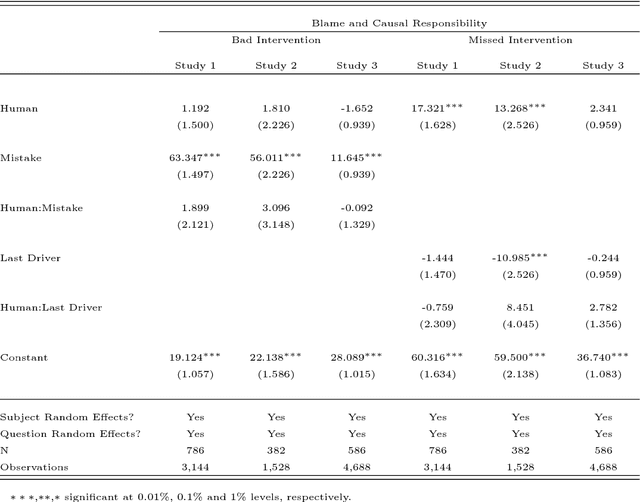

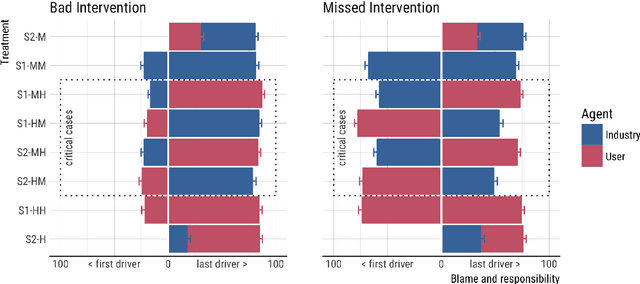

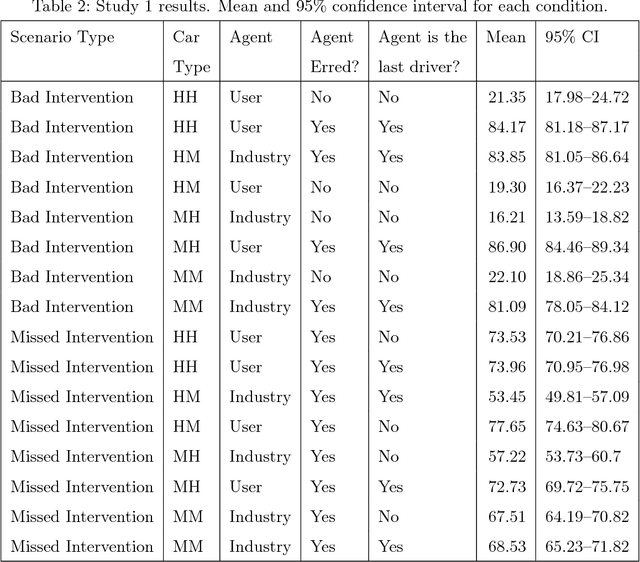

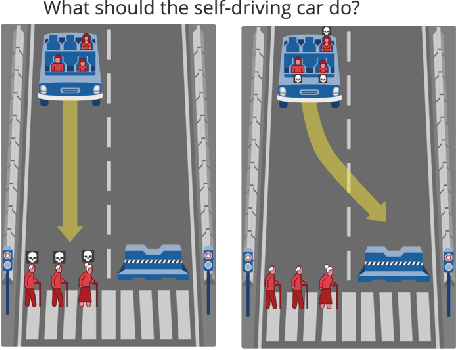

Abstract: When a semi-autonomous car crashes and harms someone, how are blame and causal responsibility distributed across the human and machine drivers? In this article, we consider cases in which a pedestrian was hit and killed by a car being operated under shared control of a primary and a secondary driver. We find that when only one driver makes an error, that driver receives the blame and is considered causally responsible for the harm, regardless of whether that driver is a machine or a human. However, when both drivers make errors in cases of shared control between a human and a machine, the blame and responsibility attributed to the machine is reduced. This finding portends a public under-reaction to the malfunctioning AI components of semi-autonomous cars and therefore has a direct policy implication: a bottom-up regulatory scheme (which operates through tort law that is adjudicated through the jury system) could fail to properly regulate the safety of shared-control vehicles; instead, a top-down scheme (enacted through federal laws) may be called for.

A Computational Model of Commonsense Moral Decision Making

Jan 12, 2018

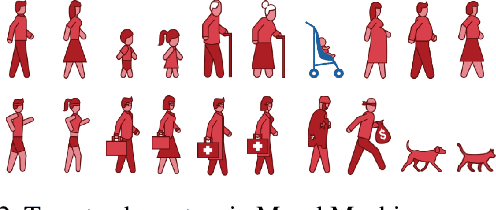

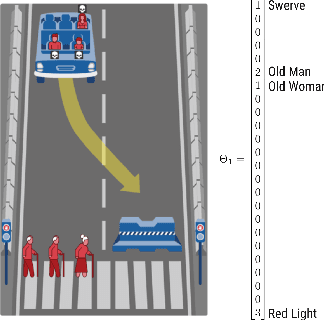

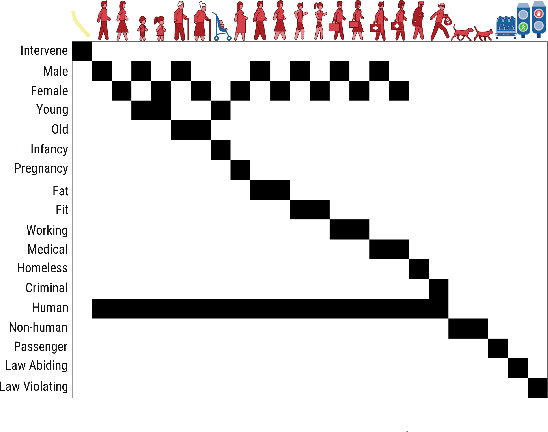

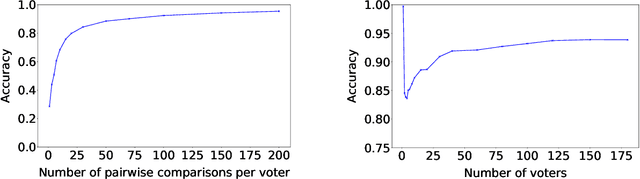

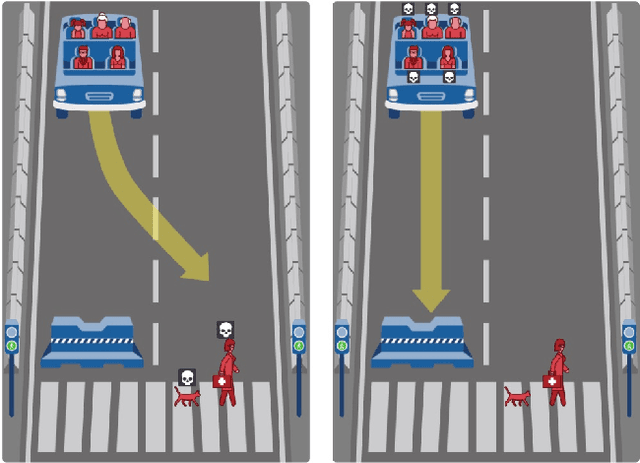

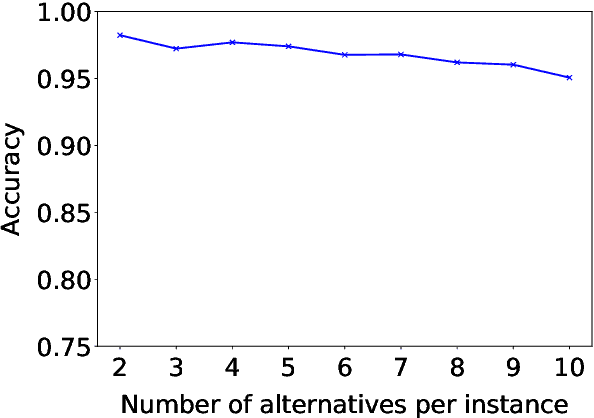

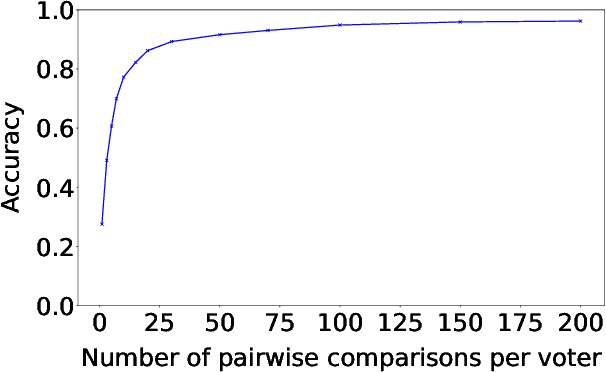

Abstract: We introduce a new computational model of moral decision making, drawing on a recent theory of commonsense moral learning via social dynamics. Our model describes moral dilemmas as a utility function that computes trade-offs in values over abstract moral dimensions, which provide interpretable parameter values when implemented in machine-led ethical decision-making. Moreover, characterizing the social structures of individuals and groups as a hierarchical Bayesian model, we show that a useful description of an individual's moral values - as well as a group's shared values - can be inferred from a limited amount of observed data. Finally, we apply and evaluate our approach to data from the Moral Machine, a web application that collects human judgments on moral dilemmas involving autonomous vehicles.
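
To make the idea of a utility function over abstract moral dimensions concrete, here is a minimal sketch. It assumes a simple linear form (a weighted sum), which the abstract does not specify; the dimension names, weight values, and the `utility` and `choose` helpers are all hypothetical and chosen only for illustration. It also leaves out the hierarchical Bayesian inference of the weights entirely.

```python
from typing import Dict

# Hypothetical weights over abstract moral dimensions (illustrative values only,
# not taken from the paper).
WEIGHTS: Dict[str, float] = {"young": 1.5, "adult": 1.0, "elderly": 0.8}


def utility(outcome: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted sum of how many characters an outcome saves along each dimension."""
    return sum(weights.get(dim, 0.0) * count for dim, count in outcome.items())


def choose(option_a: Dict[str, int], option_b: Dict[str, int],
           weights: Dict[str, float]) -> str:
    """Return 'a' or 'b', whichever option has the higher utility (ties go to 'a')."""
    return "a" if utility(option_a, weights) >= utility(option_b, weights) else "b"


# Each option lists who is saved if that action is taken.
stay = {"adult": 2}
swerve = {"young": 1, "elderly": 1}
decision = choose(stay, swerve, WEIGHTS)
```

Because each weight attaches to a named dimension, the trade-off behind a decision can be read directly from the parameters, which is one way to interpret the abstract's claim about interpretable parameter values.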

A Voting-Based System for Ethical Decision Making

Sep 20, 2017

Abstract: We present a general approach to automating ethical decisions, drawing on machine learning and computational social choice. In a nutshell, we propose to learn a model of societal preferences, and, when faced with a specific ethical dilemma at runtime, efficiently aggregate those preferences to identify a desirable choice. We provide a concrete algorithm that instantiates our approach; some of its crucial steps are informed by a new theory of swap-dominance efficient voting rules. Finally, we implement and evaluate a system for ethical decision making in the autonomous vehicle domain, using preference data collected from 1.3 million people through the Moral Machine website.
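
The aggregation step described above can be illustrated with a small sketch. It uses the Borda count only because it is a familiar positional voting rule; the abstract does not say which rule the system uses, and the sketch skips the preference-learning stage altogether. The `borda_winner` helper, the alternative names, and the sample rankings are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def borda_winner(rankings: List[List[str]]) -> str:
    """Aggregate full rankings with the Borda count and return the top alternative.

    Each ranking lists alternatives from most to least preferred. With m
    alternatives, the one in position i (0-based) earns m - 1 - i points.
    """
    scores: Dict[str, int] = defaultdict(int)
    for ranking in rankings:
        m = len(ranking)
        for position, alternative in enumerate(ranking):
            scores[alternative] += m - 1 - position
    # Highest score wins; ties are broken alphabetically so the result is deterministic.
    return min(scores, key=lambda alt: (-scores[alt], alt))


# Three hypothetical voters ranking two actions in one dilemma.
voters = [
    ["swerve", "stay"],
    ["stay", "swerve"],
    ["swerve", "stay"],
]
winner = borda_winner(voters)
```

In a system of the kind the abstract describes, the rankings would presumably come from the learned preference models rather than from live votes, so the aggregation can run at decision time without polling anyone.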