Sira Ferradans

NYU

Deep Portrait Quality Assessment. A NTIRE 2024 Challenge Survey

Apr 17, 2024

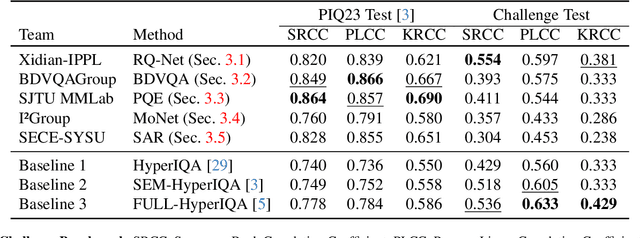

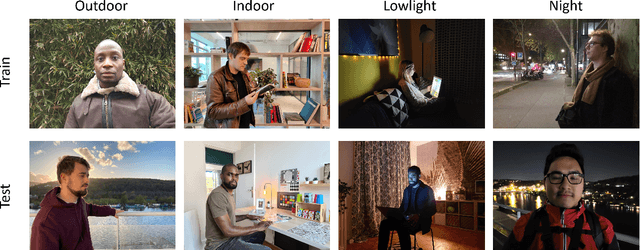

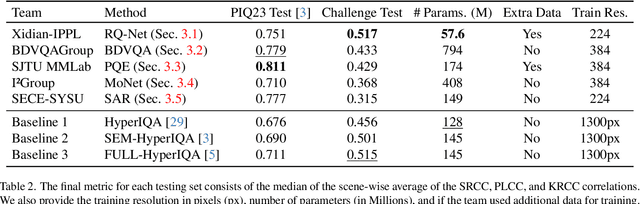

Abstract: This paper reviews the NTIRE 2024 Portrait Quality Assessment Challenge, highlighting the proposed solutions and results. This challenge aims to obtain an efficient deep neural network capable of estimating the perceptual quality of real portrait photos. The methods must generalize to diverse scenes and lighting conditions (indoor, outdoor, low-light), movement, blur, and other challenging conditions. A total of 140 participants registered, and 35 submitted results during the challenge period. The performance of the top 5 submissions is reviewed and provided here as a gauge for the current state of the art in Portrait Quality Assessment.

PICNIQ: Pairwise Comparisons for Natural Image Quality Assessment

Mar 13, 2024

Abstract: Blind image quality assessment (BIQA) approaches, while promising for automating image quality evaluation, often fall short in real-world scenarios due to their reliance on a generic quality standard applied uniformly across diverse images. This one-size-fits-all approach overlooks the crucial perceptual relationship between image content and quality, leading to a 'domain shift' challenge where a single quality metric inadequately represents various content types. Furthermore, BIQA techniques typically overlook the inherent differences in the human visual system among different observers. In response to these challenges, this paper introduces PICNIQ, an innovative pairwise comparison framework designed to bypass the limitations of conventional BIQA by emphasizing relative, rather than absolute, quality assessment. PICNIQ is specifically designed to assess the quality differences between image pairs. The proposed framework implements a carefully crafted deep learning architecture, a specialized loss function, and a training strategy optimized for sparse comparison settings. By employing psychometric scaling algorithms like TrueSkill, PICNIQ transforms pairwise comparisons into just-objectionable-difference (JOD) quality scores, offering a granular and interpretable measure of image quality. We conduct our research using comparison matrices from the PIQ23 dataset, which are published in this paper. Our extensive experimental analysis showcases PICNIQ's broad applicability and superior performance over existing models, highlighting its potential to set new standards in the field of BIQA.
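
As a rough illustration of how a pairwise comparison matrix can be turned into per-image scores, the sketch below fits a Bradley-Terry model with simple fixed-point updates. This is a stand-in technique, not TrueSkill and not the PICNIQ pipeline; the function name `bradley_terry_scores`, the `wins` layout, and the toy counts are all assumptions made for the example, and the returned log-strengths are not JOD units.

```python
import math


def bradley_terry_scores(wins, iters=200, tol=1e-10):
    """Fit Bradley-Terry strengths from a pairwise comparison matrix.

    wins[i][j] is the (hypothetical) number of times image i was
    preferred over image j. Returns one log-strength per image.
    """
    n = len(wins)
    p = [1.0] * n  # initial strengths
    for _ in range(iters):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i][j] for j in range(n) if j != i)
            denom = sum(
                (wins[i][j] + wins[j][i]) / (p[i] + p[j])
                for j in range(n)
                if j != i
            )
            # Keep the old value if image i was never compared.
            new_p.append(total_wins / denom if denom > 0 else p[i])
        # Strengths are only defined up to scale, so fix their sum to n.
        scale = n / sum(new_p)
        new_p = [x * scale for x in new_p]
        delta = max(abs(a - b) for a, b in zip(new_p, p))
        p = new_p
        if delta < tol:
            break
    # Clamp to avoid log(0) for an image that never won a comparison.
    return [math.log(max(x, 1e-12)) for x in p]


# Toy example: three images, each pair compared 10 times.
wins = [
    [0, 8, 9],
    [2, 0, 7],
    [1, 3, 0],
]
scores = bradley_terry_scores(wins)
```

With these toy counts the first image receives the highest score and the third the lowest. Sparse comparison settings, where many pairs are never compared, generally need a prior or regularization, which this sketch omits.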

Generalized Portrait Quality Assessment

Feb 14, 2024

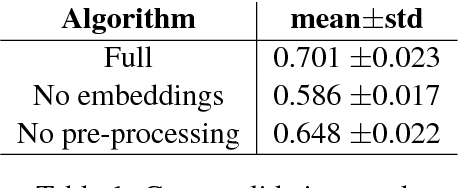

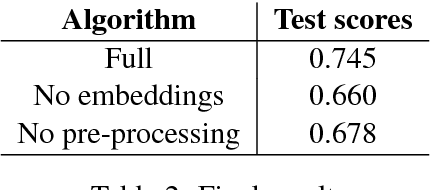

Abstract: Automated and robust portrait quality assessment (PQA) is of paramount importance in high-impact applications such as smartphone photography. This paper presents FHIQA, a learning-based approach to PQA that introduces a simple but effective quality score rescaling method based on image semantics, to enhance the precision of fine-grained image quality metrics while ensuring robust generalization to various scene settings beyond the training dataset. The proposed approach is validated by extensive experiments on the PIQ23 benchmark and comparisons with the current state of the art. The source code of FHIQA will be made publicly available on the PIQ23 GitHub repository at https://github.com/DXOMARK-Research/PIQ2023.

An Image Quality Assessment Dataset for Portraits

Apr 12, 2023

Abstract: Year after year, the demand for ever-better smartphone photos continues to grow, in particular in the domain of portrait photography. Manufacturers thus use perceptual quality criteria throughout the development of smartphone cameras. This costly procedure can be partially replaced by automated learning-based methods for image quality assessment (IQA). Because IQA is subjective by nature, it is necessary to estimate and guarantee the consistency of the assessment process, a characteristic lacking in the mean opinion scores (MOS) widely used for crowdsourcing IQA. In addition, existing blind IQA (BIQA) datasets pay little attention to the difficulty of cross-content assessment, which may degrade the quality of annotations. This paper introduces PIQ23, a portrait-specific IQA dataset of 5116 images of 50 predefined scenarios acquired by 100 smartphones, covering a high variety of brands, models, and use cases. The dataset includes individuals of various genders and ethnicities who have given explicit and informed consent for their photographs to be used in public research. It is annotated by pairwise comparisons (PWC) collected from over 30 image quality experts for three image attributes: face detail preservation, face target exposure, and overall image quality. An in-depth statistical analysis of these annotations allows us to evaluate their consistency over PIQ23. Finally, we show through an extensive comparison with existing baselines that semantic information (image context) can be used to improve IQA predictions. The dataset, along with the proposed statistical analysis and BIQA algorithms, is available at https://github.com/DXOMARK-Research/PIQ2023

Table-Of-Contents generation on contemporary documents

Nov 20, 2019

Abstract: The generation of a precise and detailed Table-Of-Contents (TOC) from a document is a problem of major importance for document understanding and information extraction. Despite its importance, it is still a challenging task, especially for non-standardized documents with rich layout information such as commercial documents. In this paper, we present a new neural-based pipeline for TOC generation applicable to any searchable document. Unlike previous methods, we neither use semantic labeling nor assume the presence of parsable TOC pages in the document. Moreover, we analyze the influence of using external knowledge encoded as a template. We empirically show that this approach is only useful in a very low resource environment. Finally, we propose a new domain-specific data set that sheds some light on the difficulties of TOC generation in real-world documents. The proposed method shows better performance than the state of the art on a public data set and on the newly released data set.

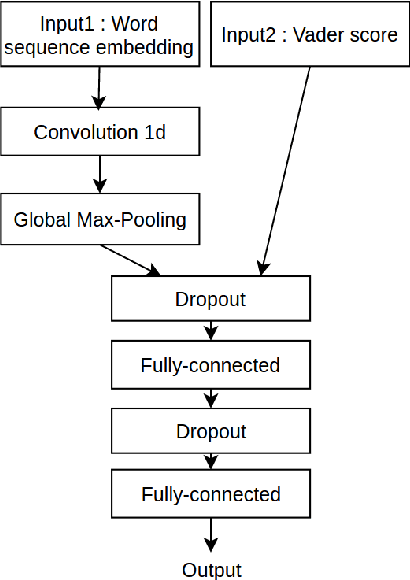

Fortia-FBK at SemEval-2017 Task 5: Bullish or Bearish? Inferring Sentiment towards Brands from Financial News Headlines

Apr 04, 2017

Abstract: In this paper, we describe a methodology to infer Bullish or Bearish sentiment towards companies/brands. More specifically, our approach leverages affective lexica and word embeddings in combination with convolutional neural networks to infer the sentiment of financial news headlines towards a target company. This architecture was used and evaluated in the context of the SemEval 2017 challenge (task 5, subtask 2), in which it obtained the best performance.

Regularized Discrete Optimal Transport

Jul 21, 2013

Abstract: This article introduces a generalization of discrete optimal transport, with applications to color image manipulations. This new formulation includes a relaxation of the mass conservation constraint and a regularization term. These two features are crucial for image processing tasks, which require taking into account families of multimodal histograms, with large mass variation across modes. The corresponding relaxed and regularized transportation problem is the solution of a convex optimization problem. Depending on the regularization used, this minimization can be solved using standard linear programming methods or first-order proximal splitting schemes. The resulting transportation plan can be used as a color transfer map, which is robust to mass variation across the images' color palettes. Furthermore, the regularization of the transport plan helps to remove colorization artifacts due to noise amplification. We also extend this framework to the computation of barycenters of distributions. The barycenter is the solution of an optimization problem, which is separately convex with respect to the barycenter and the transportation plans, but not jointly convex. A block coordinate descent scheme converges to a stationary point of the energy. We show that the resulting algorithm can be used for color normalization across several images. The relaxed and regularized barycenter defines a common color palette for those images. Applying color transfer toward this average palette performs a color normalization of the input images.
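
For orientation, the classical discrete optimal transport problem that the abstract generalizes can be written as a linear program. The notation below is an assumption made for this sketch and is not taken from the article: $C_{ij}$ is the cost of moving mass from source point $i$ to target point $j$, $P$ is the transport plan, and $a$, $b$ are the source and target histograms, each summing to 1. The second problem is only one plausible shape for a relaxed and regularized variant, with hypothetical bounds $k \le 1 \le K$, weight $\lambda$, and a convex regularizer $R$; the abstract does not specify the exact constraints or regularizer.

```latex
% Classical discrete optimal transport (mass conservation as equalities)
\min_{P \ge 0} \; \sum_{i,j} C_{ij} P_{ij}
\quad \text{s.t.} \quad
P \mathbf{1} = a, \qquad P^{\top} \mathbf{1} = b

% Illustrative relaxed and regularized variant (assumed form)
\min_{P \ge 0} \; \sum_{i,j} C_{ij} P_{ij} + \lambda R(P)
\quad \text{s.t.} \quad
k\,a \le P \mathbf{1} \le K\,a, \qquad
k\,b \le P^{\top} \mathbf{1} \le K\,b, \qquad
\sum_{i,j} P_{ij} = 1
```

With $k = K = 1$ and $\lambda = 0$ the second problem reduces to the first. Loosening the bounds lets a mode of one histogram send or receive more or less mass than the other histogram assigns to it, which is the kind of mass variation across modes the abstract mentions.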
