Simon Stelter

MARLIN: A Cloud Integrated Robotic Solution to Support Intralogistics in Retail

Jul 02, 2024

Abstract: In this paper, we present the service robot MARLIN and its integration with the K4R platform, a cloud system for complex AI applications in retail. At its core, this platform contains so-called semantic digital twins, a semantically annotated representation of the retail store. MARLIN continuously exchanges data with the K4R platform, improving the robot's capabilities in perception, autonomous navigation, and task planning. We exploit these capabilities in a retail intralogistics scenario, specifically by assisting store employees in stocking shelves. We demonstrate that MARLIN is able to update the digital representation of the retail store by detecting and classifying obstacles, autonomously planning and executing replenishment missions, adapting to unforeseen changes in the environment, and interacting with store employees. Experiments are conducted in simulation, in a laboratory environment, and in a real store. We also describe and evaluate a novel algorithm for autonomous navigation of articulated tractor-trailer systems. The algorithm outperforms the manufacturer's proprietary navigation approach and improves MARLIN's navigation capabilities in confined spaces.

Translating Universal Scene Descriptions into Knowledge Graphs for Robotic Environment

Oct 27, 2023

Abstract: Robots performing human-scale manipulation tasks require an extensive amount of knowledge about their surroundings in order to perform their actions competently and human-like. In this work, we investigate the use of virtual reality technology as an implementation for robot environment modeling, and present a technique for translating scene graphs into knowledge bases. To this end, we take advantage of the Universal Scene Description (USD) format which is an emerging standard for the authoring, visualization and simulation of complex environments. We investigate the conversion of USD-based environment models into Knowledge Graph (KG) representations that facilitate semantic querying and integration with additional knowledge sources.

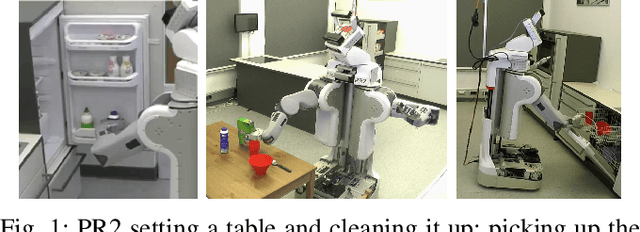

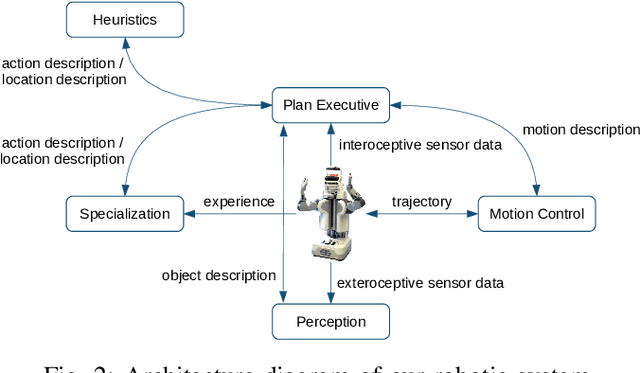

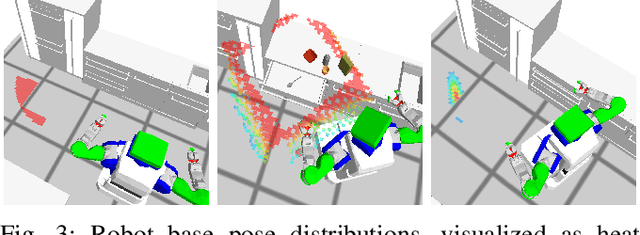

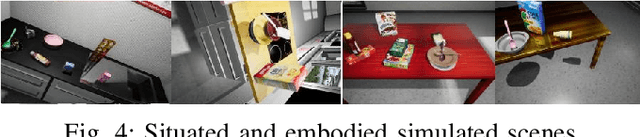

The Robot Household Marathon Experiment

Nov 19, 2020

Abstract: In this paper, we present an experiment, designed to investigate and evaluate the scalability and the robustness aspects of mobile manipulation. The experiment involves performing variations of mobile pick and place actions and opening/closing environment containers in a human household. The robot is expected to act completely autonomously for extended periods of time. We discuss the scientific challenges raised by the experiment as well as present our robotic system that can address these challenges and successfully perform all the tasks of the experiment. We present empirical results and the lessons learned as well as discuss where we hit limitations.

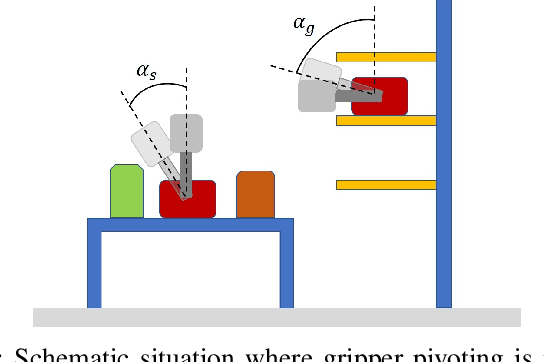

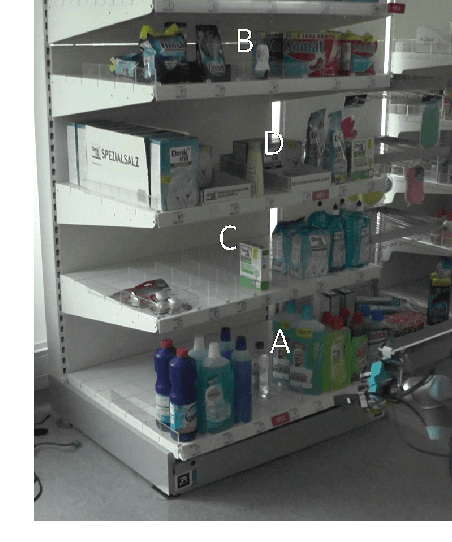

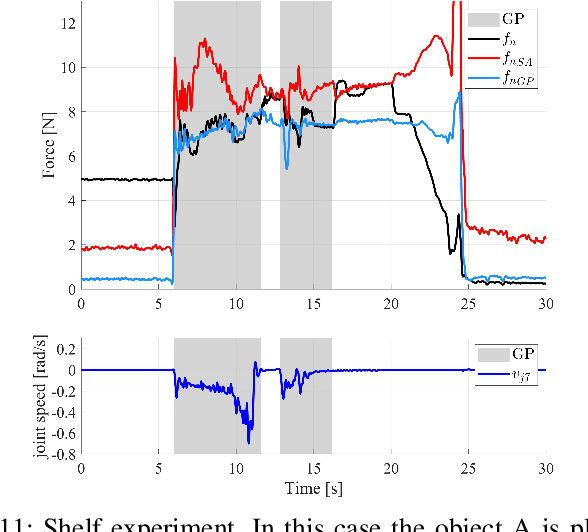

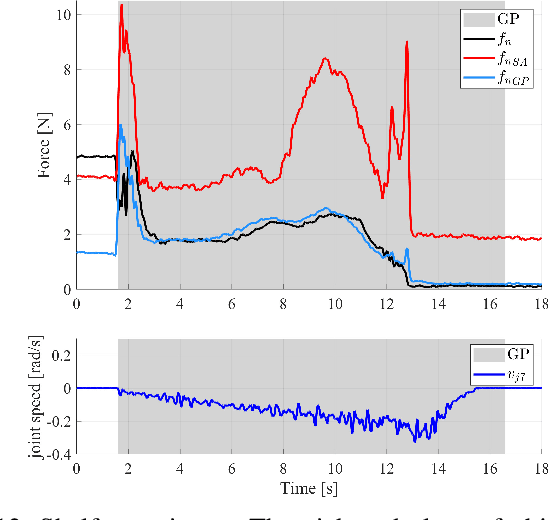

Manipulation Planning and Control for Shelf Replenishment

Dec 23, 2019

Abstract: Manipulation planning and control are relevant building blocks of a robotic system and their tight integration is a key factor to improve robot autonomy and allows robots to perform manipulation tasks of increasing complexity, such as those needed in the in-store logistics domain. Supermarkets contain a large variety of objects to be placed on the shelf layers with specific constraints; doing this with a robot is a challenge and requires a high dexterity. However, an integration of reactive grasping control and motion planning can allow robots to perform such tasks even with grippers with limited dexterity. The main contribution of the paper is a novel method for planning manipulation tasks to be executed using a reactive control layer that provides more control modalities, i.e., slipping avoidance and controlled sliding. Experiments with a new force/tactile sensor equipping the gripper of a mobile manipulator show that the approach allows the robot to successfully perform manipulation tasks unfeasible with a standard fixed grasp.
