Ciro Natale

Active Cross-Modal Visuo-Tactile Perception of Deformable Linear Objects

Jan 20, 2026

Abstract: This paper presents a novel cross-modal visuo-tactile perception framework for the 3D shape reconstruction of deformable linear objects (DLOs), with a specific focus on cables subject to severe visual occlusions. Unlike existing methods relying predominantly on vision, whose performance degrades under varying illumination, background clutter, or partial visibility, the proposed approach integrates foundation-model-based visual perception with adaptive tactile exploration. The visual pipeline exploits SAM for instance segmentation and Florence for semantic refinement, followed by skeletonization, endpoint detection, and point-cloud extraction. Occluded cable segments are autonomously identified and explored with a tactile sensor, which provides local point clouds that are merged with the visual data through Euclidean clustering and topology-preserving fusion. A B-spline interpolation driven by endpoint-guided point sorting yields a smooth and complete reconstruction of the cable shape. Experimental validation using a robotic manipulator equipped with an RGB-D camera and a tactile pad demonstrates that the proposed framework accurately reconstructs both simple and highly curved single or multiple cable configurations, even when large portions are occluded. These results highlight the potential of foundation-model-enhanced cross-modal perception for advancing robotic manipulation of deformable objects.
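The abstract names endpoint-guided point sorting as the step that prepares the merged point cloud for B-spline interpolation, without giving details. The sketch below illustrates one plausible reading of that idea and is not the paper's algorithm: starting from a detected endpoint, it orders unordered 3D points by greedy nearest-neighbour traversal. The function name `sort_from_endpoint`, the `start_index` parameter, and the sample coordinates are all hypothetical.

```python
import math


def sort_from_endpoint(points, start_index=0):
    """Order unordered 3D points along a cable by greedy nearest-neighbour
    traversal, starting from a known endpoint (assumed, not from the paper)."""
    remaining = list(points)
    ordered = [remaining.pop(start_index)]
    while remaining:
        last = ordered[-1]
        # Pick the unvisited point closest to the most recently added one.
        nearest = min(
            range(len(remaining)),
            key=lambda i: math.dist(last, remaining[i]),
        )
        ordered.append(remaining.pop(nearest))
    return ordered


# Hypothetical, shuffled samples along a gently curved cable.
cable = [
    (0.0, 0.0, 0.0),  # assumed endpoint
    (3.0, 0.5, 0.0),
    (1.0, 0.1, 0.0),
    (2.0, 0.3, 0.0),
]
ordered = sort_from_endpoint(cable, start_index=0)
```

The ordered sequence could then be passed to a parametric spline fit (for example SciPy's `splprep`) to obtain a smooth curve. Greedy ordering can fail where a cable crosses itself or another cable, so a real pipeline would likely need additional topology checks.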

Enhanced 6D Pose Estimation for Robotic Fruit Picking

May 25, 2023

Abstract: This paper proposes a novel method to refine the 6D pose estimate inferred by an instance-level deep neural network that processes a single RGB image and has been trained on synthetic images only. The proposed optimization algorithm exploits the depth measurement of a standard RGB-D camera to estimate the dimensions of the considered object, even though the network is trained on a single CAD model of the same object with given dimensions. The improved accuracy in the pose estimation allows a robot to successfully grasp apples of various types and significantly different dimensions; this was not possible using the standard pose estimation algorithm, except for fruits with dimensions very close to those of the CAD model used in the training process. Grasping fresh fruits without damaging them also demands suitable grasp force control. A parallel gripper equipped with special force/tactile sensors is thus adopted to achieve safe grasps with the minimum force necessary to lift the fruits without slippage or deformation, and with no knowledge of their weight.

A General Framework for Hierarchical Redundancy Resolution Under Arbitrary Constraints

Apr 08, 2022

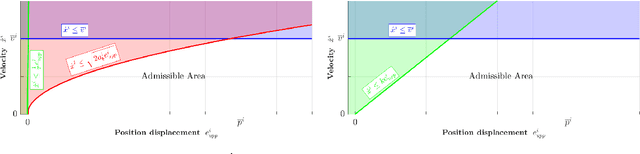

Abstract: The increasing interest in autonomous robots with a high number of degrees of freedom for industrial applications and service robotics demands control algorithms that handle multiple tasks as well as hard constraints efficiently. This paper presents a general framework in which both kinematic (velocity- or acceleration-based) and dynamic (torque-based) control of redundant robots are handled in a unified fashion. The framework allows for the specification of redundancy resolution problems featuring a hierarchy of arbitrary (equality and inequality) constraints, arbitrary weighting of the control effort in the cost function, and an additional input used to optimize any remaining redundancy. To solve such problems, a generalization of the Saturation in the Null Space (SNS) algorithm is introduced, which extends the original method according to the features required by the general control framework. Variants of the developed algorithm are presented, which ensure both efficient computation and optimality of the solution. Experiments on a KUKA LBR iiwa robotic arm, as well as simulations with a highly redundant mobile manipulator, are reported.
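For orientation, the weighting of the control effort and the additional input mentioned in the abstract can be related to the classical single-task, velocity-level redundancy resolution problem. The formulation below is the textbook form, given here only as background and not as the paper's framework; the symbols $J$, $W$, $\dot{x}$, and $\dot{q}_0$ are assumed notation, with $J$ taken to have full row rank and $W$ positive definite.

$$
\min_{\dot{q}} \; \tfrac{1}{2}\,(\dot{q}-\dot{q}_0)^{\top} W\,(\dot{q}-\dot{q}_0)
\quad \text{s.t.} \quad J\,\dot{q} = \dot{x}
$$

$$
\dot{q} = J_W^{\#}\,\dot{x} + \left(I - J_W^{\#} J\right)\dot{q}_0,
\qquad
J_W^{\#} = W^{-1} J^{\top}\left(J\,W^{-1} J^{\top}\right)^{-1}
$$

Here $W$ plays the role of the effort weighting and $\dot{q}_0$ is the extra input projected into the null space of the task. Hierarchies of tasks and inequality constraints, which the paper addresses, go beyond this closed-form expression.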

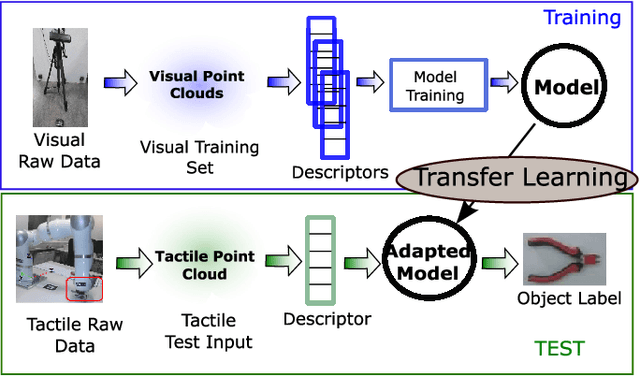

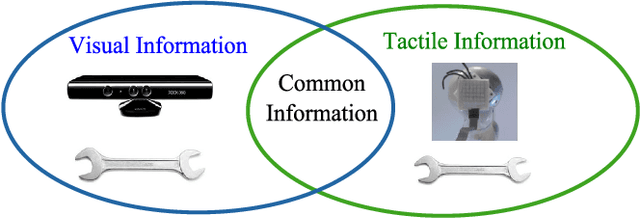

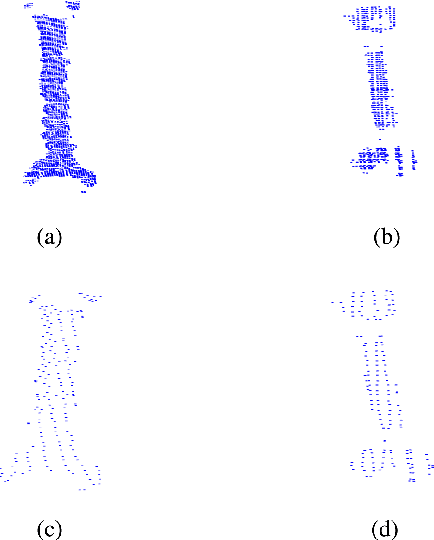

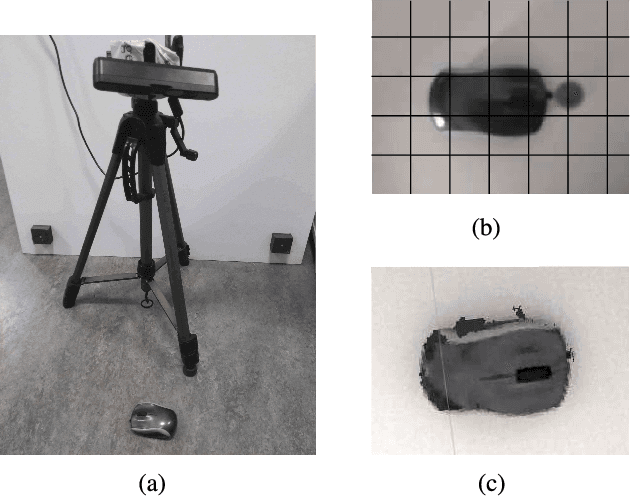

A Transfer Learning Approach to Cross-Modal Object Recognition: From Visual Observation to Robotic Haptic Exploration

Jan 18, 2020

Abstract: In this work, we introduce the problem of cross-modal visuo-tactile object recognition with robotic active exploration. By this term, we mean that the robot observes a set of objects with visual perception and, later on, is able to recognize those objects with tactile exploration only, without having touched any of them before. In machine learning terminology, our application has a visual training set and a tactile test set, or vice versa. To tackle this problem, we propose an approach consisting of four steps: finding a visuo-tactile common representation, defining a suitable set of features, transferring the features across the domains, and classifying the objects. We show the results of our approach using a set of 15 objects, collecting 40 visual examples and five tactile examples for each object. The proposed approach achieves an accuracy of 94.7%, which is comparable with the accuracy of the monomodal case, i.e., when using visual data as both training set and test set. Moreover, it performs well compared to human ability, which we have roughly estimated by carrying out an experiment with ten participants.

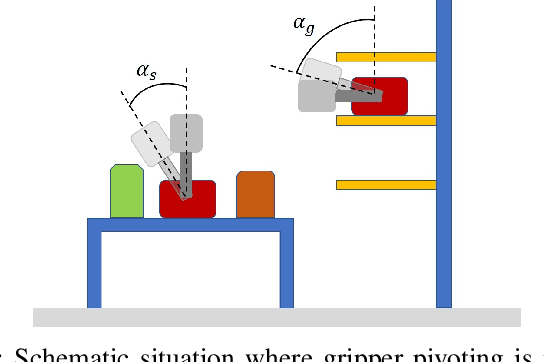

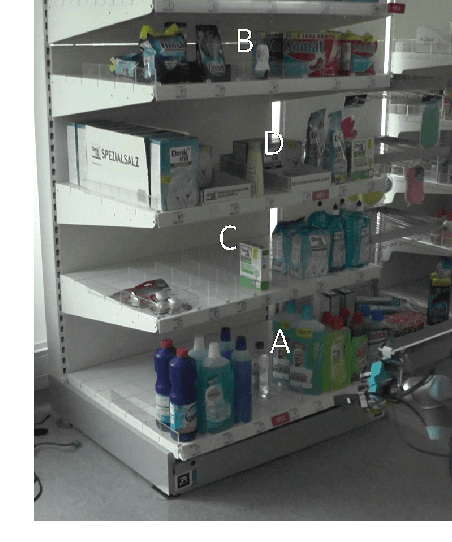

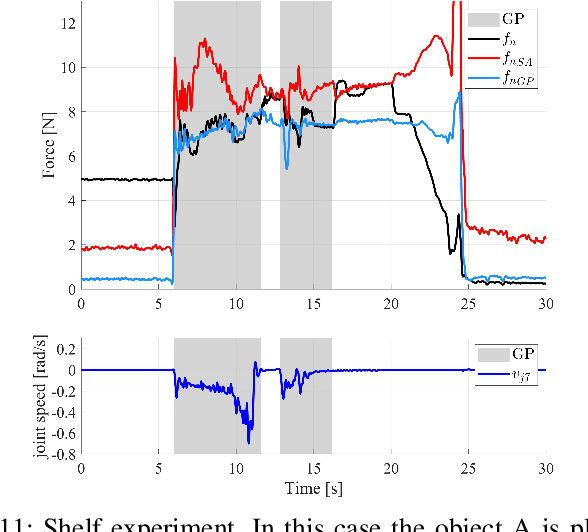

Manipulation Planning and Control for Shelf Replenishment

Dec 23, 2019

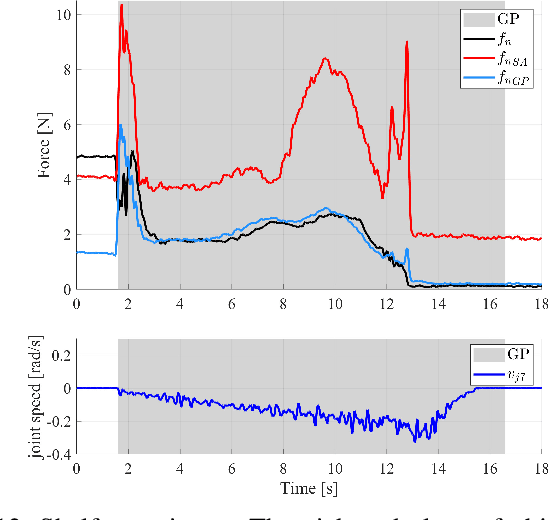

Abstract: Manipulation planning and control are relevant building blocks of a robotic system, and their tight integration is a key factor in improving robot autonomy, allowing robots to perform manipulation tasks of increasing complexity, such as those needed in the in-store logistics domain. Supermarkets contain a large variety of objects to be placed on the shelf layers under specific constraints; doing this with a robot is a challenge and requires high dexterity. However, an integration of reactive grasping control and motion planning can allow robots to perform such tasks even with grippers of limited dexterity. The main contribution of the paper is a novel method for planning manipulation tasks to be executed using a reactive control layer that provides additional control modalities, i.e., slipping avoidance and controlled sliding. Experiments with a new force/tactile sensor equipping the gripper of a mobile manipulator show that the approach allows the robot to successfully perform manipulation tasks that are infeasible with a standard fixed grasp.
