Georg Bartels

Kineverse: A Symbolic Articulation Model Framework for Model-Generic Software for Mobile Manipulation

Dec 09, 2020

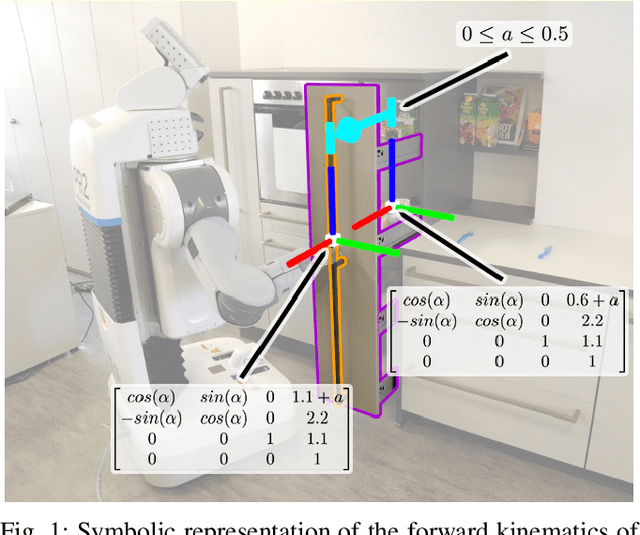

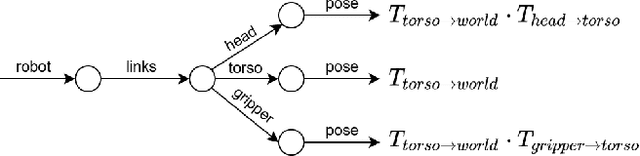

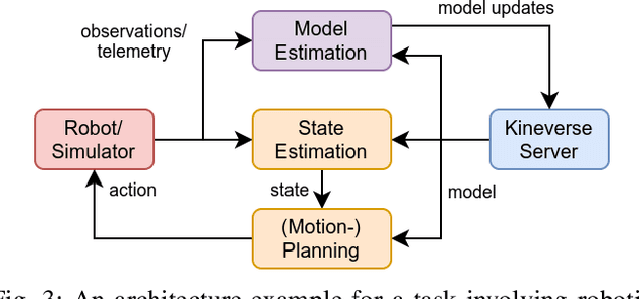

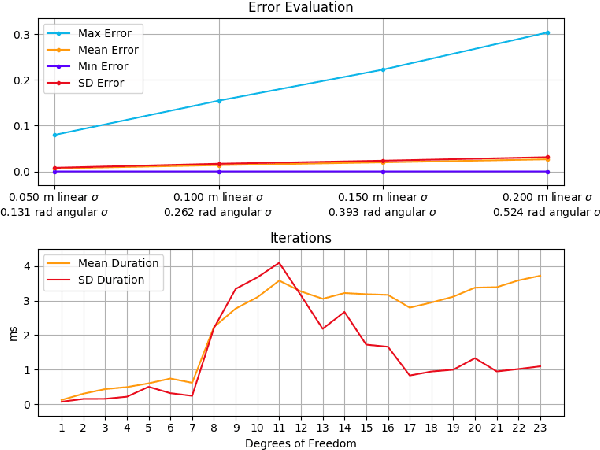

Abstract: Human developers want to program robots using abstract instructions, such as "fetch the milk from the fridge". To translate such instructions into actionable plans, the robot's software requires in-depth background knowledge. With regard to interactions with articulated objects such as doors and drawers, the robot requires a model that it can use for state estimation and motion planning. Existing articulation model frameworks take a descriptive approach to model building, which requires additional background knowledge to construct mathematical models for computation. In this paper, we introduce the articulation model framework Kineverse, which uses symbolic mathematical expressions to model articulated objects. We provide a theoretical description of this framework and the operations that are supported by its models, and suggest a software architecture for integrating our framework into a robotics application. To demonstrate the applicability of our framework to robotics, we employ it in solving two common robotics problems from state estimation and manipulation.

Manipulation Planning and Control for Shelf Replenishment

Dec 23, 2019

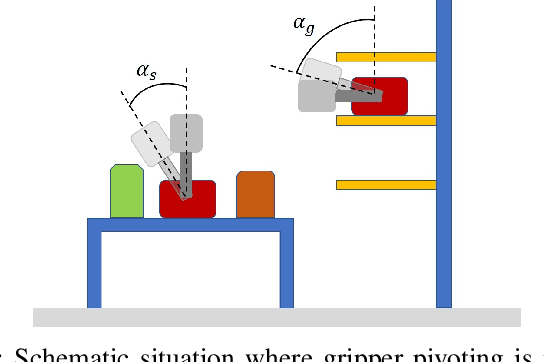

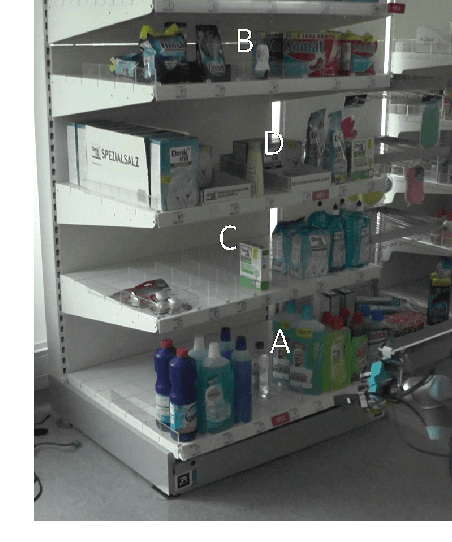

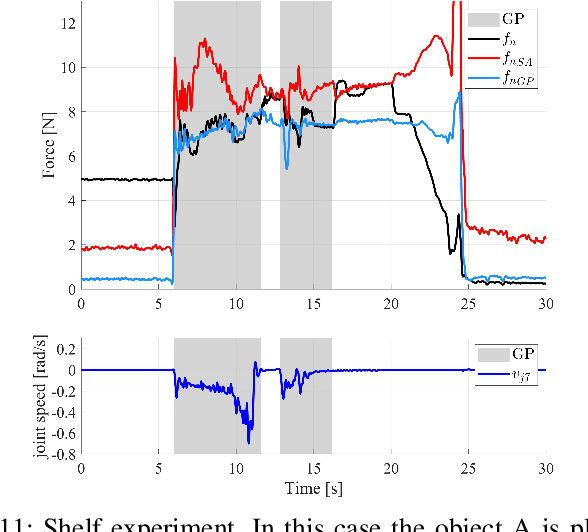

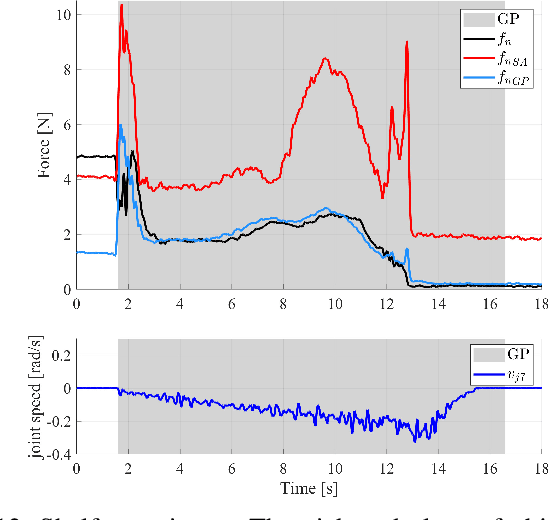

Abstract: Manipulation planning and control are relevant building blocks of a robotic system, and their tight integration is a key factor in improving robot autonomy, allowing robots to perform manipulation tasks of increasing complexity, such as those needed in the in-store logistics domain. Supermarkets contain a large variety of objects to be placed on the shelf layers under specific constraints; doing this with a robot is challenging and requires high dexterity. However, integrating reactive grasping control and motion planning can allow robots to perform such tasks even with grippers of limited dexterity. The main contribution of the paper is a novel method for planning manipulation tasks to be executed using a reactive control layer that provides additional control modalities, i.e., slipping avoidance and controlled sliding. Experiments with a new force/tactile sensor mounted on the gripper of a mobile manipulator show that the approach allows the robot to successfully perform manipulation tasks that are infeasible with a standard fixed grasp.

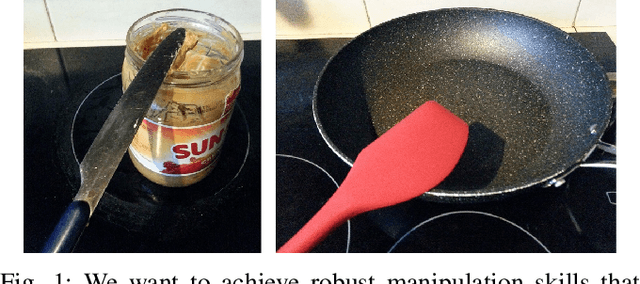

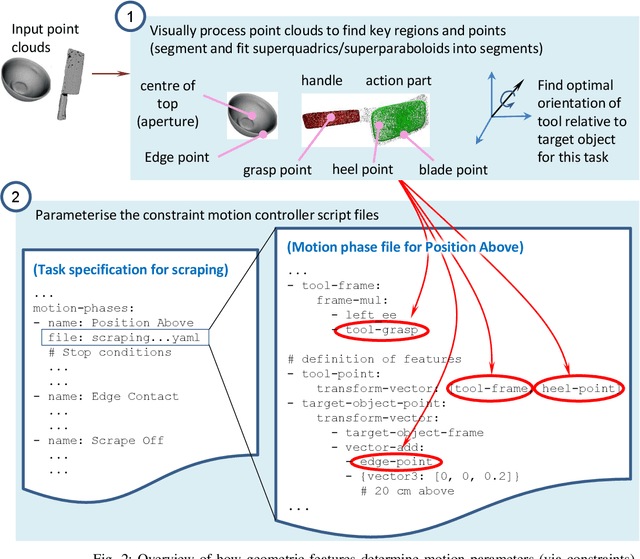

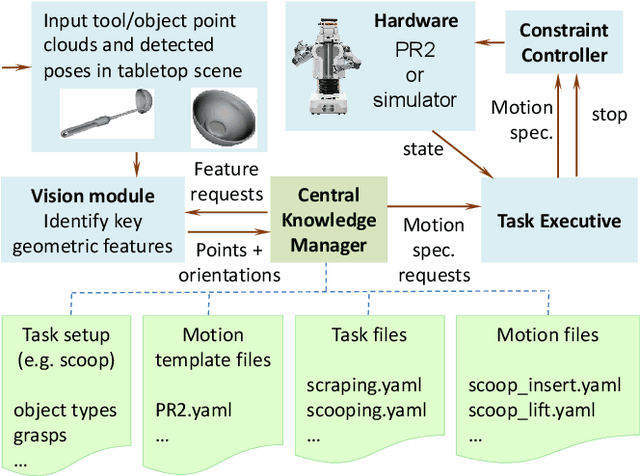

Adapting Everyday Manipulation Skills to Varied Scenarios

Mar 04, 2019

Abstract: We address the problem of executing tool-using manipulation skills in scenarios where the objects to be used may vary. We assume that point clouds of the tool and target object can be obtained, but no interpretation or further knowledge about these objects is provided. The system must interpret the point clouds and decide how to use the tool to complete a manipulation task with a target object; this means it must adjust motion trajectories appropriately to complete the task. We tackle three everyday manipulations: scraping material from a tool into a container, cutting, and scooping from a container. Our solution encodes these manipulation skills in a generic way, with parameters that can be filled in at run-time via queries to a robot perception module; the perception module abstracts the functional parts of the tool and extracts key parameters that are needed for the task. The approach is evaluated in simulation and with selected examples on a PR2 robot.
