Kineverse: A Symbolic Articulation Model Framework for Model-Generic Software for Mobile Manipulation

Dec 09, 2020

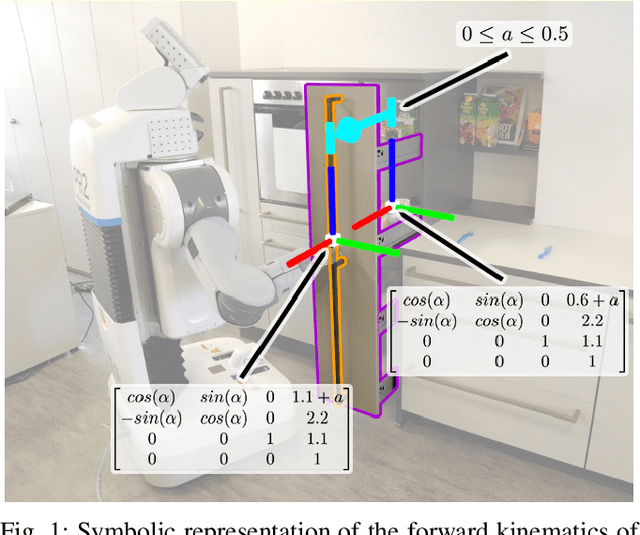

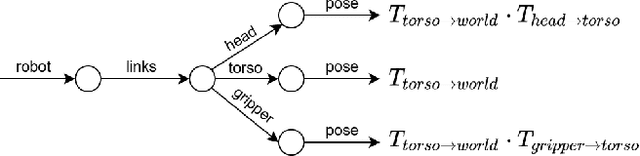

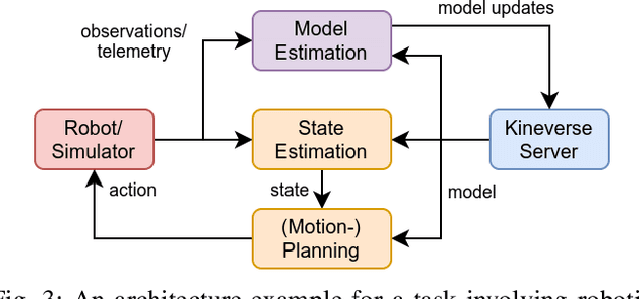

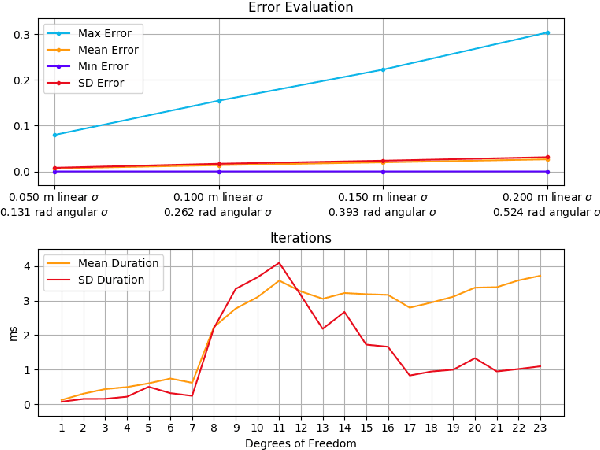

Human developers want to program robots using abstract instructions, such as "fetch the milk from the fridge". To translate such instructions into actionable plans, the robot's software requires in-depth background knowledge. For interactions with articulated objects such as doors and drawers, the robot requires a model that it can use for state estimation and motion planning. Existing articulation model frameworks take a descriptive approach to model building, which requires additional background knowledge to construct mathematical models for computation. In this paper, we introduce Kineverse, an articulation model framework that uses symbolic mathematical expressions to model articulated objects. We provide a theoretical description of the framework and of the operations its models support, and suggest a software architecture for integrating it into a robotics application. To demonstrate the framework's applicability to robotics, we use it to solve two common robotics problems from state estimation and manipulation.
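To make the idea of a symbolic articulation model concrete, the following is a minimal sketch rather than Kineverse's actual API. It assumes SymPy as a stand-in symbolic backend and a hypothetical door whose hinge sits at the origin and rotates about the z-axis; the names `theta`, `width`, and `handle_pos` are illustrative only. The handle position is written as an expression of the opening angle, and the same expression then yields a Jacobian by differentiation.

```python
import sympy as sp

# Hypothetical door: hinge at the origin, rotating about the z-axis.
theta = sp.Symbol("theta", real=True)  # door opening angle in radians
width = sp.Rational(4, 5)              # assumed hinge-to-handle distance in meters

# Forward kinematics of the handle as a symbolic expression of theta.
handle_pos = sp.Matrix([width * sp.cos(theta), width * sp.sin(theta), 0])

# Differentiating the same expression gives the Jacobian with respect to theta,
# which is the kind of quantity a motion planner or state estimator consumes.
jacobian = handle_pos.jacobian(sp.Matrix([theta]))

# Substituting a concrete angle evaluates the model for a specific door state.
open_pos = handle_pos.subs(theta, sp.pi / 2)
closed_jac = jacobian.subs(theta, 0)

print(open_pos.T)
print(closed_jac.T)
```

Because the model is an expression rather than a description of joint types, no extra background knowledge is needed to turn it into something computable: evaluation and differentiation operate on the expression directly.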
