Gayane Kazhoyan

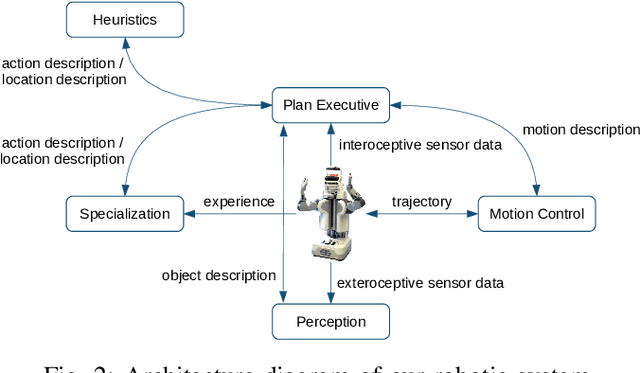

The CRAM Cognitive Architecture for Robot Manipulation in Everyday Activities

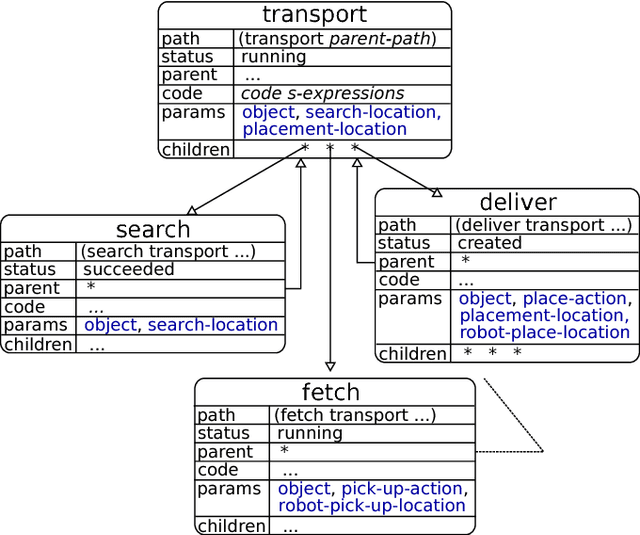

Apr 27, 2023

Abstract: This paper presents a hybrid robot cognitive architecture, CRAM, that enables robot agents to accomplish everyday manipulation tasks. It addresses five key challenges that arise when carrying out everyday activities. These include (i) the underdetermined nature of task specification, (ii) the generation of context-specific behavior, (iii) the ability to make decisions based on knowledge, experience, and prediction, (iv) the ability to reason at the levels of motions and sensor data, and (v) the ability to explain actions and the consequences of these actions. We explore the computational foundations of the CRAM cognitive model: the self-programmability entailed by physical symbol systems, the CRAM plan language, generalized action plans and implicit-to-explicit manipulation, generative models, digital twin knowledge representation & reasoning, and narrative-enabled episodic memories. We describe the structure of the cognitive architecture and explain the process by which CRAM transforms generalized action plans into parameterized motion plans. It does this using knowledge and reasoning to identify the parameter values that maximize the likelihood of successfully accomplishing the action. We demonstrate the ability of a CRAM-controlled robot to carry out everyday activities in a kitchen environment. Finally, we consider future extensions that focus on achieving greater flexibility through transformational learning and metacognition.
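The central step described above, turning an underdetermined action into a fully parameterized one by picking the parameter values with the highest expected chance of success, can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `ActionDescription` class, the `specialize` function, and the success rates in `TOY_RATES` are hypothetical and are not CRAM's actual API or data. CRAM itself is written in Lisp and obtains these estimates through knowledge and reasoning rather than from a fixed table.

```python
from dataclasses import dataclass
from itertools import product
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class ActionDescription:
    """Hypothetical underdetermined action: some parameters fixed, others left open."""
    action_type: str
    fixed: Dict[str, Any]
    open_params: Dict[str, List[Any]]  # parameter name -> candidate values


Likelihood = Callable[[str, Dict[str, Any]], float]


def specialize(action: ActionDescription,
               likelihood: Likelihood) -> Tuple[Optional[Dict[str, Any]], float]:
    """Return the full parameterization with the highest estimated success likelihood."""
    names = list(action.open_params)
    best: Optional[Dict[str, Any]] = None
    best_p = float("-inf")
    # Exhaustively enumerate every combination of the open parameters.
    for combo in product(*(action.open_params[n] for n in names)):
        params = {**action.fixed, **dict(zip(names, combo))}
        p = likelihood(action.action_type, params)
        if p > best_p:  # strict '>' keeps the first maximum on ties
            best, best_p = params, p
    return best, best_p


# Toy stand-in for knowledge/experience: made-up success rates per (grasp, arm).
TOY_RATES = {
    ("top", "left"): 0.55, ("top", "right"): 0.60,
    ("side", "left"): 0.70, ("side", "right"): 0.85,
}


def toy_likelihood(action_type: str, params: Dict[str, Any]) -> float:
    return TOY_RATES[(params["grasp"], params["arm"])]


fetch_cup = ActionDescription(
    action_type="fetch",
    fixed={"object": "cup"},
    open_params={"grasp": ["top", "side"], "arm": ["left", "right"]},
)
params, p = specialize(fetch_cup, toy_likelihood)
print(params, p)  # {'object': 'cup', 'grasp': 'side', 'arm': 'right'} 0.85
```

Exhaustive enumeration only works for a handful of discrete candidates. It is used here because it makes the "maximize the likelihood of success" criterion explicit, not because it reflects how a real system searches the parameter space.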

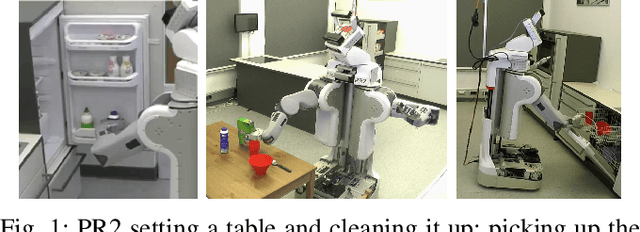

The Robot Household Marathon Experiment

Nov 19, 2020

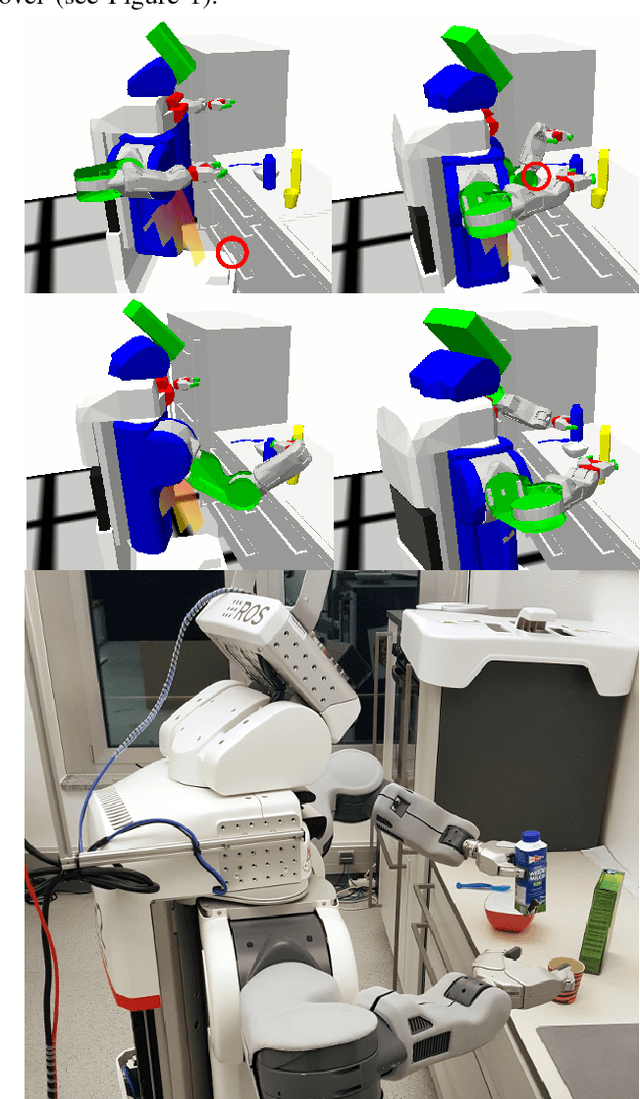

Abstract: In this paper, we present an experiment designed to investigate and evaluate the scalability and robustness aspects of mobile manipulation. The experiment involves performing variations of mobile pick and place actions and opening/closing environment containers in a human household. The robot is expected to act completely autonomously for extended periods of time. We discuss the scientific challenges raised by the experiment and present our robotic system, which can address these challenges and successfully perform all the tasks of the experiment. We present empirical results and lessons learned, and discuss where we hit limitations.

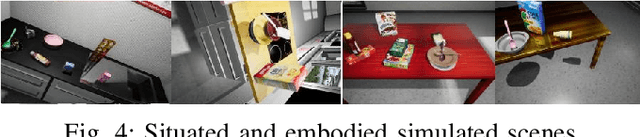

Towards Plan Transformations for Real-World Pick and Place Tasks

Dec 19, 2018

Abstract: In this paper, we investigate the possibility of applying plan transformations to general manipulation plans in order to specialize them to the specific situation at hand. We present a framework for optimizing execution and achieving higher performance by autonomously transforming the robot's behavior at runtime. We show that plans employed by robotic agents in real-world environments can be transformed, despite their control structures being very complex due to the specifics of acting in the real world. The evaluation is carried out on a plan of a PR2 robot performing pick and place tasks, to which we apply three example transformations, as well as on a large number of experiments in a fast plan projection environment.
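To give a concrete sense of what "transforming a general plan to specialize it" can mean, here is a toy sketch. The flat `(action, argument)` plan representation and the rule `drop_redundant_close_open` are hypothetical and chosen only for illustration. They are not claimed to be one of the three transformations evaluated in the paper. The rule removes a "close X" step that is immediately followed by "open X", so a drawer is not closed and reopened between two consecutive fetches from it.

```python
from typing import List, Tuple

Step = Tuple[str, str]  # (action, argument): a hypothetical, flat plan representation


def drop_redundant_close_open(plan: List[Step]) -> List[Step]:
    """Hypothetical transformation: drop 'close X' when the very next step is 'open X'."""
    out: List[Step] = []
    for step in plan:
        action, target = step
        if action == "open" and out and out[-1] == ("close", target):
            # The container would be reopened right away: skip both the close and the reopen.
            out.pop()
            continue
        out.append(step)
    return out


general_plan = [
    ("open", "drawer"), ("pick", "spoon"), ("close", "drawer"),
    ("open", "drawer"), ("pick", "fork"), ("close", "drawer"),
]
print(drop_redundant_close_open(general_plan))
# [('open', 'drawer'), ('pick', 'spoon'), ('pick', 'fork'), ('close', 'drawer')]
```

Real robot plans are far from flat lists. As the abstract notes, their control structures are complex because of failure handling and other specifics of acting in the real world, and that complexity is what makes such rewrites hard in practice. The sketch shows only the basic pattern-match-and-rewrite idea.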

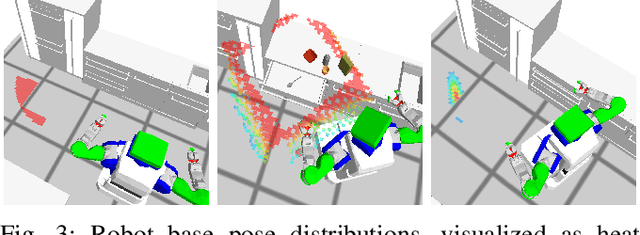

Specializing Underdetermined Action Descriptions Through Plan Projection

Dec 19, 2018

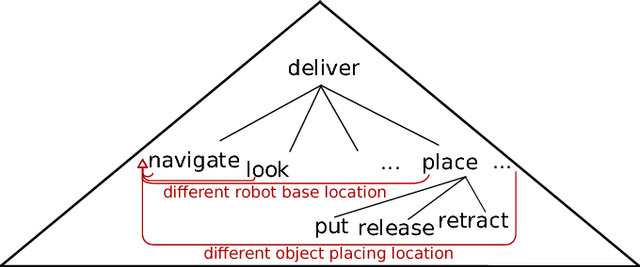

Abstract: Plan execution on real robots in realistic environments is underdetermined and often leads to failures. The choice of action parameterization is crucial for task success. By thinking ahead of time with the fast plan projection mechanism proposed in this paper, a general plan can be specialized towards the environment and task at hand by choosing action parameterizations that are predicted to lead to successful execution. To find causal relationships between action parameterizations and task success, we provide the robot with means for plan introspection and propose a systematic and hierarchical plan structure to support that. We evaluate our approach by showing how a PR2 robot, when equipped with the proposed system, is able to choose action parameterizations that increase task execution success rates and overall performance of fetch and deliver actions in a real-world setting.
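The idea of "thinking ahead" by projection can be sketched as a Monte Carlo loop. Each candidate parameterization is run many times in a cheap, approximate model, its success rate is estimated, and the best candidate is kept. The toy model below is entirely hypothetical: it stands in for the paper's fast plan projection, and its parameters (the candidate base distances, `ARM_REACH`, `MIN_CLEARANCE`, and the 0.1 m placement noise) are invented for illustration.

```python
import random
from typing import Callable, Dict, List, Tuple

ARM_REACH = 0.8      # metres; assumed for this toy model
MIN_CLEARANCE = 0.3  # metres; closer than this, the base is assumed to hit the table


def toy_projection(base_distance: float, rng: random.Random) -> bool:
    """One fast, approximate 'projection' of a grasp from a given base distance."""
    actual = base_distance + rng.gauss(0.0, 0.1)  # simulated navigation/localization noise
    return MIN_CLEARANCE <= actual <= ARM_REACH


def choose_by_projection(
    candidates: List[float],
    project: Callable[[float, random.Random], bool],
    trials: int = 200,
    seed: int = 0,
) -> Tuple[float, Dict[float, float]]:
    """Project each candidate repeatedly and keep the one with the best predicted success rate."""
    rng = random.Random(seed)
    rates = {c: sum(project(c, rng) for _ in range(trials)) / trials for c in candidates}
    best = max(candidates, key=lambda c: rates[c])
    return best, rates


best, rates = choose_by_projection([0.35, 0.55, 0.75, 0.95], toy_projection)
print(best, rates)
```

In this toy model, the 0.55 m candidate leaves the most margin on both sides, so it should come out on top with a predicted success rate near 0.99. Candidates near either limit score much lower. The estimated rates change with `seed` and `trials`, but the ranking here is not close.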
