Mihai Pomarlan

Hanging Around: Cognitive Inspired Reasoning for Reactive Robotics

Jul 28, 2025

Abstract: Situationally-aware artificial agents operating with competence in natural environments face several challenges: spatial awareness, object affordance detection, dynamic changes and unpredictability. A critical challenge is the agent's ability to identify and monitor environmental elements pertinent to its objectives. Our research introduces a neurosymbolic modular architecture for reactive robotics. Our system combines a neural component performing object recognition over the environment and image processing techniques such as optical flow, with symbolic representation and reasoning. The reasoning system is grounded in the embodied cognition paradigm, via integrating image schematic knowledge in an ontological structure. The ontology is operatively used to create queries for the perception system, decide on actions, and infer entities' capabilities derived from perceptual data. The combination of reasoning and image processing allows the agent to focus its perception for normal operation as well as discover new concepts for parts of objects involved in particular interactions. The discovered concepts allow the robot to autonomously acquire training data and adjust its subsymbolic perception to recognize the parts, as well as making planning for more complex tasks feasible by focusing search on those relevant object parts. We demonstrate our approach in a simulated world, in which an agent learns to recognize parts of objects involved in support relations. While the agent has no concept of handle initially, by observing examples of supported objects hanging from a hook it learns to recognize the parts involved in establishing support and becomes able to plan the establishment/destruction of the support relation. This underscores the agent's capability to expand its knowledge through observation in a systematic way, and illustrates the potential of combining deep reasoning [...].

* This article is published online with Open Access by IOS Press and distributed under the terms of the Creative Commons Attribution Non-Commercial License 4.0 (CC BY-NC 4.0)

The Wilhelm Tell Dataset of Affordance Demonstrations

Jul 23, 2025

Abstract: Affordances - i.e. possibilities for action that an environment or objects in it provide - are important for robots operating in human environments to perceive. Existing approaches train such capabilities on annotated static images or shapes. This work presents a novel dataset for affordance learning of common household tasks. Unlike previous approaches, our dataset consists of video sequences demonstrating the tasks from first- and third-person perspectives, along with metadata about the affordances that are manifested in the task, and is aimed towards training perception systems to recognize affordance manifestations. The demonstrations were collected from several participants and in total record about seven hours of human activity. The variety of task performances also allows studying preparatory maneuvers that people may perform for a task, such as how they arrange their task space, which is also relevant for collaborative service robots.

* © 2025 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Generating Actionable Robot Knowledge Bases by Combining 3D Scene Graphs with Robot Ontologies

Jul 15, 2025

Abstract: In robotics, the effective integration of environmental data into actionable knowledge remains a significant challenge due to the variety and incompatibility of data formats commonly used in scene descriptions, such as MJCF, URDF, and SDF. This paper presents a novel approach that addresses these challenges by developing a unified scene graph model that standardizes these varied formats into the Universal Scene Description (USD) format. This standardization facilitates the integration of these scene graphs with robot ontologies through semantic reporting, enabling the translation of complex environmental data into actionable knowledge essential for cognitive robotic control. We evaluated our approach by converting procedural 3D environments into USD format, which is then annotated semantically and translated into a knowledge graph to effectively answer competency questions, demonstrating its utility for real-time robotic decision-making. Additionally, we developed a web-based visualization tool to support the semantic mapping process, providing users with an intuitive interface to manage the 3D environment.

An Ontological Model of User Preferences

Oct 29, 2023

Abstract: The notion of preferences plays an important role in many disciplines including service robotics which is concerned with scenarios in which robots interact with humans. These interactions can be favored by robots taking human preferences into account. This raises the issue of how preferences should be represented to support such preference-aware decision making. Several formal accounts for a notion of preferences exist. However, these approaches fall short on defining the nature and structure of the options that a robot has in a given situation. In this work, we thus investigate a formal model of preferences where options are non-atomic entities that are defined by the complex situations they bring about.

Translating Universal Scene Descriptions into Knowledge Graphs for Robotic Environment

Oct 27, 2023

Abstract: Robots performing human-scale manipulation tasks require an extensive amount of knowledge about their surroundings in order to perform their actions competently and human-like. In this work, we investigate the use of virtual reality technology as an implementation for robot environment modeling, and present a technique for translating scene graphs into knowledge bases. To this end, we take advantage of the Universal Scene Description (USD) format which is an emerging standard for the authoring, visualization and simulation of complex environments. We investigate the conversion of USD-based environment models into Knowledge Graph (KG) representations that facilitate semantic querying and integration with additional knowledge sources.
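As a rough illustration of the scene-graph-to-knowledge-graph idea described in this abstract, the following minimal sketch flattens a nested scene hierarchy into subject-predicate-object triples and answers a simple pattern query. It is not the authors' implementation and does not use the USD API: the `SCENE` dictionary is a hypothetical stand-in for a USD prim hierarchy, and the predicate names `rdf:type` and `hasChild` are assumptions chosen for the example.

```python
from typing import Iterator, List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical stand-in for a scene hierarchy (not real USD data).
SCENE = {
    "path": "/World",
    "type": "Xform",
    "children": [
        {
            "path": "/World/Kitchen",
            "type": "Xform",
            "children": [
                {"path": "/World/Kitchen/Table", "type": "Mesh", "children": []},
                {"path": "/World/Kitchen/Cup", "type": "Mesh", "children": []},
            ],
        }
    ],
}


def scene_to_triples(node: dict, parent: Optional[str] = None) -> Iterator[Triple]:
    """Walk the hierarchy depth-first and emit one type triple per node,
    plus one parent-child triple for every node that has a parent."""
    yield (node["path"], "rdf:type", node["type"])
    if parent is not None:
        yield (parent, "hasChild", node["path"])
    for child in node.get("children", []):
        yield from scene_to_triples(child, node["path"])


def query(
    triples: List[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> List[Triple]:
    """Return all triples matching the pattern; None acts as a wildcard."""
    return [
        t
        for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


if __name__ == "__main__":
    kg = list(scene_to_triples(SCENE))
    # Which objects are directly contained in the kitchen?
    for _, _, child in query(kg, s="/World/Kitchen", p="hasChild"):
        print(child)
```

A real pipeline would read prims from a USD stage and map them to ontology classes; the triple pattern query here only hints at the kind of semantic querying a knowledge graph makes possible.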

Towards a Neuronally Consistent Ontology for Robotic Agents

Sep 26, 2023

Abstract: The Collaborative Research Center for Everyday Activity Science & Engineering (CRC EASE) aims to enable robots to perform environmental interaction tasks with close to human capacity. It therefore employs a shared ontology to model the activity of both kinds of agents, empowering robots to learn from human experiences. To properly describe these human experiences, the ontology will strongly benefit from incorporating characteristics of neuronal information processing which are not accessible from a behavioral perspective alone. We, therefore, propose the analysis of human neuroimaging data for evaluation and validation of concepts and events defined in the ontology model underlying most of the CRC projects. In an exploratory analysis, we employed an Independent Component Analysis (ICA) on functional Magnetic Resonance Imaging (fMRI) data from participants who were presented with the same complex video stimuli of activities as robotic and human agents in different environments and contexts. We then correlated the activity patterns of brain networks represented by derived components with timings of annotated event categories as defined by the ontology model. The present results demonstrate a subset of common networks with stable correlations and specificity towards particular event classes and groups, associated with environmental and contextual factors. These neuronal characteristics will open up avenues for adapting the ontology model to be more consistent with human information processing.

Formalising Natural Language Quantifiers for Human-Robot Interactions

Aug 25, 2023

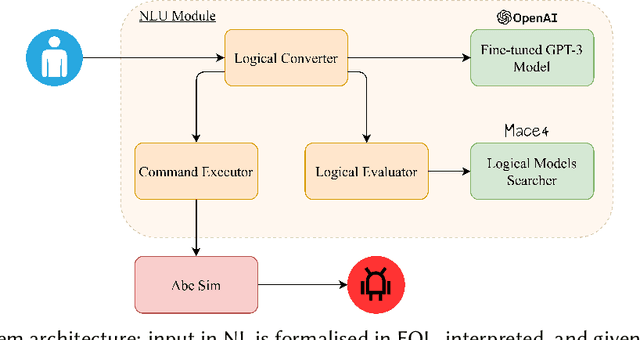

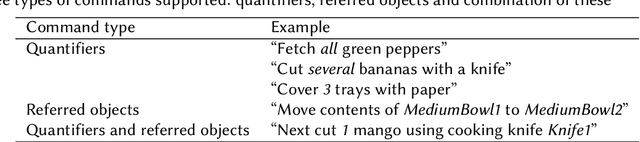

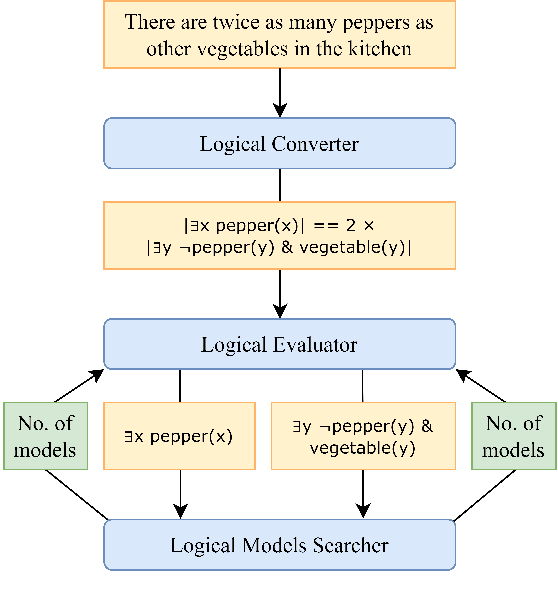

Abstract: We present a method for formalising quantifiers in natural language in the context of human-robot interactions. The solution is based on first-order logic extended with capabilities to represent the cardinality of variables, operating similarly to generalised quantifiers. To demonstrate the method, we designed an end-to-end system able to receive input as natural language, convert it into a formal logical representation, evaluate it, and return a result or send a command to a simulated robot.
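To make the notion of cardinality-based quantifiers concrete, here is a minimal sketch in the style of generalised quantifier theory, where a quantifier is a condition on the number of restrictor objects that also satisfy the scope. It is an illustration under stated assumptions, not the system described in the abstract: the `WORLD` model, the predicates `is_cup` and `on_table`, and the particular quantifier table are all hypothetical.

```python
from typing import Callable, Dict, Iterable, Tuple

Predicate = Callable[[str], bool]

# Each quantifier is a condition on (m, n), where n = |A| is the number of
# objects satisfying the restrictor and m = |A ∩ B| is the number of those
# that also satisfy the scope.
QUANTIFIERS: Dict[str, Callable[[int, int], bool]] = {
    "every": lambda m, n: m == n,
    "some": lambda m, n: m >= 1,
    "no": lambda m, n: m == 0,
    "most": lambda m, n: 2 * m > n,
    "at least two": lambda m, n: m >= 2,
}


def cardinalities(
    domain: Iterable[str], restrictor: Predicate, scope: Predicate
) -> Tuple[int, int]:
    """Return (|A ∩ B|, |A|) over a finite domain."""
    restricted = [x for x in domain if restrictor(x)]
    matching = [x for x in restricted if scope(x)]
    return len(matching), len(restricted)


def evaluate(
    quantifier: str, domain: Iterable[str], restrictor: Predicate, scope: Predicate
) -> bool:
    """Evaluate 'QUANTIFIER restrictor are scope' in the given domain."""
    m, n = cardinalities(domain, restrictor, scope)
    return QUANTIFIERS[quantifier](m, n)


# Hypothetical toy world a simulated robot might observe.
WORLD = {
    "cup1": {"type": "cup", "on_table": True},
    "cup2": {"type": "cup", "on_table": True},
    "cup3": {"type": "cup", "on_table": False},
    "plate1": {"type": "plate", "on_table": True},
}


def is_cup(name: str) -> bool:
    return WORLD[name]["type"] == "cup"


def on_table(name: str) -> bool:
    return bool(WORLD[name]["on_table"])


if __name__ == "__main__":
    for q in QUANTIFIERS:
        print(f"{q} cups are on the table:", evaluate(q, WORLD, is_cup, on_table))
```

Treating each quantifier as a relation between two cardinalities keeps the evaluator uniform: adding a new quantifier only requires a new entry in the table, not a new evaluation rule.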

Foundations of the Socio-physical Model of Activities for Autonomous Robotic Agents

Nov 24, 2020

Abstract: In this paper, we present foundations of the Socio-physical Model of Activities (SOMA). SOMA represents both the physical as well as the social context of everyday activities. Such tasks seem trivial for humans; however, they pose severe problems for artificial agents. For starters, a natural language command requesting something will leave many pieces of information necessary for performing the task unspecified. Humans can solve such problems quickly because we reduce the search space by recourse to prior knowledge, such as a connected collection of plans that describe how certain goals can be achieved at various levels of abstraction. Rather than enumerating fine-grained physical contexts, SOMA sets out to include socially constructed knowledge about the functions of actions to achieve a variety of goals or the roles objects can play in a given situation. As the human cognition system is capable of generalizing experiences into abstract knowledge pieces applicable to novel situations, we argue that both physical and social context need to be modeled to tackle these challenges in a general manner. This is represented by the link between the physical and social context in SOMA, where relationships are established between occurrences and generalizations of them, which has been demonstrated in several use cases that validate SOMA.
