Simon Setzer

Optimising Spatial and Tonal Data for PDE-based Inpainting

Jun 15, 2015

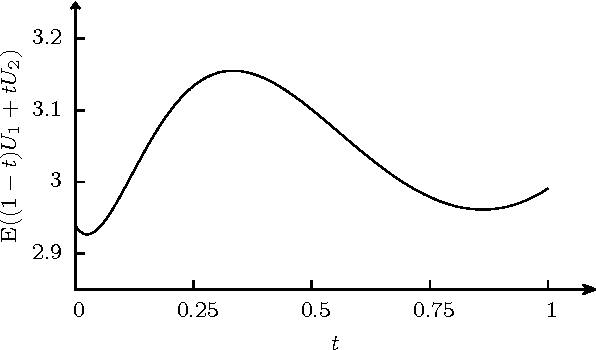

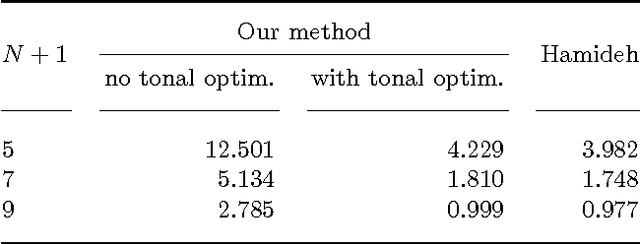

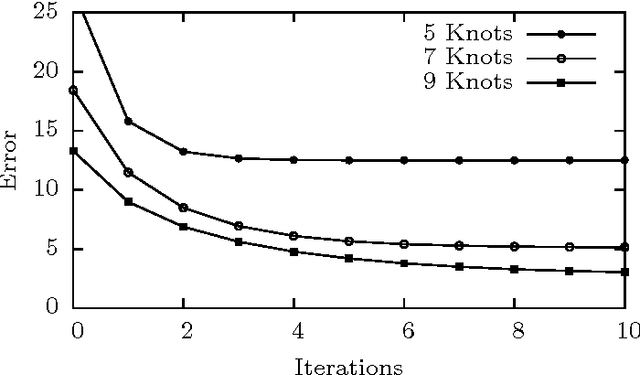

Abstract: Some recent methods for lossy signal and image compression store only a few selected pixels and fill in the missing structures by inpainting with a partial differential equation (PDE). Suitable operators include the Laplacian, the biharmonic operator, and edge-enhancing anisotropic diffusion (EED). The quality of such approaches depends substantially on the selection of the data that is kept. Optimising this data in the domain and codomain gives rise to challenging mathematical problems that shall be addressed in our work. In the 1D case, we prove results that provide insights into the difficulty of this problem, and we give evidence that a splitting into spatial and tonal (i.e. function value) optimisation hardly deteriorates the results. In the 2D setting, we present generic algorithms that achieve a high reconstruction quality even if the specified data is very sparse. To optimise the spatial data, we use a probabilistic sparsification, followed by a nonlocal pixel exchange that avoids getting trapped in bad local optima. After this spatial optimisation we perform a tonal optimisation that modifies the function values in order to reduce the global reconstruction error. For homogeneous diffusion inpainting, this comes down to a least squares problem for which we prove that it has a unique solution. We demonstrate that it can be found efficiently with a gradient descent approach that is accelerated with fast explicit diffusion (FED) cycles. Our framework allows the desired density of the inpainting mask to be specified a priori. Moreover, it is more generic than other data optimisation approaches for the sparse inpainting problem, since it can also be extended to nonlinear inpainting operators such as EED. This is exploited to achieve reconstructions with state-of-the-art quality. We also give an extensive literature survey on PDE-based image compression methods.
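To make the idea of storing a few samples and filling in the rest concrete, here is a minimal 1D sketch of homogeneous diffusion inpainting. It is an illustration only, not the authors' implementation: the function names `inpaint_1d` and `mse`, the Gauss-Seidel solver, the reflecting boundary treatment, and the iteration count are all assumptions made for this example. The paper's spatial and tonal optimisation steps are not reproduced.

```python
def inpaint_1d(values, mask, iterations=5000):
    """Fill unknown samples so that the discrete Laplacian vanishes there.

    values: list of floats; only entries with mask[i] == True are trusted.
    mask:   list of bools marking the stored (known) samples.
    Hypothetical helper for illustration; uses plain Gauss-Seidel sweeps.
    """
    n = len(values)
    known = [values[i] for i in range(n) if mask[i]]
    if not known:
        raise ValueError("at least one known sample is required")
    start = sum(known) / len(known)
    # Known samples are kept fixed, unknown ones start at the mean.
    u = [values[i] if mask[i] else start for i in range(n)]
    for _ in range(iterations):
        for i in range(n):
            if mask[i]:
                continue
            # Reflecting (Neumann) boundaries: mirror the inner neighbour.
            left = u[i - 1] if i > 0 else u[i + 1]
            right = u[i + 1] if i < n - 1 else u[i - 1]
            u[i] = 0.5 * (left + right)
    return u


def mse(a, b):
    """Mean squared error between two equally long signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    signal = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0, 36.0]
    # Two masks of equal density give different reconstruction errors.
    mask_a = [True, False, False, True, False, False, True]
    mask_b = [True, True, True, False, False, False, False]
    print(mse(signal, inpaint_1d(signal, mask_a)))
    print(mse(signal, inpaint_1d(signal, mask_b)))
```

In 1D the result is a piecewise linear interpolation between the stored samples, which is why the choice of sample positions and values matters so much for the reconstruction error.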

Robust PCA: Optimization of the Robust Reconstruction Error over the Stiefel Manifold

Jun 01, 2015

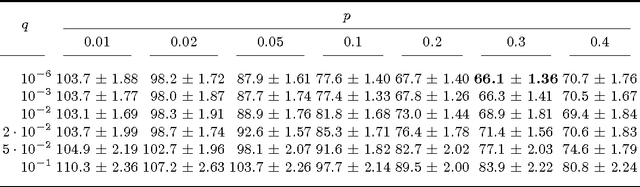

Abstract: It is well known that Principal Component Analysis (PCA) is strongly affected by outliers and a lot of effort has been put into robustification of PCA. In this paper we present a new algorithm for robust PCA minimizing the trimmed reconstruction error. By directly minimizing over the Stiefel manifold, we avoid deflation as often used by projection pursuit methods. In distinction to other methods for robust PCA, our method has no free parameter and is computationally very efficient. We illustrate the performance on various datasets including an application to background modeling and subtraction. Our method performs better than or similar to current state-of-the-art methods while being faster.
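The abstract does not define the trimmed reconstruction error, so the following sketch rests on an assumption: it takes the error to be the sum of the `keep` smallest squared residuals after projecting each point onto the span of an orthonormal basis. The function name, the argument names, and the omission of data centering are choices made for this illustration. The minimisation over the Stiefel manifold is not shown.

```python
def trimmed_reconstruction_error(points, basis, keep):
    """Sum of the `keep` smallest squared projection residuals.

    points: list of equally long lists of floats (assumed already centered).
    basis:  list of orthonormal vectors spanning the candidate subspace.
    keep:   number of points retained; the largest residuals are trimmed.
    Hypothetical helper for illustration only.
    """
    residuals = []
    for x in points:
        # Coordinates of x in the subspace spanned by the basis.
        coeffs = [sum(u_k * x_k for u_k, x_k in zip(u, x)) for u in basis]
        # Orthogonal projection of x onto that subspace.
        proj = [sum(c * u[k] for c, u in zip(coeffs, basis))
                for k in range(len(x))]
        residuals.append(sum((x_k - p_k) ** 2 for x_k, p_k in zip(x, proj)))
    residuals.sort()
    return sum(residuals[:keep])


if __name__ == "__main__":
    data = [[1.0, 0.0], [2.0, 0.0], [-3.0, 0.0], [0.0, 10.0]]
    x_axis = [[1.0, 0.0]]
    print(trimmed_reconstruction_error(data, x_axis, keep=3))
    print(trimmed_reconstruction_error(data, x_axis, keep=4))
```

With trimming, a single far-off point no longer dominates the objective, which is the intuition behind robustness to outliers.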

Nonlinear Eigenproblems in Data Analysis - Balanced Graph Cuts and the RatioDCA-Prox

Mar 24, 2014

Abstract: It has been recently shown that a large class of balanced graph cuts allows for an exact relaxation into a nonlinear eigenproblem. We review briefly some of these results and propose a family of algorithms to compute nonlinear eigenvectors which encompasses previous work as special cases. We provide a detailed analysis of the properties and the convergence behavior of these algorithms and then discuss their application in the area of balanced graph cuts.

The Total Variation on Hypergraphs - Learning on Hypergraphs Revisited

Dec 18, 2013

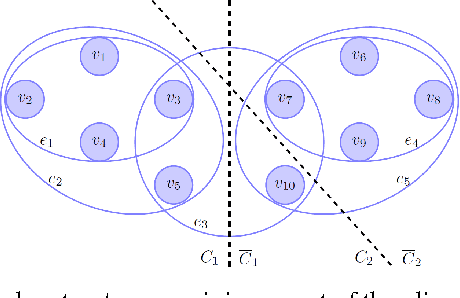

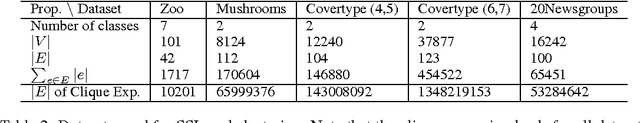

Abstract: Hypergraphs allow one to encode higher-order relationships in data and are thus a very flexible modeling tool. Current learning methods are either based on approximations of the hypergraphs via graphs or on tensor methods which are only applicable under special conditions. In this paper, we present a new learning framework on hypergraphs which fully uses the hypergraph structure. The key element is a family of regularization functionals based on the total variation on hypergraphs.
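The abstract does not spell out the functional, so the sketch below assumes one common definition of total variation on a hypergraph: for each hyperedge, the weight times the difference between the largest and smallest function value on its vertices. The function name and the data layout (hyperedges as lists of vertex indices) are hypothetical.

```python
def hypergraph_total_variation(hyperedges, weights, f):
    """Weighted sum over hyperedges of (max of f) minus (min of f).

    hyperedges: list of non-empty lists of vertex indices.
    weights:    one non-negative weight per hyperedge.
    f:          list of function values, indexed by vertex.
    Assumed definition for illustration; see the lead-in.
    """
    total = 0.0
    for edge, w in zip(hyperedges, weights):
        values = [f[v] for v in edge]
        total += w * (max(values) - min(values))
    return total


if __name__ == "__main__":
    edges = [[0, 1, 2], [2, 3]]
    print(hypergraph_total_variation(edges, [1.0, 2.0], [0.0, 1.0, 3.0, 3.0]))
```

Under this definition a hyperedge contributes nothing when the function is constant on it, and for hyperedges of size two the functional reduces to the usual weighted graph total variation.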

Constrained fractional set programs and their application in local clustering and community detection

Jun 14, 2013

Abstract: The (constrained) minimization of a ratio of set functions is a problem frequently occurring in clustering and community detection. As these optimization problems are typically NP-hard, one uses convex or spectral relaxations in practice. While these relaxations can be solved globally optimally, they are often too loose and thus lead to results far away from the optimum. In this paper we show that every constrained minimization problem of a ratio of non-negative set functions allows a tight relaxation into an unconstrained continuous optimization problem. This result leads to a flexible framework for solving constrained problems in network analysis. While a globally optimal solution for the resulting non-convex problem cannot be guaranteed, we outperform the loose convex or spectral relaxations by a large margin on constrained local clustering problems.
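As a small illustration of a constrained ratio of set functions, the sketch below minimises graph conductance (cut size divided by the smaller of the two volumes) over all proper vertex subsets that contain a given seed vertex. The choice of conductance as the ratio, the seed constraint, and both function names are assumptions for this example. It enumerates subsets by brute force, which is only feasible for tiny graphs and is not the relaxation proposed in the paper.

```python
from itertools import combinations


def conductance(edges, subset):
    """Cut size divided by the smaller volume of the two sides."""
    degree = {}
    for a, b in edges:
        degree[a] = degree.get(a, 0) + 1
        degree[b] = degree.get(b, 0) + 1
    # An edge is cut when exactly one endpoint lies in the subset.
    cut = sum(1 for a, b in edges if (a in subset) != (b in subset))
    vol_in = sum(d for v, d in degree.items() if v in subset)
    vol_out = sum(degree.values()) - vol_in
    return cut / min(vol_in, vol_out)


def best_local_cluster(edges, seed):
    """Exhaustively search proper subsets that contain the seed vertex."""
    nodes = sorted({v for edge in edges for v in edge})
    others = [v for v in nodes if v != seed]
    best = None
    # Sizes stop one short of len(others), so the full vertex set is skipped.
    for size in range(len(others)):
        for extra in combinations(others, size):
            subset = frozenset((seed,) + extra)
            score = conductance(edges, subset)
            if best is None or score < best[0]:
                best = (score, subset)
    return best


if __name__ == "__main__":
    # Two triangles joined by a single bridge edge (2, 3).
    graph = [(0, 1), (1, 2), (0, 2), (2, 3), (3, 4), (4, 5), (3, 5)]
    print(best_local_cluster(graph, seed=0))
```

The number of candidate subsets doubles with every added vertex, which is the practical reason relaxations are used instead of enumeration.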
