Joachim Weickert

Image Compression with Isotropic and Anisotropic Shepard Inpainting

Jun 10, 2024

Abstract: Inpainting-based codecs store sparse selected pixel data and decode by reconstructing the discarded image parts by inpainting. Successful codecs (coders and decoders) traditionally use inpainting operators that solve partial differential equations. This requires some numerical expertise if efficient implementations are necessary. Our goal is to investigate variants of Shepard inpainting as simple alternatives for inpainting-based compression. They can be implemented efficiently when we localise their weighting function. To turn them into viable codecs, we have to introduce novel extensions of classical Shepard interpolation that adapt successful ideas from previous codecs: Anisotropy allows direction-dependent inpainting, which improves reconstruction quality. Additionally, we incorporate data selection by subdivision as an efficient way to tailor the stored information to the image structure. On the encoding side, we introduce the novel concept of joint inpainting and prediction for isotropic Shepard codecs, where storage cost can be reduced based on intermediate inpainting results. In an ablation study, we show the usefulness of these individual contributions and demonstrate that they offer synergies which elevate the performance of Shepard inpainting to surprising levels. Our resulting approaches offer a more favourable trade-off between simplicity and quality than traditional inpainting-based codecs. Experiments show that they can outperform JPEG and JPEG2000 at high compression ratios.
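The basic isotropic Shepard interpolation that the abstract builds on can be sketched as a normalised weighted average of the stored pixels. The following is only a minimal illustration, not the codec from the paper: the truncated Gaussian weight, the parameter names `sigma` and `radius`, and the fallback to the mean of the stored values are all assumptions made here, and the anisotropic weights, subdivision-based data selection, and prediction steps are not covered.

```python
import math


def shepard_inpaint(known, width, height, sigma=1.5, radius=4):
    """Isotropic Shepard inpainting with a localised Gaussian weight.

    known maps (x, y) pixel coordinates to stored grey values.
    Returns the reconstruction as a list of rows (height x width).
    sigma and radius are hypothetical parameters chosen for illustration.
    """
    # Assumed fallback for pixels with no stored data inside the window.
    fallback = sum(known.values()) / len(known)
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if (x, y) in known:
                # Stored pixels are reproduced exactly.
                result[y][x] = float(known[(x, y)])
                continue
            numerator = 0.0
            denominator = 0.0
            for (kx, ky), value in known.items():
                dx, dy = x - kx, y - ky
                if abs(dx) > radius or abs(dy) > radius:
                    continue  # localisation: ignore stored pixels outside the window
                weight = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
                numerator += weight * value
                denominator += weight
            result[y][x] = numerator / denominator if denominator > 0.0 else fallback
    return result


# Two stored pixels on a 5 x 1 image; the three pixels in between are inpainted.
row = shepard_inpaint({(0, 0): 0.0, (4, 0): 100.0}, width=5, height=1)[0]
print(row)
```

The loop over all stored pixels is kept for readability; an efficient implementation would instead visit only the window around each stored pixel, which is what localising the weighting function makes possible.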

Neuroexplicit Diffusion Models for Inpainting of Optical Flow Fields

May 23, 2024

Abstract: Deep learning has revolutionized the field of computer vision by introducing large scale neural networks with millions of parameters. Training these networks requires massive datasets and leads to intransparent models that can fail to generalize. At the other extreme, models designed from partial differential equations (PDEs) embed specialized domain knowledge into mathematical equations and usually rely on few manually chosen hyperparameters. This makes them transparent by construction and, if designed and calibrated carefully, they can generalize well to unseen scenarios. In this paper, we show how to bring model- and data-driven approaches together by combining the explicit PDE-based approaches with convolutional neural networks to obtain the best of both worlds. We illustrate a joint architecture for the task of inpainting optical flow fields and show that the combination of model- and data-driven modeling leads to an effective architecture. Our model outperforms both fully explicit and fully data-driven baselines in terms of reconstruction quality, robustness and amount of required training data. Averaging the endpoint error across different mask densities, our method outperforms the explicit baselines by 11-27%, the GAN baseline by 47% and the Probabilistic Diffusion baseline by 42%. With that, our method sets a new state of the art for inpainting of optical flow fields from random masks.

Efficient Parallel Algorithms for Inpainting-Based Representations of 4K Images -- Part II: Spatial and Tonal Data Optimization

Jan 12, 2024

Abstract: Homogeneous diffusion inpainting can reconstruct missing image areas with high quality from a sparse subset of known pixels, provided that their location as well as their gray or color values are well optimized. This property is exploited in inpainting-based image compression, which is a promising alternative to classical transform-based codecs such as JPEG and JPEG2000. However, optimizing the inpainting data is a challenging task. Current approaches are either quite slow or do not produce high quality results. As a remedy we propose fast spatial and tonal optimization algorithms for homogeneous diffusion inpainting that efficiently utilize GPU parallelism, with a careful adaptation of some of the most successful numerical concepts. We propose a densification strategy using ideas from error-map dithering combined with a Delaunay triangulation for the spatial optimization. For the tonal optimization we design a domain decomposition solver that solves the corresponding normal equations in a matrix-free fashion and supplement it with a Voronoi-based initialization strategy. With our proposed methods we are able to generate high quality inpainting masks for homogeneous diffusion and optimized tonal values in a runtime that outperforms prior state-of-the-art by a wide margin.

Efficient Parallel Algorithms for Inpainting-Based Representations of 4K Images -- Part I: Homogeneous Diffusion Inpainting

Jan 12, 2024

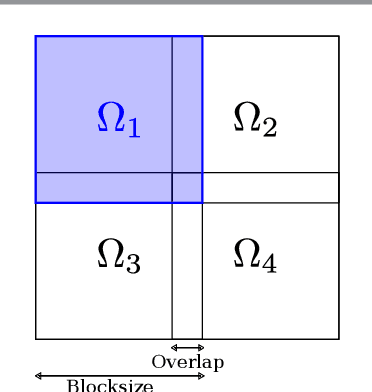

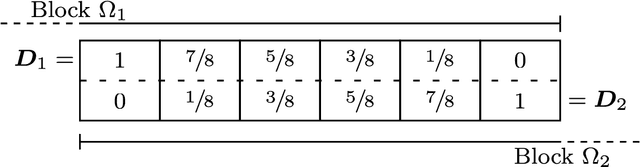

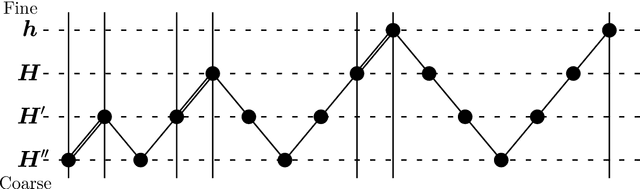

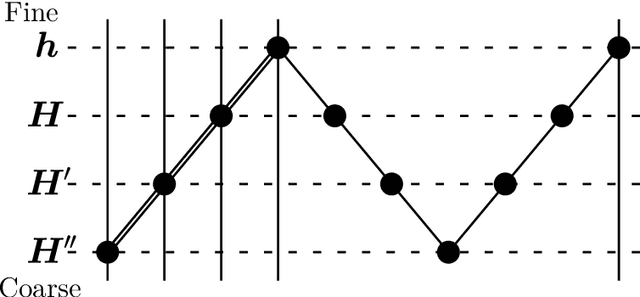

Abstract: In recent years inpainting-based compression methods have been shown to be a viable alternative to classical codecs such as JPEG and JPEG2000. Unlike transform-based codecs, which store coefficients in the transform domain, inpainting-based approaches store a small subset of the original image pixels and reconstruct the image from those by using a suitable inpainting operator. A good candidate for such an inpainting operator is homogeneous diffusion inpainting, as it is simple, theoretically well-motivated, and can achieve good reconstruction quality for optimized data. However, a major challenge has been to design fast solvers for homogeneous diffusion inpainting that scale to 4K image resolution ($3840 \times 2160$ pixels) and are real-time capable. We overcome this with a careful adaptation and fusion of two of the most efficient concepts from numerical analysis: multigrid and domain decomposition. Our domain decomposition algorithm efficiently utilizes GPU parallelism by solving inpainting problems on small overlapping blocks. Unlike simple block decomposition strategies such as the ones in JPEG, our approach yields block artifact-free reconstructions. Furthermore, embedding domain decomposition in a full multigrid scheme provides global interactions and allows us to achieve optimal convergence by reducing both low- and high-frequency errors at the same rate. We are able to achieve 4K color image reconstruction at more than $60$ frames per second even from very sparse data - something which has previously been infeasible.
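To make the inpainting operator itself concrete, here is a small sketch of homogeneous diffusion inpainting: stored pixels are kept fixed, and every other pixel is relaxed towards the average of its neighbours, which solves the discrete Laplace equation with reflecting image boundaries. It deliberately uses a plain Gauss-Seidel iteration, not the multigrid and domain decomposition solver described in the abstract; the function name, the `iterations` parameter, and the initialisation with the mean of the stored values are assumptions for illustration.

```python
def homogeneous_diffusion_inpaint(known, width, height, iterations=500):
    """Homogeneous diffusion inpainting via plain Gauss-Seidel relaxation.

    known maps (x, y) pixel coordinates to stored grey values.
    Unknown pixels converge towards the average of their direct neighbours
    (discrete Laplace equation, reflecting boundary conditions).
    """
    # Assumed initialisation: mean of the stored values.
    mean = sum(known.values()) / len(known)
    u = [[float(known.get((x, y), mean)) for x in range(width)]
         for y in range(height)]
    for _ in range(iterations):
        for y in range(height):
            for x in range(width):
                if (x, y) in known:
                    continue  # stored pixels act as fixed (Dirichlet) data
                total = 0.0
                count = 0
                for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
                    # Neighbours outside the image are skipped, which
                    # corresponds to reflecting boundary conditions.
                    if 0 <= nx < width and 0 <= ny < height:
                        total += u[ny][nx]
                        count += 1
                if count > 0:
                    u[y][x] = total / count
    return u


# In 1-D the steady state is the linear interpolant between stored pixels.
print(homogeneous_diffusion_inpaint({(0, 0): 0.0, (4, 0): 100.0}, 5, 1)[0])
```

A pointwise iteration like this needs many sweeps when the stored pixels are sparse, because information travels only one pixel per sweep. That slow removal of low-frequency errors is the motivation for coarse-grid corrections in multigrid schemes.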

Gaining Insights into Denoising by Inpainting

Sep 23, 2023

Abstract: The filling-in effect of diffusion processes is a powerful tool for various image analysis tasks such as inpainting-based compression and dense optic flow computation. For noisy data, an interesting side effect occurs: The interpolated data have higher confidence, since they average information from many noisy sources. This observation forms the basis of our denoising by inpainting (DbI) framework. It averages multiple inpainting results from different noisy subsets. Our goal is to obtain fundamental insights into key properties of DbI and its connections to existing methods. As in inpainting-based image compression, we choose homogeneous diffusion as a very simple inpainting operator that performs well for highly optimized data. We propose several strategies to choose the location of the selected pixels. Moreover, to improve the global approximation quality further, we also allow the function values of the noisy pixels to be changed. In contrast to traditional denoising methods that adapt the operator to the data, our approach adapts the data to the operator. Experimentally we show that replacing homogeneous diffusion inpainting by biharmonic inpainting does not improve the reconstruction quality. This again emphasizes the importance of data adaptivity over operator adaptivity. On the foundational side, we establish deterministic and probabilistic theories with convergence estimates. In the non-adaptive 1-D case, we derive equivalence results between DbI on shifted regular grids and classical homogeneous diffusion filtering via an explicit relation between the density and the diffusion time.
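The non-adaptive 1-D setting mentioned at the end of the abstract is easy to sketch: take every `spacing`-th sample of the noisy signal, inpaint the rest, repeat for all shifts of the regular grid, and average the reconstructions. The code below is an illustrative sketch under stated assumptions, not the paper's implementation. It uses the fact that 1-D homogeneous diffusion inpainting with reflecting boundaries is piecewise linear interpolation with constant extension beyond the outermost samples; the function names and the `spacing` parameter are hypothetical.

```python
from bisect import bisect_right


def inpaint_1d(signal, positions):
    """1-D homogeneous diffusion inpainting from the samples at `positions`.

    positions must be sorted. Between two known samples the result is their
    linear interpolant; outside the outermost samples it is constant.
    """
    result = []
    for i in range(len(signal)):
        j = bisect_right(positions, i)
        if j == 0:
            result.append(float(signal[positions[0]]))
        elif j == len(positions):
            result.append(float(signal[positions[-1]]))
        else:
            left, right = positions[j - 1], positions[j]
            t = (i - left) / (right - left)
            result.append((1.0 - t) * signal[left] + t * signal[right])
    return result


def denoise_by_inpainting(signal, spacing):
    """Average the inpainting results over all shifts of a regular grid."""
    n = len(signal)
    shifts = min(spacing, n)
    accumulated = [0.0] * n
    for shift in range(shifts):
        positions = list(range(shift, n, spacing))
        reconstruction = inpaint_1d(signal, positions)
        for i in range(n):
            accumulated[i] += reconstruction[i]
    return [value / shifts for value in accumulated]


# A single outlier is spread out and damped by the averaging.
print(denoise_by_inpainting([0.0, 0.0, 6.0, 0.0, 0.0], spacing=2))
```

Larger values of `spacing` correspond to lower mask densities and stronger smoothing, which mirrors the relation between density and diffusion time that the abstract refers to.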

Regularised Diffusion-Shock Inpainting

Sep 15, 2023

Abstract: We introduce regularised diffusion-shock (RDS) inpainting as a modification of diffusion-shock inpainting from our SSVM 2023 conference paper. RDS inpainting combines two carefully chosen components: homogeneous diffusion and coherence-enhancing shock filtering. It benefits from the complementary synergy of its building blocks: The shock term propagates edge data with perfect sharpness and directional accuracy over large distances due to its high degree of anisotropy. Homogeneous diffusion fills large areas efficiently. The second order equation underlying RDS inpainting inherits a maximum-minimum principle from its components, which is also fulfilled in the discrete case, in contrast to competing anisotropic methods. The regularisation addresses the largest drawback of the original model: It allows a drastic reduction in model parameters without any loss in quality. Furthermore, we extend RDS inpainting to vector-valued data. Our experiments show a performance that is comparable to or better than many inpainting models, including anisotropic processes of second or fourth order.

Anisotropic Diffusion Stencils: From Simple Derivations over Stability Estimates to ResNet Implementations

Sep 13, 2023

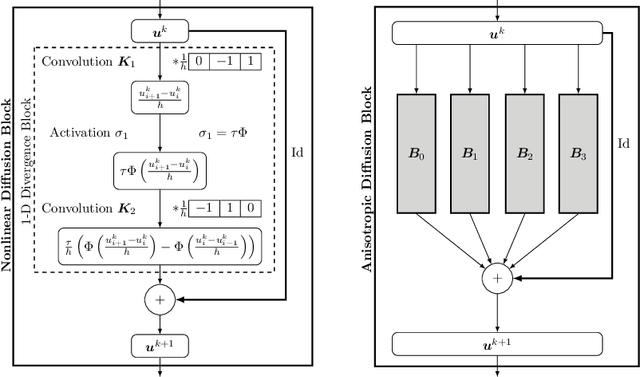

Abstract: Anisotropic diffusion processes with a diffusion tensor are important in image analysis, physics, and engineering. However, their numerical approximation has a strong impact on dissipative artefacts and deviations from rotation invariance. In this work, we study a large family of finite difference discretisations on a 3 x 3 stencil. We derive it by splitting 2-D anisotropic diffusion into four 1-D diffusions. The resulting stencil class involves one free parameter and covers a wide range of existing discretisations. It comprises the full stencil family of Weickert et al. (2013) and shows that their two parameters contain redundancy. Furthermore, we establish a bound on the spectral norm of the matrix corresponding to the stencil. This gives time step size limits that guarantee stability of an explicit scheme in the Euclidean norm. Our directional splitting also allows a very natural translation of the explicit scheme into ResNet blocks. Employing neural network libraries enables simple and highly efficient parallel implementations on GPUs.

Efficient Neural Generation of 4K Masks for Homogeneous Diffusion Inpainting

Mar 17, 2023

Abstract: With well-selected data, homogeneous diffusion inpainting can reconstruct images from sparse data with high quality. While 4K colour images of size 3840 x 2160 can already be inpainted in real time, optimising the known data for applications like image compression remains challenging: Widely used stochastic strategies can take days for a single 4K image. Recently, a first neural approach for this so-called mask optimisation problem offered high speed and good quality for small images. It trains a mask generation network with the help of a neural inpainting surrogate. However, these mask networks can only output masks for the resolution and mask density they were trained for. We solve these problems and enable mask optimisation for high-resolution images through a neuroexplicit coarse-to-fine strategy. Additionally, we improve the training and interpretability of mask networks by including a numerical inpainting solver directly into the network. This allows us to generate masks for 4K images in around 0.6 seconds while exceeding the quality of stochastic methods on practically relevant densities. Compared to popular existing approaches, this is an acceleration of up to four orders of magnitude.

Diffusion-Shock Inpainting

Mar 16, 2023

Abstract: We propose diffusion-shock (DS) inpainting as a hitherto unexplored integrodifferential equation for filling in missing structures in images. It combines two carefully chosen components that have proven their usefulness in different applications: homogeneous diffusion inpainting and coherence-enhancing shock filtering. DS inpainting enjoys the complementary synergy of its building blocks: It offers a high degree of anisotropy along an eigendirection of the structure tensor. This enables it to connect interrupted structures over large distances. Moreover, it benefits from the sharp edge structure generated by the shock filter, and it exploits the efficient filling-in effect of homogeneous diffusion. The second order equation that underlies DS inpainting inherits a continuous maximum-minimum principle from its constituents. In contrast to other attractive second order inpainting equations such as edge-enhancing anisotropic diffusion, we can guarantee this property also for the proposed discrete algorithm. Our experiments show a performance that is comparable to or better than many linear or nonlinear, isotropic or anisotropic processes of second or fourth order. They include homogeneous diffusion, biharmonic interpolation, TV inpainting, edge-enhancing anisotropic diffusion, the methods of Tschumperlé and of Bornemann and März, Cahn-Hilliard inpainting, and Euler's elastica.

Image Blending with Osmosis

Mar 15, 2023

Abstract: Image blending is an integral part of many multi-image applications such as panorama stitching or remote image acquisition processes. In such scenarios, multiple images are connected at predefined boundaries to form a larger image. A convincing transition between these boundaries may be challenging, since each image might have been acquired under different conditions or even by different devices. We propose the first blending approach based on osmosis filters. These drift-diffusion processes define an image evolution with a non-trivial steady state. For our blending purposes, we explore several ways to compose drift vector fields based on the derivatives of our input images. These vector fields guide the evolution such that the steady state yields a convincing blended result. Our method benefits from the well-founded theoretical results for osmosis, which include useful invariances under multiplicative changes of the colour values. Experiments on real-world data show that this yields better quality than traditional gradient domain blending, especially under challenging illumination conditions.
