Sihui Zheng

Misaligned Over-The-Air Computation of Multi-Sensor Data with Wiener-Denoiser Network

Sep 01, 2024

Abstract:In data-driven deep learning, distributed sensing and joint computing impose a heavy computing and communication load. To address this challenge, over-the-air computation (OAC) has been proposed for multi-sensor data aggregation, which enables the server to receive a desired function of massive sensing data during communication. However, the strict synchronization and accurate channel estimation that OAC requires are hard to achieve in practice, leading to time and channel-gain misalignment. The paper formulates the misalignment problem as a non-blind image deblurring problem. At the receiver side, we first use the Wiener filter to deblur, followed by a U-Net network designed for further denoising. Our method can exploit the inherent correlations in the signal data via learning and thus outperforms traditional methods in terms of accuracy. Our code is available at https://github.com/auto-Dog/MOAC_deep
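The Wiener deblurring step mentioned in the abstract can be illustrated with a minimal, generic sketch. This is not the authors' implementation (see their repository for that): it assumes a 1-D signal, a known blur kernel standing in for the misalignment, circular convolution, and a hand-picked constant noise-to-signal ratio. The function name `wiener_deconvolve` and all parameter values are hypothetical, and the learned U-Net denoising stage is not covered.

```python
import numpy as np

def wiener_deconvolve(received, kernel, noise_to_signal=1e-3):
    """Frequency-domain Wiener deconvolution with a known (non-blind) kernel.

    noise_to_signal is an assumed constant noise-to-signal power ratio.
    """
    n = len(received)
    H = np.fft.fft(kernel, n)   # kernel spectrum, zero-padded to length n
    Y = np.fft.fft(received)    # spectrum of the observed signal
    G = np.conj(H) / (np.abs(H) ** 2 + noise_to_signal)  # Wiener filter
    return np.real(np.fft.ifft(G * Y))

rng = np.random.default_rng(0)
n = 256
signal = np.sin(2 * np.pi * 3 * np.arange(n) / n)

# Hypothetical blur kernel standing in for time/channel-gain misalignment.
kernel = np.array([0.6, 0.3, 0.1])

# Circular convolution via the FFT, plus a small amount of receiver noise.
blurred = np.real(np.fft.ifft(np.fft.fft(signal) * np.fft.fft(kernel, n)))
noisy = blurred + 0.005 * rng.standard_normal(n)

restored = wiener_deconvolve(noisy, kernel)

err_noisy = np.mean((noisy - signal) ** 2)
err_restored = np.mean((restored - signal) ** 2)
print(f"MSE before: {err_noisy:.2e}, after Wiener step: {err_restored:.2e}")
```

In this toy setting the filter undoes most of the blur but amplifies noise at frequencies where the kernel response is weak, which is the kind of residual a subsequent denoising stage would target.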

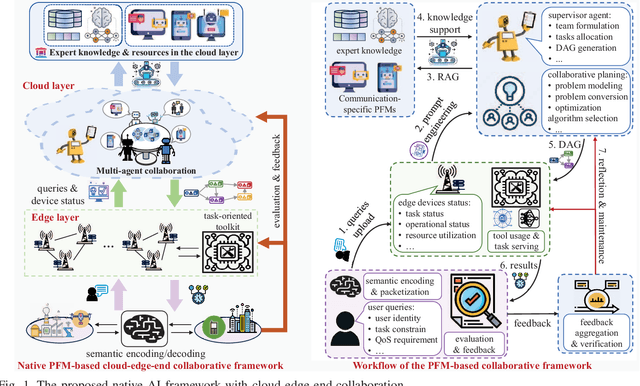

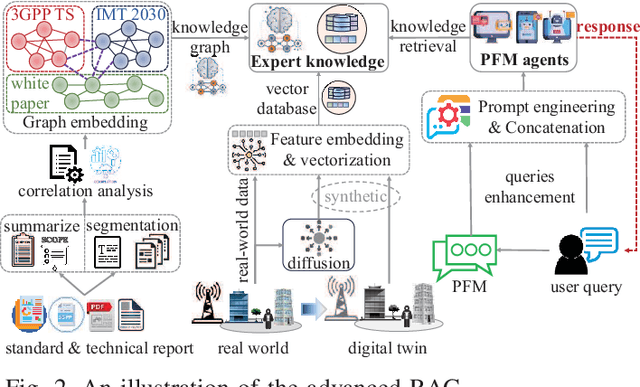

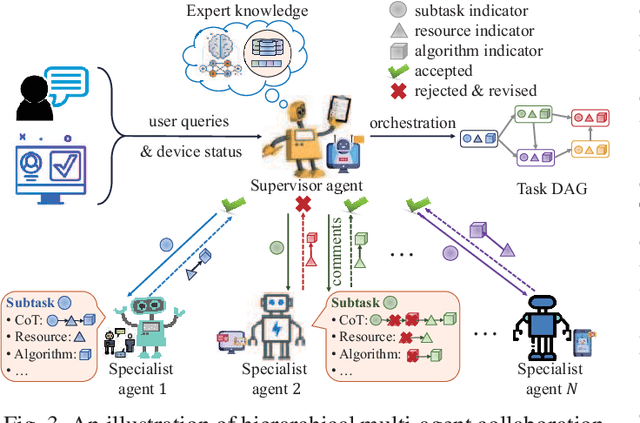

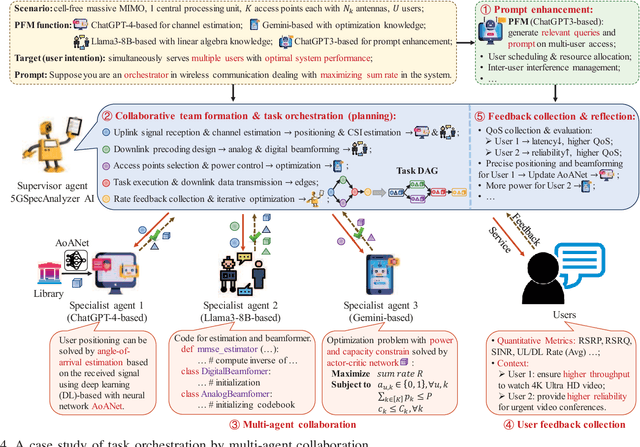

Foundation Model Based Native AI Framework in 6G with Cloud-Edge-End Collaboration

Oct 26, 2023

Abstract:Future wireless communication networks are in a position to move beyond data-centric, device-oriented connectivity and offer intelligent, immersive experiences based on task-oriented connections, especially in the context of the thriving development of pre-trained foundation models (PFM) and the evolving vision of 6G native artificial intelligence (AI). Therefore, redefining modes of collaboration between devices and servers and constructing native intelligence libraries become critically important in 6G. In this paper, we analyze the challenges of achieving 6G native AI from the perspectives of data, intelligence, and networks. Then, we propose a 6G native AI framework based on foundation models, provide a customization approach for intent-aware PFM, present a construction of a task-oriented AI toolkit, and outline a novel cloud-edge-end collaboration paradigm. As a practical use case, we apply this framework to orchestration, achieving the maximum sum rate within a wireless communication system, and present preliminary evaluation results. Finally, we outline research directions for achieving native AI in 6G.

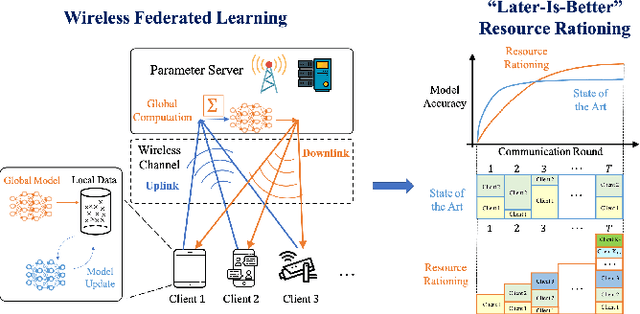

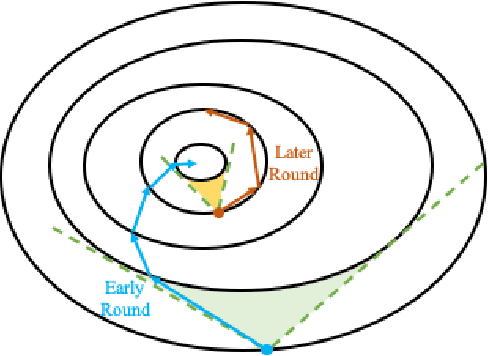

Resource Rationing for Wireless Federated Learning: Concept, Benefits, and Challenges

Apr 14, 2021

Abstract:We advocate a new resource allocation framework, which we term resource rationing, for wireless federated learning (FL). Unlike existing resource allocation methods for FL, resource rationing focuses on balancing resources across learning rounds so that their collective impact on the federated learning performance is explicitly captured. This new framework can be integrated seamlessly with existing resource allocation schemes to optimize the convergence of FL. In particular, a novel "later-is-better" principle is at the front and center of resource rationing, which is validated empirically in several instances of wireless FL. We also point out technical challenges and research opportunities that are worth pursuing. Resource rationing highlights the benefits of treating the emerging FL as a new class of service that has its own characteristics, and designing communication algorithms for this particular service.

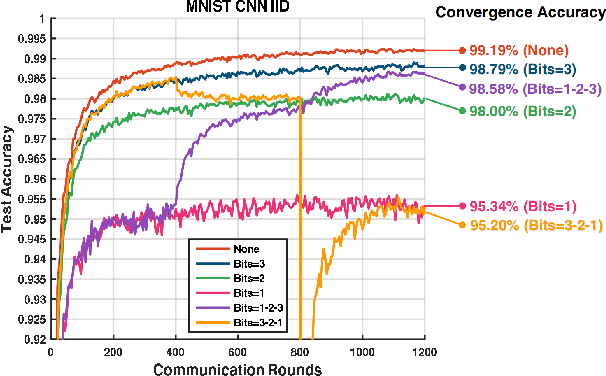

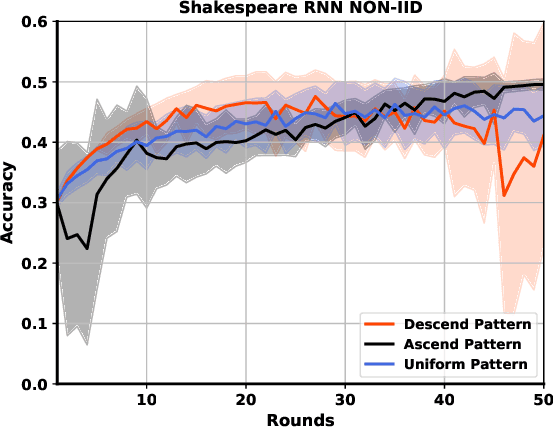

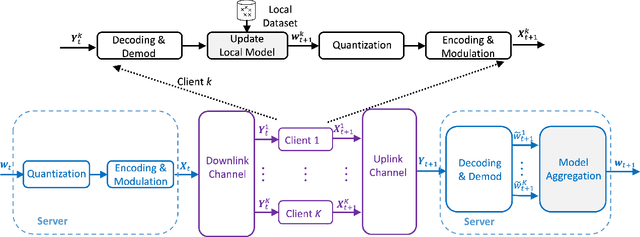

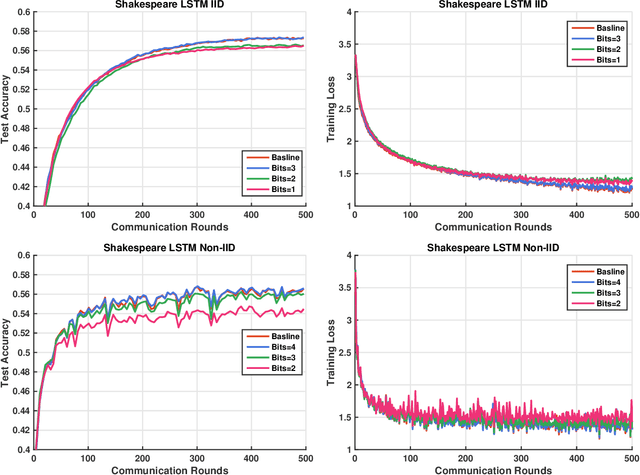

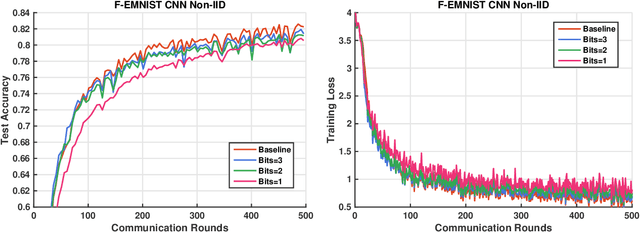

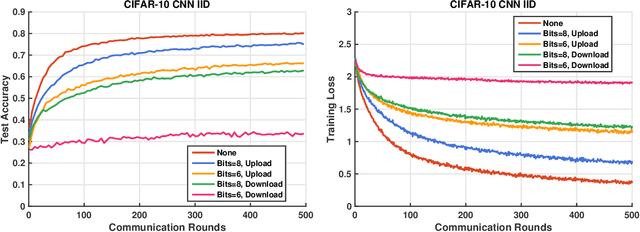

Design and Analysis of Uplink and Downlink Communications for Federated Learning

Dec 07, 2020

Abstract:Communication has been known to be one of the primary bottlenecks of federated learning (FL), and yet existing studies have not addressed the efficient communication design, particularly in wireless FL where both uplink and downlink communications have to be considered. In this paper, we focus on the design and analysis of physical layer quantization and transmission methods for wireless FL. We answer the question of what and how to communicate between clients and the parameter server and evaluate the impact of the various quantization and transmission options of the updated model on the learning performance. We provide new convergence analysis of the well-known FedAvg under non-i.i.d. dataset distributions, partial client participation, and finite-precision quantization in uplink and downlink communications. These analyses reveal that, in order to achieve an O(1/T) convergence rate with quantization, transmitting the weight requires increasing the quantization level at a logarithmic rate, while transmitting the weight differential can keep a constant quantization level. Comprehensive numerical evaluation on various real-world datasets reveals that the benefit of an FL-tailored uplink and downlink communication design is enormous: a carefully designed quantization and transmission achieves more than 98% of the floating-point baseline accuracy with fewer than 10% of the baseline bandwidth, for the majority of the experiments on both i.i.d. and non-i.i.d. datasets. In particular, 1-bit quantization (3.1% of the floating-point baseline bandwidth) achieves 99.8% of the floating-point baseline accuracy at almost the same convergence rate on MNIST, representing the best known bandwidth-accuracy tradeoff to the best of the authors' knowledge.
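The contrast between transmitting the weight and transmitting the weight differential can be illustrated with a small numerical sketch. It is not the paper's quantizer or its convergence analysis: it assumes an unbiased stochastic uniform quantizer over the vector's own range, synthetic Gaussian weights, and a small local update. The function `stochastic_quantize` and all values are hypothetical. The point is only that, at a fixed number of levels, the differential has a much smaller dynamic range and so incurs a much smaller quantization error.

```python
import numpy as np

def stochastic_quantize(x, num_levels, rng):
    """Unbiased uniform quantizer onto num_levels points spanning [min(x), max(x)]."""
    lo, hi = x.min(), x.max()
    if hi == lo:
        return x.copy()
    step = (hi - lo) / (num_levels - 1)
    scaled = (x - lo) / step
    floor = np.floor(scaled)
    # Round up with probability equal to the fractional part (keeps E[q(x)] = x).
    idx = floor + (rng.random(x.shape) < (scaled - floor))
    idx = np.minimum(idx, num_levels - 1)  # guard against float round-off
    return lo + idx * step

rng = np.random.default_rng(0)
global_w = rng.standard_normal(1000)                    # model held by the server
local_w = global_w + 0.01 * rng.standard_normal(1000)   # client model after a small update

# Option A: the client quantizes and sends the full weight vector.
w_hat_weight = stochastic_quantize(local_w, 16, rng)

# Option B: the client quantizes the differential; the server adds it back.
delta_hat = stochastic_quantize(local_w - global_w, 16, rng)
w_hat_diff = global_w + delta_hat

err_weight = np.mean((w_hat_weight - local_w) ** 2)
err_diff = np.mean((w_hat_diff - local_w) ** 2)
print(f"MSE sending weights: {err_weight:.2e}, sending differential: {err_diff:.2e}")
```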
