Zhiheng Guo

CoCo-Fed: A Unified Framework for Memory- and Communication-Efficient Federated Learning at the Wireless Edge

Jan 02, 2026

Abstract:The deployment of large-scale neural networks within the Open Radio Access Network (O-RAN) architecture is pivotal for enabling native edge intelligence. However, this paradigm faces two critical bottlenecks: the prohibitive memory footprint required for local training on resource-constrained gNBs, and the saturation of bandwidth-limited backhaul links during the global aggregation of high-dimensional model updates. To address these challenges, we propose CoCo-Fed, a novel Compression and Combination-based Federated learning framework that unifies local memory efficiency and global communication reduction. Locally, CoCo-Fed breaks the memory wall by performing a double-dimension down-projection of gradients, adapting the optimizer to operate on low-rank structures without introducing additional inference parameters/latency. Globally, we introduce a transmission protocol based on orthogonal subspace superposition, where layer-wise updates are projected and superimposed into a single consolidated matrix per gNB, drastically reducing the backhaul traffic. Beyond empirical designs, we establish a rigorous theoretical foundation, proving the convergence of CoCo-Fed even under unsupervised learning conditions suitable for wireless sensing tasks. Extensive simulations on an angle-of-arrival estimation task demonstrate that CoCo-Fed significantly outperforms state-of-the-art baselines in both memory and communication efficiency while maintaining robust convergence under non-IID settings.
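
The "double-dimension down-projection of gradients" mentioned in the abstract can be illustrated with a generic two-sided low-rank projection. The following is a minimal sketch, not the CoCo-Fed algorithm itself: the choice of SVD-based projectors, the function names (`two_sided_projectors`, `project`, `project_back`), and the toy dimensions are all assumptions made for illustration.

```python
import numpy as np

def two_sided_projectors(grad, rank):
    """Orthonormal bases for the top-`rank` left and right singular subspaces.

    Assumption: SVD-based projectors; the paper may build them differently.
    """
    u, _, vt = np.linalg.svd(grad, full_matrices=False)
    return u[:, :rank], vt[:rank, :].T  # shapes (m, r) and (n, r)

def project(grad, p, q):
    """Compress an (m, n) gradient to an (r, r) matrix along both dimensions."""
    return p.T @ grad @ q

def project_back(small, p, q):
    """Lift an (r, r) matrix back to the original (m, n) shape."""
    return p @ small @ q.T

# Toy gradient that is exactly rank r, so the projection loses nothing.
rng = np.random.default_rng(0)
m, n, r = 64, 32, 4
grad = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))

p, q = two_sided_projectors(grad, r)
small = project(grad, p, q)             # optimizer state could live at this size
restored = project_back(small, p, q)    # full-size update for the weights
```

In this toy setting the compressed matrix has `r * r = 16` entries instead of `m * n = 2048`, which is the kind of saving that motivates keeping optimizer state in the projected space. For a gradient that is only approximately low-rank, `restored` would be an approximation rather than an exact copy.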

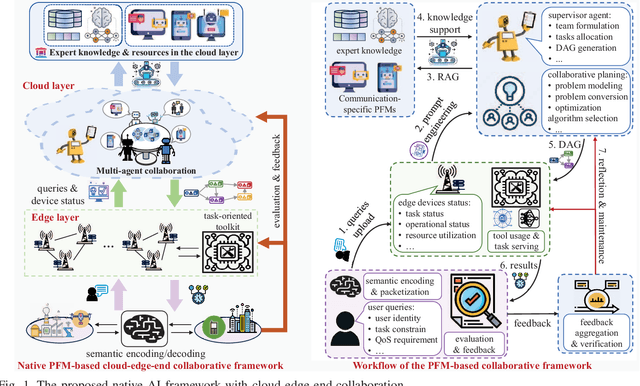

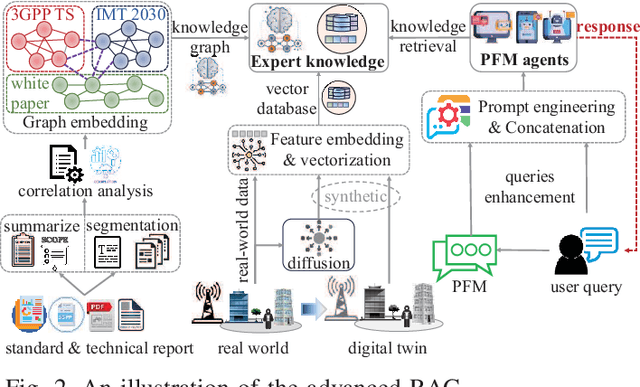

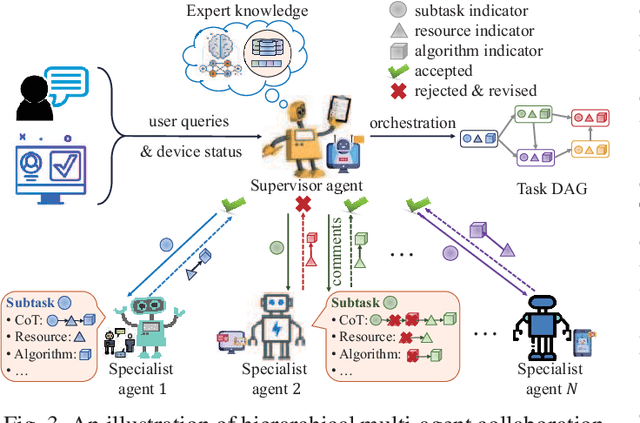

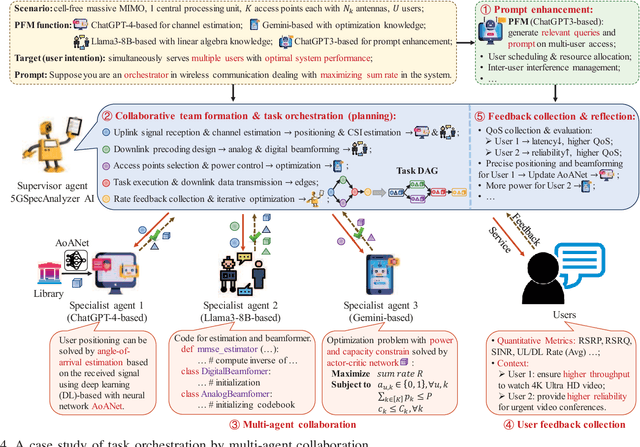

Foundation Model Based Native AI Framework in 6G with Cloud-Edge-End Collaboration

Oct 26, 2023

Abstract:Future wireless communication networks are in a position to move beyond data-centric, device-oriented connectivity and offer intelligent, immersive experiences based on task-oriented connections, especially in the context of the thriving development of pre-trained foundation models (PFM) and the evolving vision of 6G native artificial intelligence (AI). Therefore, redefining modes of collaboration between devices and servers and constructing native intelligence libraries become critically important in 6G. In this paper, we analyze the challenges of achieving 6G native AI from the perspectives of data, intelligence, and networks. Then, we propose a 6G native AI framework based on foundation models, provide a customization approach for intent-aware PFM, present a construction of a task-oriented AI toolkit, and outline a novel cloud-edge-end collaboration paradigm. As a practical use case, we apply this framework for orchestration, achieving the maximum sum rate within a wireless communication system, and presenting preliminary evaluation results. Finally, we outline research directions for achieving native AI in 6G.

Unsupervised Massive MIMO Channel Estimation with Dual-Path Knowledge-Aware Auto-Encoders

May 30, 2023

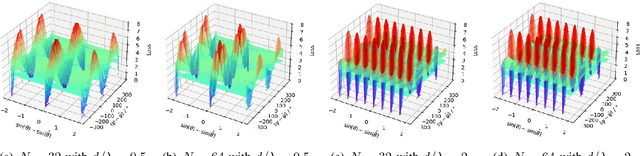

Abstract:In this paper, an unsupervised deep learning framework based on dual-path model-driven variational auto-encoders (VAE) is proposed for angle-of-arrival (AoA) and channel estimation in massive MIMO systems. Specifically designed for channel estimation, the proposed VAE differs from the original VAE in two aspects. First, the encoder is a dual-path neural network, where one path uses the received signal to estimate the path gains and path angles, and the other uses the correlation matrix of the received signal to estimate the AoAs. Second, the decoder has fixed weights that implement the signal propagation model, instead of learnable parameters. This knowledge-aware decoder forces the encoder to output meaningful physical parameters of interest (i.e., path gains, path angles, and AoAs), which cannot be achieved by the original VAE. Rigorous analysis is carried out to characterize the multiple global optima and local optima of the estimation problem, which motivates the design of the dual-path encoder. By alternating between estimating the path gains and path angles and estimating the AoAs, the encoder is proved to converge. To further improve the convergence performance, a low-complexity procedure is proposed to find good initial points. Numerical results validate the theoretical analysis and demonstrate the performance improvements of our proposed framework.
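
To make the idea of a decoder with fixed weights that implement the signal propagation model more concrete, here is a minimal sketch under stated assumptions. It assumes a half-wavelength uniform linear array, a noiseless single-snapshot model, and complex path gains that fold magnitude and phase together; the abstract does not specify these details, and the names `steering_vector` and `fixed_decoder` are hypothetical.

```python
import numpy as np

def steering_vector(theta, num_antennas):
    """Array response of a half-wavelength ULA for an angle `theta` in radians.

    Assumption: ULA geometry; the paper's array model may differ.
    """
    n = np.arange(num_antennas)
    return np.exp(-1j * np.pi * n * np.sin(theta))

def fixed_decoder(gains, aoas, num_antennas):
    """Rebuild the received signal from physical parameters.

    There are no learnable weights here: the mapping is the propagation
    model y = sum_l g_l * a(theta_l), so whatever feeds this function must
    be interpretable as path gains and angles of arrival.
    """
    a = np.stack([steering_vector(t, num_antennas) for t in aoas], axis=1)
    return a @ np.asarray(gains, dtype=complex)

# One unit-gain path arriving from broadside (theta = 0).
y = fixed_decoder(gains=[1.0 + 0.0j], aoas=[0.0], num_antennas=8)
```

In an auto-encoder built this way, the reconstruction loss compares `fixed_decoder(...)` against the actual received signal, so training needs no labelled angles or gains.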
