Shirsendu Sukanta Halder

Rain rendering for evaluating and improving robustness to bad weather

Sep 06, 2020

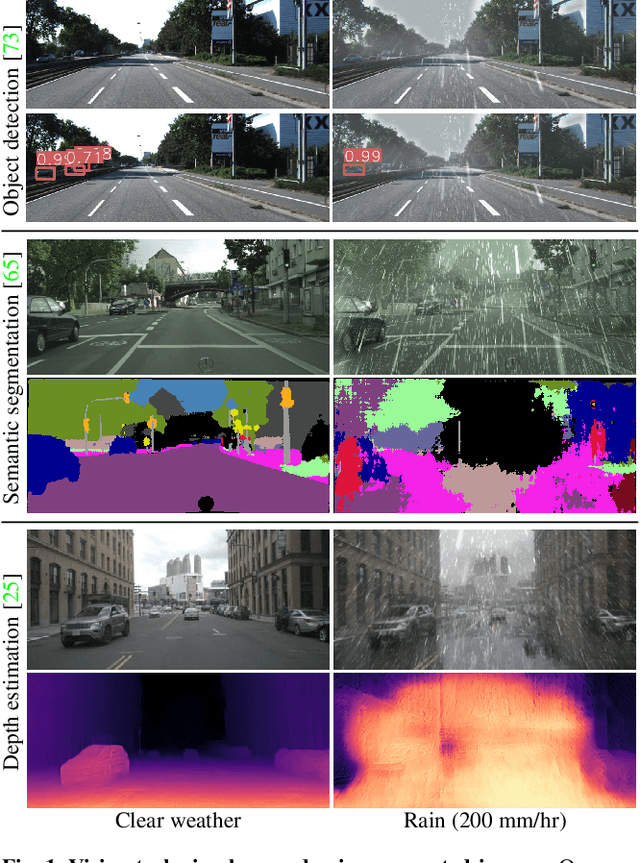

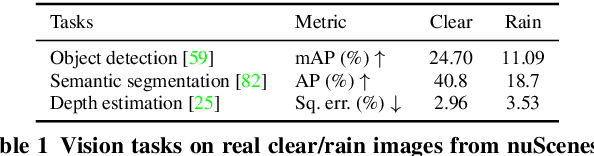

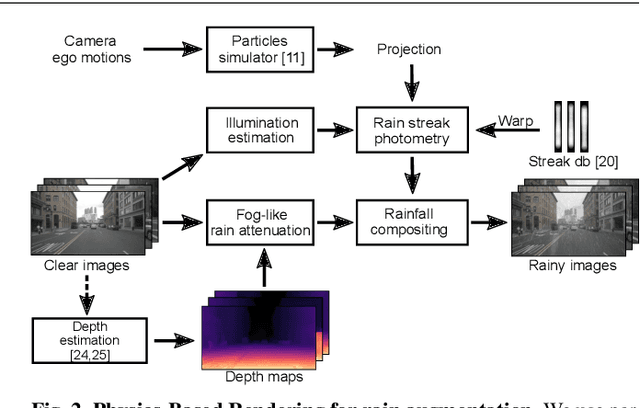

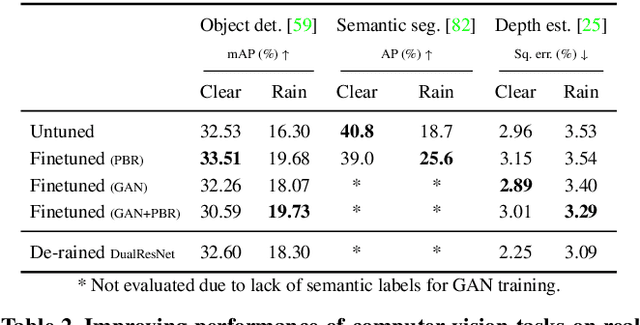

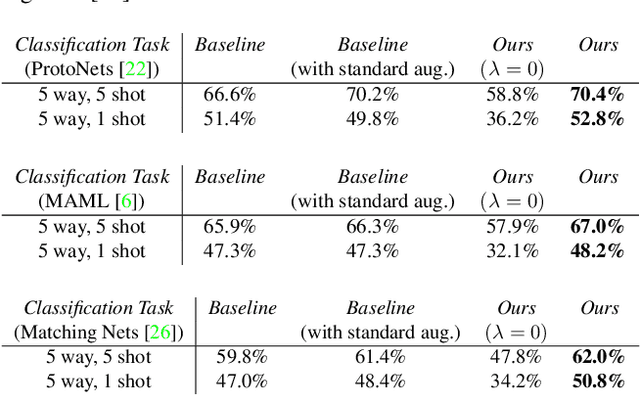

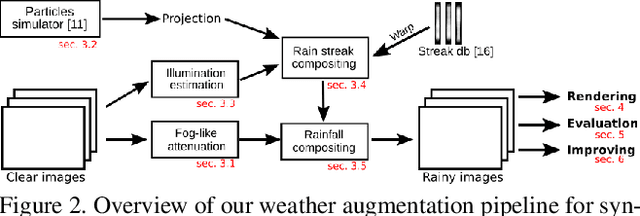

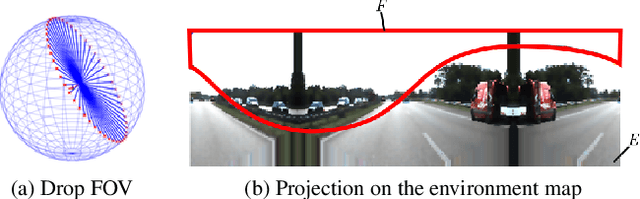

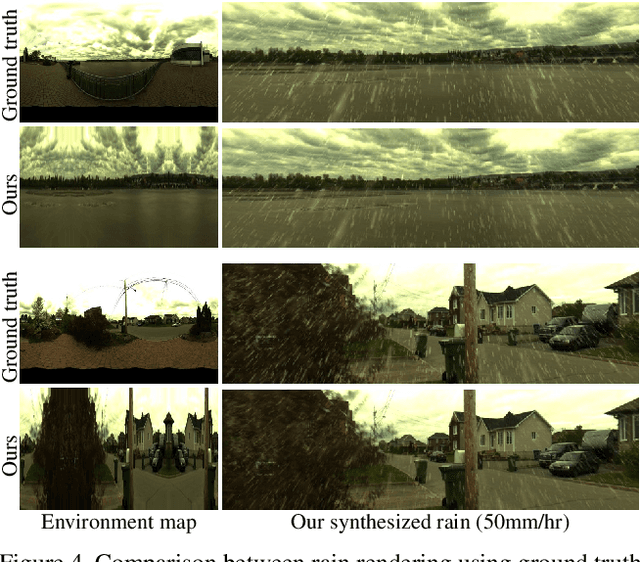

Abstract: Rain fills the atmosphere with water particles, which breaks the common assumption that light travels unaltered from the scene to the camera. While it is well known that rain affects computer vision algorithms, quantifying its impact is difficult. In this context, we present a rain rendering pipeline that enables the systematic evaluation of common computer vision algorithms under controlled amounts of rain. We present three different ways to add synthetic rain to existing image datasets: completely physics-based; completely data-driven; and a combination of both. The physics-based rain augmentation combines a physical particle simulator and accurate rain photometric modeling. We validate our rendering methods with a user study, demonstrating that our rain is judged as much as 73% more realistic than the state of the art. Using our generated rain-augmented KITTI, Cityscapes, and nuScenes datasets, we conduct a thorough evaluation of object detection, semantic segmentation, and depth estimation algorithms and show that their performance decreases in degraded weather, on the order of 15% for object detection, 60% for semantic segmentation, and a 6-fold increase in depth estimation error. Finetuning on our augmented synthetic data results in improvements of 21% on object detection, 37% on semantic segmentation, and 8% on depth estimation.

Enhancing Perceptual Loss with Adversarial Feature Matching for Super-Resolution

May 15, 2020

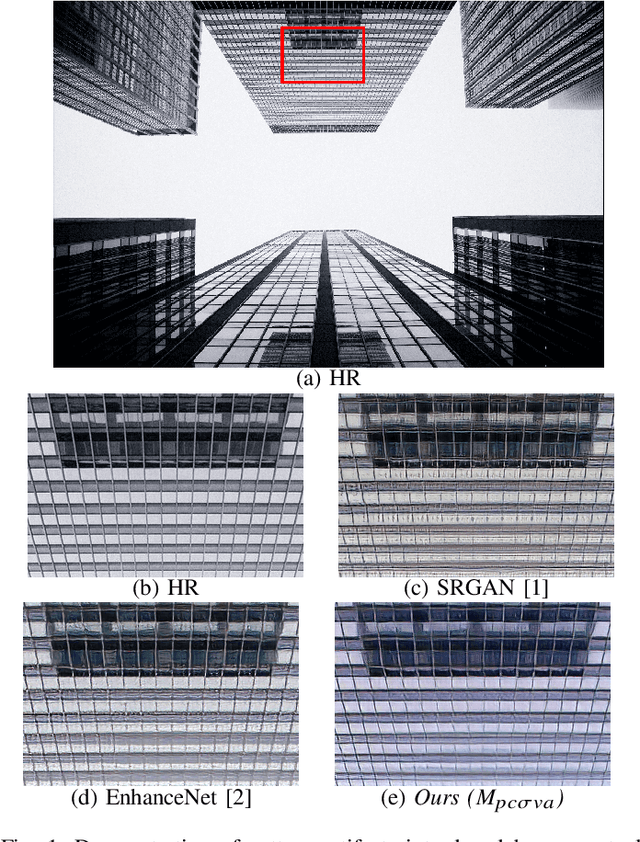

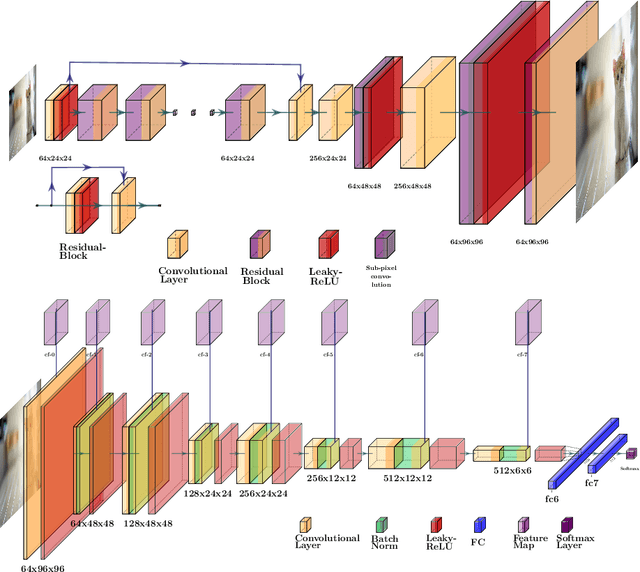

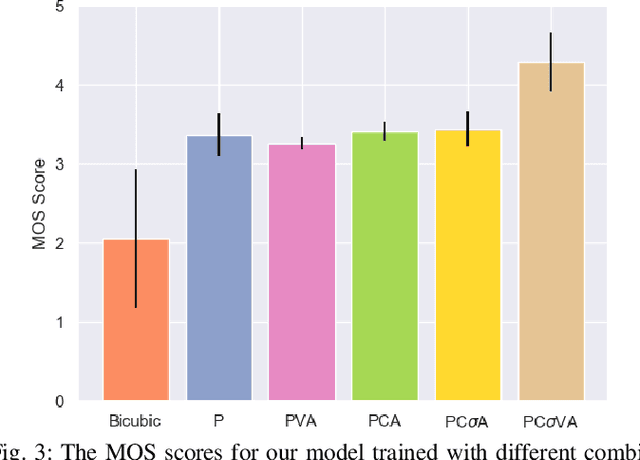

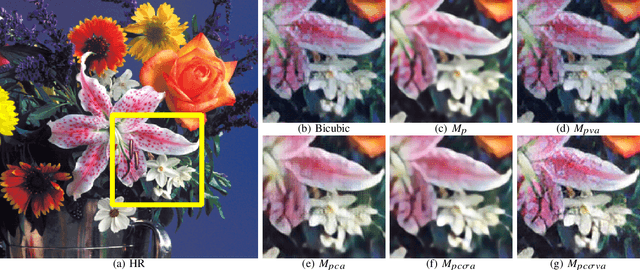

Abstract: Single image super-resolution (SISR) is an ill-posed problem with an indeterminate number of valid solutions. Solving this problem with neural networks would require access to extensive experience, either presented as a large training set over natural images or a condensed representation from another pre-trained network. Perceptual loss functions, which belong to the latter category, have achieved breakthrough success in SISR and several other computer vision tasks. While perceptual loss plays a central role in the generation of photo-realistic images, it also produces undesired pattern artifacts in the super-resolved outputs. In this paper, we show that the root cause of these pattern artifacts can be traced back to a mismatch between the pre-training objective of perceptual loss and the super-resolution objective. To address this issue, we propose to augment the existing perceptual loss formulation with a novel content loss function that uses the latent features of a discriminator network to filter the unwanted artifacts across several levels of adversarial similarity. Further, our modification has a stabilizing effect on non-convex optimization in adversarial training. The proposed approach offers notable gains in perceptual quality based on an extensive human evaluation study and a competent reconstruction fidelity when tested on objective evaluation metrics.
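The abstract describes a content loss computed on the latent features of a discriminator but does not give its exact form. As a rough illustration only, the sketch below averages an L1 distance between discriminator activations of the super-resolved and ground-truth images over several layers. The function name `feature_matching_loss`, the L1 distance, and the per-layer weights are all assumptions, not the authors' formulation, and random arrays stand in for real discriminator activations.

```python
import numpy as np

def feature_matching_loss(feats_sr, feats_hr, weights=None):
    """Weighted sum of mean absolute differences between two lists of
    feature maps, one pair per discriminator layer (hypothetical form)."""
    if len(feats_sr) != len(feats_hr):
        raise ValueError("both inputs must cover the same layers")
    if weights is None:
        weights = [1.0] * len(feats_sr)  # assumption: equal layer weights
    total = 0.0
    for w, f_sr, f_hr in zip(weights, feats_sr, feats_hr):
        total += w * float(np.mean(np.abs(f_sr - f_hr)))
    return total

# Stand-ins for activations from three discriminator layers.
rng = np.random.default_rng(0)
shapes = [(8, 16, 16), (16, 8, 8), (32, 4, 4)]
feats_hr = [rng.standard_normal(s) for s in shapes]
feats_sr = [f + 0.1 * rng.standard_normal(f.shape) for f in feats_hr]

loss_same = feature_matching_loss(feats_hr, feats_hr)
loss_diff = feature_matching_loss(feats_sr, feats_hr)
```

In a training setup this term would be added to the usual perceptual and adversarial losses; how the terms are balanced is not stated in the abstract.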

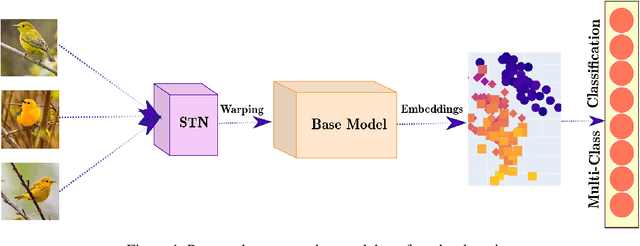

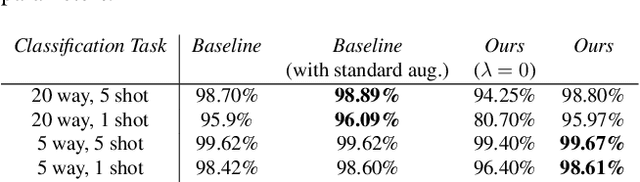

MA3: Model Agnostic Adversarial Augmentation for Few Shot learning

Apr 10, 2020

Abstract: Despite the recent developments in vision-related problems using deep neural networks, there still remains wide scope for improving the generalization of these models to unseen examples. In this paper, we explore the domain of few-shot learning with a novel augmentation technique. In contrast to other generative augmentation techniques, where the distribution over input images is learnt, we propose to learn the probability distribution over the image transformation parameters, which are easier and quicker to learn. Our technique is fully differentiable, which enables its extension to versatile datasets and base models. We evaluate our proposed method on multiple base networks and two datasets to establish the robustness and efficiency of this method. We obtain an improvement of nearly 4% by adding our augmentation module without making any change in network architectures. We also make the code readily available for usage by the community.
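To make the idea of learning a distribution over transformation parameters concrete, here is a minimal sketch. The abstract names neither the transformations nor the distribution family, so the Gaussian over a rotation angle and a scale factor, and the helper names `sample_transform_params` and `affine_matrix`, are assumptions for illustration. The sample is written as `mean + std * noise` (the reparameterization trick), which is one common way to keep sampling differentiable with respect to the learned parameters.

```python
import numpy as np

def sample_transform_params(mean, log_std, rng):
    """Draw parameters as mean + std * eps so that, in an autodiff
    framework, gradients could flow back to mean and log_std."""
    eps = rng.standard_normal(mean.shape)
    return mean + np.exp(log_std) * eps

def affine_matrix(angle, scale):
    """2x3 affine matrix for rotation by `angle` (radians) and isotropic
    scaling by `scale`, with no translation."""
    c, s = np.cos(angle), np.sin(angle)
    return scale * np.array([[c, -s, 0.0],
                             [s,  c, 0.0]])

# Hypothetical learned parameters: [rotation angle, scale].
mean = np.array([0.0, 1.0])
log_std = np.log(np.array([0.1, 0.05]))

rng = np.random.default_rng(0)
angle, scale = sample_transform_params(mean, log_std, rng)
theta = affine_matrix(angle, scale)  # would be applied to an image grid
```

Only two numbers per distribution (mean and spread) are learned for each transformation parameter, which is far fewer than a generator over full images would need.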

Physics-Based Rendering for Improving Robustness to Rain

Aug 27, 2019

Abstract: To improve the robustness to rain, we present a physically-based rain rendering pipeline for realistically inserting rain into clear weather images. Our rendering relies on a physical particle simulator, an estimation of the scene lighting and an accurate rain photometric modeling to augment images with arbitrary amounts of realistic rain or fog. We validate our rendering with a user study, proving our rain is judged 40% more realistic than the state of the art. Using our generated weather-augmented KITTI and Cityscapes datasets, we conduct a thorough evaluation of deep object detection and semantic segmentation algorithms and show that their performance decreases in degraded weather, on the order of 15% for object detection and 60% for semantic segmentation. Furthermore, we show that refining existing networks with our augmented images improves the robustness of both object detection and semantic segmentation algorithms. We experiment on nuScenes and measure an improvement of 15% for object detection and 35% for semantic segmentation compared to original rainy performance. Augmented databases and code are available on the project page.

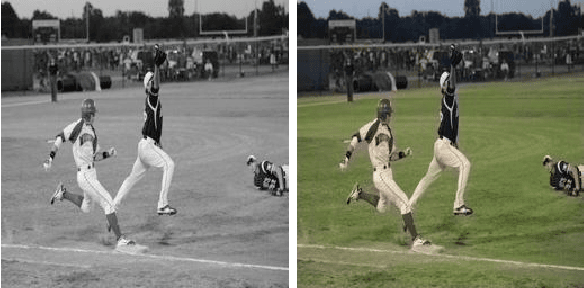

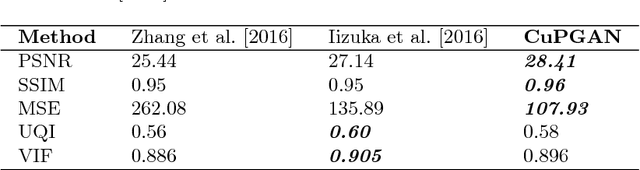

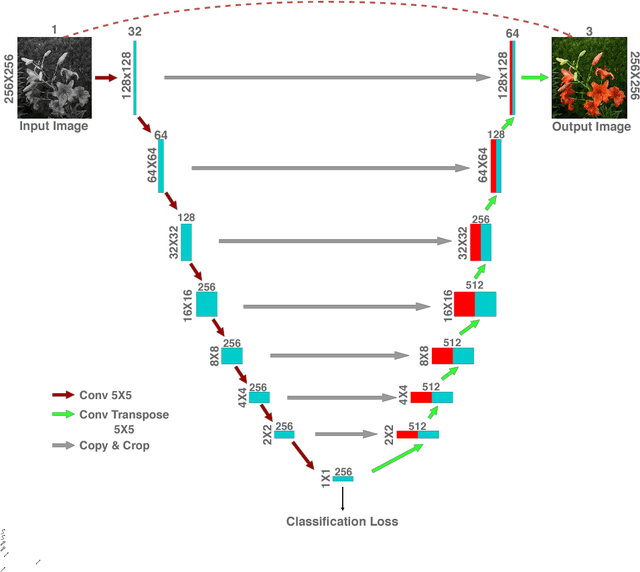

Perceptual Conditional Generative Adversarial Networks for End-to-End Image Colourization

Nov 27, 2018

Abstract: Colours are everywhere. They embody a significant part of human visual perception. In this paper, we explore the paradigm of hallucinating colours from a given gray-scale image. The problem of colourization has been dealt with in previous literature, but mostly in a supervised manner involving user intervention. With the emergence of Deep Learning methods, numerous tasks related to computer vision and pattern recognition have been automated and carried out in an end-to-end fashion due to the availability of large datasets and high-power computing systems. We investigate and build upon the recent success of Conditional Generative Adversarial Networks (cGANs) for Image-to-Image translations. In addition to using the training scheme of the basic cGAN, we propose an encoder-decoder generator network which utilizes the class-specific cross-entropy loss as well as the perceptual loss in addition to the original objective function of cGAN. We train our model on a large-scale dataset and present illustrative qualitative and quantitative analysis of our results. Our results vividly display the versatility and proficiency of our methods through life-like colourization outcomes.

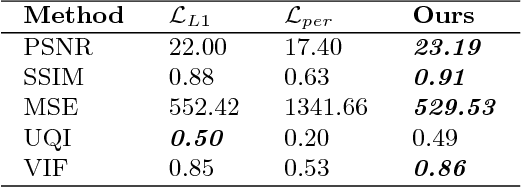

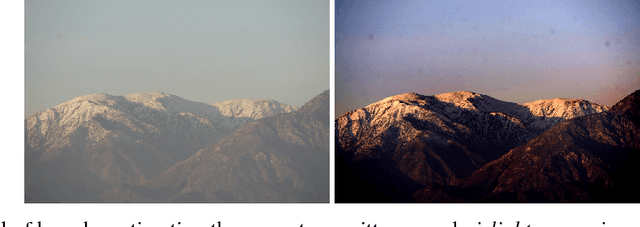

Reconstruction Loss Minimized FCN for Single Image Dehazing

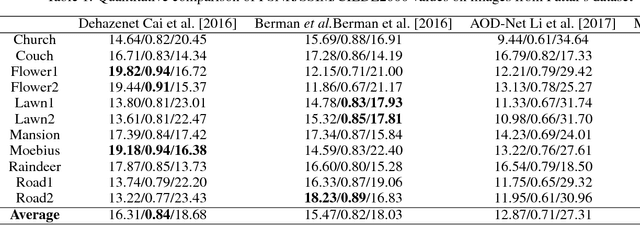

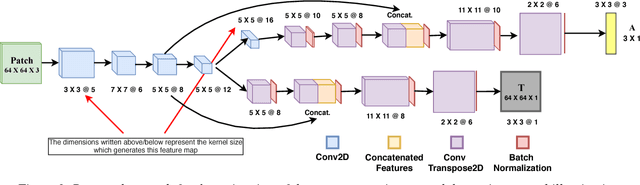

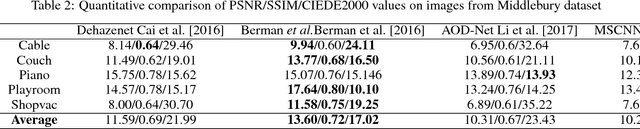

Nov 27, 2018

Abstract: Haze and fog reduce the visibility of outdoor scenes as a veil-like semi-transparent layer appears over the objects. As a result, images captured under such conditions lack contrast. Image dehazing methods try to alleviate this problem by recovering a clear version of the image. In this paper, we propose a Fully Convolutional Neural Network based model to recover the clear scene radiance by estimating the environmental illumination and the scene transmittance jointly from a hazy image. The method uses a relaxed haze imaging model to allow for situations with non-uniform illumination. We have trained the network by minimizing a custom-defined loss that measures the error of reconstructing the hazy image in three different ways. Additionally, we use a multilevel approach to determine the scene transmittance and the environmental illumination in order to reduce the dependence of the estimate on image scale. Evaluations show that our model performs well compared to the existing state-of-the-art methods. They also verify the potential of our model in diverse situations and various lighting conditions.
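The abstract does not spell out its haze imaging model. A widely used formulation is the atmospheric scattering model, I = J * t + A * (1 - t), where I is the hazy image, J the clear scene radiance, t the transmittance and A the environmental illumination. The sketch below uses that model and treats A as a per-pixel map, which is only an assumption about what "relaxed" means here. The function names and the clamp `t_min` are hypothetical, and the single reconstruction error shown is not the paper's three-part loss.

```python
import numpy as np

def synthesize_haze(clear, transmittance, illumination):
    """Forward model: I = J * t + A * (1 - t), applied per pixel."""
    t = transmittance[..., None]  # broadcast (H, W) over colour channels
    return clear * t + illumination * (1.0 - t)

def recover_clear(hazy, transmittance, illumination, t_min=0.1):
    """Invert the forward model; t is clamped to avoid dividing by ~0."""
    t = np.maximum(transmittance, t_min)[..., None]
    return (hazy - illumination * (1.0 - t)) / t

rng = np.random.default_rng(0)
clear = rng.uniform(0.0, 1.0, size=(4, 4, 3))   # J
t = rng.uniform(0.3, 0.9, size=(4, 4))          # transmittance map
A = rng.uniform(0.7, 1.0, size=(4, 4, 3))       # per-pixel illumination

hazy = synthesize_haze(clear, t, A)
restored = recover_clear(hazy, t, A)

# Error of re-synthesizing the hazy input from the recovered estimates.
reconstruction_error = float(np.mean(np.abs(synthesize_haze(restored, t, A) - hazy)))
```

In the paper's setting a network would estimate `t` and `A` from the hazy image; here they are known, so the inversion is exact up to floating-point error.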
